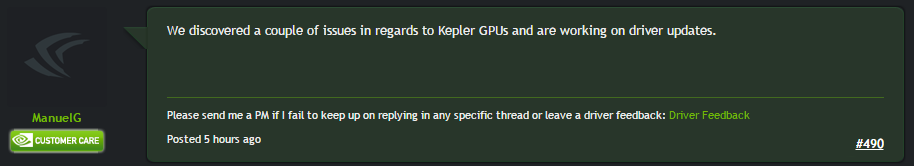

@04dcarraher: Well, nvidia did admit that there is something wrong with their Kepler drivers and that they are currently working on a driver update. They even said Kepler GPUs will see performance improvements across the board, not just in The Witcher 3. This goes to show that it was indeed incompetence on their part. That, or they crippled the performance on purpose and never really expected gamers to catch them in the act, or the shitstorm that followed. I'm gonna give nvidia the benefit of the doubt and say they simply screwed up. But what bothers me is that nvidia fanboys are still coming up with silly excuses and meaningless arguments for nvidia when nvidia themselves have admitted that something is wrong.

dangamit's forum posts

You can still get invaded while hollow. And yes, you are most likely banned. If you want to check, go to the bonfire at the entrance of the Iron Keep. There are literally dozens of summon signs there; if you don't see any, or if you can only see a couple, it means you've been banned and can only play with other banned players.

I was softbanned too because of Alt+F4'ing. I did it to exit the game quickly. I had no idea it was something you could get banned for.

The game bans your Steam account. You can unban yourself by making a new Steam account and using Family Library Sharing to share DS2 with your new account.

You can even transfer your save to your new account. It's a little bit tricky but it's doable.

@04dcarraher: So in short, nvidia's shortsightedness and/or incompetence is the reason their cards are falling behind. I really don't care about the engineering/design aspect of the problem. When I bought the 780, I was told it was going to be future-proof. You're saying it's not. Had nvidia told me that Kepler lacks the technology to stay relevant for more than a couple of years, I would have told them to shove it. No matter how you look at it, nvidia has done fucked up.

The top-tier GTX 780, which is supposed to be outperforming the R9 290, is being outbenched by the R9 280X, which is at best a second-tier card. Although it was The Witcher 3 that started this shitstorm, the 700 series has been going downhill for more than half a year now. In fact, the 280X was already trading blows with the GTX 780 when Far Cry 4 was released.

Just look at these benchmarks:

http://www.techspot.com/review/1006-the-witcher-3-benchmarks/page4.html

http://www.techspot.com/review/917-far-cry-4-benchmarks/page4.html

http://www.techspot.com/review/991-gta-5-pc-benchmarks/page3.html

http://www.techspot.com/review/921-dragon-age-inquisition-benchmarks/page4.html

Just to put things into perspective, look at these older benchmarks and notice the difference between the 780/680 and the 280X:

http://www.techspot.com/review/733-batman-arkham-origins-benchmarks/page2.html

http://www.techspot.com/review/615-far-cry-3-performance/page5.html

http://www.techspot.com/review/642-crysis-3-performance/page4.html

In the Crysis 3 benchmark, the 680 (not even the 780) blows the 7970 out of the water! The 7970 is basically the same card as the 280X. Nowadays, the 7970 shits on both the 680 AND the 780. Either the AMD guys are wizards, or nvidia does not give a shit.

At lower resolutions and settings, the GTX 780 is the clear winner, but the higher you go in resolution and graphics quality, the worse the GTX 780 performs. The GTX 780 is not even in the same league as the R9 290 anymore. Why should I keep my two GTX 780s when I can sell them, buy a couple of R9 290s for their better performance, and make $100 in the process?

Unless nvidia explains what is happening and fixes the problem, I will never buy their products again, because either nvidia is doing some shady shit behind the scenes to promote their Maxwell cards, or they simply build overpriced products that are clearly inferior to the competition and can't stand the test of time.

And to the fanboys who keep repeating the same crap about "teh maxwell tessellation technology", please remember that you might find yourselves in a similar position a year from now when nvidia releases the new series of graphics cards.

The recent patch made significant improvements in performance, at least for me. Before the patch, turning on V-Sync dropped the FPS to the mid 40s; now it works great.

Also, I used to get the mid 50s on High before the patch; now I get a stable 60 with a High/Ultra combination. And thankfully, the stuttering issue with the camera and movement no longer exists. Everything is smooth now.

Edit: Also, for some reason, my average GPU temps went up by about 6 or 7 degrees Celsius. They used to be in the high 70s; now they reach the mid 80s after the patch. Anyone else notice this?

Nvidia is screwing the 7 series owners to force them to buy 9 series cards. What a terrible business model. I will never again buy an Nvidia card after this slap in the face. The 280X is owning the 780 when they're not even in the same league.

Nvidia's been kinda shady, I feel like

You know, I used to think this whole conspiracy about nvidia ditching old devices (or even crippling their performance on purpose) was a bunch of crap. But a 960 outperforming a 780? Yeah, I think I should invest in a tinfoil hat.

For people running the game at 1440p, disabling AA improves performance by 5 to 15 FPS depending on how graphically demanding the area is.

Just tried with my 780 Ti. Looks like this card is now obsolete; this game is killing it at Ultra with HairWorks. I'm getting between 20 and 45 FPS at the start at 1440p. All the new games this year will make Kepler a dead horse. I was waiting for the 980 Ti, but I might just have to bite the bullet and pick up a Titan X, since I really want to play this game decently. I heard a rumour that the 980 Ti will be faster than the Titan X but cheaper. What a dilemma.

I'm kind of cheesed that a 780ti has become such crap all of a sudden.

SLI 780s here. Playing at 2K with everything on High and getting FPS in the low 50s with HairWorks off. Very disappointing. The game looks nice, but it's nothing revolutionary. GTA V looks better AND runs much better.

Seems like Nvidia has favored Maxwell more and more over Kepler

They've provided some nice comparisons for folks to play with to examine 1) what it looks like on PC and 2) how the settings play out

http://www.geforce.com/whats-new/guides/the-witcher-3-wild-hunt-graphics-performance-and-tweaking-guide

Of course, GW stuff eats hardware like candy...

"Favored" implies they actually put any effort into optimizing the driver/game for the 700 series.

Look at these benchmarks.

http://www.pcgameshardware.de/The-Witcher-3-PC-237266/Specials/Grafikkarten-Benchmarks-1159196/

The 780 3GB is getting pwned by the 285 2GB, which means this shit performance has nothing to do with memory, but with nvidia not giving a damn about cards that were released 2 years ago or less.

Even with HairWorks turned off, the 780 is losing to a card that costs half the price. AMD is starting to look more attractive for my next GPU purchase. I mean, they have crap drivers, but at least their top-end GPUs don't become obsolete after a couple of years.

Remember when the 780ti used to beat the 290X?

Damn, nvidia...