Lots of threads popping up now that this beast has released! Thought it might be useful if we keep performance stats and impressions in a nice bundle!

Posting impressions: no rules, just share at will.

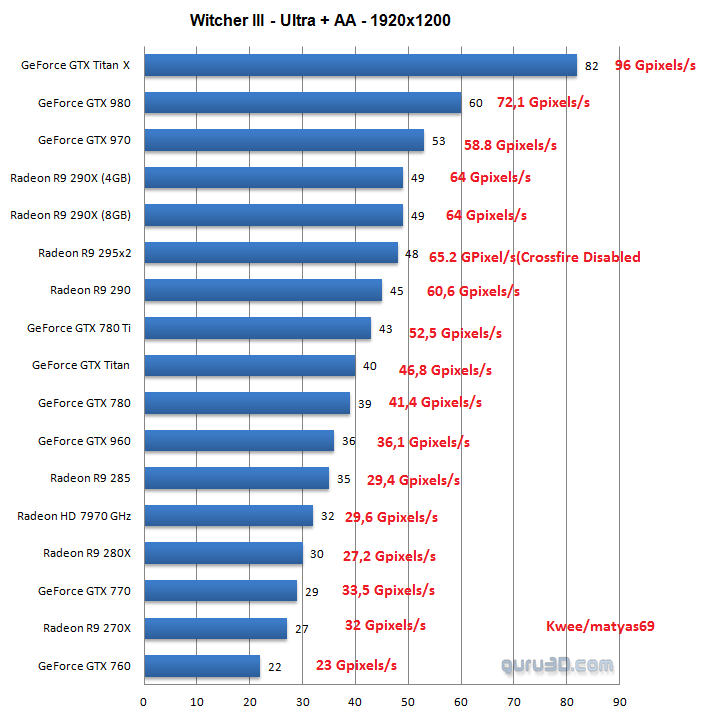

Posting benchmarks: if you provide benchmarks, please include details on the game settings you used and the hardware in your rig! Links to professional benchmarks are welcome as well.

I'll start with first impressions of my own, and add some benchmarks later:

This game is gorgeous (playing on Ultra @ 1080p)! Big fan of their lighting and color choices - they know how to create a truly vibrant world.

Combat is much improved over TW2. There are still some awkward things, but it no longer feels like trying to steer a boat around. He is quick and responsive; rolls are useful but not near-mandatory. It's just tighter and more fun than the last game.

I gotta say, after an hour and a half with it I'm even more stoked than I was before.
