@slimdogmilionar said:

Don't you mean 30 ROPS and 18 CU's balanced at 14 + 4? I know people will have their own opinions, but my question is: why is the PS4 not crushing the Xbox in graphics, even with devs not being able to fully use ESRAM? Most of what I hear is that the PS4 sacrifices gameplay for resolution, seeing as most games on Xbox play better according to people. So what if AMD, Nvidia, and M$ all move toward using these new APIs? Where does that leave the PS4? Using outdated development tech.

M$ cloud is worthless? Really? Sony's cloud is less complicated, with only 8,000 servers, and they have yet to crank it up.

M$ has a complicated cloud infrastructure like Google's (which Sony doesn't understand) that nobody believes in, despite the fact that it's working now, and not just on Xbox One: businesses are actually using M$'s and Google's cloud compute servers. Anything Google does will make money, and M$ followed suit, and it's paying off well enough that they now run commercials advertising the cloud to businesses.

Yep, the gimmick is right in everyone's face again, sort of like when M$ got flamed for introducing broadband gaming to the industry. Too bad that didn't work out for them like everyone said. Oh wait, broadband gaming is the standard for online gaming now, just like everyone thought the Xbox 360's unified architecture would become the standard for gaming consoles because Microsoft did it. Besides, AMD has already said DX12 is better and that Mantle will work hand in hand with DX12. Not to mention the Xbox is built like a PC, with a dGPU and a CPU that have separate RAM pools.

Sony would not be hiring someone to do this now if M$ hadn't made DX exclusive, or if they knew these major companies were going to shift to these new APIs.

http://www.dsogaming.com/news/amd-on-why-mantle-dx12-is-the-result-of-common-goals/

"Microsoft has been working with GPU hardware manufacturers to find new ways the GPU can be enabled to do the best rendering techniques with great quality and performance, and DX12 is the fruit of that collaboration. DX12 aims to fully exploit the GPUs supporting it. In addition, DX12 was the result of common goals as everyone participating in this program wanted to resolve performance issues that could not be resolved otherwise."

So DX12 was not done by M$ alone; AMD and Nvidia also had a hand in it. Imagine that: M$ bringing competing companies together to create something better.

I'm hoping to be able to play games at higher FPS on my outdated CrossFire setup when DX12 releases.

First of all, the PS4 is crushing the Xbox One...

Going by the hardware, the difference between the PS4 and Xbox One at the same resolution should be no more than 20 FPS in the worst case, mostly 15-17 FPS, yet the PS4 is kicking the living ass out of the Xbox One.

You know the power it takes for one GPU to double another's resolution? Yeah, I am sure you have no idea, just like the kind of power it takes for one GPU to double another in frames..

Lets see.

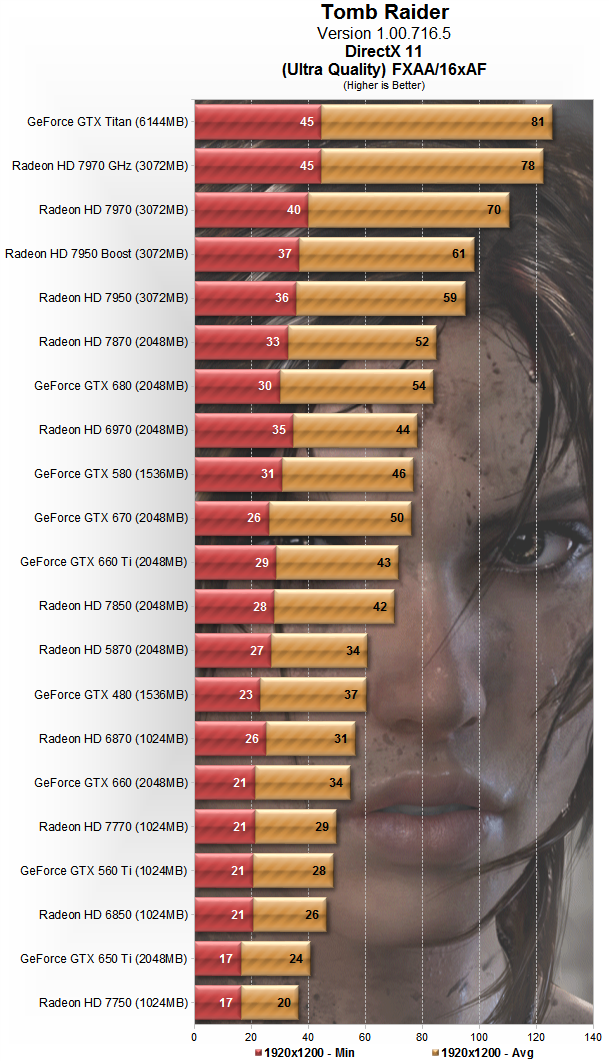

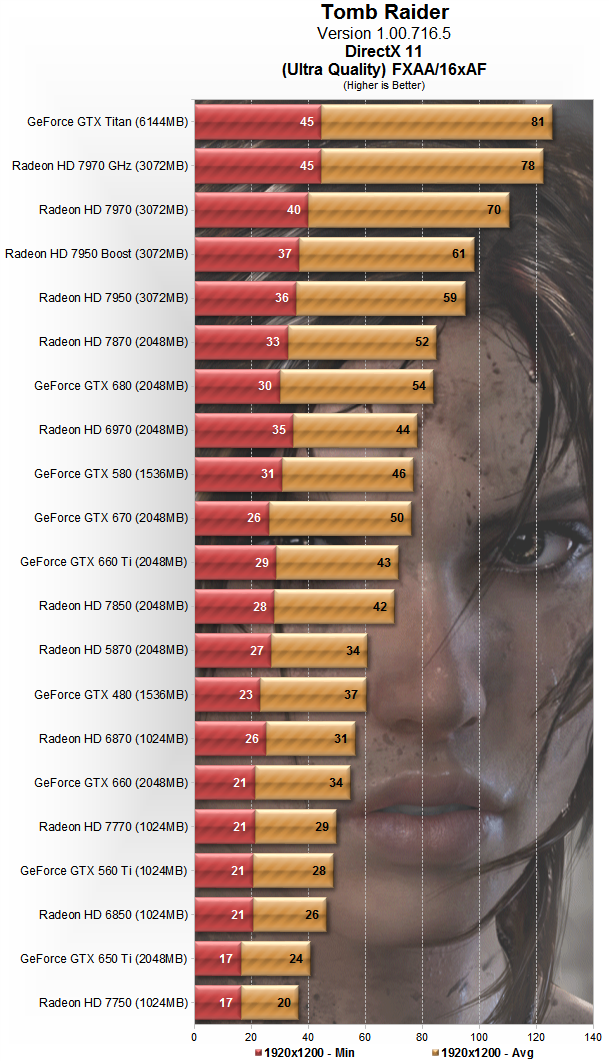

Tomb Raider is 1080p on both Xbox One and PS4, but that's where the similarity stops: the PS4 version runs at 60 FPS in many instances and the Xbox One version at just 30 FPS. That's a 100% difference, and the PS4 version is 20 FPS faster on average than the Xbox One version.
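A quick check of the "100% difference" arithmetic, as a minimal Python sketch. The 30 and 60 FPS figures are the Tomb Raider numbers quoted above; the helper name `percent_increase` is just for illustration.

```python
def percent_increase(low_fps: float, high_fps: float) -> float:
    """Return how much faster high_fps is than low_fps, in percent."""
    return (high_fps - low_fps) / low_fps * 100


# Tomb Raider figures from the post: 30 FPS (Xbox One) vs 60 FPS (PS4)
print(percent_increase(30, 60))  # 100.0
```

Going from 30 to 60 FPS doubles the frame rate, which is where the 100% comes from.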

Look at which GPUs come closest to the Xbox One and PS4 in frames: the difference between the PS4 and Xbox One should be around 15 frames, which is a little higher than a 7770 vs a 7850, yet the difference is as big as a 7950 vs a 7770. Why, and how is that possible?

That bold part is completely stupid and has nothing to do with this argument. Ryse is a sh** game and looks good; one isn't tied to the other. Infamous looks better, is more open, runs at 1080p, doesn't drop to 17 frames like Ryse, and is a better game too.

The PS4 is not 14+4, so you are an alt account of the other joke posters here who insist on the 14+4 crap.

The PS4 has 18 CUs and the Xbox One has 12; there is nothing that can change that. Even if it were true, using 4 CUs for compute is something the Xbox One would need to compensate for. The sad part is that if 4 of the Xbox One's CUs are used for compute to level the playing field, the Xbox One ends up with 8 CUs for rendering. Maybe that is the reason why Ghosts is 720p on Xbox One and 1080p on PS4..lol. Same with MGS5 as well: 1080p 60 FPS on PS4 with extra effects, 720p on Xbox One, and that came out 1 month ago..
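For reference, the raw ratios behind the CU and resolution argument, as a quick Python sketch. It only uses the CU counts and resolutions mentioned above, and the second ratio assumes (hypothetically, per the disputed 14+4 split) that both consoles set 4 CUs aside for compute. It ignores clock speeds, memory bandwidth and ROPs, so it is a ratio check, not a performance model.

```python
# Figures as stated in the post (not measured here)
PS4_CUS = 18
XBOX_ONE_CUS = 12
COMPUTE_CUS = 4  # hypothetical: the "+4" from the disputed 14+4 split

PIXELS_1080P = 1920 * 1080  # 2,073,600 pixels per frame
PIXELS_720P = 1280 * 720    # 921,600 pixels per frame


def ratio(a: float, b: float) -> float:
    """How many times larger a is than b."""
    return a / b


# Total CUs: PS4 vs Xbox One
print(ratio(PS4_CUS, XBOX_ONE_CUS))  # 1.5
# Rendering CUs if both consoles set 4 CUs aside for compute (14 vs 8)
print(ratio(PS4_CUS - COMPUTE_CUS, XBOX_ONE_CUS - COMPUTE_CUS))  # 1.75
# Pixels per frame: 1080p vs 720p
print(ratio(PIXELS_1080P, PIXELS_720P))  # 2.25
```

Going from 720p to 1080p means pushing 2.25 times as many pixels every frame.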

Sony's cloud isn't there to magically boost performance like MS wanted people to believe, only for MS to own themselves when developers said it was mostly for dedicated game servers, and maybe baked lighting or AI, which are things that don't need to be constantly refreshed. Mind you, the majority of Xbox One games don't use the cloud for AI, because that would mean anyone who bought those games must be online.

MS introduced what? Hahahahaaaaaaaaaaaaaaa Broadband gaming..... to the console market..?

hahahaaaaaaaaaaaaaaaaaa............

The PS2 did that before the Xbox, you buffoon..hahaha

Since before launch, Sony stated that the PS2 would connect to the internet for online play through an add-on, which supported both 56k and broadband, and it was released before Xbox Live, dude. In fact, the first game to run on the broadband network adapter, THPS 3, was released before the adapter itself. You know that game came out before the Xbox was even out, right, in 2001?

So yet again, another thing lemmings claim MS did that they didn't.

AMD has released two new videos in which the red team reveals the reasons why both Mantle and DirectX 12 are really important to PC gaming. As AMD noted, both Mantle and DirectX 12 will benefit existing customers, and will offer better performance for all games supporting them.

From your own link, selective reader...

If you don't get the drift..lol, LibGNM is like Mantle..lol

By the way, most of those performance issues are not found on PS4 because those issues were created by DX in the first place..hahahaaaaaaaaaaaa

PS4’s library and Mantle are similar

The good news is that the mantle API will be similar to the PS4 library. This was revealed at AMD’s APU13 presentation, and in addition they’ll be pushing Frostbite’s design based on these key pieces of technology. The rep speaking also mentioned that the Mantle API and the PS4’s library are going to be much closer together than Mantle and Microsoft’s DirectX11. DX11 as we know has many issues, and a lot of the problems revolving around PC performance is currently directly linked to DX11. The similarity between Mantle and the PS4 isn’t the only one for AMD, there was news recently that AMD’s TrueAudio technology has more than a little in common with the PS4’s Audio DSP (article).

AMD’s Guennadi Riguer was speaking during the developer summit and said that the current API’s are only capable of getting games developers “so far”.

http://www.redgamingtech.com/amd-mantle-works-on-nvidia-gpus-100000-draw-calls-similar-to-ps4-api-library/

Butbutbut DX12....Sony is copying ...hahahahaaa

The common goal between DX12 and Mantle is being low-level and getting rid of the PC-like bottlenecks created by earlier DX versions in the first place. DX was never an ace vs the PS3, and it won't be now that the hardware is friendlier..hahahaha

But like I already told you, keep the dream alive..lol
