Note that AMD's vendor-specific GL_AMD_sparse_texture(1) extension was superseded by GL_ARB_sparse_texture(2).

GL_ARB_sparse_texture was released alongside OpenGL 4.4 (as an ARB extension rather than a core feature), AMD doesn't support OpenGL 4.4(3) at this time, and AMD's GL_AMD_sparse_texture extension is dead.

References

1. https://www.opengl.org/registry/specs/AMD/sparse_texture.txt

2. http://www.opengl.org/registry/specs/ARB/sparse_texture.txt (it has NVIDIA's input)

3. http://www.khronos.org/news/press/khronos-releases-opengl-4.4-specification

Without the ARB's support, it's a dead-end API.

----------------

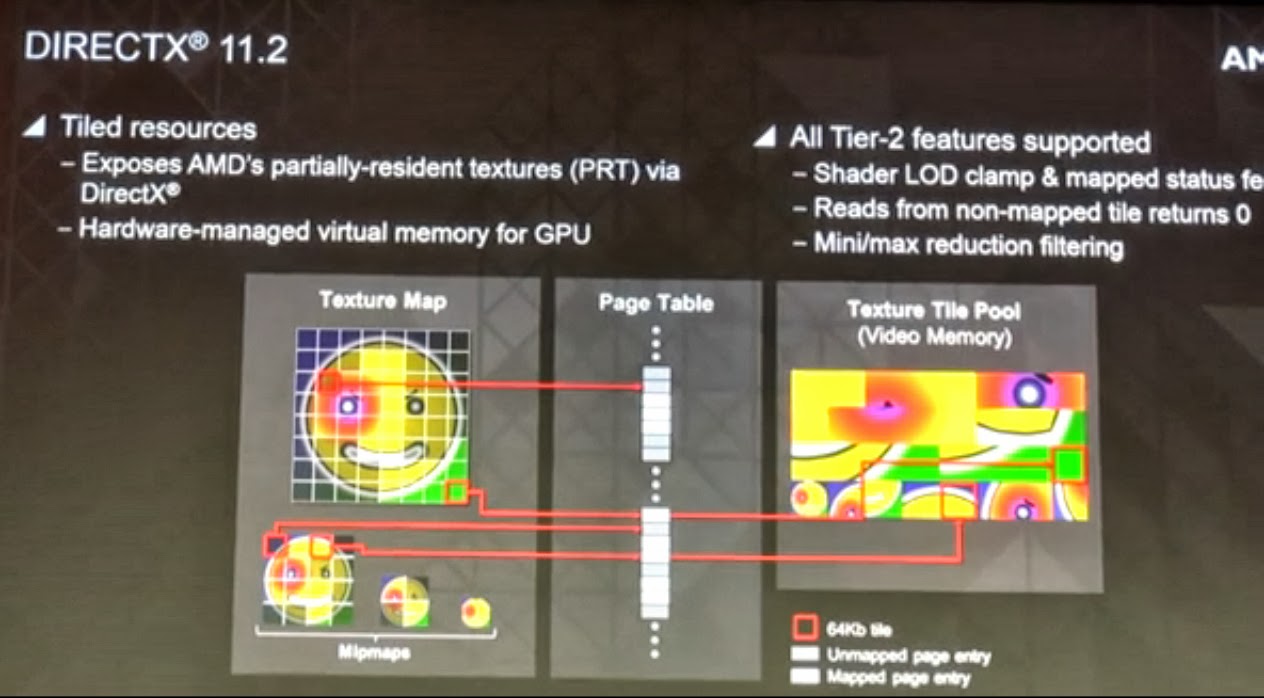

For textures that fit within the 6 GB of memory, AMD PRT gives a larger performance gain on X1(1) than on PS4(2).

1. X1's TMU fetch source ranges from 68 GB/s** (DDR3) to 204 GB/s** (ESRAM). The Hot Chips presentation stated a peak of 204 GB/s** BW (bandwidth). "Tiling tricks" need to be applied for textures (via AMD PRT) and render targets.

2. PS4's TMU fetch source remains at 176 GB/s**.

**theoretical peak values.

For textures larger than 6 GB, AMD PRT can be applied to HDD or Blu-ray sources.

In terms of AMD PRT functionality, AMD Kaveri APU's single-speed memory design is similar to PS4's single-speed memory design, and the only large difference is memory speed, e.g. Kaveri's 128-bit DDR3-2xx0 MHz vs PS4's 256-bit GDDR5-5500 MHz.
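To make the memory speed comparison concrete, here is a rough sketch of how theoretical peak bandwidth follows from bus width and transfer rate. It assumes the "5500" figure is the effective transfer rate in MT/s, and the 128-bit DDR3-2133 configuration is a hypothetical example only (the exact Kaveri memory speed is not given above).

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfer_rate_mt_s: float) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mt_s * 1e6 / 1e9


# PS4 figures as quoted in the post: 256-bit bus, GDDR5 at 5500 MT/s effective.
ps4 = peak_bandwidth_gb_s(256, 5500)

# Hypothetical 128-bit DDR3-2133 APU setup, chosen only for illustration.
apu_example = peak_bandwidth_gb_s(128, 2133)

print(f"PS4 GDDR5 peak:        {ps4:.1f} GB/s")
print(f"128-bit DDR3-2133 peak: {apu_example:.1f} GB/s")
```

The PS4 result matches the 176 GB/s theoretical peak quoted earlier; real-world throughput will be lower.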

PS4's PRT functions with just HDD/Blu-ray -> GDDR5.

X1's PRT functions with HDD/Blu-ray -> DDR3 -> ESRAM.

Kaveri's PRT functions with just HDD/SSD -> DDR3.

A gaming PC with a dGPU's PRT functions with HDD/SSD -> DDR3 -> GDDR5.
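As a loose illustration of the idea behind PRT (only the tiles that are actually needed get copied from the slow source into fast memory), here is a minimal sketch. The `TileResidency` class, its LRU eviction policy and the tile coordinates are all hypothetical and not taken from any AMD API; the paths are the ones listed above.

```python
from collections import OrderedDict

# Data paths as listed in the post, slowest source first.
PRT_PATHS = {
    "PS4": ["HDD/Blu-ray", "GDDR5"],
    "X1": ["HDD/Blu-ray", "DDR3", "ESRAM"],
    "Kaveri": ["HDD/SSD", "DDR3"],
    "Gaming PC (dGPU)": ["HDD/SSD", "DDR3", "GDDR5"],
}


def copy_hops(path):
    """Number of copies a tile makes on its way to the final memory pool."""
    return len(path) - 1


class TileResidency:
    """Hypothetical tracker of which texture tiles sit in fast memory."""

    def __init__(self, capacity_tiles):
        self.capacity = capacity_tiles
        self.resident = OrderedDict()  # tile -> None, oldest first

    def request(self, tile):
        """Return True on a hit; on a miss, load the tile and return False."""
        if tile in self.resident:
            self.resident.move_to_end(tile)  # mark as most recently used
            return True
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)  # evict least recently used
        self.resident[tile] = None
        return False


cache = TileResidency(capacity_tiles=2)
results = [cache.request(t) for t in [(0, 0), (0, 0), (1, 0), (2, 0), (0, 0)]]
print(results)
for name, path in PRT_PATHS.items():
    print(name, "->", copy_hops(path), "hop(s)")
```

Real implementations leave the residency and eviction policy to the game engine; LRU is just the simplest choice to show here.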

So basically your argument is that an OpenGL extension is dead. Hahaha.

Industry Support

“AMD has a long tradition of supporting open industry standards, and congratulates the Khronos Group on the announcement of the OpenGL 4.4 specification for state-of-the-art graphics processing,” said Matt Skynner, corporate vice president and general manager, Graphics Business Unit, AMD. “Maintaining and enhancing OpenGL as a strong and viable graphics API is very important to AMD in support of our APUs and GPUs. We’re proud to continue support for the OpenGL development community.”

From your own link, selective reader...

1.

The same discussion with ESRAM as well - the 204GB/s number that was presented at Hot Chips is taking known limitations of the logic around the ESRAM into account. You can't sustain writes for absolutely every single cycle. The writes is known to insert a bubble [a dead cycle] occasionally... One out of every eight cycles is a bubble, so that's how you get the combined 204GB/s as the raw peak that we can really achieve over the ESRAM. And then if you say what can you achieve out of an application - we've measured about 140-150GB/s for ESRAM. That's real code running. That's not some diagnostic or some simulation case or something like that.

Digital Foundry: So 140-150GB/s is a realistic target and you can integrate DDR3 bandwidth simultaneously?

Nick Baker: Yes. That's been measured.

http://www.eurogamer.net/articles/digitalfoundry-the-complete-xbox-one-interview
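For anyone wondering how the "one bubble in every eight cycles" remark lines up with the 204 GB/s figure, here is a back-of-the-envelope sketch. The 853 MHz clock and 128 bytes per cycle per direction are assumptions not stated in the quote above, so treat the result as an approximation.

```python
ESRAM_CLOCK_HZ = 853e6        # assumption: GPU/ESRAM clock
BYTES_PER_CYCLE = 128         # assumption: per direction (read or write)


def esram_peak_gb_s(clock_hz, bytes_per_cycle, write_duty):
    """Combined read + write peak, with writes active only part of the time."""
    one_way = clock_hz * bytes_per_cycle / 1e9
    return one_way * (1 + write_duty)


# Writes hit a dead cycle one time in eight, so they run 7/8 of the time.
peak = esram_peak_gb_s(ESRAM_CLOCK_HZ, BYTES_PER_CYCLE, 7 / 8)

# The measured 140-150 GB/s from the interview, as a share of that peak.
low_share = 140 / peak
high_share = 150 / peak

print(f"combined peak: {peak:.1f} GB/s")
print(f"measured share of peak: {low_share:.0%} to {high_share:.0%}")
```

Under these assumptions the combined peak comes out just under 205 GB/s, and the measured range is roughly two thirds to three quarters of it.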

Don't mix Rebellion's claims with ESRAM speed; they never claimed that. ESRAM can't sustain 204 GB/s, as stated by MS 2 months after the Hot Chips conference. It can do 140 to 150 GB/s, which is slower than the PS4's 176 GB/s bandwidth; even if you take away the 20 GB/s for the CPU, the PS4 is still faster.

2. Which, again, doesn't matter for all intents and purposes. GCN doesn't need ESRAM to use PRT, and I already quoted that from AMD themselves: the textures need to be taken from the HDD and partially loaded into the GPU RAM, and system RAM has nothing to do with it. I quoted it and posted it to you again, and you still ignore it because you only read what serves you best and ignore the rest, just like when you quoted Activision on COD Ghosts being 1080p and you were wrong.

Oh, and loading textures directly into DDR3 will affect performance. I don't think DDR3 will be used other than as a final destination after the data passes through ESRAM. Turn 10 gave an example of this already: the sky, which is static and does nothing, can be placed in the main memory bank, but the cars and other stuff that demand speed are placed in the ESRAM.

PRT works on PS4, period, and no GCN GPU needs ESRAM to make it work. PRT is a way to save memory, which for now the Xbox One and PS4 both have to waste.

@tormentos:

updated conversation from Brad Wardell:

Brad Wardell - "One way to look at the XBox One with DirectX 11 is it has 8 cores but only 1 of them does dx work. With dx12, all 8 do."

Some guy - "Xboxone alredy does that. All those dx 12 improvements are about windows not xbox."

Brad Wardell - "I'm sorry but you're totally wrong on that front. If you disagree, better tell MS. I'm just the messenger."

Some guy - "in all materials published they talk about PC. They only mention XBOX as already hawing close to zero owerhead."

Brad Wardell - "XBO is very low on overhead. Overhead is not the problem. It is that the XBO isn't splitting DX tasks across the 8 cores."

Multi-core issue:

Some guy - "I thought the Xbox One had 6 cores available for gaming and 2 cores reserved for the OS, does DX12 change this?"

Brad Wardell - "not that I'm aware of. #cores for gaming is different than cores that interact with the GPU."

Did you watch that video demo where they used 3DMark to show the workload on the CPU, where one core was the main thread and pulled the biggest load, and then they switched to DX12 and the workload was split evenly across all cores? This might be a pretty big deal. Infamous had a CPU bottleneck, right? It might be that the PS4 currently doesn't split the load evenly across the cores either, but what do I know. I'm not a dev.

That is a joke. DX doesn't use just 1 core of a multicore CPU; in fact, the example given using screens showed how all cores were working on DX11. What they did was improve timing, which was pretty easy to see on the screen MS showed.

Not only that, the Xbox One doesn't have 8 cores to use, it has 6, so you already have 2 wrong statements there. All cores on the CPU have been used on consoles for years, and on PC as well. It took more time there, but we all know games demand multicore CPUs, and DX12 isn't here yet, so yeah, those cores are being used.

The load was already split, but most of the work was done by the first core. If you see what they do, they lower the first core's time greatly while increasing the secondary cores' times; in fact, on the screen shown you can compare how they got to cutting the timing in half. But all cores were already in use. That demo was for PC, not the Xbox One, which uses only 6 cores for games (already confirmed), not 8, so he was wrong.
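A toy model of that rebalancing, assuming the CPU side of a frame finishes only when the busiest core finishes. The per-core millisecond values are made up for illustration and are not taken from the demo.

```python
def cpu_frame_time_ms(per_core_ms):
    """The CPU side of the frame is done when the busiest core is done."""
    return max(per_core_ms)


# Hypothetical per-core times: same total work, different distribution.
before = [8.0, 2.0, 2.0, 2.0]   # first core carries most of the load
after = [4.0, 3.5, 3.5, 3.0]    # first core lowered, others raised

print("total work before/after:", sum(before), sum(after))
print("frame time before:", cpu_frame_time_ms(before), "ms")
print("frame time after: ", cpu_frame_time_ms(after), "ms")
```

All four cores are busy in both cases; only the balance changes, and the busiest core's time is what gets cut in half.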

But here is a nice take on this..

Writer’s take: It’s relevant to mention that after the presentation some alleged that this would lead to multiplying the performance of the Xbox One’s GPU or of the Xbox One in general by two. That’s a very premature (and not very likely) theory.

What is seeing a 2x performance boost is the efficiency of the CPU, but it won’t necessarily scale 1:1 with the GPU. First of all, no matter how fast you can feed commands to the GPU, you can’t push it past its hardware limits, secondly, the demo showcased below (the results of which were used to make those allegations) has been created with a graphics benchmark and not with a game, meaning that it doesn’t involve AI, advanced physics and all those computations that are normally loaded on the CPU and that already use the secondary threads.

Thirdly, the demo ran on PC, and part of the advantage of D3D and DirectX 12 is to bring PC coding closer to console-like efficiency, which is already partly present on the Xbox One.

http://www.dualshockers.com/2014/04/07/directx-12-unveiled-by-microsoft-promising-large-boost-impressive-tech-demo-shows-performance-gain/
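The point about CPU gains not scaling 1:1 with the GPU can be sketched with a very simple model in which a frame takes as long as the slower of the two. The millisecond figures are hypothetical, picked only to show the shape of the effect.

```python
def frame_time_ms(cpu_ms, gpu_ms):
    """Simplified model: the frame is limited by the slower of CPU and GPU."""
    return max(cpu_ms, gpu_ms)


def fps(cpu_ms, gpu_ms):
    return 1000.0 / frame_time_ms(cpu_ms, gpu_ms)


# Hypothetical CPU-bound frame: 20 ms of CPU work, 16 ms of GPU work.
fps_before = fps(cpu_ms=20.0, gpu_ms=16.0)

# Double the CPU efficiency (20 ms -> 10 ms); the GPU work is unchanged.
fps_after = fps(cpu_ms=10.0, gpu_ms=16.0)

print(f"before: {fps_before:.1f} fps, after: {fps_after:.1f} fps")
print(f"overall speedup: {fps_after / fps_before:.2f}x")
```

In this sketch a 2x CPU improvement gives a 1.25x frame rate gain, because the GPU becomes the limit as soon as the CPU time drops below it.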

All you have to do is use some common sense. Much like Nvidia and AMD tech demos on GPUs, which never show AI and are made just to demo what the GPU can do, it is the same here: that demo had no AI, advanced physics or anything. Under the conditions the demo was made in, they gained 2x CPU performance, but when game code is running things will be different, because the CPU is in charge of more things than just simple code.

This is a fact with all Nvidia and AMD demos: you see impressive demos that surpass by a truckload the games that actually run on the GPU.

Another point is that this demo was done on PC, and Mantle as well as DX12 are APIs brought to PC for the sole purpose of emulating console APIs. So the Xbox One is already using part of the optimization you saw in that PC demo, and the improvement on Xbox One will not be the same. Many here don't actually understand what they are arguing. For example, one of the dudes arguing this with me claimed that Mantle may come to PS4, but if it doesn't, the Xbox One will have an advantage because it has DX12. That is totally false, because the PS4 doesn't need Mantle; Mantle is a PC API made to mimic the PS4 API, LIBGNM.

Tormentos keeps thinking he knows more than Brad Wardell, haha, silly boy

Oh, I don't know more than him, I just know that he is damage controlling for MS. The Xbox One doesn't have 8 cores for games, it has 6, and all cores on console CPUs have been in use since before DX11 even existed...lol

Hell, all SPEs on Cell were being used before DX11 existed as well..lol

Multicore use on consoles is older than on PC, so claiming that only 1 core does the job is a joke. It's not even like that on PC, and MS's example proves it: they compared timing between both code paths, and all the cores were running and being used under both DX11 and DX12.

It's not my fault that you don't know what the fu** you're talking about. Console APIs are what Mantle and DX12 are trying to emulate, and part of DX12 has already been working on Xbox One since day 1. So yeah, don't expect 2x performance; that is a joke to trick suckers like you into buying the Xbox One..lol
