Well, that confirms it then. It was suspected that the Xbox One was not a true hUMA uarch for this very reason: although the diagram shows a line going from the ESRAM to the link between the CPU and the cache-coherent access block, there were no details about what that link enabled.

Xbone not hUMA compliant? Will this be a drawback?

I never said that. Stop trying to change shit to prove a point. lol!! You're such a joke. That's why everyone laughs at your dumbass. HAHAHAHAHAHAHAHA!!!

I said "Just Add Water" has never made any Xbox games. That's a fact. You see any XBOX games in that list?

Oddworld made Xbox games, yes, but not this company. The CEO was trying to change Lanning's comments because he's obviously biased for PS. Get your story straight, jackass. Kid? LMAO!! I'm older than you, little boy. I was playing Pong while you were still in Mommy's belly. lol!!

| Release Date | Title | Genre | Platform(s) |
|---|---|---|---|
| 2009 | Gravity Crash | Multidirectional shooter | PlayStation 3, PlayStation Portable |
| 2011 | Oddworld: Stranger's Wrath HD | First-person/third-person action-adventure | PlayStation 3, PlayStation Vita, Windows |
| 2012 | Oddworld: Munch's Oddysee HD | Platform | PlayStation 3, PlayStation Vita |
| 2014 | Oddworld: New 'n' Tasty | Side-scrolling, Platform | PlayStation 3, PlayStation Vita, PlayStation 4, Wii U, Windows, Mac, Linux |
| 2014 | Gravity Crash Ultra[7] | Multidirectional shooter | PlayStation Vita |

However, it remained an active, operating company during this period, primarily through the development of a movie called Citizen Siege, though to this day it has not been released.[1]

Currently, the company has returned to the video game industry, though so far its role has mostly been in guiding UK-based developers Just Add Water in resurrecting the Oddworld franchise through the remastering of existing titles and the development of new ones.[2] On July 16, 2010, Just Add Water

http://en.wikipedia.org/wiki/Oddworld_Inhabitants

By the way, you moronic fanboy, they tried to bring Oddworld to Xbox 360, but MS's sh**ty limitations didn't allow it. MS wanted the developer to charge $20 on Xbox 360 while the game was cheaper on PS3 and PC, which under MS's parity clause meant they would have had to raise the price on PS3 and PC. Not only that, by the time the first game was being made for PS3, the Xbox 360 had a size limit for arcade games: Oddworld was 2GB on PS3, and on Xbox 360 it didn't fit.

Developer Just Add Water says it will take a "miracle" to get its upcoming downloadable game, Oddworld: Stranger's Wrath, onto the Xbox 360 because of Microsoft's limiting download cap.

It's a one-two punch to the studio's gut. Oddworld: Stranger's Wrath was originally released on the Xbox, but it's not backward compatible with the 360. So Just Add Water decided to remake it, and bring it to not only the 360 but also the PS3 and PC.

For the latter systems, it's no problem, but the 360 isn't having it.

"Currently the title weighs in at 2.1GB and that is without any updates, so either we have to pull a miracle out of the bag with some type of amazing compression system, or ask Microsoft to increase their 2GB limit. However this limit is apparently hardware-led, so please let us try and make a miracle happen," wrote the development team on its blog

http://www.gamesradar.com/oddworld-xbla-plans-scrubbed-due-to-size-limit/

So no, Just Add Water is not biased in any way. MS fu**ed up, so stop your crying, you blind biased lemming..lol

Now do you have any more theories on how MS will magically catch the PS4...lol

Well, that confirms it then. It was suspected that the Xbox One was not a true hUMA uarch for this very reason: although the diagram shows a line going from the ESRAM to the link between the CPU and the cache-coherent access block, there were no details about what that link enabled.

Yep, I argued that a lot, and Ronvalencia pulled like 100 links and irrelevant crap to prove it wasn't true.

Funny enough, MS lied in their presentation when they claimed the CPU could see ESRAM. They even had a black line that supposedly represented the coherent connection between CPU and ESRAM. No wonder it didn't have any speed associated with it..lol

Another theory in which Ron is wrong.

lmao remember when lemmings said this POS was future proof?

Its replacement will be out in four years.

I just get a kick out of the fact that while Lems constantly rehash the 4.5GB available for developers argument regarding the PS4, their fuckup of a console needs to duplicate objects in RAM due to the lack of HSA/hUMA. The XBone needs almost 9GBs of RAM available to developers to pull off what devs can do with PS4s 4.5 and it would still be slow as molasses comparatively.

Once again, proof that Lems need to just shut up while they are at least going somewhere with their console (hey, it is selling faster than the WiiU) rather than trying to lock horns with PS4 and its fans constantly. The truth is only going to get uglier. Right now, very very few developers want to be honest about the XBone because they don't want to burn their bridges with MS or its fanbase. All that goes out the window at the first hint of weakness, just like developers blasted the PS3 in the second half of 2007.

Ice...cold lol

@clyde46: Exactly............

Oh, some of us do. I have argued this against Ron many times. By the way, I find it odd that he hasn't shown up even though he was tagged several times; normally he appears in a second to discuss anything technical. I guess this ownage is too big for him to handle.

Maybe you don't know what this means for the Xbox One, but I do.

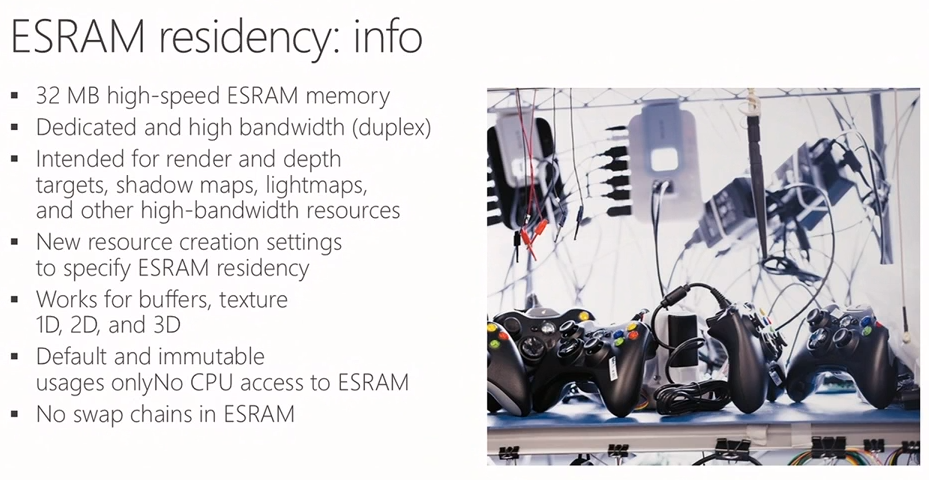

This means flushing the GPU manually.

This means duplicated data, since the CPU can't see data in the ESRAM.

This means lower efficiency in the long run.

Hell, even using physics and getting the results back in the same frame is not possible on Xbox One, while on PS4 it is..lol

The PS4 is more efficient, more future proof, less complicated and stronger. With that gap, no wonder Xbox One games were performing so badly.

Oh, I can get much more real with it. PS4 having 8GBs instead of 4 was literally a throat punch to Microsofts plans this gen. All that "Entertainment Console" talk? That's because MS thought they held all the cards. In the videogame industry, loose lips sink ships. There were developers with actual Alpha PS4 devkits who were shocked by the official reveal and Beta kits were shipped shortly thereafter so the real work could get underway.

I don't know how or why the Lems have this second coming of swagger lately, but the reality is that the PS4 torpedoes the XBone behind the curtain, but no one talks about it because there's a chance that MS can change its fate and they are expected to have a strong E3 this year. Also, XBone games will be reaching 1080p, but at dicey framerates and missing effects. The song and dance changed by Spencer because unlike most of the top brass at MS, he does have a grasp of what the challenges ahead are.

The PS4 was supposed to be a little bit faster and a good improvement over the PS3 for Sony, not the golden bullet that slit the XBone's throat. The question is whether or not Sony knew all along they were moving to 8GBs or if the legend of new GDDR5 fabrication making parts available at the last moment is true. Whatever the case may be, there is not a prayer that the XBone will match the PS4's performance. The one-off comparable games are merely a byproduct of Sony giving alpha kits to multiplat devs with half the memory. So, as cross-gen cross-plat games flush out, expect PS4 games to only improve from here on out. Even DX12 will take a development cycle to show improvement for third party XBone games (yes, first party games like Halo 5 are already test-beds for the new tools, in case you don't know how game development works). Meanwhile, the PS4's toolchains are a little more in constant play and evolution. This had the effect of a lot of delays. Even though that plays out poorly in the game media, the truth is that the developers would rather put out a better running game than a commercial turd. It's very competitive right now.

ESRAM and DDR3 were there from the start; they never gave GDDR5 a thought because of the speed between the multicore and CPU. And I think PS fanboys need to go back and listen to what Cerny said in his last interview on ESRAM.

He said the ESRAM method was very dev-unfriendly and would have taken too long for devs to tap into the power, and they needed performance now. He also said that once devs come to understand how to use that small bit of ESRAM, the possibilities are endless for the console. He sounded like he knew they would have a head start because of the straightforward method, but wasn't sure how long it would take or how fast devs would start using ESRAM.

Depends on the kind of game engine you use. The ESRAM is negligible for some games. Hence, Call of Duty running like ass on the XBone due to deferred rendering, as just one example. The ESRAM helps an otherwise completely bandwidth-starved system, but it isn't suitable for all things. Devs don't just push the ESRAM button and get things to work. Also, the GDDR5 is still much faster than the theoretical combined write to the framebuffer, even if the tools run at maximum efficiency.

Look at the bandwidth in these terms. XBone is like a two lane road where only Ford Taurus can drive in one lane and a Mustang in the other. Your "sauce" comes by getting as many Ford Tauruses and Mustangs to reach the mile marker (framebuffer) nose and nose with each other. Meanwhile, you can push a few more Mustangs past that mark for good measure and data flow, but your combined write only happens when the Taurus and the Mustang are synchronized. You have two choices and the simple one is a pain in the neck while the more efficient one will make you sweat bullets. There's a reason why Crytek had to literally make MS rewrite some app code on the XBone to get Ryse to run. Meanwhile, the PS4 bandwidth is a single lane road but only McLaren 650ses are running the course. More cars cross the mile marker (frame buffer) as a result and you have more data to the framebuffer if you will.

Now here comes the fucked up part about that. Each Mustang you run down the mile has a limit to how much weight it can carry as a result of the limited size of the ESRAM. Meanwhile, those McLaren 650s running on the PS4 can be jam packed. It makes no difference to the PS4 because it has a massive pool of memory and it's more efficient than a PC due to both the CPU and the GPU having access to the same addresses in memory. Starting to look more and more unfair, isn't it?

This is why I can't stress enough, you XBox fans need to quit comparing the XBone to the PS4 even though your fanboyism makes that an impossibility, you are going to soon find out the answer is:

@Shewgenja: that was totally bogus. Like I said, you have only been looking at 1.3 TF and the small ESRAM; it takes way more to make a game than pretty colors. Ryse still looks better than anything on console, using a straightforward method on a console that wasn't meant for it. They didn't use the two compute units or the two graphics compute units, no swizzle encoding, decoding, upscaler or display planes, no offloading locally or to the Azure cloud, and it uses only 3.5GB of RAM and 5 cores, while Infamous is using all 6 cores and 4.5GB. Now, out of that, who has the most room to grow?

1x AA below a 1080p framebuffer in Ryse says the PS4. FYI "swizzle" or PRT was done on OpenGL first. Silly Lem, the PS4 can do it as well, there's just no reason to for now because as you so adroitly pointed out with Infamous there is plenty of available RAM without it.

/sigh

You're going to continue this jihad aren't you? Just as I said, blinded by fanboyism.

I mean, you're really going to compare a corridor crawler action adventure game that can't has 1080p or 2xAA to an open world game on the PS4 and then ask the absurd question of whether there is headroom on the system? You didn't think that one through, did you? Not even a little bit.

@Shewgenja: I see that you are still on the system war shit. FYI, the PS4 doesn't have OpenGL, so shut that shit up, and data going to the CPU on the PS4 is slow as ****. PRT is very CPU hungry, so good luck with that. And yes, I'm comparing the two games, because Infamous is LINEAR as ****: you can't do anything that you can really do in an open-world game. You can't open doors, there's no interaction with people walking down the street, NPCs look the same, there's no interaction with the puddles on the ground and he doesn't react to the wet street, and there are no people even in the cars. I could go on and on, but you get the picture. I have the game at the house, and it's open but lacks person-to-world interaction for a game that put so much into single player and has no multiplayer at all.

What.? lol..

It was the most cost effective way STATED BY MS.

You know what cost effective means right.? Cheapest way..lol

No, he didn't say tap into the power, because ESRAM has no power whatsoever. Stop spreading lies.

"One thing we could have done is drop it down to 128-bit bus, which would drop the bandwidth to 88 gigabytes per second, and then have eDRAM on chip to bring the performance back up again," said Cerny. While that solution initially looked appealing to the team due to its ease of manufacturability, it was abandoned thanks to the complexity it would add for developers. "We did not want to create some kind of puzzle that the development community would have to solve in order to create their games. And so we stayed true to the philosophy of unified memory."

"I think you can appreciate how large our commitment to having a developer friendly architecture is in light of the fact that we could have made hardware with as much as a terabyte [Editor's note: 1000 gigabytes] of bandwidth to a small internal RAM, and still did not adopt that strategy," said Cerny. "I think that really shows our thinking the most clearly of anything."

http://www.gamasutra.com/view/feature/191007/inside_the_playstation_4_with_mark_.php?print=1
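The 88 gigabytes per second figure in that quote is plain bus-width arithmetic. A rough Python sketch, assuming the PS4's GDDR5 runs at an effective 5.5 Gbps per pin on a 256-bit bus (neither number is in the quote; they are the commonly cited specs), with `peak_bandwidth_gb_s` being a made-up helper name:

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: (bus width in bytes) * (Gbit/s per pin)."""
    return bus_width_bits / 8 * gbps_per_pin

# Assumed shipping configuration: 256-bit bus, 5.5 Gbps effective per pin.
full_bus = peak_bandwidth_gb_s(256, 5.5)
# The 128-bit option Cerny describes, at the same assumed per-pin rate.
half_bus = peak_bandwidth_gb_s(128, 5.5)

print(f"256-bit: {full_bus} GB/s, 128-bit: {half_bus} GB/s")
```

Under those assumptions, halving the bus width halves the peak figure, which lines up with the number Cerny gives. These are theoretical peaks, not what a game actually sustains.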

Cerny says they could have had 1 terabyte of bandwidth for the PS4 using the same method the Xbox One uses; still, they didn't.

ESRAM is not a flop generator; it's damn memory, for Christ's sake. Stop spreading stupidity. That's like saying that if the PS4 had the bandwidth of the 7970 it would equal a 7970 or even surpass it, which is what you want to imply with that part about Cerny knowing they would have an early advantage. The Xbox One GPU can't produce graphics better than the PS4 because it lacks the power; it could have 100GB/s of bandwidth and it wouldn't matter.

@Shewgenja: that was totally bogus. Like I said, you have only been looking at 1.3 TF and the small ESRAM; it takes way more to make a game than pretty colors. Ryse still looks better than anything on console, using a straightforward method on a console that wasn't meant for it.

They didn't use the two compute units or the two graphics compute units, no swizzle encoding, decoding, upscaler or display planes, no offloading locally or to the Azure cloud, and it uses only 3.5GB of RAM and 5 cores, while Infamous is using all 6 cores and 4.5GB. Now, out of that, who has the most room to grow?

First of all, 1.3TF is the maximum output of the Xbox One GPU. The Xbox One can't go beyond that without an overclock; this is GPU 101, period. No matter what you falsely claim, ESRAM isn't a damn performance booster or flop generator that will increase the Xbox One GPU's performance to 1.90TF. The most ESRAM can do is allow the Xbox One GPU to work at its fullest, which isn't saying much since that is 1.31TF, and not even all of it can be used for games.
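For anyone wondering where a figure like 1.31TF comes from, here is a rough Python sketch of the usual peak-FLOPS arithmetic. The inputs are assumptions taken from commonly cited specs rather than from this thread: 12 active CUs at 853 MHz for the Xbox One, 18 CUs at 800 MHz for the PS4, 64 shader lanes per GCN compute unit, and 2 FLOPs per lane per clock (one fused multiply-add). `peak_tflops` is a made-up helper name.

```python
def peak_tflops(compute_units: int, clock_mhz: float,
                lanes_per_cu: int = 64, flops_per_lane: int = 2) -> float:
    """Theoretical peak single-precision TFLOPS for a GCN-style GPU."""
    flops_per_clock = compute_units * lanes_per_cu * flops_per_lane
    return flops_per_clock * clock_mhz * 1e6 / 1e12

xbox_one = peak_tflops(12, 853)  # assumed: 12 active CUs, 853 MHz
ps4 = peak_tflops(18, 800)       # assumed: 18 active CUs, 800 MHz

print(f"Xbox One: {xbox_one:.2f} TF, PS4: {ps4:.2f} TF")
```

Memory bandwidth appears nowhere in the formula, which is the point being made above: faster memory can keep the shaders fed, but it cannot raise this ceiling.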

Ryse is a piece of sh** game where you basically can't move; all you do is hack and slash. It draws nothing but some characters and some distant buildings which you can't even access. It is the pinnacle of constricted games, and even then it can't run at 1080p or even hold 30 FPS. It's a hack and slash built like a corridor shooter, where you can do nothing and movement is severely restricted. Trying to imply that Infamous is just pretty colors is a joke. It is one of the most impressive games ever made, and basically the most impressive on consoles, with incredible particle effects second to none, some of the most crisp and clean graphics ever seen on a console, and the most impressive AA system done on any platform. It's a damn beauty, and the simple fact that this game is open, is 1080p and runs at over 30 FPS basically makes any comparison to Ryse irrelevant and stupid.

If Ryse were as open as Infamous, it would be 720p with DR3-like graphics. The reason it looks good is that all the resources are targeted at a small area, much like Tekken 5 was so great looking that it could pass as an Xbox game back in the PS2 days.

Did you just mix up 2 CUs with 2 CPU cores.?

That last bold part makes no sense whatsoever.

The Xbox One uses 6 cores because 2 are reserved for the OS and system; as of now that is also the case on PS4.

2 CUs are disabled in the hardware for redundancy. Those can't be activated again, period, because it would cause problems on any Xbox One that doesn't have 14 working CUs.

Ryse uses the upscaler: the game is upscaled to 1080p from 900p. Encoding and decoding are for movies and media content, which is irrelevant to Ryse. Why would they need to offload.? Titanfall uses the cloud for AI, and the bots are some of the dumbest bots ever put in a game. You have too much hope for the cloud because you don't understand how GPUs work and how latency and bandwidth will stop the cloud from doing anything significant.
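To put the 900p versus 1080p point in numbers, a quick Python sketch of the pixel counts involved. It assumes 900p means 1600x900 and 1080p means 1920x1080; the variable names are only for illustration.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels shaded per frame at a given render resolution."""
    return width * height

native_1080p = pixel_count(1920, 1080)
ryse_900p = pixel_count(1600, 900)

# Fraction of a native 1080p frame that is actually rendered before upscaling.
ratio = ryse_900p / native_1080p

print(native_1080p, ryse_900p, f"{ratio:.1%}")
```

So a 900p frame is roughly 69% of the pixels of a native 1080p frame, and the upscaler fills in the rest.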

Have you stopped to think why they didn't use 4.5GB or 5GB on Ryse.? It's because they don't need to. Ryse is not an open game like Infamous, so RAM consumption is lower. Hell, I think much of that usage was wasted on copy-and-paste code because of the Xbox One not being true HSA or hUMA.

So, what you're saying is that there are no destructible objects in the environment, that you have to complete every mission and quest in order and the game exists between a series of checkpoints? Cuz, while you're accusing me of some horse shit, you're plenty capable of it. All of those things you mentioned are more a byproduct of the game being first-generation than of the boundaries of the machine. All of those additional touches would have delayed the game for months and the payoff would have been very limited in terms of sales. Then again, maybe you're playing a different version of Ryse than I did. Were you able to leap from building to building in Ryse like an ancient Roman god at some point? You learn something new every day.

Also, using native 1600x900 resources to pass through AA at 1080p fb is a neat trick but it's not the same as rendering the image at 1080p and you apparently know enough to know better. You're nothing more than an elevated bullshit artist. You know damn fucking well that the XBone GPU is fillrate bound. All I can say is, it's 2014 and the XBone is not a FullHD game machine in the rapidly approaching 4K world. It is antiquated to the point of ridiculousness. A cable TV box in a Roku world rehashing the motion controls from the 360 generation that is desperately underpowered if VR takes off.

Am I lying to kick it yet? I'll be around if you want more of this.

Brutal.

@Shewgenja: you are a real special guy lol. How is the Xbox not a full HD console when it's the only console that has a game that's native 1080p @60 fps?

Like I said, you don't know what you're talking about. On ROPs: with its lack of bandwidth, the PS4 will never use its 32 effectively, and while you think the Xbox doesn't have enough ROPs, it can easily bypass that by going straight to shader compute.

4K will be seen on the Xbox before PS4 VR takes off. As CPU thread balancing starts being used, good luck with your overheated PS4.

Why don't you put the rest of the info up so everyone can see how superior ESRAM will be once implemented by devs???

http://www.dualshockers.com/2014/04/04/microsoft-explains-why-the-xbox-ones-esram-is-a-huge-win-and-helps-reaching-1080p60-fps/

Your link says to help get up to 1080p 60fps. How is that superior?

Quit

lying

to

kick

it.

*drops the mic*

Shew cleaning up in this thread still I see.

@misterpmedia: Shew is not cleaning up shit. When I hit him with facts, he goes on about old engines and Kojima trying to make money but keep face in Japan. We will see when Phantom Pain drops.

He had no comment on what I said, because all people do here is copy and paste without having any knowledge of their own.

Oh, you want to talk about game engines and Forza? Someone pinch me, he's going in for thirds. If last gen engines is an excuse then how come the XBone can't run some of that stuff anywhere near as well? I won't even go into Forza with you until you pull yourself out of your own mess with that one.

You may want to consider your options at this point. Not responding IS one of them.

COD Ghost 1080p 60FPS,MGS5 1080p 60FPS.

And unlike on the Xbox One, those aren't racing games, which are pretty easy to pull off at that resolution, especially with last-gen effects and no dynamic effects whatsoever.

The Xbox One can't do 1080p for most games and you are claiming it will do 4K...lol

What PS4 CPU overhead.? The last thing I saw showed the PS4 beating the Xbox One on an engine CPU-wise, which is funny because the Xbox One is supposed to be 100MHz faster..lol

Dat GDDR5 fake claim...lol

Funny, because Forza, which is the only 1080p 60 FPS game on Xbox One, uses an old engine with old effects and no dynamic effects whatsoever. It even uses baked car damage and cardboard-box spectators.lol

So basically you can't stop getting owned on this board..

I just reopened the thread. Is crackace having another meltdown?

Is GrenadeLicker still making unintelligent posts and crying because he doesn't have a clue.

There.. there kiddie cow. You'll get smarter some day.

Are you still butthurt over getting your ass smashed in the news article about Phil Spencer yesterday? Give it a rest, I'm nursing a headache.

Never got smashed anywhere. I remember everyone shutting you up a few times however. lol!! You got a headache from talking BS in panic mode. Hurts, don't it!?!?! lol!!

More demented damage control from crackace. I hope my favourite little panda bear is smashing his keyboard up out of rage. :(

For the context, read http://www.dualshockers.com/2014/04/04/microsoft-explains-why-the-xbox-ones-esram-is-a-huge-win-and-helps-reaching-1080p60-fps/

"There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that."

At this time, Microsoft didn't enable CPU to ESRAM via GPU access tunnel feature.

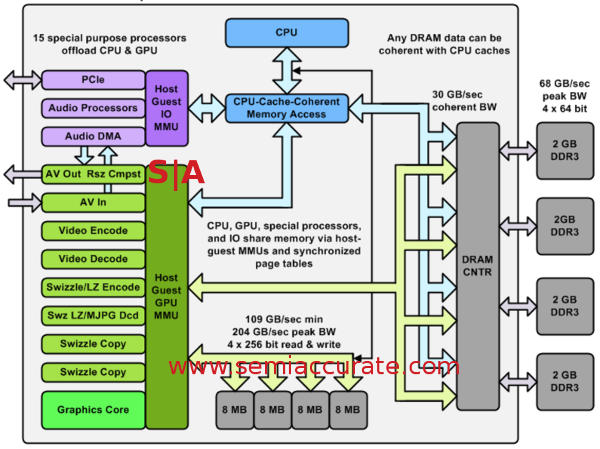

This diagram is from Microsoft's Hot Chips (hotchips.org) reveal.

There's a difference between hardware features and API/runtime features.

For example, DirectX 11.2 doesn't expose any of the additional features on my AMD GCN cards; those need DirectX 12, Mantle, TrueAudio or HSA runtimes. E.g. I know my 290X's 8 ACE units are being wasted under DirectX 11.2.

Also from http://www.dualshockers.com/2014/04/04/microsoft-explains-why-the-xbox-ones-esram-is-a-huge-win-and-helps-reaching-1080p60-fps/

"So the last thing you have to do to get it all composited up is to get it copied over to main memory. That copy over to main memory is really fast, and it doesn’t use any CPU or GPU time either, because we have DMA engines that actually do that for you in the console. This is how you get to 1080p, this is how you run at 60 frames per second… period, if you’re bottlenecked by graphics."

Sounds like Rebellion's "Tiling tricks".
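To put rough numbers on the 1080p claim, here is a back-of-the-envelope sketch in Python of how many full 1080p render targets fit in ESRAM at once. The 32 MB ESRAM size and the 4 bytes per pixel per target (e.g. a 32-bit colour or depth buffer) are assumptions on my part, not figures from the quote, and the function names are made up for illustration.

```python
# Assumption: 32 MB of ESRAM, counted as 32 * 1024 * 1024 bytes.
ESRAM_BYTES = 32 * 1024 * 1024


def target_bytes(width, height, bytes_per_pixel):
    """Size in bytes of one uncompressed render target."""
    return width * height * bytes_per_pixel


def fits_in_esram(targets):
    """Return (total_bytes, fits) for a list of (width, height, bpp) targets."""
    total = sum(target_bytes(w, h, bpp) for (w, h, bpp) in targets)
    return total, total <= ESRAM_BYTES


one_1080p = (1920, 1080, 4)  # assumed 4 bytes per pixel

for count in (1, 2, 4, 5):
    total, fits = fits_in_esram([one_1080p] * count)
    print(count, "targets:", total, "bytes, fits:", fits)
```

Under those assumptions four 1080p targets squeeze in and a fifth does not, which is why splitting the frame into tiles or keeping only some targets in ESRAM comes up at all.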

Well, that confirms it then. It was suspected that the Xbox One was not a true hUMA uarch for this very reason, and although there is a line going from the ESRAM to the link between the CPU and the cache-coherent access block, there were no details about what that link enabled.

Yep, I argued that a lot, and Ronvalencia pulled like 100 links and irrelevant crap to prove it wasn't true.

Funny enough, MS lied in their presentation when they claimed the CPU could see ESRAM. They even had a black line that supposedly represented the coherent connection between CPU and ESRAM. No wonder it didn't have any speed associated with it.. lol

Another theory in which Ron is wrong.

LOL, you missed its context yet again.

Read http://www.dualshockers.com/2014/04/04/microsoft-explains-why-the-xbox-ones-esram-is-a-huge-win-and-helps-reaching-1080p60-fps/

"There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that."

At this time, Microsoft didn't enable CPU to ESRAM via GPU access tunnel feature. You keep missing the details.

There's a difference between hardware features and API/runtime features. You're hitting a Microsoft runtime limitation.

There's very little need for CPU intervention during the GPU's ROP and TMU operations on ESRAM. The CPU is only interested in the final calculated results from the GPU.

ESRAM on X1 is being used as a fast scratch space for the GPU.

From http://timothylottes.blogspot.com.au/2013/08/notes-on-amd-gcn-isa.html:

DX and GL are years behind in API design compared to what is possible on GCN. For instance there is no need for the CPU to do any binding for a traditional material system with unique shaders/textures/samplers/buffers associated with geometry. Going to the metal on GCN, it would be trivial to pass a 32-bit index from the vertex shader to the pixel shader, then use the 32-bit index and S_BUFFER_LOAD_DWORDX16 to get constants, samplers, textures, buffers, and shaders associated with the material. Do a S_SETPC to branch to the proper shader.
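A toy CPU-side model of the idea in that quote, written in Python. It is not GCN ISA and not anyone's actual engine: the table lookup only stands in for the indexed descriptor load, and the function call stands in for the branch to the material's shader. Every name here (`Material`, `MATERIAL_TABLE`, `vertex_stage`, `pixel_stage`) is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Color = Tuple[float, float, float]


@dataclass(frozen=True)
class Material:
    constants: Dict[str, float]
    texture: str                                   # stand-in for a texture/sampler descriptor
    shader: Callable[["Material", float], Color]   # stand-in for a shader entry point


def flat_shader(m: Material, u: float) -> Color:
    k = m.constants["brightness"]
    return (k, k, k)


def gradient_shader(m: Material, u: float) -> Color:
    k = m.constants["brightness"]
    return (k * u, k * u, k)


# One table built up front; nothing is re-bound per draw.
MATERIAL_TABLE = [
    Material({"brightness": 0.8}, "stone.dds", flat_shader),
    Material({"brightness": 0.5}, "sky.dds", gradient_shader),
]


def vertex_stage(material_index: int) -> int:
    # The vertex stage only forwards a 32-bit index.
    return material_index & 0xFFFFFFFF


def pixel_stage(material_index: int, u: float) -> Color:
    m = MATERIAL_TABLE[material_index]  # analogous to loading descriptors by index
    return m.shader(m, u)               # analogous to branching to the right shader


print(pixel_stage(vertex_stage(0), 0.5))
print(pixel_stage(vertex_stage(1), 0.5))
```

The point of the sketch is only that the per-draw work on the "CPU" side shrinks to handing over one integer; everything else is resolved from the index on the "GPU" side.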

AMD GCN has hardware features to reduce CPU-related dependency, i.e. it's HSA's CPU/GPU fusion (AMD CPU department) vs a heavy GPGPU bias (AMD GPU department).

-------------------

On the PC, accessing the GPU's VRAM is via the GPU's memory controllers and crossbar switch i.e. you tunnel to GPU's VRAM via the GPU.


The ONE doesn't have that GPU in it.

Show me where MS has stated it. Besides, that GPU can't run DX12, so it is now debunked.

Didn't read the rest.

Top lel. Show me one PC graphics card that uses a pathetic secondary bank of ram as a crutch for limited bandwidth?

This time around, your retort completely betrayed the ghettoness of the XBone's pathetic architecture.

Read http://en.wikipedia.org/wiki/HyperMemory

However, to make up for the inevitably slower system RAM with a video card, a small high-bandwidth local framebuffer is usually added to the video card itself. This can be noted by the one or two small RAM chips on these cards, which usually have a 32-bit or 64-bit bus to the GPU. This small local memory caches the most often needed data for quicker access, somewhat remedying the inherently high-latency connection to system RAM.

X1's architecture is for boosting a low-to-mid-range PC box, i.e. getting more out of R7-260 non-X class GPUs. It's ATI's HyperMemory rebooted for 2013.

Mid-range gaming PC GPUs (e.g. Radeon R7-265) have 256-bit GDDR5.

Note that

R5 = low end.

R7 = mid end. R7-265 (closest to PS4) is highest mid-range R7. X1 basically sports R7-260 non-X with ESRAM booster. R7-260 is a cut down Bonaire GCN with 768 stream processors and 96 GB/s memory bandwidth. Microsoft attempted to boost their R7-260 class product into another SKU level via ESRAM booster.

R9 = high end.
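The 96 GB/s figure can be sanity-checked with the usual peak-bandwidth formula: bus width in bytes times per-pin data rate. A minimal sketch in Python; the 128-bit bus and 6.0 Gbps data rate for the R7-260, and the 5.6 Gbps rate for the 256-bit R7-265, are assumptions taken from public spec sheets as I remember them, not numbers stated in this thread.

```python
def peak_bandwidth_gb_s(bus_width_bits, data_rate_gbps_per_pin):
    """Theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


# Assumed configurations (bus width in bits, data rate in Gbps per pin).
cards = {
    "R7-260 (assumed 128-bit, 6.0 Gbps)": (128, 6.0),
    "R7-265 (256-bit, assumed 5.6 Gbps)": (256, 5.6),
}

for name, (width, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):.1f} GB/s")
```

These are theoretical peaks; real workloads get less, which is the whole argument about a small fast pool helping a narrow bus.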

Poor FoxBatAlpha...

Try educating yourself. Just because some cow perceives this technology as nothing useful, doesn't mean you should follow him. This tech is groundbreaking. So cutting edge it will have your little blue light on your DS4 blinking. It still has to be implemented and used fully on the ONE.

Look at Forza: 1080p 60fps, and that FPS is like a rock. Look at Ryse, beyond beautiful. These things were done without really using ESRAM or DX12. First party has a slight edge now, but the 3rd party devs will have to pick up the pace and learn how to use it efficiently.

The future will be glorious on the ONE.

And here comes the spin....

It isn't "not enabled", more like it isn't there. That diagram you show has no speed attributed to it; it is just a black line, which wasn't in the original leaks by the way, and which I suspect MS introduced to trick people into thinking they have HSA, which they don't.

They say it clearly: the CPU can't see the data, only the GPU can. Now stop quoting only what serves you best. The CPU can't see data while it is in ESRAM, which means non-HSA and non-hUMA, which the Xbox One wasn't either way because hUMA is one memory address space, not two.

So save the spin, you lost, and you argued this a lot. So yeah, straight from MS's own mouth: the Xbox One isn't HSA or hUMA, the CPU can't see data while it is in the ESRAM, and that also means manual flushes...

"At this time", my ass. Quote the part that says "At this time we didn't enable it, but we will later on".

Now don't run away like you always do and hide behind irrelevant crap. Quote MS saying they will enable the CPU to read from ESRAM, because if it was something so simple it would be enabled by now.

By the way, you are quoting the same link we did, so yeah, you lost.

No, you butthurt lemming, get Rosetta Stone and take a freaking course in English...

"There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that."

and we didn’t enable that."

and we didn’t enable that."

and we didn’t enable that."

It's not enabled... just like 2 CUs are not enabled. That means it can't be turned on, else you would have seen a claim saying they will enable it later on. That black line is not on the leaked diagrams, only in MS's presentation, and now all of a sudden, after saying it was coherent, they say: well, you know what, it is not coherent, the CPU can't see the ESRAM, only the GPU can, we didn't enable that.

Hahahahaaaaaaaaaaaa

It is you who is reading things out of context and adding sh** that's not there.

Oh, I told you a long time ago that ESRAM wasn't a cache but more like a programmable scratch pad. I told you that long ago, when you and others still called it a cache, and now you call it a scratch pad lol...

"There’s no CPU access here, because the CPU can’t see it,

"There’s no CPU access here, because the CPU can’t see it,

"There’s no CPU access here, because the CPU can’t see it,

"There’s no CPU access here, because the CPU can’t see it,

For the selective reader inside you. And I'll wait while you find me a quote from MS stating that they can enable the connection between CPU and ESRAM. I'll just wait, because what was said there is that it is not enabled on the Xbox hardware, much like 2 CUs are disabled and can't be turned back on.. lol

What kind of memory is on those cards? Is it paired up with GDDR3, DDR3 or GDDR5?

somewhat remedying the inherently high-latency connection to system RAM.

somewhat remedying the inherently high-latency connection to system RAM.

somewhat remedying the inherently high-latency connection to system RAM.

Once again, selective reading. It is there to help with the high-latency connection to SYSTEM RAM. Those GPUs that use HyperMemory are low end and use DDR3, so that is probably for laptops or all-in-one systems.

So yeah, on laptops they use a small ESRAM-like memory to boost a GPU running off DDR3. Basically you are confirming that the Xbox One operates like a laptop board, which has system memory as GPU memory and uses an ESRAM-like cache to speed things up.

He wasn't talking about low-end GPUs on laptops. He said show me a PC graphics card, not a laptop. What graphics card uses system RAM as memory and has a small ESRAM-like memory? Even that very low-end card uses DDR3; they don't use ESRAM-like memory on the board, nor do they use your machine's system RAM either.

You see that on laptops or all-in-one setups, not on standalone GPUs, so you did not prove him wrong.

What kind of memory is on those cards? Is it paired up with GDDR3, DDR3 or GDDR5?

He basically described a setup for laptops or all-in-one systems. PC laptops with a GPU often come with DDR3 memory and use that DDR3 as video memory as well, plus a small ESRAM-like memory to help keep the GPU from being starved bandwidth-wise. It is far from ideal or the best way to do things and he knows it; by now he is just spinning things, or partially quoting what he likes.

What kind of memory is on those cards? Is it paired up with GDDR3, DDR3 or GDDR5?

ATI's HyperMemory is enabled on all modern AMD dGPU cards i.e. go to "Screen Resolution" -> "Advanced Settings" -> Adapter tab.

For example

Total Available Graphics Memory: 7936 MB

Dedicated Video memory: 4096 MB.<---------------- GDDR5

System Video Memory: 0

Shared System memory: 3840 MB. <---------------- DDR3 (via PCI-E)

My R9-290 has 4 GB GDDR5.
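For what it's worth, the numbers in that Adapter tab add up as a plain sum. A small Python sketch of the arithmetic, assuming (as the listing suggests) that the total is simply dedicated plus system video plus shared memory; the class and field names are invented for illustration and are not a Windows or Catalyst API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdapterMemory:
    dedicated_video_mb: int   # on-card VRAM (GDDR5 in this example)
    system_video_mb: int
    shared_system_mb: int     # system RAM reachable over PCI-E

    @property
    def total_available_mb(self) -> int:
        return self.dedicated_video_mb + self.system_video_mb + self.shared_system_mb

    @property
    def shared_fraction(self) -> float:
        return self.shared_system_mb / self.total_available_mb


r9_290 = AdapterMemory(dedicated_video_mb=4096, system_video_mb=0, shared_system_mb=3840)
print(r9_290.total_available_mb)          # 7936
print(f"{r9_290.shared_fraction:.2f}")    # 0.48
```

So nearly half of the reported "total" is ordinary system RAM behind the PCI-E link, not fast local memory.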

Read HP's support site

HyperMemory = Total Available Graphics Memory

The amount of “total available graphics memory” is based on how much system memory the SBIOS reports to the operating system. We have observed that the SBIOS “carves-out” a small portion of this system memory. Due to this “carve-out”, there is a disparity of 1-2MB between the values in this document derived from the HyperMemory formula and values the user will see in either the Vista control panel or Catalyst Control Center.

To answer your question, it's GDDR5 for my R9-290, and it depends on the particular SKU, e.g. HP's HyperMemory example has a dedicated 128 MB.

Higher-end PC GPUs don't remove features that existed on lesser PC GPUs, i.e. HyperMemory is still useful when the user exceeds VRAM.

LOL, My R9-290X still has HyperMemory features you fool. Did you really think a higher grade dGPU doesn't have a low end feature?

A higher-grade PC GPU is usually a superset of a lesser PC GPU.

------------

ATI's HyperMemory is enabled on all modern AMD dGPU cards i.e. go to "Screen Resolution" -> "Advanced Settings" -> Adapter tab.

For example

Total Available Graphics Memory: 7936 MB

Dedicated Video memory: 4096 MB.<---------------- GDDR5

System Video Memory: 0

Shared System memory: 3840 MB. <---------------- DDR3 (via PCI-E)

My R9-290 has 4 GB GDDR5.

F**k, you failed basic IT support 101. You are wrong.

------------

Higher latencies reduce effective bandwidth, and HyperMemory's small, fast VRAM boosts the GPU's bandwidth.

Regardless of the relatively lower latencies of DDR3, X1's 68 GB/s is still less than ESRAM's bandwidth. Also, X1 doesn't have to deal with old PC chipsets. The bandwidth of the PC's PCI-E version 3.0 16-lane link (32 GB/s total) has exceeded that of yesteryear's GPUs equipped with 16 GB/s VRAM.
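A quick check of where the "32 GB/s total" comes from, as a Python sketch. It assumes the standard PCI-E 3.0 figures of 8 GT/s per lane with 128b/130b encoding and ignores packet overhead; the function name is made up.

```python
def pcie_gb_per_s(lanes, gt_per_s=8.0, payload_bits=128, encoded_bits=130):
    """Peak payload bandwidth in GB/s for ONE direction of a PCI-E link."""
    usable_gbit_per_lane = gt_per_s * payload_bits / encoded_bits
    return lanes * usable_gbit_per_lane / 8


one_way = pcie_gb_per_s(16)
both_ways = 2 * one_way
X1_DDR3_GB_S = 68.0  # figure quoted in the post above

print(f"x16 one direction:   {one_way:.2f} GB/s")    # 15.75
print(f"x16 both directions: {both_ways:.2f} GB/s")  # 31.51
print("below X1 DDR3 figure:", both_ways < X1_DDR3_GB_S)
```

Note the 32 GB/s headline counts both directions added together; a GPU reading from system RAM only gets the one-way figure, a bit under 16 GB/s.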

Your link talks about DDR3 being used as video RAM, spinner... lol

So yeah, you got owned again, selective reader.

The R9 290X has HyperMemory? It has ESRAM-like memory?

http://www.amd.com/en-us/products/graphics/desktop/r9#

I don't see it mentioned here..

You still haven't answered his question: what GPU on PC has ESRAM-like memory? No card does, unless you talk about old cards or very low-end ones, which would actually reconfirm why the Xbox One has DDR3 and not GDDR5.

@tormentos:

You didn't read HP's support link on HyperMemory. RTFM.

My R9-290 owns you since it still has HyperMemory features, and I don't need the amd.com website since I'm running Internet Explorer on it. LOL.

The big difference is that the R9-290 has 4 GB of GDDR5 at 320 GB/s instead of 128 MB. The largest VRAM-equipped Hawaii GCN is the FirePro W9100 with 16 GB. The HyperMemory/TurboCache construct still exists on current flagship GPUs.

I answered: the HyperMemory feature still exists on flagship GPUs since VRAM size is a moving target, e.g. FirePro W9100 has 16 GB.

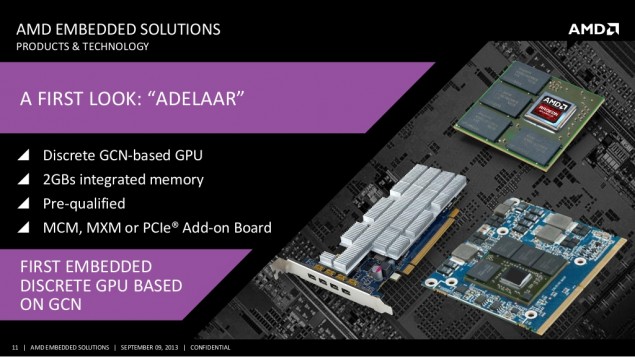

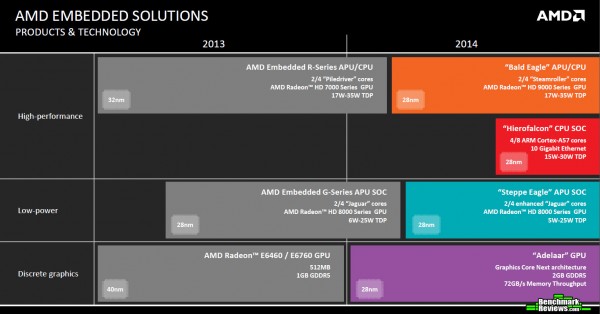

Some Radeon HD GCN Embedded SKUs have embedded GDDR5 memory chips on the GPU package, and they are larger than 32 MB.

This SKU has a 14 CU GCN with 2 GB of embedded GDDR5 chips. Embedded 2 GB GDDR5 has more compatibility and performance with legacy software.

@ronvalencia

I was led to believe that the HyperMemory function was a small high-bandwidth local framebuffer, not something off-die or off-card like a bank of sys mem. You'll have to pardon my ignorance on the subject as I am primarily an NVidia guy and new to all this ATI card.. err.. AMD card stuff.

That is not the same as the Xbox One. Since when does the Xbox One have GDDR5 + ESRAM?

Adelaar isn't the same as the Xbox One. It has embedded memory, but that is all it has; it doesn't pair GDDR5 with embedded memory the way the Xbox One pairs DDR3 with ESRAM. Adelaar has 2 GB of GDDR5 embedded as video memory and 72 GB/s of bandwidth, basically what a 7770 has.

So you are still not answering his question, dude. Adelaar is in no way like the Xbox One setup.
