Apparently, the XBOX ONE's ESRAM + DirectX 11.2 is a very powerful combination: http://www.giantbomb.com/xbox-one/3045-145/forums/x1-esram-dx-11-2-from-32mb-to-6gb-worth-of-texture-1448545/#51

Interesting read on the power of XBOX ONE's ESRAM + DX 11.2


Lems are still looking for the secret sauce? Come on, even Ron gave up on the quest a couple of weeks ago!

My posts are still the same. I don't view X1 vs. PS4 as black or white.

AMD Mantle > DirectX 11.2 (i.e. the Direct3D part). AMD Mantle still supports MS's HLSL.

I think it is fair to say both the PS4 and XB1 are gonna be really good machines. I honestly think we need to quit worrying about specs and start hyping the games....

AMD PRT (partially resident textures) is not exclusive to DirectX.

AMD PRT has more value on X1 than on PS4, i.e. X1 has two memory pools of different speeds, one of which is a smaller, high-speed pool, while PS4 has a single-speed memory pool.
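To make the PRT point concrete: with partially resident textures, a large virtual texture is cut into fixed-size tiles, and only the tiles currently needed are mapped into a small physical pool (on X1, that pool could be the fast ESRAM). The Python sketch below assumes the 64 KB tile size used by Direct3D 11.2 tiled resources and a simple least-recently-used eviction policy; `TilePool` and `tile_count` are hypothetical names for illustration, not the DirectX or AMD API.

```python
from collections import OrderedDict

TILE_BYTES = 64 * 1024  # Direct3D 11.2 tiled resources use 64 KB tiles


def tile_count(size_bytes: int) -> int:
    """How many 64 KB tiles fit in a buffer of the given size."""
    return size_bytes // TILE_BYTES


class TilePool:
    """Toy partially-resident-texture model (hypothetical, not a real API):
    a small pool of physical tile slots backing a much larger virtual texture."""

    def __init__(self, physical_slots: int):
        self.page_table = OrderedDict()  # virtual tile id -> physical slot, oldest first
        self.free_slots = list(range(physical_slots))
        self.misses = 0

    def access(self, virtual_tile: int) -> int:
        """Return the physical slot holding a tile, streaming it in on a miss."""
        if virtual_tile in self.page_table:
            self.page_table.move_to_end(virtual_tile)  # hit: mark most recently used
            return self.page_table[virtual_tile]
        self.misses += 1
        if self.free_slots:
            slot = self.free_slots.pop()
        else:
            # Pool full: evict the least recently used tile and reuse its slot.
            _, slot = self.page_table.popitem(last=False)
        self.page_table[virtual_tile] = slot
        return slot


# 32 MB of fast memory holds 512 tiles; a 6 GB virtual texture has 98304 tiles.
print(tile_count(32 * 1024**2), tile_count(6 * 1024**3))  # 512 98304

pool = TilePool(physical_slots=4)
for tile in [0, 1, 2, 3, 0, 5, 6]:
    pool.access(tile)
print(len(pool.page_table), pool.misses)  # 4 6
```

The toy run shows the trade-off: only 4 slots are ever resident no matter how many distinct tiles the virtual texture has, and the cost moves to the misses, which have to be streamed in.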

Wait, so your new argument is Mantle? Mantle is also for PS4. And the PS4 has its own to-the-metal API, which makes Mantle redundant. Or are you going to pretend now that consoles copied to-the-metal coding from PC? Consoles always had that option, even if MS chose to ignore it and use DirectX.

Mantle will change nothing about the advantage the PS4 has; on PS4 you can code to the metal.

PRT is a fix for a problem that doesn't exist on PS4 and Xbox One. Because it saves memory, it will benefit PC GPUs with little RAM more than the PS4 and Xbox One, which already have 5GB or more to use.

Hey Ron, look: the PS4 has the same ACEs and commands as the R9 290; look at some of the benefits of those below. Did you know that the volatile bit was Sony's idea and is not in GCN, according to an AMD employee who posts on Beyond3D?

Since Cerny mentioned it I'll comment on the volatile flag. I didn't know about it until he mentioned it, but looked it up and it really is new and driven by Sony. The extended ACE's are one of the custom features I'm referring to. Also, just because something exists in the GCN doc that was public for a bit doesn't mean it wasn't influenced by the console customers. That's one positive about working with consoles. Their engineers have specific requirements and really bang on the hardware and suggest new things that result in big or small improvements.

http://forum.beyond3d.com/showpost.php?p=1761150&postcount=2321

Hey Ron, look: apparently the volatile bit and the extra ACEs were actually Sony's idea, and AMD took the idea from Sony; apparently they liked what Sony saw. And before you say anything: while the tech inside the PS4 is AMD's, it was stated that Sony also added several customizations to the GPU.

They are going to be awesome machines (10+ times more powerful than 360/PS3 sounds perfect to me), but good luck trying to convince System Wars of that.

Closer to 5x more powerful and 8x more powerful.

It depends. If you look at RAM, for example, PS4 and X1 have 16 times more than current gen consoles (10 times more RAM for games, assuming 3GB are dedicated to the OS).
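The RAM ratios above can be checked with a few lines of arithmetic. This is a sketch that assumes 8 GB of total RAM on PS4/X1 and 512 MB total on PS3/360, plus the poster's assumption of 3 GB reserved for the OS; it ignores the previous generation's own OS reservation.

```python
# Assumed figures in MB: 8 GB total this gen, 512 MB total last gen,
# and the poster's assumption that 3 GB is reserved for the OS.
NEXT_GEN_TOTAL_MB = 8 * 1024
LAST_GEN_TOTAL_MB = 512
OS_RESERVED_MB = 3 * 1024


def ram_ratios():
    """Return (total RAM ratio, game-available RAM ratio) vs. last gen."""
    total_ratio = NEXT_GEN_TOTAL_MB / LAST_GEN_TOTAL_MB
    games_ratio = (NEXT_GEN_TOTAL_MB - OS_RESERVED_MB) / LAST_GEN_TOTAL_MB
    return total_ratio, games_ratio


total, games = ram_ratios()
print(f"total: {total:.0f}x, available to games: {games:.0f}x")  # total: 16x, available to games: 10x
```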

@SaltyMeatballs:

The PS4 GPU alone is about a 9x increase over the RSX on "paper" if you look at FLOPS. (I know you can't compare the FLOPS of the RSX and the PS4 GPU directly, because they are totally different GPU architectures: GCN is much, much more efficient than the architecture in the RSX, and GCN and NVIDIA FLOPS numbers can't be compared directly.)

So if you look at it, the RSX was around 200ish GFLOPS and the PS4 GPU is 1840 GFLOPS, almost a 10x increase, and the PS4 is even more than 10x faster than the RSX at other operations like tessellation.
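For reference, the 1840 GFLOPS figure falls out of the usual peak-throughput formula for GCN: compute units x 64 shader lanes x 2 FLOPs per fused multiply-add x clock. The sketch below assumes the PS4 GPU's publicly reported 18 CUs at 800 MHz and takes the poster's ~200 GFLOPS for the RSX at face value; as the post itself says, peak FLOPS across different architectures is only an on-paper comparison.

```python
def gcn_peak_gflops(compute_units: int, clock_mhz: float) -> float:
    """Peak single-precision GFLOPS for a GCN GPU.

    Each CU has 64 lanes, and a fused multiply-add counts as 2 FLOPs.
    """
    lanes_per_cu = 64
    flops_per_lane_per_clock = 2
    return compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_mhz / 1000.0


ps4 = gcn_peak_gflops(18, 800)  # 1843.2
rsx_estimate = 200.0  # the poster's rough figure for the RSX
print(f"PS4 GPU: {ps4:.1f} GFLOPS, about {ps4 / rsx_estimate:.1f}x the RSX estimate")
```

This prints 1843.2 GFLOPS and a ratio of about 9.2x, matching the "about 9x on paper" estimate.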

Also, the out-of-order 64-bit chips in both consoles aren't the best processors out there by a long shot, but being out-of-order and 64-bit makes them much, much better than the previous generation's CPUs.

Add 16x more RAM as well, plus the other onboard processors like the low-powered ARM chip and dedicated audio hardware to help out with everything, and it's extremely safe to say both consoles are 10x stronger than the previous consoles.

The wildcard for PS3 is CELL's SPUs, i.e. they are "DSP-like," as stated by IBM (1).

Reference

1. http://www.ibm.com/developerworks/power/library/pa-cmpware1/

The eight SPUs (powerful DSP-like devices that contain four floating-point arithmetic and logic units (ALUs) operating in SIMD fashion)
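To put the IBM description in numbers: each SPU's four single-precision SIMD lanes can each retire a fused multiply-add (2 FLOPs) per cycle. This sketch assumes the commonly cited 3.2 GHz Cell clock and six SPUs available to PS3 games; it is on-paper peak arithmetic, not a measure of real-world throughput.

```python
def spu_peak_gflops(clock_ghz: float = 3.2, simd_lanes: int = 4) -> float:
    """Peak single-precision GFLOPS of one SPU: lanes x 2 FLOPs (FMA) x clock."""
    return simd_lanes * 2 * clock_ghz


per_spu = spu_peak_gflops()
game_spus = 6  # assumption: 8 SPUs on die, 1 disabled, 1 reserved for the OS
print(f"{per_spu:.1f} GFLOPS per SPU, {per_spu * game_spus:.1f} GFLOPS for {game_spus} SPUs")
```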

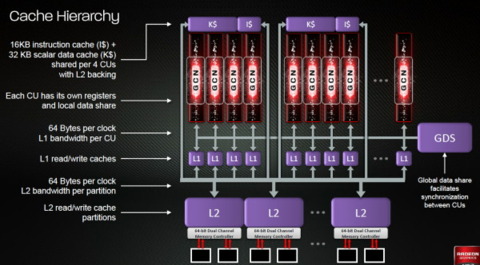

As I recall, my comments on the volatile bit were about making better use of the L2 cache, but the 79x0 has more L2 cache than the 78x0 (Pitcairn). The R9-290 is just "more with more," i.e. brute force with improved efficiencies. PS4's approach is to make do with less, i.e. improved efficiencies on a lesser 78x0-class GCN.
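A toy model may help show what a per-line "volatile" tag buys. Mark Cerny described the idea as tagging the L2 cache lines used by compute, so that when compute needs fresh data from system memory it can invalidate only those lines instead of the whole L2. The Python class below is a hypothetical sketch of that behavior; `ToyL2Cache`, `invalidate_all` and `invalidate_volatile` are illustrative names and do not correspond to any real hardware interface.

```python
from dataclasses import dataclass


@dataclass
class CacheLine:
    data: int
    volatile: bool  # set for lines touched by compute work


class ToyL2Cache:
    """Hypothetical L2 model: address -> CacheLine."""

    def __init__(self):
        self.lines = {}

    def access(self, address: int, data: int, from_compute: bool) -> None:
        # Compute accesses are tagged volatile; graphics accesses are not.
        self.lines[address] = CacheLine(data, volatile=from_compute)

    def invalidate_all(self) -> int:
        """Without the tag: drop every line, including graphics data."""
        dropped = len(self.lines)
        self.lines.clear()
        return dropped

    def invalidate_volatile(self) -> int:
        """With the tag: drop only compute lines; graphics lines stay cached."""
        victims = [addr for addr, line in self.lines.items() if line.volatile]
        for addr in victims:
            del self.lines[addr]
        return len(victims)


cache = ToyL2Cache()
for addr in range(6):
    cache.access(addr, data=addr, from_compute=False)  # graphics working set
for addr in range(100, 102):
    cache.access(addr, data=addr, from_compute=True)  # compute working set
print(cache.invalidate_volatile(), len(cache.lines))  # 2 6
```

Selective invalidation drops the 2 compute lines and leaves the 6 graphics lines in place, where a full invalidate would have dropped all 8.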

On X1, there is 32 MB of ESRAM (its availability will be reduced by raster-based workloads) for its GpGPU compute, i.e. the approach is different from that of the other GCNs.

In BF4, the R9-280X gets better results than PS4's GCN, i.e. the R9-280X wins via brute force (with better efficiencies compared to the HD 6970).

--------

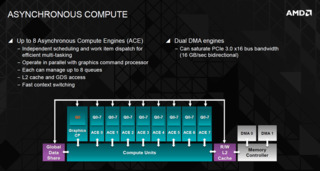

Having a greater ACE count is for reducing multi-threaded (8 concurrent threads) compute context from the CPU, but a thread can have multiple compute kernels. It's a known issue (among AMD GpGPU programmers) and not quite as big a problem if you have Intel Core i7 Ivy Bridge/Haswell CPUs.

From http://www.neogaf.com/forum/showthread.php?t=695449

And finally, I want to mention another innovation: the AMD Radeon R9 290X has eight asynchronous compute engines (ACEs) instead of two. Most users will not notice the increase, because ACEs are mainly engaged in performing non-graphical computational tasks. However, in processes such as hashing data, ACEs may play a very significant role. So there is every chance that the AMD Radeon R9 290X will be no less popular among miners than the AMD Radeon HD 7950/7970/7970 GHz Edition.

PS: miners = bitcoin mining.

Btw, the AMD Kabini SoC's GCN has 4 ACEs, each with 8 queues, and it was released in May 2013.
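The queue counts being compared are simple products of ACEs x queues per ACE. The sketch below assumes 8 queues per ACE (the figure given for Kabini) also applies to the 8-ACE parts, and adds a hypothetical round-robin front end to show why more hardware queues let more CPU threads submit compute work without sharing a queue. `ToyComputeFrontEnd` is an invented name; real hardware scheduling is more involved than plain round-robin.

```python
from collections import deque


def total_queues(aces: int, queues_per_ace: int = 8) -> int:
    """Number of hardware compute queues (assumes 8 queues per ACE by default)."""
    return aces * queues_per_ace


class ToyComputeFrontEnd:
    """Hypothetical model: each CPU thread submits to its own hardware queue."""

    def __init__(self, aces: int, queues_per_ace: int = 8):
        self.queues = [deque() for _ in range(total_queues(aces, queues_per_ace))]

    def submit(self, thread_id: int, kernel: str) -> None:
        # Threads beyond the queue count have to share queues.
        self.queues[thread_id % len(self.queues)].append(kernel)

    def dispatch_round_robin(self) -> list:
        """Drain the queues one kernel at a time, visiting them in turn."""
        order = []
        while any(self.queues):
            for q in self.queues:
                if q:
                    order.append(q.popleft())
        return order


print(total_queues(4), total_queues(8))  # 32 64 (4 ACEs vs. 8 ACEs)

front_end = ToyComputeFrontEnd(aces=1, queues_per_ace=2)
front_end.submit(0, "A1")
front_end.submit(0, "A2")
front_end.submit(1, "B1")
print(front_end.dispatch_round_robin())  # ['A1', 'B1', 'A2']
```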

The R9-290 will be available for sale before the PS4.

-----------

My answer is no to the "your new argument is Mantle" statement.

During AMD's Mantle reveal, they used the word "consoles" with an "s," i.e. more than one.

http://images.techhive.com/images/article/2013/09/amd-mantle-overview-100055858-orig.jpg

"For the X1 this is particularly important since using this technique the 32MB of eSRAM can theoretically be capable of storing up to 6GB worth of tiled textures going by those numbers. Couple the eSRAM's ultra fast bandwidth with tiled texture streaming middleware tools like Granite, and the eSRAM just became orders of magnitude more important for your next gen gaming. Between software developments such as this and the implications of the data move engines with LZ encode/decode compression capabilities on making cloud gaming practical on common broadband connections, Microsoft's design choice of going with embedded eSRAM for the Xbox One is beginning to make a lot more sense. Pretty amazing."

Again, it was taken from the PS4, and was confirmed to be a Sony-pushed customization.

Once again, the PS4's customizations are much more specific than the Xbox One's. ESRAM is a cheap patch for bandwidth, basically confirmed by MS itself: it is there because it was cheap and the best they could do to save money by not going with GDDR5.

Data on the Xbox One GPU needs to be flushed; on PS4 it doesn't. Things like that will count a lot, and that is the reason why the Xbox One is said to have something similar to HSA but not quite it, while the PS4 has it in a very straightforward way.

Yes, the Xbox One supports Mantle too; now how does that change anything? We all know that means dropping DirectX, something I am sure MS isn't very happy about. The more developers use Mantle, the fewer use DirectX, and that hurts MS.

But even if everyone used Mantle, that still doesn't change much. The PS4 still has 600+ GFLOPS more than the Xbox One; coding to the metal on both will still give better results on PS4, and ESRAM will not change that.
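The GFLOPS gap can be reproduced with the standard GCN peak formula (CUs x 64 lanes x 2 FLOPs per FMA x clock). This sketch assumes the publicly reported configurations: 18 CUs at 800 MHz for PS4, and 12 CUs for Xbox One at either its original 800 MHz or its upclocked 853 MHz. The size of the gap depends on which Xbox One clock is used.

```python
def gcn_peak_gflops(compute_units: int, clock_mhz: float) -> float:
    # 64 lanes per CU, 2 FLOPs per fused multiply-add, clock in MHz -> GFLOPS.
    return compute_units * 64 * 2 * clock_mhz / 1000.0


ps4 = gcn_peak_gflops(18, 800)
for x1_clock in (800, 853):
    x1 = gcn_peak_gflops(12, x1_clock)
    print(f"X1 @ {x1_clock} MHz: {x1:.1f} GFLOPS, PS4 lead: {ps4 - x1:.1f} GFLOPS")
```

At 800 MHz the Xbox One GPU works out to 1228.8 GFLOPS (a 614.4 GFLOPS gap); at 853 MHz it is about 1310.2 GFLOPS (a gap of about 533.0 GFLOPS).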

"For the X1 this is particularly important since using this technique the 32Mb of eSRAM can theoretically be capable of storing up to 6GB worth of tiled textures going by those numbers. Couple the eSRAM's ultra fast bandwidth with tiled texture streaming middleware tools like Granite, and the eSRAM just became orders of magnitute more important for your next gen gaming. Between software developments such as this and the implications of the data move engines with LZ encode/decode compression capabilities on making cloud gaming practical on common broadband connections, Microsoft's design choice of going with embedded eSRAM for the Xbox One is beginning to make a lot more sense. Pretty amazing."

That theory is the problem: not everything in a game is textures, so to use the ESRAM you need to decide what you put there, because not everything is optimal to be there. It's been said that the 32 MB is the reason why so many games are lower than 1080p on Xbox One; apparently 32 MB is not enough. MS has been talking about how they underestimated the bandwidth of ESRAM, but apparently they also overestimated what was possible with just 32 MB.
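A rough illustration of why 32 MB can be tight at 1080p: full-screen render targets add up quickly. The sketch assumes a hypothetical deferred-rendering setup with four 32-bit-per-pixel G-buffer targets plus a 32-bit depth buffer, all kept in ESRAM and uncompressed; real engines use different layouts and can split targets between ESRAM and DRAM.

```python
ESRAM_BYTES = 32 * 1024**2
BYTES_PER_PIXEL = 4   # assumption: every target is 32 bits per pixel, uncompressed
TARGET_COUNT = 4 + 1  # assumption: four G-buffer targets plus one depth buffer


def render_target_bytes(width: int, height: int) -> int:
    """Total size of the assumed set of full-screen render targets."""
    return width * height * BYTES_PER_PIXEL * TARGET_COUNT


for name, (w, h) in {"1080p": (1920, 1080), "900p": (1600, 900)}.items():
    size = render_target_bytes(w, h)
    verdict = "fits" if size <= ESRAM_BYTES else "does not fit"
    print(f"{name}: {size / 1024**2:.1f} MB, {verdict} in 32 MB")
```

Under these assumptions the 1080p set comes to about 39.6 MB and does not fit, while the 900p set comes to about 27.5 MB and does.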

There are move engines on the PS4 too. The Xbox One has 2 more; the PS4 has 2, just like any GCN, and they are really called DMA engines. Kinect is probably the reason for the other 2.

And the whole LZ compression and all that crap was mainly for Kinect, and MS let it slip in one of the interviews; JIT compression too.

The Xbox One has some modifications that look great on the outside, but once you dig, many were just in place for Kinect, voice recognition and several other things. Take SHAPE for example: they hyped how powerful it is, yet Bikillian, someone who worked on the audio block, said that most of SHAPE is indeed for Kinect and that very little is left for developers to use. So you can imagine that something that sounds so great will actually be used mostly to hear your voice say "Xbox on," "Xbox off," "Xbox go home" and crap like that.

On the X1, ESRAM is (in theory) faster than the 2 x 64-byte-per-cycle L2 cache of the 7770/7790.

ESRAM: 1024 bits / 8 bits per byte = 128 bytes per cycle per direction, or 256 bytes per cycle for both directions.

On current GCNs, each L2 cache partition provides 64 bytes per cycle of bandwidth.
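Converting the per-cycle figures into GB/s is just a multiplication by the clock. This sketch assumes the Xbox One GPU clock of 853 MHz after the upclock (800 MHz before it) and uses 1 GB = 10^9 bytes. It gives theoretical peaks only; Microsoft's own quoted ESRAM peak (204 GB/s) is lower than the naive both-directions product.

```python
def bandwidth_gb_s(bytes_per_cycle: int, clock_mhz: float) -> float:
    # bytes/cycle * cycles/second, expressed in GB/s (1 GB = 10**9 bytes)
    return bytes_per_cycle * clock_mhz * 1e6 / 1e9


ESRAM_BUS_BITS = 1024
esram_bytes_per_cycle = ESRAM_BUS_BITS // 8  # 128 bytes per direction
L2_PARTITION_BYTES_PER_CYCLE = 64            # per L2 partition on current GCN

for clock in (800, 853):
    one_way = bandwidth_gb_s(esram_bytes_per_cycle, clock)
    l2 = bandwidth_gb_s(L2_PARTITION_BYTES_PER_CYCLE, clock)
    print(f"{clock} MHz: ESRAM {one_way:.1f} GB/s per direction, "
          f"{2 * one_way:.1f} GB/s both directions; one L2 partition {l2:.1f} GB/s")
```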

It depends on whether X1's GCN has access to atomics or to the basic tag functions of the current GCN L2 cache.

I don't think that anything can change the outcome of it. MS had a vision with the Xbox One, they followed it and later found that people didn't really want that, so then came the GPU and CPU upclock. Haven't you noticed lately how MS is trying hard to downplay any advantage the PS4 has?

Even GDDR5, which is the standard for graphics. That is what you do when your product is inferior: you try to discredit the competition's product. Have you seen Sony desperately talking to Digital Foundry about how the PS4 will beat the Xbox One?

Sony doesn't need to do that; people know. And the more MS tries to downplay the PS4, the more people tag them as doing damage control. Hell, Leadbetter's reputation is on the floor for writing articles that basically read like paid advertisements.

http://www.anandtech.com/show/5847/answered-by-the-experts-heterogeneous-and-gpu-compute-with-amds-manju-hedge

5. Fully coherent memory between CPU & GPU: This allows for data to be cached in the CPU or the GPU, and referenced by either. In all previous generations GPU caches had to be flushed at command buffer boundaries prior to CPU access. And unlike discrete GPUs, the CPU and GPU share a high speed coherent bus

In addition on an HSA architecture the application codes to the hardware which enables user mode queueing, hardware scheduling and much lower dispatch times and reduced memory operations. We eliminate memory copies, reduce dispatch overhead, eliminate unnecessary driver code, eliminate cache flushes, and enable GPU to be applied to new workloads. We have done extensive analysis on several workloads and have obtained significant performance per joule savings for workloads such as face detection, image stabilization, gesture recognition etc…

http://developer.amd.com/resources/heterogeneous-computing/what-is-heterogeneous-system-architecture-hsa/

With the old AMD Trinity APU...

The HSA team at AMD analyzed the performance of Haar Face Detect, a commonly used multi-stage video analysis algorithm used to identify faces in a video stream. The team compared a CPU/GPU implementation in OpenCL™ against an HSA implementation. The HSA version seamlessly shares data between CPU and GPU, without memory copies or cache flushes because it assigns each part of the workload to the most appropriate processor with minimal dispatch overhead
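The difference the AMD quotes describe can be sketched as two ways of handing a buffer from the CPU to the GPU: a discrete-style path that copies the data (and, per the quote, needs caches flushed at command buffer boundaries), and an HSA-style path where both sides reference the same coherent memory. The Python model below illustrates that data flow only; `ToyDevice` and its copy/flush counters are invented for this example and say nothing about how either console actually implements coherency.

```python
class ToyDevice:
    """Hypothetical model of a CPU -> GPU hand-off, counting copies and flushes."""

    def __init__(self, coherent: bool):
        self.coherent = coherent
        self.copies = 0
        self.flushes = 0

    def hand_off(self, cpu_buffer: bytearray) -> memoryview:
        if self.coherent:
            # HSA-style: the GPU references the very same memory; no copy, no flush.
            return memoryview(cpu_buffer)
        # Discrete-style: flush caches at the command buffer boundary, then copy.
        self.flushes += 1
        self.copies += 1
        return memoryview(bytearray(cpu_buffer))


for coherent in (False, True):
    device = ToyDevice(coherent)
    data = bytearray(b"frame")
    gpu_view = device.hand_off(data)
    data[0] = ord("F")  # the CPU updates the buffer after the hand-off
    print(f"coherent={coherent}: GPU sees {bytes(gpu_view)!r}, "
          f"copies={device.copies}, flushes={device.flushes}")
```

In the copying path the GPU keeps seeing the stale b'frame' until another copy is made; in the shared path it sees the CPU's update (b'Frame') with no copies or flushes.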

You're a little late with the CPU and GPU cache flush issue.

On the X1, programmers have control over the 32 MB of ESRAM.

ESRAM on the Xbox One is not a cache; it's more like a scratchpad.

ESRAM is 140 to 150 GB/s, as confirmed by MS. They even gave an example of how a 164 GB/s requirement could saturate their ESRAM, because 204 GB/s is theoretical and not sustainable in any way. 140 GB/s is actually slower than the PS4's bandwidth, and so is 150 GB/s.

The 7770 has 100 GFLOPS more usable power than the Xbox One GPU, and the 7790 has 610 GFLOPS more, which is way too big a difference to be made up by simply having a faster cache, and ESRAM isn't even a cache to begin with on Xbox One.

Killzone SF online tells the story: 1080p at 60 FPS vs. Ryse online at 900p and 30 FPS, and Killzone SF still looks better.

I don't know what you're trying to prove with this post, since the Xbox One GPU has to flush its cache, something that doesn't happen on PS4 due to it being a true HSA design.

OMG, you're so dense, and such an elite cow it's not even funny. The idea that X1 does certain things better than the PS4 kills you, and you have to downplay any positive aspect of the X1. It's so funny that you think X1 does not have "true" HSA... Sorry, but it will be used on the X1. Also, ESRAM is a cache and buffer for the APU, and the X1 will have an altered form of hUMA. PS4 isn't doing anything special; the only thing the PS4 has over the X1 is a stronger GPU.

PRT is a fixing for a problem that doesn't exist on PS4 and xbox one,to save memory it will benefit more GPU on PC with low ram than the PS4 and xbox one which already have 5GB or more to use.

AMD PRT is not exclusive to DirectX

AMD PRT has more value for X1 than on PS4 i.e. X1 has two speed memory pools with one of them is high speed/smaller memory pool while PS4 has one speed memory pool.

Hey Ron look the PS4 has the sames Aces and commands as the R9 290,look at some of the benefits of those down,did you know that the volatile bit was sony ideas and is not on GCN according to a AMD employee that post on Beyond3D.?

Since Cerny mentioned it I'll comment on the volatile flag. I didn't know about it until he mentioned it, but looked it up and it really is new and driven by Sony. The extended ACE's are one of the custom features I'm referring to. Also, just because something exists in the GCN doc that was public for a bit doesn't mean it wasn't influenced by the console customers. That's one positive about working with consoles. Their engineers have specific requirements and really bang on the hardware and suggest new things that result in big or small improvements.

http://forum.beyond3d.com/showpost.php?p=1761150&postcount=2321

Hey Ron look apparently the Volatile bit and more Aces was actually sony's idea,and AMD actually took the idea from sony,apparently they like what sony saw,and before you say anything while the tech inside the PS4 is AMD it was stated that sony also added to the GPU several customizations.

As I recall, my comments on volatile bit is for making better use for the L2 cache, but 79x0 has more L2 cache than 78x0 (Pitcairn). R9-290 is just "more with more" i.e. brute force with improved efficiencies. PS4's approach is to make do with less i.e. improved efficiencies with lesser 78x0 class GCN.

On X1, it has 32 MB (availability will be reduced with raster based workloads) ESRAM for it's GpGPU compute i.e. the approach is different when compared to the other GCNs.

On BF4, R9-280X has better results than on PS4's GCN i.e. R9-280X wins via brute force (with better efficiencies when compared to HD 6970).

--------

Having greater ACE units count is for reducing mulch-threading (8 concurrent threads) compute context from the CPU, but a thread can have multiple compute kernels. It's a known issue (with AMD GpGPU programmers) and not quite a big problem you have Intel Core i7 Ivybridge/Haswell CPUs.

From http://www.neogaf.com/forum/showthread.php?t=695449

And finally, I want to say about another innovation - from AMD Radeon R9 290X instead of two asynchronous computing engines (Asynchronous Compute Engines, ACE) is set just eight. For the majority of users to increase their number will not notice, because they are mainly engaged in the performance of non-graphical computational tasks. However, in such processes, such as hash data, ACE may play a very significant role. So there is every chance that among the miners graphics card AMD Radeon R9 290X will enjoy no less popular than the AMD Radeon HD 7950/7970/7970 GHz Edition.

PS; miners = bitcoin mining

Btw, AMD Kabini SoC's GCN has 4 ACEs each with 8 queues and it was released on May 2013.

R9-290 will be available for sale before PS4.

-----------

Wait so your new argument is mantle .? Mantle is also for PS4. And the PS4 has its own to the metal API which make mantle redundant,or are you going to pretend now that console copy to the metal coding from PC.? Consoles always had that option even if MS chose to ignore it and use DirectX.

Mantle will change in nothing the advantage the PS4 has on PS4 you can code to the metal.

My answer to the "your new argument is Mantle" statement is no.

During AMD's Mantle reveal, they used the word "consoles", with an "s", i.e. more than one.

http://images.techhive.com/images/article/2013/09/amd-mantle-overview-100055858-orig.jpg

http://i0.kym-cdn.com/photos/images/newsfeed/000/173/576/Wat8.jpg?1315930535

http://www.anandtech.com/show/5847/answered-by-the-experts-heterogeneous-and-gpu-compute-with-amds-manju-hedge

5. Fully coherent memory between CPU & GPU: This allows for data to be cached in the CPU or the GPU, and referenced by either. In all previous generations GPU caches had to be flushed at command buffer boundaries prior to CPU access. And unlike discrete GPUs, the CPU and GPU share a high speed coherent bus

In addition on an HSA architecture the application codes to the hardware which enables user mode queueing, hardware scheduling and much lower dispatch times and reduced memory operations. We eliminate memory copies, reduce dispatch overhead, eliminate unnecessary driver code, eliminate cache flushes, and enable GPU to be applied to new workloads. We have done extensive analysis on several workloads and have obtained significant performance per joule savings for workloads such as face detection, image stabilization, gesture recognition etc…

http://developer.amd.com/resources/heterogeneous-computing/what-is-heterogeneous-system-architecture-hsa/

With AMD Trinity APU

The HSA team at AMD analyzed the performance of Haar Face Detect, a commonly used multi-stage video analysis algorithm used to identify faces in a video stream. The team compared a CPU/GPU implementation in OpenCL™ against an HSA implementation. The HSA version seamlessly shares data between CPU and GPU, without memory copies or cache flushes because it assigns each part of the workload to the most appropriate processor with minimal dispatch overhead
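The "no memory copies" point can be illustrated with a loose Python analogy; it does not touch a GPU or any HSA API. Copying a buffer stands in for the discrete-GPU model, where the consumer works on its own copy and results must be copied back, while a `memoryview` stands in for coherent shared memory, where both sides see the same bytes. The function names are hypothetical.

```python
# Loose analogy only: Python objects stand in for "CPU" and "GPU" views of memory.
# Nothing here touches a real GPU or the HSA runtime.


def copy_model(data: bytearray) -> bytearray:
    """Discrete-GPU style: the consumer works on its own copy of the data."""
    device_copy = bytearray(data)  # explicit copy, like an upload over PCIe
    device_copy[0] = 255           # the "GPU" writes to its private copy
    return device_copy             # the caller must copy results back to see them


def shared_model(data: bytearray) -> memoryview:
    """Shared-memory style: both sides reference the same buffer, no copy made."""
    view = memoryview(data)        # zero-copy view of the same memory
    view[0] = 255                  # the write is visible through `data` as well
    return view


if __name__ == "__main__":
    host = bytearray(b"\x00\x01\x02\x03")
    copy_model(host)
    print(host[0])  # the host buffer was not updated by the copy
    shared_model(host)
    print(host[0])  # the host sees the write without any copy-back
```

The analogy covers only the copy; cache flushing and coherence between real CPU and GPU caches are not modelled.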

You're a little late with the CPU and GPU cache flush issue.

I don't know what you are trying to prove with this post, since the Xbox One GPU has to flush its cache, something that doesn't happen on PS4 due to it being a true HSA design.

Adding volatile bit control to the L2 cache makes the cache closer to a local data store (LDS)/global data store (GDS) type storage device, i.e. it overrides the cache's default behaviour.

On X1, ESRAM can be controlled by software and doesn't need to be flushed.
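A toy Python model of the difference between a full L2 flush and the selective invalidation a volatile bit allows. It assumes, as a simplification, that the bit just tags lines touched by compute so those lines can be invalidated on their own; dirty-line write-back and real GCN cache behaviour are not modelled, and all names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CacheLine:
    address: int
    volatile: bool = False  # hypothetical per-line tag for compute accesses


@dataclass
class ToyL2:
    """Toy model of an L2 cache; not how real GCN hardware is implemented."""

    lines: dict[int, CacheLine] = field(default_factory=dict)

    def fill(self, address: int, volatile: bool = False) -> None:
        """Bring a line into the cache, optionally tagged volatile."""
        self.lines[address] = CacheLine(address, volatile)

    def flush_all(self) -> int:
        """Default behaviour: drop every line; returns how many were dropped."""
        dropped = len(self.lines)
        self.lines.clear()
        return dropped

    def invalidate_volatile(self) -> int:
        """Volatile-bit behaviour: drop only tagged lines, keep everything else."""
        victims = [addr for addr, line in self.lines.items() if line.volatile]
        for addr in victims:
            del self.lines[addr]
        return len(victims)


if __name__ == "__main__":
    l2 = ToyL2()
    for addr in range(8):
        l2.fill(addr)                  # graphics data that stays useful
    for addr in range(100, 102):
        l2.fill(addr, volatile=True)   # compute data shared with the CPU
    print(l2.invalidate_volatile(), len(l2.lines))
```

The point of the design is that the graphics lines survive, whereas a full flush would throw them away along with the compute data.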

OMG, you're so dense, and such an elite cow it's not even funny. The idea that X1 does certain things better than the PS4 kills you, and you have to downplay any positive aspect of the X1. It's so funny that you think X1 does not have "true" HSA... Sorry, but it will be used on the X1. Also, ESRAM is a cache and buffer for the APU, and the X1 will have an altered form of hUMA. PS4 isn't doing anything special. The only thing the PS4 has over the X1 is a stronger GPU.

Most Cows on System Wars cannot stand the fact that XBOX ONE may be more powerful than PS4 in certain ways, just like the PS4 is more powerful than the XBOX ONE in certain ways. They vehemently deny that there is any way this is possible. All they want to talk about is TFLOPS and GDDR5, not about games or anything of value.

The Xbox 1 has the following advantages over PS4:

1.71 billion triangles/sec setup rate vs 1.6 billion triangles/sec setup rate, or a ~6.9% advantage; a possible CPU clock speed advantage of 150 MHz, or ~9% (the PS4 CPU clock has not actually been revealed, so this is based on the PS4 dev kits having 1.6 GHz CPUs, but this could be different in the retail units). It also has some read/write advantages, depending on how the dev uses the ESRAM, thanks to its low latency.

The problem is a lot of lems think this will put the consoles close to each other, but it won't. The performance gap is quite vast, larger than this gen, and the PS4 does not have the hurdle of a difficult architecture to overcome either. In fact, not only is the X1 the weaker console, it is also harder to program for, meaning that the initial batch of unoptimised games might show a bigger gap than the hardware alone can account for.
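The percentages in the post above can be checked directly. This sketch uses the setup rates as quoted and assumes a 1.75 GHz X1 CPU against the 1.6 GHz dev-kit clock; it also shows that the 150 MHz gap is about 9.4% relative to the PS4 clock but about 8.6% relative to the X1 clock, which is why both "8%" and "9%" get quoted.

```python
def percent_advantage(a: float, b: float) -> float:
    """How much larger `a` is than `b`, as a percentage of `b`."""
    return (a / b - 1.0) * 100.0


def percent_of(delta: float, base: float) -> float:
    """`delta` expressed as a percentage of `base`."""
    return delta / base * 100.0


# Triangle setup rates as quoted in the post, in billions of triangles/sec.
setup_gap = percent_advantage(1.71, 1.6)
# Assumed CPU clocks in MHz: 1750 for X1, 1600 for the PS4 dev kits.
cpu_gap_vs_ps4 = percent_of(150, 1600)
cpu_gap_vs_x1 = percent_of(150, 1750)

print(f"setup rate: +{setup_gap:.1f}%")
print(f"CPU clock:  +{cpu_gap_vs_ps4:.1f}% of PS4's clock, {cpu_gap_vs_x1:.1f}% of X1's")
```

This prints roughly 6.9% for the setup rate and 9.4% / 8.6% for the CPU clock.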

Whether Cows like it or not, there are some things that the XB1 actually does better than the PS4; the only edge the PS4 has is a more powerful GPU.

It would appear that saying anything positive about the XB1 to a cow is the same as exposing a vampire to direct sunlight.

LOL!!

Yeah, Cows are going to be humbled big time when the two consoles are released and the graphics on multiplat games end up being near-identical, with exclusive games having equally good graphics on both consoles.

Also, when Ryse gets tons of critical acclaim and ends up being a big hit, Cows will be humbled once again.

The next couple of months is going to be interesting in the video game world.

Most Cows on System Wars cannot stand the fact that XBOX ONE may be more powerful than PS4 in certain ways, just like the PS4 is more powerful than the XBOX ONE in certain ways. They vehemently deny that there is any way this is possible. All they want to talk about is TFLOPS and GDDR5, not about games or anything of value.

The Xbox 1 has the following advantages over PS4:

1.71 billion triangles/sec setup rate vs 1.6 billion triangles/sec setup rate, or a ~6.9% advantage; a possible CPU clock speed advantage of 150 MHz, or ~9% (the PS4 CPU clock has not actually been revealed, so this is based on the PS4 dev kits having 1.6 GHz CPUs, but this could be different in the retail units). It also has some read/write advantages, depending on how the dev uses the ESRAM, thanks to its low latency.

The problem is a lot of lems think this will put the consoles close to each other, but it won't. The performance gap is quite vast, larger than this gen, and the PS4 does not have the hurdle of a difficult architecture to overcome either. In fact, not only is the X1 the weaker console, it is also harder to program for, meaning that the initial batch of unoptimised games might show a bigger gap than the hardware alone can account for.

The second part of your post is what the Xbox fans in here don't want to hear, and it makes all the arguments about the "advantages" of the X1 over the PS4 kind of pointless.

Bad news for the lems. CBOAT, the big MS leaker over at GAF, has said some bad things.

"By the way, I hope you aren't fans of 1080p on Xbox One. When the hardware is out in the wild you will see what I meant about issues. Up-clocks too. Risk is everywhere at the moment, this place is nuts. A 2014 release was a better plan." http://www.neogaf.com/forum/showthread.php?t=696772

Sub1080p and RROD 2.0 incoming!!

Adding volatile bit control to the L2 cache makes the cache closer to a local data store (LDS)/global data store (GDS) type storage device, i.e. it overrides the cache's default behaviour.

On X1, ESRAM can be controlled by software and doesn't need to be flushed.

ESRAM is not a cache on Xbox One; it is more like a programmable scratch pad. And yes, the GPU must be flushed; that is the reason for all the fighting about the Xbox One not being true HSA, though it is said to have something similar.

PS4 is a true HSA design with hUMA.

OMG, you're so dense, and such an elite cow it's not even funny. The idea that X1 does certain things better than the PS4 kills you, and you have to downplay any positive aspect of the X1. It's so funny that you think X1 does not have "true" HSA... Sorry, but it will be used on the X1. Also, ESRAM is a cache and buffer for the APU, and the X1 will have an altered form of hUMA. PS4 isn't doing anything special. The only thing the PS4 has over the X1 is a stronger GPU.

Killing me...hahahahaa

First of all, the Xbox One GPU must be flushed, meaning no true HSA; this has been discussed to no end. On PS4 that isn't the case.

The ESRAM is not a damn cache on Xbox One, contrary to what you claim; it is more of a programmable scratch pad, which is not the same in any way, shape or form. In fact, it is now being blamed as the reason why Xbox One games are basically all 900p or 720p.

Since when does alternative = same? The PS4 is true HSA and has hUMA in its purest form, dude. No problems, no alternative workarounds without actual explanation.

Not only does the PS4 have a stronger GPU, it is modified for compute to a level that the Xbox One isn't. It's really funny how much hate you have for the PS4.

Most Cows on System Wars cannot stand the fact that XBOX ONE may be more powerful than PS4 in certain ways, just like the PS4 is more powerful than the XBOX ONE in certain ways. They vehemently deny that there is any way this is possible. All they want to talk about is TFLOPS and GDDR5, not about games or anything of value.

Since when does ESRAM make a GPU more powerful? hahahaa....

@delta3074

Whether Cows like it or not, there are some things that the XB1 actually does better than the PS4; the only edge the PS4 has is a more powerful GPU.

It would appear that saying anything positive about the XB1 to a cow is the same as exposing a vampire to direct sunlight.

So what does the Xbox One do better? You know, saying that without actually giving examples is a rather vague claim.

The Xbox One has an 8 or 9% CPU advantage on a Jaguar; that is basically nothing, especially when the PS4 can use its GPU for compute in a way not possible on Xbox One, and without hurting graphics.

@Thunder7151

Yeah, Cows are going to be humbled big time when the two consoles are released and the graphics on multiplat games end up being near-identical, with exclusive games having equally good graphics on both consoles.

Also, when Ryse gets tons of critical acclaim and ends up being a big hit, Cows will be humbled once again.

Well, it is being said that BF4 runs higher than 720p on PS4, while on Xbox One it is 720p.

Ryse is a bland and boring hack and slash whose only good thing going for it is the graphics, and even those are below PS4.

Ryse online 900p 30 FPS.

Killzone online 1080p 60 FPS.
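For a rough sense of scale, this sketch compares the raw pixel output of the two quoted modes. It counts only pixels per second and says nothing about per-pixel work, so it is not a hardware comparison between two different games.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixels rendered per second at a given resolution and frame rate."""
    return width * height * fps


ryse = pixels_per_second(1600, 900, 30)       # 900p at 30 FPS, as quoted
killzone = pixels_per_second(1920, 1080, 60)  # 1080p at 60 FPS, as quoted

print(f"Ryse:     {ryse:,} px/s")
print(f"Killzone: {killzone:,} px/s ({killzone / ryse:.2f}x)")
```

That works out to 43.2 million versus about 124.4 million pixels per second, roughly 2.9 times as many.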

Both are the same: PRT is accomplished with programmable virtual memory page tables, and both have the same hardware in this regard. The exception would be if they use the eSRAM, which also has page table mapping and could give speed increases, or use the LZ77 engine to decode the pages for optimal memory use.
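As a rough illustration of the page-table point, here is a toy Python model of partially resident textures: a large virtual texture is split into tiles, and only tiles that have been mapped to a physical page consume memory. The 64 KB tile size matches Direct3D 11.2 tiled resources; the class, its methods and the coarser-mip fallback policy are hypothetical, and on real hardware the address translation is done by the GPU's virtual memory page tables.

```python
from __future__ import annotations

# Direct3D 11.2 tiled resources use 64 KB tiles; assumed here for the toy model.
TILE_BYTES = 64 * 1024

Tile = tuple[int, int, int]  # (mip level, tile x, tile y)


class ToyPageTable:
    """Toy model of a PRT page table (hypothetical, not a real API)."""

    def __init__(self, physical_pages: int) -> None:
        self.free_pages: list[int] = list(range(physical_pages))
        self.mapping: dict[Tile, int] = {}

    def make_resident(self, tile: Tile) -> bool:
        """Map a tile to a free physical page; False if memory is exhausted."""
        if tile in self.mapping:
            return True
        if not self.free_pages:
            return False
        self.mapping[tile] = self.free_pages.pop()
        return True

    def evict(self, tile: Tile) -> None:
        """Unmap a tile and return its page to the free list."""
        page = self.mapping.pop(tile, None)
        if page is not None:
            self.free_pages.append(page)

    def lookup(self, mip: int, x: int, y: int, max_mip: int) -> tuple[int, int]:
        """Return (mip used, physical page), falling back to coarser mips
        when the requested tile is not resident."""
        while mip <= max_mip:
            page = self.mapping.get((mip, x, y))
            if page is not None:
                return mip, page
            # Each coarser mip halves the tile grid in both directions.
            mip, x, y = mip + 1, x // 2, y // 2
        raise LookupError("no resident tile at any mip level")

    def resident_bytes(self) -> int:
        """Physical memory actually backing the texture."""
        return len(self.mapping) * TILE_BYTES


if __name__ == "__main__":
    pt = ToyPageTable(physical_pages=2)
    pt.make_resident((2, 0, 0))           # coarse fallback tile
    pt.make_resident((0, 5, 3))           # one detailed tile near the camera
    print(pt.lookup(0, 5, 3, max_mip=2))  # served from mip 0
    print(pt.lookup(0, 1, 1, max_mip=2))  # falls back to mip 2
    print(pt.resident_bytes())
```

Only two 64 KB pages back the texture here, however large its virtual size; that saving is what PRT is for.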

Bad news for the lems. CBOAT, the big MS leaker over at GAF, has said some bad things.

"By the way, I hope you aren't fans of 1080p on Xbox One. When the hardware is out in the wild you will see what I meant about issues. Up-clocks too. Risk is everywhere at the moment, this place is nuts. A 2014 release was a better plan."

http://www.neogaf.com/forum/showthread.php?t=696772

Sub1080p and RROD 2.0 incoming!!

I myself have questioned the upclock too. You can't just take hardware that you originally set for a certain speed and overclock it, thinking it will not affect anything; considering that the Xbox One was already having yield issues, that upclock can be a problem.

Only God knows, and we all know MS doesn't care how things go when they want something; they just think that everything can be patched with money.

TC, this is the wrong forum to post things like this. The mad-super-cows are all hardware engineers, didn't you know that? They will flood the boards touting PS4 hardware superiority and graphs that they really don't understand - major development studio contradictions be damned.

Here are the facts: they are both powerful, but the PS4 will have a slightly higher frame rate. If any of these dunderheads in SW really knew anything about technology, they'd know that a 30% improvement in performance (which I have issues believing) doesn't mean very much.

Have fun getting flamed, though!
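To make the "30%" figure concrete, this sketch converts a 30% throughput gain into frame rate, frame time and resolution. It assumes performance scales linearly with throughput and with pixel count, which real games rarely do exactly; the function names are illustrative.

```python
from __future__ import annotations

import math

SPEEDUP = 1.3  # the 30% figure from the post


def scaled_fps(base_fps: float, speedup: float) -> float:
    """Frame rate if the same per-frame work runs `speedup` times faster."""
    return base_fps * speedup


def frame_time_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / fps


def scaled_resolution(width: int, height: int, speedup: float) -> tuple[int, int]:
    """Same aspect ratio with `speedup` times as many pixels."""
    scale = math.sqrt(speedup)  # pixel count grows with the square of each side
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    fps = scaled_fps(30, SPEEDUP)
    print(f"30 FPS -> {fps:.0f} FPS "
          f"({frame_time_ms(30):.1f} ms -> {frame_time_ms(fps):.1f} ms per frame)")
    print(f"1280x720 -> {scaled_resolution(1280, 720, SPEEDUP)}")
```

Under those assumptions, 30 FPS becomes about 39 FPS, or a 1280x720 image becomes roughly 1459x821 at the same frame rate.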

This.

See last gen and the Cell. LOL

TC, this is the wrong forum to post things like this. The mad-super-cows are all hardware engineers, didn't you know that? They will flood the boards touting PS4 hardware superiority and graphs that they really don't understand - major development studio contradictions be damned.

Here are the facts: they are both powerful, but the PS4 will have a slightly higher frame rate. If any of these dunderheads in SW really knew anything about technology, they'd know that a 30% improvement in performance (which I have issues believing) doesn't mean very much.

Have fun getting flamed, though!

Online.

Ryse 900p 30 FPS.

Killzone 1080p 60 FPS

And Killzone SF still looks better. Oh, and stages have destructibility in Killzone SF as well; I was watching the latest online gameplay and it showed bullets actually destroying wood on some walls.

You flame everyone because no one wants to believe your whole silly theories, based on pure fanboyism and wishful thinking. You just have to look at the launch games: how many Xbox One games are actually 1080p? How many? There will probably be one, Forza, and it is a racing game.

Because even Killer Instinct is 720p.

It will be funny watching you defend inferior ports on Xbox One: lower frame rates, lower resolution, fewer effects or no AA.
