@tormentos said:

@KillzoneSnake said:

Yeah so taking power away from the CPU for the GPU to go full overclock lol. Nice one. For sure most of the time it will run at 9TF then.

Personally I don't care, I think it's still a very powerful system. Hell, I'm happy with the PS4 lol. BC news and more AAA game variety are more important imo. It's going to be a sad day for PS fanboys when Digital Foundry benchmarks both systems lol

Actually, that is not how it works. If you haven't read it yet, you don't want to; that much is obvious from your trolling post.

@ronvalencia said:

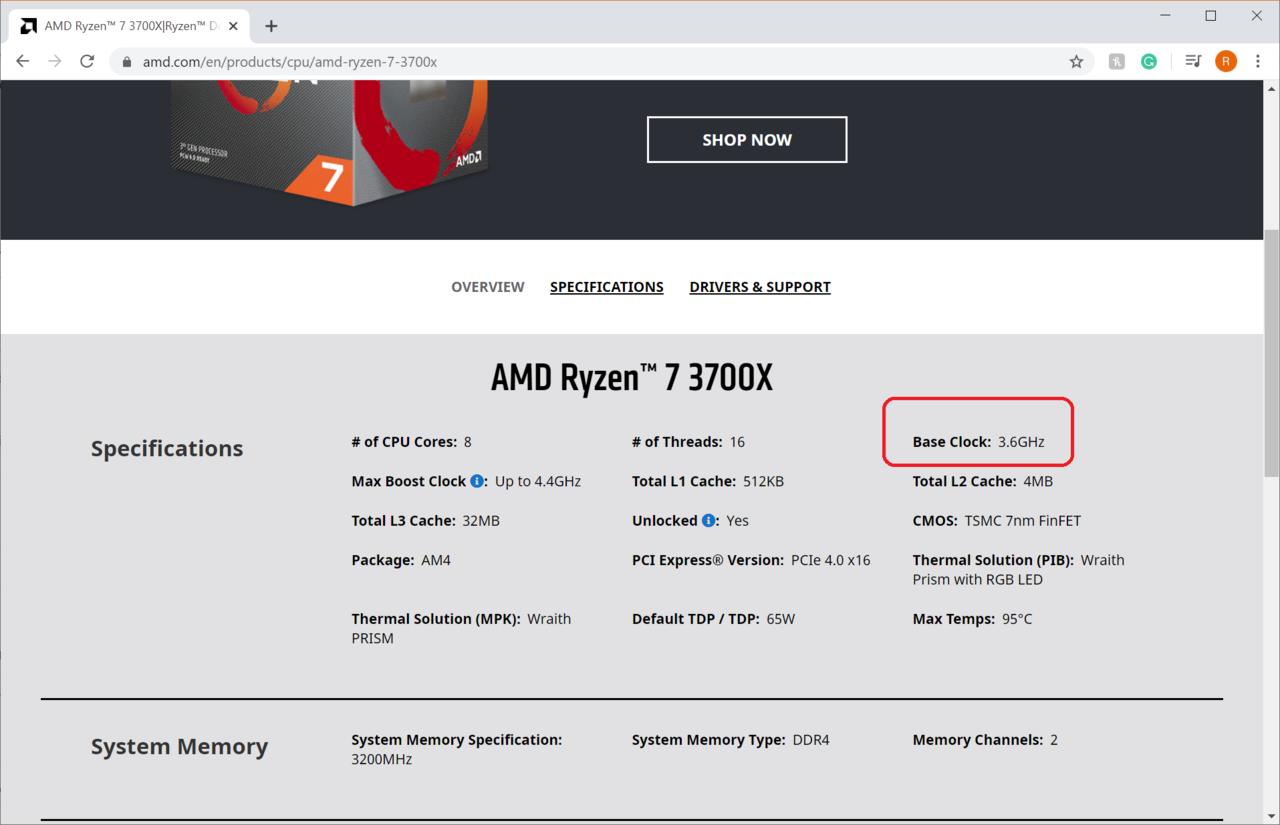

FYI, Mark Cerny states heavy AVX CPU workload can cause clock speeds to drop. LOL.

A 10% drop in the worst-case scenario would result in only a small frequency drop; in fact, it has been claimed that the GPU will probably not drop below 10.1TF.

But you know this, and now you are just as sad a troll as he is. lol (FUK0FF)
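For anyone checking the TFLOPS figures being thrown around here, a rough sketch of the usual peak FP32 formula may help. The 36 CU count and the 64 lanes per CU are assumptions that are not stated in this thread, and the helper names are made up for illustration; only the 2.23 GHz cap and the 10.1 / 9.2 TFLOPS figures come from the posts.

```python
# Peak FP32 throughput sketch. ASSUMPTIONS (not from the thread):
# 36 active CUs for the PS5 GPU and 64 shader lanes per CU.
LANES_PER_CU = 64
FLOPS_PER_LANE_PER_CLOCK = 2  # one fused multiply-add counts as 2 FLOPs


def peak_tflops(cus, clock_ghz):
    """Peak TFLOPS for a GPU with `cus` compute units at `clock_ghz`."""
    return cus * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * clock_ghz / 1000.0


def clock_for_tflops(cus, tflops):
    """Clock in GHz needed to reach `tflops` with `cus` compute units."""
    return tflops * 1000.0 / (cus * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK)


PS5_CUS = 36    # assumption
CAP_GHZ = 2.23  # boost cap mentioned in the thread

print(f"peak at cap: {peak_tflops(PS5_CUS, CAP_GHZ):.2f} TFLOPS")
for target in (10.1, 9.2):
    ghz = clock_for_tflops(PS5_CUS, target)
    drop_pct = (1 - ghz / CAP_GHZ) * 100
    print(f"{target} TFLOPS needs {ghz:.3f} GHz ({drop_pct:.1f}% below cap)")
```

Under these assumptions, 10.1 TFLOPS is a clock drop of under 2% from the cap, while 9.2 TFLOPS would need a drop of roughly 10%.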

@ronvalencia said:

If AMD's 50% perf/watt PR claim is true, a 2.23 GHz boost mode would be a good target for RDNA 2's Navi 10 replacement, i.e. RX 6700 and RX 6700 XT. Sony put a cap on the GPU's boost mode at 2.23 GHz.

RDNA 2 is fabricated on second-generation 7nm N7P or 7nm Enhanced.

https://www.techpowerup.com/review/msi-radeon-rx-5700-xt-gaming-x/33.html

MSI RX 5700 XT Gaming X has a 1.99 GHz average and 2.07 GHz max boost with 270 watts average gaming power consumption. This is from the factory, i.e. MSI.

Applying AMD's 50% perf/watt claim turns 270 watts into 135 watts at ~2 GHz average.

Applying a 40% perf/watt claim turns 270 watts into 162 watts at ~2 GHz average.
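The arithmetic above can be sketched in a few lines. The first helper reproduces the numbers as posted, which treat the claim as a straight percentage cut in power. The second shows an alternative reading, where "+50% perf/watt" means the same performance at 1/1.5 of the power. Both function names are hypothetical, and which reading matches AMD's claim is not settled by anything in this thread.

```python
def power_as_percentage_cut(watts, claim):
    """Reading used in the post: power falls by `claim` (0.5 means 50% less)."""
    return watts * (1 - claim)


def power_at_same_performance(watts, perf_per_watt_gain):
    """Alternative reading: perf/watt rises by the gain, performance held equal."""
    return watts / (1 + perf_per_watt_gain)


BASELINE_WATTS = 270  # MSI RX 5700 XT Gaming X average gaming power, from the post

for claim in (0.5, 0.4):
    cut = power_as_percentage_cut(BASELINE_WATTS, claim)
    same_perf = power_at_same_performance(BASELINE_WATTS, claim)
    print(f"{claim:.0%} claim: {cut:.0f} W as a power cut, "
          f"{same_perf:.0f} W at equal performance")
```

The second reading gives higher wattages than the post's figures, so the headroom argument is more comfortable under the first reading than the second.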

Sony could spend the remaining TDP headroom on reaching 2.23 GHz without major problems. I don't support the arguments that PS5's RDNA 2 GPU will average 9.2 TFLOPS, i.e. I'm not an extremist for the other camp.

Sony has stated CPU AVX workloads to be the major factor in the throttling.

You can't compare RDNA v1 7nm against RDNA v2 N7P or 7nm Enhanced when PC's RDNA 2 based SKUs are not yet revealed.

I'm happy for Sony to reveal RDNA 2 boost clocks since it benefits PC's RX 6700 XT, which is expected to scale to higher clock speeds, especially in another MSI Gaming X-like SKU.

A 40 CU RDNA 2 part needs to be around 150 watts for RDNA 2's 80 CU "Big Navi" version to be feasible!

Nothing you say there addresses my point. You are the master of saying tons of bullshit without really saying anything.

The PS4 Pro came in 2016 at $400. The X came in 2017, one year later, which means even cheaper hardware, but it came at $100 more: $500.

The budgets for these two machines were different. Sony simply could not achieve an Xbox One X setup in 2016 at $400; hell, not even MS could, which is why they released in 2017 and for $100 MORE.

Budget is the keyword here. You just don't get it because you are too busy kissing MS ass.

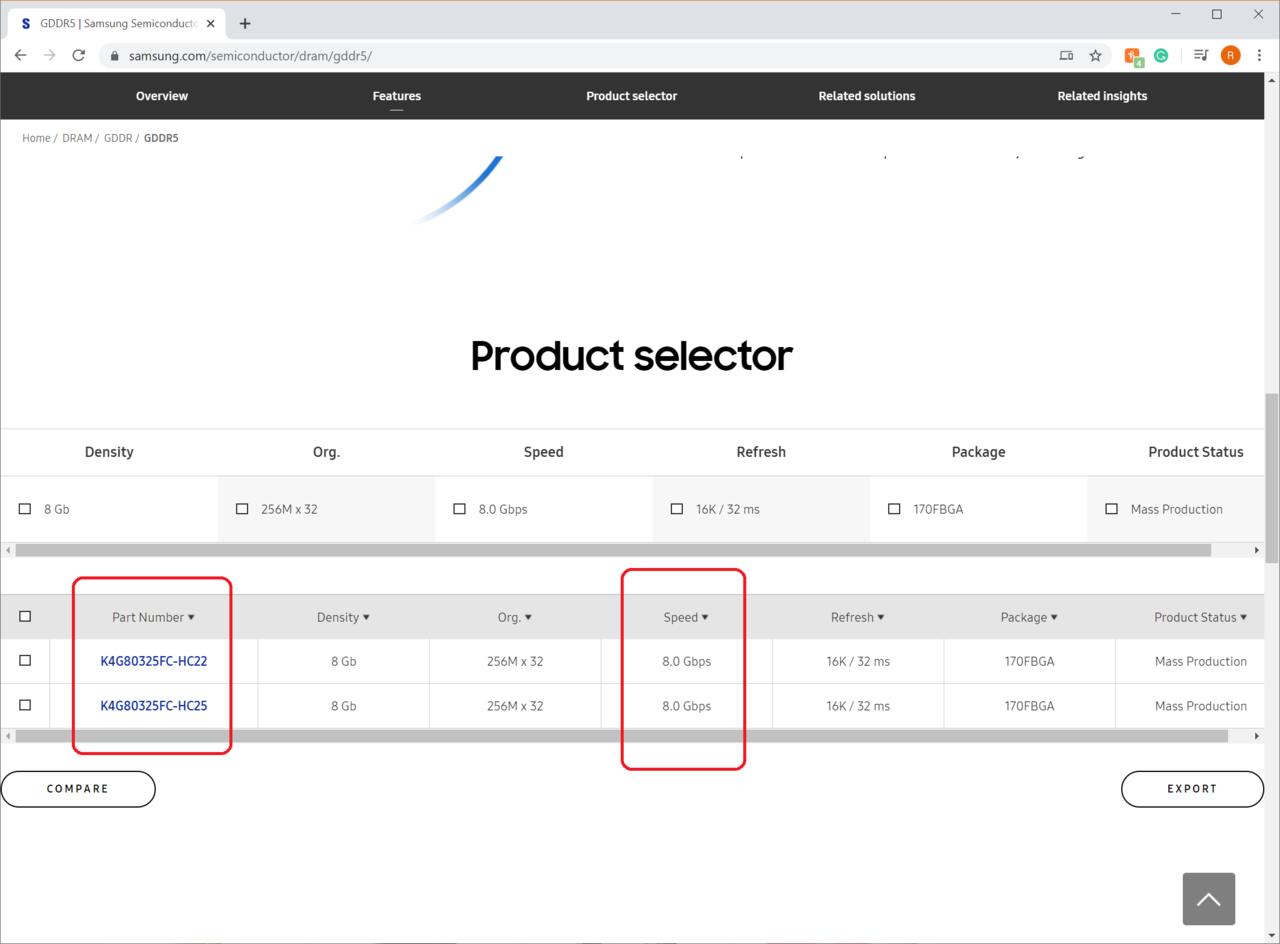

I have addressed your points, and I have countered that Sony already paid for eight GDDR5-8000 components.

For X1X, MS was waiting for Vega 64's perf/watt improvements, i.e. half of 12.66 TFLOPS at 295 watts yields a ~6 TFLOPS version that would be close to 150 watts. Both Vega 64 and X1X were released in 2017.
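The halving argument is a simple linear scaling, sketched below. It assumes throughput and power both scale in proportion to the fraction of the GPU used, which real silicon only approximates; the function name is made up for illustration.

```python
def scale_gpu(tflops, watts, fraction):
    """Linear scaling sketch: throughput and power both scale by `fraction`.

    ASSUMPTION: real chips do not scale perfectly linearly (clocks, voltage
    and fixed overheads all matter), so treat this as a ballpark only.
    """
    return tflops * fraction, watts * fraction


VEGA64_TFLOPS = 12.66  # from the post
VEGA64_WATTS = 295     # from the post

half_tflops, half_watts = scale_gpu(VEGA64_TFLOPS, VEGA64_WATTS, 0.5)
print(f"half of Vega 64: {half_tflops:.2f} TFLOPS at {half_watts:.1f} W")
```

That lands on about 6.3 TFLOPS at just under 150 watts, which is where the "6 TFLOPS close to 150 watts" figure comes from.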

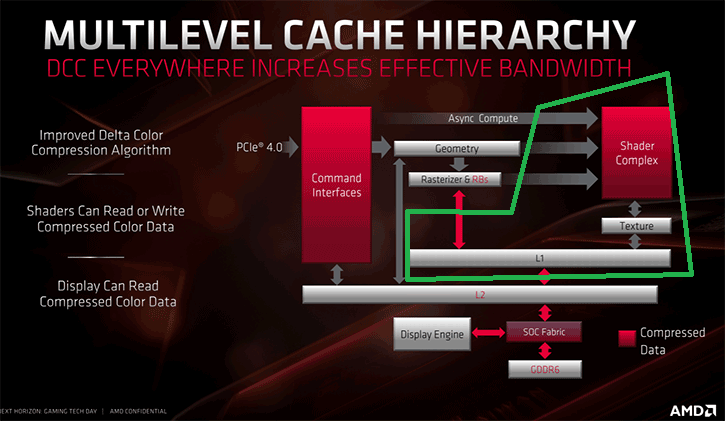

X1X's GPU, with 32 ROPs, was the first GCN design with a 2MB render cache, which is half of Vega 64's 64 ROPs with 4MB L2 cache.

X1X has GDDR5-7000 rated chips, which were available in 2016.

AMD's 384-bit bus PCB design has existed since December 2011 with the Radeon HD 7970's retail release. An individual GDDR5-7000 chip is cheaper than a GDDR5-8000 chip.

X1X GPU's 44 CU layout with four shader engines already existed in 2013's Hawaii 44 CU GCN.

X1X has additional costs for the Ultra HD Blu-ray drive, four extra GDDR5-7000 chips, flash storage (for the OS swap file), a vapor chamber cooler and a slightly more complex 384-bit bus PCB.
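To put the chip counts side by side, here is a rough peak-bandwidth sketch at the chips' rated speeds. It assumes each GDDR5 chip sits on a 32-bit interface, and it uses the rated data rates from the model names (8 Gbps and 7 Gbps per pin); the consoles may clock their memory below the rated speed, so these are upper bounds and not measured figures. The function name is hypothetical.

```python
BITS_PER_CHIP = 32  # ASSUMPTION: one 32-bit interface per GDDR5 chip


def peak_bandwidth_gbs(chips, gbps_per_pin):
    """Return (bus width in bits, peak GB/s) at the rated per-pin data rate."""
    bus_bits = chips * BITS_PER_CHIP
    return bus_bits, bus_bits * gbps_per_pin / 8  # 8 bits per byte


configs = {
    "8 x GDDR5-8000": (8, 8.0),          # eight chips, as in the post
    "12 x GDDR5-7000": (8 + 4, 7.0),     # eight plus the four extra chips
}

for name, (chips, rate) in configs.items():
    bus_bits, gbs = peak_bandwidth_gbs(chips, rate)
    print(f"{name}: {bus_bits}-bit bus, up to {gbs:.0f} GB/s at rated speed")
```

Twelve chips at 32 bits each is where the 384-bit bus comes from, and the wider bus more than makes up for the slower rated chips.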

The main delaying factors for X1X were AMD's perf/watt silicon maturity and MS's customization of GCN's rasterization.

X1X's price reached $399 in November 2018. https://www.theverge.com/2018/11/10/18083294/xbox-one-x-black-friday-deal-microsoft

I don't need MS when discussing mostly PC hardware.

Again, I don't support the arguments that PS5's RDNA 2 GPU will average 9.2 TFLOPS, i.e. I'm not an extremist for the other camp. It's your problem to defend Sony.
