@GameboyTroy: When the 7th core was unlocked on the Xbox One, everyone said it made no difference. I guess it's the same here, right?

Sony unlocks Playstation 4's 7th CPU core in SDK update

It will make a difference in games that are CPU-bound and multithreaded enough to use at least 6 cores. It will benefit the PS4 more than the Xbox One because it's a complete core, and the PS4 has more overhead because its GPU is more powerful; it also probably has some RAM bandwidth left to utilise it fully if it ever needs to.
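To put a rough number on that, here is a minimal sketch using Amdahl's law. This is only an illustration, not anything from the SDK update: `parallel_fraction` is a hypothetical share of the per-frame CPU work that scales across cores, and the model ignores the GPU and memory bandwidth entirely.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup over a single core when only `parallel_fraction` of the work scales."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def gain_from_seventh_core(parallel_fraction: float) -> float:
    """Relative CPU throughput gain when a game goes from 6 to 7 cores."""
    return amdahl_speedup(parallel_fraction, 7) / amdahl_speedup(parallel_fraction, 6) - 1.0


if __name__ == "__main__":
    # Hypothetical parallel fractions; real games will land somewhere in between.
    for p in (0.5, 0.8, 0.95, 1.0):
        print(f"parallel fraction {p:.2f}: +{gain_from_seventh_core(p) * 100:.1f}% CPU throughput")
```

Even with perfectly parallel code the ceiling is 7/6, so roughly 17 percent more CPU throughput, and only in games that are actually CPU-bound.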

@ten_pints: Do you know for a fact it's a complete core? I don't think that has been confirmed yet.

So you're saying the CPU works less on the Xbox because the GPU is less powerful? The CPU must be a beast in the Xbox then, as they overclocked it too.

Maybe it can do some graphics processing, as it's just waiting around.

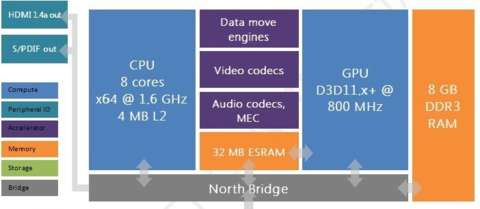

The move engines are located at various points in the system to manage the flow of data and where it goes. They are not intrinsically tied to the memory and GPU substructure, not to mention that the rest of that shit you're blabbing about has absolutely nothing to do with what I said.

This is some misterXmedia shit? This is from the IGN hardware overview of the system, you moron.

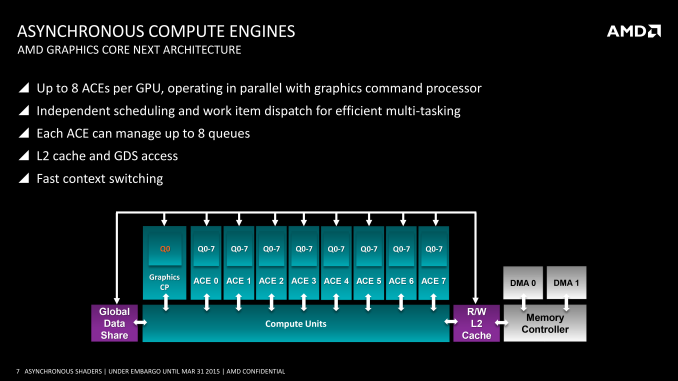

Idiot, DMEs are called DMA engines on AMD 7XXX series cards. All GCN cards have 2; the Xbox One has 4 because it has DDR3 and ESRAM, 2 memory structures that have to swap data in and out. The PS4 doesn't have that shit. And yes, the whole GCN family has DMEs, dude, just like they all have ACEs too, but the PS4 has 8 instead of the usual 2 because it was modified for better compute.

Post a link, you fool, and stop talking shit.

With GDC 2015 fast approaching, it’s high time we got through the last major hardware parts of the Xbox One’s SDK leak. In this particular article, we’ll cover the DMA Move Engines and the remaining questions around the Xbox One’s memory bandwidth and copy operations. In the fourth part, we’ll tackle the SHAPE audio processor and the remaining hardware components!

http://www.redgamingtech.com/xbox-one-sdk-leak-part-3-move-engines-memory-bandwidth-performance-tech-tribunal/

Moving on, coupled with a DMA copy engine (common to all GCN designs), GCN can potentially execute work from several queues at once.

http://www.anandtech.com/show/9124/amd-dives-deep-on-asynchronous-shading

I advise you to stick to arguing about Counter-Strike or Halo; don't argue about things you don't know. The photo you quoted is wrong: move engines will not close the gap, and move engines are on the PS4 as well. GCN and the PS4 have 2; the Xbox One has 4 because it has a shitty memory structure.

@GameboyTroy: When the 7th core was unlocked on the Xbox One, everyone said it made no difference. I guess it's the same here, right?

It didn't, because the Xbox One GPU is too weak to make any difference. What I see here with this core unlock is the PS4 doing more things or getting a few more frames, nothing big.

Also, taking into account that the PS4 beat the Xbox One frame-wise with 6 cores, having 7 should widen that gap a little more.

@ten_pints: Do you know for a fact it's a complete core? I don't think that has been confirmed yet.

So you're saying the CPU works less on the Xbox because the GPU is less powerful? The CPU must be a beast in the Xbox then, as they overclocked it too.

Maybe it can do some graphics processing, as it's just waiting around.

Why would it be less? This is the same thing I argued already: Sony's reservation was for future-proofing, not because the PS4 OS needed 2 cores. It's the same with RAM. Sony had a problem last gen with the PS3: when the console launched the OS took 125MB, but Sony shrank it to about 45MB, so when they wanted to implement party chat it wasn't possible due to the system having little memory, and once the games have that memory you can't take it back.

So this gen Sony apparently reserved resources in order to future-proof the unit in case MS added new features. The reality is that since the unveiling of both, many things changed: MS dropped its original plans and focused more on trying to catch the PS4 as much as possible by overclocking and killing reservations. That 7th core, alongside the 10% GPU reservation, was for Kinect. Once MS dropped Kinect, the GPU reservation was the first thing they freed because they needed it the most, then the 7th core was unlocked last year. But it is not totally unlocked: that core was tied to Kinect completely, as it handled in-game voice commands for the Xbox One.

So if you want to use that core, your game can't have in-game voice commands, and you can't use all of it: even though MS allows developers to kill in-game commands, system commands like "Xbox record" are still in place. As soon as you say "Xbox record" you lose 50% of the 7th core, because the system calls will use it.

On the PS4 there is no Kinect in the first place, so there was no need to hold that 7th core other than future-proofing. See how Sony waited until several games on the Xbox One used the 7th core to unlock theirs? Yeah, that is because once you give that core to a game you can't take it back; it will ruin the game and cause problems.

Think about this like the PSP, where Sony had the CPU clock speed locked and later on increased it without problems.

So it was only running on 6 cores? Oh wow. These gen machines are so weak sauce it's nuts.

The number of cores is meaningless; the number of instructions the CPU can do per clock cycle is what matters. It's why just a 4-core 6700K Skylake can blow away any console or any 8-core AMD CPU. But it also costs almost twice as much as a console.

Even the very first-gen i7s released back in 2009 can destroy a console.

Hell, any Phenom II X4 above 2.6GHz can outprocess the 6 cores in the consoles.

@ten_pints: Do you know for a fact it's a complete core? I don't think that has been confirmed yet.

So you're saying the CPU works less on the Xbox because the GPU is less powerful? The CPU must be a beast in the Xbox then, as they overclocked it too.

Maybe it can do some graphics processing, as it's just waiting around.

Well yes, feeding the GPU with more data will use additional CPU power. Maybe not in every case, like with multiplat games that are graphically identical, but if the geometry, textures and whatnot have been optimised/improved for a target FPS, more CPU power will be utilised.

The CPU cores themselves are identical in both consoles. I'm assuming they also overclocked the RAM at the same time, which is probably more of the issue with the Xbox One. The raw speed of an individual component tells you nothing; the other components have to be balanced around it or there will be a bottleneck.

It's not been confirmed as a complete core, but there is no reason to suggest it isn't either. You can't just assume they are doing what Microsoft has done, because Microsoft did it for a very specific reason.

@ten_pints: Do you know for a fact it's a complete core? I don't think that has been confirmed yet.

We can't know for sure yet, but I bet that it is the full core, since unlike the Xbox One the PS4 has a secondary processor to help with background tasks and never had to account for Kinect like the One. Sony was more conservative with their OS last gen: they were using a whopping 120MB out of the 512 for it, but got it down to just 50 later, while MS used just 32MB for the whole generation. So where memory is concerned as well, I think Sony at least will open up more for games.

@rektmuhface:

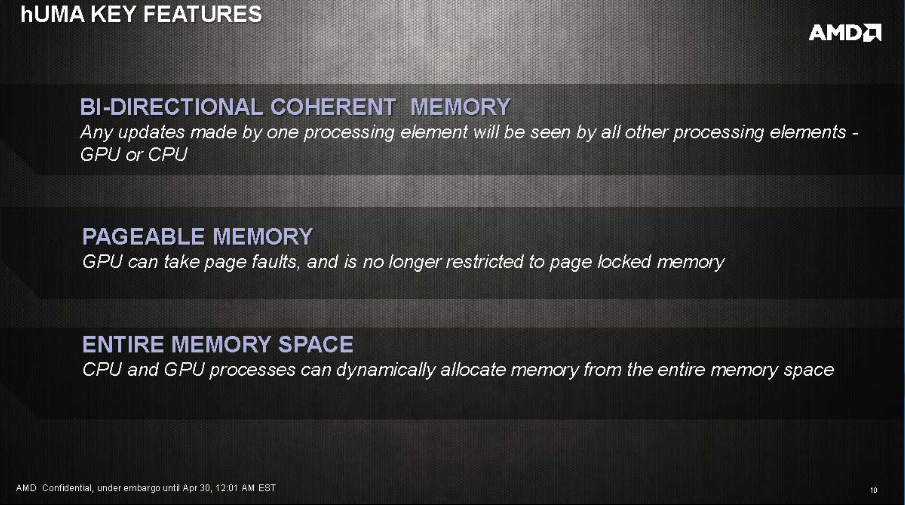

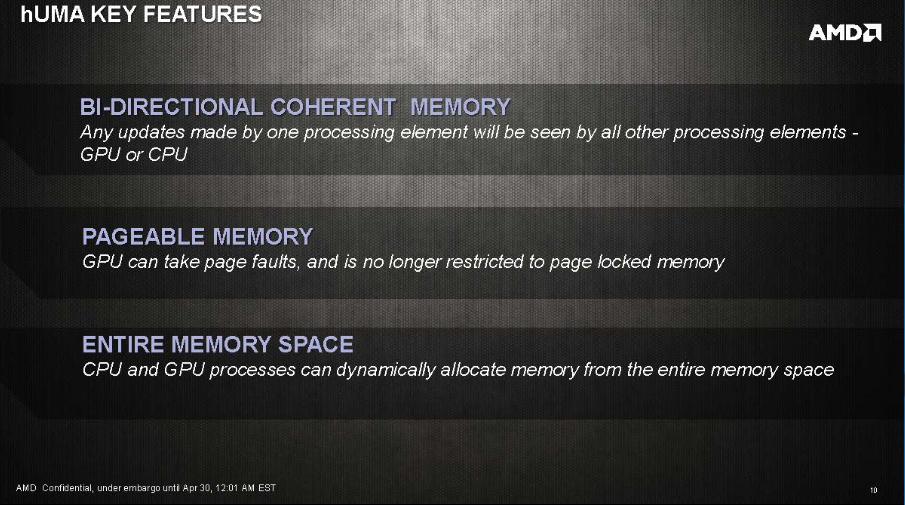

Sure. And the Xbone doesn't have HUMA.

Pure HUMA is not an advantage for raster rendering.

The best situation is a hybrid design, i.e.

1. CPU and GPU have a common (coherent) data view with main memory.

2. GPU has large enough dedicated memory for large-scale GPU operations.

The next-gen AMD APU (PC) would have both high-speed HBM (acting like a gaming PC's dedicated video memory) and large DDR4 storage memory.

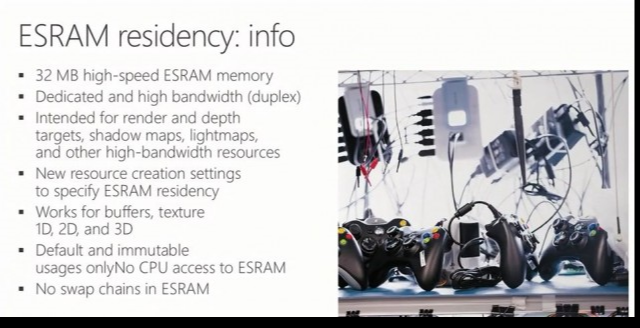

An Xbox One with a 28 CU GPU (replacing the 32MB ESRAM yields another 14 CU), 2 GB GDDR5-5500 256-bit VRAM + 8 GB DDR3-2133 128-bit would have been a better machine than the PS4, and it would effectively be a gaming PC with Windows 10 (including DirectX 12 + Xbox services).

The Xbox One's 32 MB ESRAM consumes APU chip space, hence reducing the CU count, and the storage is not enough.

Dedicated 2 GB GDDR5-5500 256-bit VRAM is just a larger version of the 32MB ESRAM memory pool that doesn't take APU chip space away from the CU count and avoids complex framebuffer tiling management, since the storage is large enough for non-tiled framebuffers.
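As a rough sanity check on the "storage is not enough" point, the sketch below estimates uncompressed render target sizes and compares them with 32 MB. The 8 bytes per sample (32-bit colour plus 32-bit depth/stencil) and treating 32 MB as 32 × 1024 × 1024 bytes are assumptions for illustration only; real engines use other formats, compression and several render targets at once.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # assumed: the thread's "32 MB" taken as 32 MiB


def render_target_bytes(width: int, height: int, msaa_samples: int = 1,
                        bytes_per_sample: int = 8) -> int:
    """Uncompressed footprint of one colour + depth target.

    bytes_per_sample=8 assumes 4 bytes of colour and 4 bytes of depth/stencil.
    """
    return width * height * msaa_samples * bytes_per_sample


def fits_in_esram(width: int, height: int, msaa_samples: int = 1) -> bool:
    """True if the whole target fits in ESRAM without tiling."""
    return render_target_bytes(width, height, msaa_samples) <= ESRAM_BYTES


if __name__ == "__main__":
    cases = [(1280, 720, 1), (1280, 720, 4), (1920, 1080, 1), (1920, 1080, 4)]
    for width, height, samples in cases:
        size_mib = render_target_bytes(width, height, samples) / (1024 * 1024)
        verdict = "fits" if fits_in_esram(width, height, samples) else "needs tiling"
        print(f"{width}x{height} {samples}x MSAA: {size_mib:.1f} MiB, {verdict}")
```

Under these assumptions a plain 1080p target fits, while 1080p with 4x MSAA is about twice the size of the ESRAM, which is where the tiling management comes in.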

poor el tomato

Blindly bashing without actually understanding that the X1 can and does support HSA/HUMA features. ESRAM has nothing to do with whether it can do so..... keep the denial going....

The CPU and GPU have access to the main pool of memory and both can talk to each other.

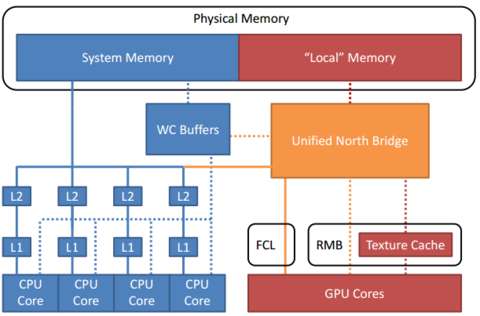

On the Xbox One that is not the case. When data is in ESRAM the CPU can't see it; the CPU has no access to ESRAM, therefore there is no HUMA. While data is in DDR3 both CPU and GPU can see it. The problem is the Xbox One needs to constantly use ESRAM, and nothing there can be seen by the CPU.

You are an idiot. You are not telling me anything I don't know already; this ^^ is me the page before.

The problem is not the main pool of RAM; the problem is ESRAM, which has no connection to the CPU. I quoted MS on it, dude: only the GPU can access ESRAM. Stop ignoring what is being presented to you, fool.

ESRAM handles most of the in-game data on the Xbox One because DDR3 is too slow. Basically everything passes through ESRAM, which is why the Xbox One is not a true HSA or HUMA design. HUMA requires 1 memory pool where both CPU and GPU can see the same data at all times; ESRAM doesn't allow for that.

“ESRAM is dedicated RAM, it’s 32 megabytes, it sits right next to the GPU, in fact it’s on the other side of the GPU from the buses that talk to the rest of the system, so the GPU is the only thing that can see this memory.

There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that.”

http://wccftech.com/microsoft-xbox-esram-huge-win-explains-reaching-1080p60-fps/#ixzz3sqsUQ36o

From MS's own mouth: the only thing that can see ESRAM is the GPU, so while data is there, there is no HSA or HUMA whatsoever, because this is what HUMA requires:

hUMA is a cache coherent system, meaning that the CPU and GPU will always see a consistent view of data in memory. If one processor makes a change then the other processor will see that changed data, even if the old value was being cached.

http://arstechnica.com/information-technology/2013/04/amds-heterogeneous-uniform-memory-access-coming-this-year-in-kaveri/

The only thing accessing ESRAM is the GPU, so drop it. The CPU will not see any data once it is in ESRAM, and on the Xbox One that means all data that needs fast bandwidth.

@tormentos: This is the only link worth reading

http://www.eurogamer.net/articles/digitalfoundry-vs-the-xbox-one-architects

Please, I read that before you joined this site.. lol. And yes, it doesn't prove your points.

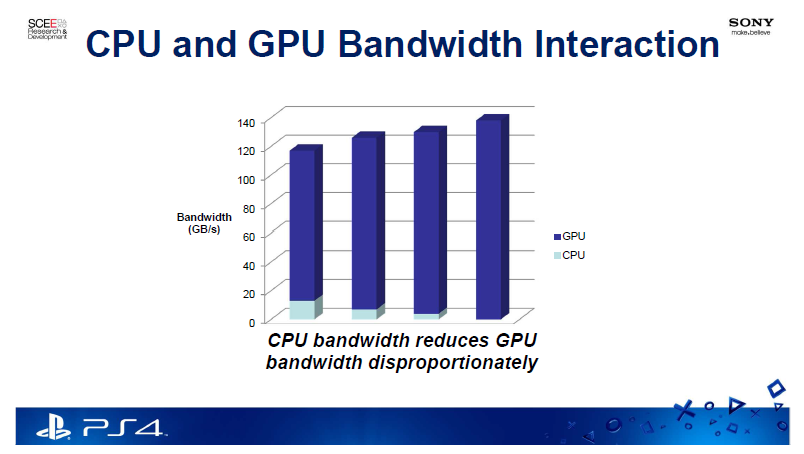

Waiting for a link from Sony stating that 140GB/s is all they can use, as I already quoted a developer stating 172GB/s being used.

Oh please, there is no advantage in the Xbox One memory setup, and it is part of the reason why some games are 720p to begin with.

You are misquoting Sony's data, and holding on to that shitty GIF is a joke. Drop it: it is from GDC 2013, when the PS4 wasn't even out and its drivers weren't even complete. Funny how the Xbox One can improve to the moon but somehow Sony can't, right?

So first it was teh GDDR5 that was going to turn PS4 into a supercomputer...

Then it was teh GPGPU that was going to turn PS4 into a supercomputer...

Now teh extra core on a shit CPU is going to turn the PS4 into a supercomputer.

Thank you $ony.

For a supposed PC gamer you sure cry a lot about the PS4. If you're upset with your Xbone's direction, take it up with MS rather than having a tanty over the opposition. I see you moaning about the underpowered PS4 all the time, yet you are one of the first to run to the Bone's defense. Let me guess, you're some kid, right? Under 25 at least. Trust me, it shows.

LOL, you claimed the XBO had DX12 from day one, fool.

It had DX12 features from day 1, fool. In fact most of DX12 is console optimization brought to PC. Aside from multithreaded rendering and a few other things, the Xbox One was already DX12 capable; hell, the 360 and PS3 were too.. lol

The fact is that you think DX12 is something completely new, when in reality it is console-like optimization with a few more things, which is the reason why Xbox One developers have been low-key about its gains while on PC the gains are bigger. lol

Yeah, so what does your twisted logic have to do with ignoring facts? The X1's current API is also missing quite a few of the main features coming in DX12. That tidbit you seem to ignore.....

Again, love how you seem to totally ignore that even the 360 API has the same flaw as the X1's current API and DX11... which limits communication with the GPU to one core, making it the funnel that bottlenecks the flow of data. Hence the reason why PS4 can't sustain solid framerates in most multiplats.

The PS4 is brute-forcing unoptimized code in most multiplats. It's sad how much multiplat devs bend over for the Xbone. They spend all their time optimizing the Xbone version and do a 2-man port for 3-6 months on the PS4.

Aside from total raw power, the PS4 has no bottlenecks. It's the perfect architecture.

If the PS4 version and the Bone version both have the same res and features, yet the Bone has a better frame rate, you gotta realize what's going on. I mean, otherwise you are just another dumb fanboy. I'm surprised how many people cannot seem to grasp that (and other basic logic) on GS.

@tormentos:

You're clearly the moron here, making excuses and grasping ....... To use HSA/HUMA features you don't need to access the ESRAM, just the main pool of memory that the CPU and GPU can access together, aka the DDR3 pool, where the vast majority of the memory work is done to begin with. The PS4 having the GDDR5 pool is a clear advantage, allowing the CPU+GPU to access and process the data more quickly. But if you stopped denying and ignoring facts and actually looked instead of blindly bashing..... you would know the X1 can do HSA and HUMA features.

@acp_45: You certainly do have a Jesus Christ complex.

Show us the way, oh thou that shalt not stray away from righteousness.

Waiting for a link from Sony stating that 140GB/s is all they can use, as I already quoted a developer stating 172GB/s being used.

Oh please, there is no advantage in the Xbox One memory setup, and it is part of the reason why some games are 720p to begin with.

You are misquoting Sony's data, and holding on to that shitty GIF is a joke. Drop it: it is from GDC 2013, when the PS4 wasn't even out and its drivers weren't even complete. Funny how the Xbox One can improve to the moon but somehow Sony can't, right?

To reach Sony's effective 140 GB/s, the peak memory bandwidth has to be higher than effective memory bandwidth.

The PS4's 140 GB/s effective vs 176 GB/s theoretical is in line with other AMD GDDR5 memory controller efficiencies, i.e. ~79 percent efficient.

You are not factoring in AMD's internal crossbar/switching overheads prior to hitting the CU or ROPS units, i.e. this decoupled design enables AMD to have SKUs with different CU counts without incurring the GTX 970's massive speed hit on its last 0.5 GB.

This ~79 percent efficiency factor also hits my PC's Radeon HD 7950-900, 7970-GE, R9-290-Ref and R9-290X-1040, hence this is not anti-PS4.

Applying 79 percent efficiency to the XBO's 68 GB/s DDR3 memory bandwidth yields 53.72 GB/s, which is in line with the XBO's stated effectiveness.

The lost memory bandwidth is regained via texture compression/decompression, i.e. ATI/AMD usually introduces IP technology in this area, e.g. 3DC+ (aka DX10 BC4), 3DC (aka DX10 BC5), DX11's BC6/BC7. This applies to all AMD GPU IP users.

If you apply ~79 percent efficiency to the 204 GB/s ESRAM, it reaches its stated effective memory bandwidth.
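The arithmetic is easy to check. A minimal sketch, assuming the flat ~79 percent efficiency factor from this post applies equally to every memory type (that is the post's claim, not a measured result); the peak figures are the ones quoted in the thread:

```python
EFFICIENCY = 0.79  # the ~79 percent factor claimed in the post, not a measurement

# Theoretical peak bandwidth in GB/s, as quoted in the thread.
PEAK_GBPS = {
    "PS4 GDDR5": 176.0,
    "XBO DDR3": 68.0,
    "XBO ESRAM": 204.0,
}


def effective_bandwidth(peak_gbps: float, efficiency: float = EFFICIENCY) -> float:
    """Effective bandwidth after applying a flat controller efficiency factor."""
    return peak_gbps * efficiency


def implied_efficiency(effective_gbps: float, peak_gbps: float) -> float:
    """Efficiency implied by a quoted effective figure and a theoretical peak."""
    return effective_gbps / peak_gbps


if __name__ == "__main__":
    for name, peak in PEAK_GBPS.items():
        print(f"{name}: {peak:.0f} GB/s peak -> {effective_bandwidth(peak):.2f} GB/s effective")
    print(f"140 / 176 implies {implied_efficiency(140.0, 176.0):.1%} efficiency")
```

With these inputs the PS4 comes out at about 139 GB/s, the XBO's DDR3 at 53.72 GB/s and the ESRAM at about 161 GB/s.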

The problem with the XBO's 32 MB ESRAM is that it's very slow relative to a CU's SRAM storage, i.e. local data storage (LDS) and L1 cache.

The XBO's 32 MB ESRAM doesn't turn the machine into 12 CUs with 32MB worth of local data storage (LDS) SRAM!!!!! The XBO's 32 MB ESRAM sits outside the CUs, while LDS sits inside each CU.

One doesn't apply lossy block compression (BC) to GpGPU compute-based data sets.

If the PS4 showed an effective 172 GB/s, its 32 ROPS should have delivered MSAA performance rivaling the Radeon HD 7950, but this is NOT the case. Your 172 GB/s = effective bandwidth is a load of bull$hit. The PS4's GPU delivers about R7-265 level performance!!!

PS4 doesn't have NVIDIA MaxwellV2's direct CU(aka SM)/ROPS to MCH connection design!!!!!

@acp_45: so you're a troll...well at least you admit it.

As I said, you are just using rhetoric now. It's pathetic.

I never meant it in the way you are claiming I did.

Sorry if you misunderstood.

@acp_45: You certainly do have a Jesus Christ complex.

Show us the way, oh thou that shalt not stray away from righteousness.

Stop exposing yourself.

Calm down.

@acp_45: I only expose myself in the bathroom in the mirror to myself.

How dare you say such things about me.

Negative.

"Move Engines are Fixed Function processors in the Xbox One that are meant to reduce the overhead on the CPU and GPU, and ALSO HELP transport data from the system memory to the eSRAM."

lol... It's like you guys are trying so desperately to defend Xbone's POS architecture.

I know perfectly well what move engines are, and I'm telling you that however well they help the CPU transfer data between the split memory pools, it is still much inferior to having a single huge high bandwidth memory pool where you don't need to transfer anything. Move engines or not, the Xbone CPU has to waste cycles moving data to and from the eSRAM.

The XBO's Move Engines are glorified Blitters that operate outside the CPU and GPU resources. They operate similarly to the Commodore Amiga's fixed-function Blitter hardware.

The main point of offloading the memory block copy function to fixed-function hardware is to reduce the CPU's and GPU's memory copy workload.

Modern PC GPUs include their own Blitter-like functions, but that's a GPU resource, e.g. the 3D hardware can move/manipulate texture data, i.e. the GPU's TMUs include a large array of gather (read) and scatter (write) hardware, and the PS4 has more TMUs than the XBO. Also, the PS4 has more ROPS (read/write) units than the XBO.

In GpGPU mode, the GPU's TMUs are the load/store units for the GPU's stream processors.

Both ROPS and TMUs can read and write memory, and the PS4 has more of them. TMUs and ROPS have other fixed-function features besides memory read and write, e.g. the TMU's texture filtering (AF) and the ROPS' blend/MSAA functions.

@imt558:

"PS4 HUMA architecture: AMD retracts statement, 'will not comment' on PS4 vs. Xbox One"

""During a recent gamescom 2013 interview, an AMD spokesperson made inaccurate statements regarding the details of our semi-custom APU architectures"

All HUMA does is allow data to be simultaneously shared between the GPU and CPU; on both consoles the GPU and CPU can access their main pool of memory to process data. The fact that the X1 supports HSA means that its GPU can see the virtual memory and physical pool and process data from it as well, which means that the X1 can support a HUMA-like feature too, since the CPU and GPU share the unified memory pool. HSA allows the CPU and GPU to work together on a unified pool of memory. AMD has been working on HUMA and HSA support in their APUs, and to suggest the PS4 has HUMA and the X1 doesn't in any form is just dumb.

"The 'supercharged' part, a lot of that comes from the use of the single unified pool of high-speed memory," said Cerny. The PS4 packs 8GB of GDDR5 RAM that's easily and fully addressable by both the CPU and GPU.

NO CPU ACCESS TO ESRAM.

NO HUMA, not true HSA. It has been known from the start that the Xbox One doesn't have a true HSA design; it has something similar, and it certainly doesn't have HUMA, which requires the CPU and GPU to see the same data at all times. That is impossible on the Xbox One because the CPU can't access data while it is in ESRAM.

It is not HUMA and it is not true HSA, for the same reason.

@04dcarraher:

LOL. Use your logic. Of course AMD will retract an employee's statement that the PS4 has HUMA and the Xbone doesn't. They don't want to enter the console war. Hell, AMD developed the APUs for both consoles. They want to be as neutral as possible.

The PS4 does have HUMA. AMD's drivers were not ready by the time the PS4 came out, so Sony was using its own solution; that was the whole problem. But what Cerny described to Gamasutra is HUMA, which is what I just quoted and which 04dcarraher will ignore like he always does.

Move engines are also known as DMA engines and are also on the PS4. They are a GCN feature, not an Xbox One feature; the Xbox One has 3 additional ones because it has a cumbersome memory structure.

Post a link to that shit, I dare you. Move engines don't increase power and would not shorten the gap between the PS4 and Xbox One. That is some misterXmedia shit you just quoted there, and it proves once again that you know SHIT about hardware and what you're talking about. DME = DMA on AMD cards; all GCN parts have them, including the PS4. The PS4 just doesn't need 4 because it doesn't have a shitty memory structure with slow memory and a small pool of fast memory.

Power on GCN, the PS4 and the Xbox One comes from the stream processors inside the CUs, not from move engines, so move engines will shorten no gap, and they are inside the PS4 as well.

wrong again el tormentos

So much denial about what DX12 fixes and introduces..... and grasping on the hyperbole to continue to bash.

Again, the X1 is not using parallel/direct async CPU communication ...... it's only using async compute, i.e. using the ACE units on the GPU itself to compute volumetric lights and shadows. It's not in the same league as the async shading done in the other async games on PS4, or Mantle using all CPU cores talking to the GPU with the ACE units doing much more.

omg, the X1 having ESRAM means squat when it comes to preventing the CPU & GPU from using the DDR3 pool with HSA/HUMA features, since that pool of memory is unified.

"The CPU cache block attaches to the GPU MMU, which drives the entire graphics core and video engine. Of particular interest for our purposes is this bit: “CPU, GPU, special processors, and I/O share memory via host-guest MMUs and synchronized page tables.” If Microsoft is using synchronized page tables, this strongly suggests that the Xbox One supports HSA/hUMA and that we were mistaken in our assertion to the contrary. Mea culpa."

That is the same shit Infamous does, you fool: it uses async compute for particles too.

It is an async game; stop your damage control, fool.

""If Microsoft is using synchronized page tables""

There goes your argument. And post a link; what, are you scared your own link will own your ass?

Fact is there is NO CPU access to ESRAM, which means when data is there the CPU can't see it. HUMA is a single memory pool addressed by both CPU and GPU, not 2 memory pools.

“ESRAM is dedicated RAM, it’s 32 megabytes, it sits right next to the GPU, in fact it’s on the other side of the GPU from the buses that talk to the rest of the system, so the GPU is the only thing that can see this memory.

There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that.”

http://wccftech.com/microsoft-xbox-esram-huge-win-explains-reaching-1080p60-fps/#ixzz3sqsUQ36o

NO HUMA period.

Call me when we see what this does for games.

A few more frames, and it will probably widen the gap frame-wise vs the Xbox One, although not by much.

@notafanboy: How is sound processed on the PS4? Is it as efficient as on the Xbox One?

Does that not waste CPU cycles on the PS4?

AMD TrueAudio, which is made by the same people who make the Xbox One's audio hardware, which by the way is mostly for Kinect.

http://www.anandtech.com/show/7513/ps4-spec-update-audio-dsp-is-based-on-amds-trueaudio

As for the HUMA issue: why does the CPU need to see the GPU's incomplete ROPS results in ESRAM? The CPU has very little business with the GPU's incomplete ROPS memory operations, i.e. the CPU is only interested in results, not incomplete results; that is the basic idea of why the CPU offloads its less optimal functions to the GPU. The CPU is useful at the start of the rendering pipeline, e.g. GpGPU and CPU interaction, and is pointless at the ROPS stage.

hUMA is a cache coherent system, meaning that the CPU and GPU will always see a consistent view of data in memory. If one processor makes a change then the other processor will see that changed data, even if the old value was being cached.

IF one processor makes a change, the other processor will see it.

How the fu** will the CPU see it if the change takes place inside ESRAM? It has been known for years now that the Xbox One is not true HSA but has something similar; because of its 2 memory structures it is not truly HUMA.

In fact the info you posted saying it has HUMA was stated on Reddit by a so-called developer, god knows who he was. We never had confirmation from MS on that, unlike Sony, which talked about the GPU and CPU always seeing the same data and eliminating the copying that happens a lot on PC between CPU and GPU, before the PS4 even launched.

So how can the CPU allocate memory in ESRAM?

How is it bidirectional when the CPU has no access to one of the Xbox One's memory structures? This crap was argued before the Xbox One even came out.

ESRAM is not coherent with the CPU, period. All data that needs fast bandwidth needs to be in ESRAM, and once it is there, there is no coherency because the CPU can't access it, only the GPU can. So it is not the same at all as the PS4; it is something similar, period.

Stop using the same pathetic-ass excuse. QUOTE SONY STATING 140 GB/s is the maximum bandwidth.

Because I quoted a developer getting 172 GB/s.

Bullshit. The Order does not use 4xMSAA just because it runs at 1920x800; the visual quality it has plus 4xMSAA is too much for its bandwidth, so it becomes bandwidth starved at 1080p. The Order is an incredible looking game, one of the best this gen so far.

I have already killed and shut down your crappy theory. 4xMSAA not only consumes a lot of bandwidth but also takes a good hit on performance, so whether your GPU can handle it depends on what you are doing. If you have a game with less demanding visuals, 4xMSAA is possible at 1080p, dude.
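For a rough sense of scale in the resolution argument, the sketch below compares the raw size of a 4xMSAA color target at 1920x800 and at 1920x1080. It assumes 4 bytes per color sample and ignores depth buffers and any hardware compression, so it is only back-of-the-envelope arithmetic; the function name is made up for illustration.

```python
def render_target_bytes(width: int, height: int, samples: int,
                        bytes_per_sample: int = 4) -> int:
    """Raw size of a multisampled color target, with no compression assumed."""
    return width * height * samples * bytes_per_sample


if __name__ == "__main__":
    letterboxed = render_target_bytes(1920, 800, samples=4)
    full_hd = render_target_bytes(1920, 1080, samples=4)
    print(f"1920x800  4xMSAA: {letterboxed} bytes")
    print(f"1920x1080 4xMSAA: {full_hd} bytes")
    print(f"ratio: {letterboxed / full_hd:.3f}")
```

Under those assumptions the 1920x800 target holds about 74 percent of the samples of the 1080p one, so every pass that touches the full target moves about a quarter less data.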

Bandwidth without power means shit. Having 300 GB/s on a 7770 will not make that shitty GPU suddenly match a 7950 just because it has bandwidth to waste.

Fortunately, PS4 goes with a full 1080p output as expected, and treats it with a comprehensive pass of 4x MSAA - a multi-sample sweep that focuses on geometric aliasing.

http://www.eurogamer.net/articles/digitalfoundry-2015-final-fantasy-x-x2-hd-remaster-ps4-face-off

Forza Horizon 2 is 1080p, uses 4xMSAA and is a forward rendered game like The Order. The only difference is that FH2 looks like crap compared not only to The Order but also to other racers, regardless of using 4xMSAA.

The PS4 could have the same bandwidth as the 7950 and it still would not run the same resolution, image quality and frame rate as a 7950, which has more CUs and more stream processors.

Does this mean PS4 will get better first party games? Only one I care about is Gravity Rush.

It is not the PS4's fault what your taste in games is.

lol again tomato overlooking the obvious......

Once the crap is processed in the main pool, the select data can be sent wherever else it's needed. The ESRAM buffer is only used for a narrow range of items where the GPU absolutely needs the write/read speeds. The vast majority of the GPU and CPU memory is handled on the DDR3, which is why HSA/HUMA is possible on X1. Multiple devs and analysts have shown, after that incorrect AMD employee's statement was retracted, that X1 supports those features.

So first it was teh GDDR5 that was going to turn PS4 into a supercomputer...

Then it was teh GPGPU that was going to turn PS4 into a supercomputer...

Now teh extra core on a shit CPU is going to turn the PS4 into a supercomputer.

Thank you $ony.

For a supposed PC gamer you sure cry a lot about PS4. If you're upset with your Xbone's direction, take it up with MS rather than having a tanty over the opposition. I see you moaning about the underpowered PS4 all the time, yet you are one of the first to run to the Bone's defense. Let me guess, you're some kid, right? Under 25 at least. Trust me, it shows.

This is SWs. But I don't take this shit outside the forums. Anything here offends you, leave. And grow up with that 'kid' bs, SWs is a game only. NO one should take it as anything else. In fact outside of SWs I haven't recommended anything other than PC for the last year and a half. It's the better deal. Inside SWs, people know that's the deal, I don't have to say it often. Outside SWs, I don't care if you play on PS or a Commodore 64, I don't give a shit about gaming outside. But if people ask me, I'd tell them I don't see the value in PS or Xbox realistically. I don't even buy games on console much now.

I've been on the other side of PlayStation fanboys since their first console in the SWs. I've been a sheep with the Game boy/SNES - N64, a lem Xbox - Xbox 360 and now a herm.

And I call out Xbox too. I called them out for being weak, dropping kinect, dropping the price, everything. I call both out for having no games. I even declared PS the winner of the gen in SWs when MS dropped Kinect.

I'll defend the PC in the few times it needs it, like when a recent cow thread said the PS4 was twice as powerful. I'll defend the Xbox to get at the cows when there's something to defend with. But I'm never going to be on the cows side here. That said, I have little actual problem with Playstation outside of here. In the SWs game, they're the other sports team, they're Man U and I'm Chelsea.

My original version of this comment was rude. My bad.

@HalcyonScarlet:

Can't help it if you come off like a whiny kid.

Doesn't give a shit about gaming. Continues to whine about gaming. Logic much?

Only a kid feels the need to defend plastic. You should apologise. You can't even rant properly.

Since you can't comprehend context or logic, you make quick knee-jerk conclusions, probably based on your own defensive attachments, and you write like a child, I can only conclude you are under 15. It shows... Trust me.

Are you sure you're not a cow? You seem to take this nonsense kinda personally.

lol again tomato overlooking the obvious......

Once the crap is processed in the main pool, the select data can be sent wherever else it's needed. The ESRAM buffer is only used for a narrow range of items where the GPU absolutely needs the write/read speeds. The vast majority of the GPU and CPU memory is handled on the DDR3, which is why HSA/HUMA is possible on X1. Multiple devs and analysts have shown, after that incorrect AMD employee's statement was retracted, that X1 supports those features.

Dude, most of that data is on ESRAM first, not on DDR3. Why the hell do you think ESRAM is there? Anything that needs fast bandwidth goes on ESRAM. That buffer is always in use, and any changes there can't be seen by the CPU, period. So when you are rendering something using ESRAM as a buffer, there is no coherency with the CPU, period.

He wasn't incorrect. AMD released a statement damage controlling what was said because the Xbox One was still under NDA, if you don't remember; in fact the same "no comment" was given about Sony. It is much like ACU, which was confirmed to be held back to avoid arguments, followed by damage control later.

If there is no CPU access to ESRAM, there is no coherency, period. The HUMA feature on Xbox One is not true HUMA, and most of the data is first handled on ESRAM, not on DDR3, which only provides like 38 GB/s to the GPU. Trying to act now like DDR3 is what holds everything on Xbox One is wrong. The fact is that not using ESRAM on Xbox One for everything requiring fast bandwidth means the system will starve bandwidth-wise.

So this is where you put things that you gonna read a lot like a shadow map, put things that you draw to a lot, like your back buffer… We have resource creation settings that allow you to put things into there, and don’t have to all reside in the ESRAM, there can be pieces of it that can reside in regular memory as well. So for example if I’m a racing game, and I know that the top third of my screen is usually sky and that sky doesn’t get touched very much, great, let’s leave that in regular memory, but with the fast memory down here we’re gonna draw the cars. This works practically for any D3D resource there is, buffers, textures of any flavors… There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that.

Read more: http://wccftech.com/microsoft-xbox-esram-huge-win-explains-reaching-1080p60-fps/#ixzz3t0Omwk7g
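The racing-game example in the quote above comes down to simple arithmetic: keep the rarely touched top of the screen in regular memory and the rest in the 32 MB of ESRAM. The sketch below assumes a 1920x1080 target at 4 bytes per pixel split by rows; the function name and the row-wise split are illustrative assumptions, not the actual D3D resource creation settings the speaker refers to.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # 32 MB of ESRAM, as stated in the thread


def split_target(width: int, height: int, bytes_per_pixel: int,
                 rows_in_main_memory: int) -> tuple[int, int]:
    """Return (main_memory_bytes, esram_bytes) for a row-wise split."""
    if not 0 <= rows_in_main_memory <= height:
        raise ValueError("rows_in_main_memory must be between 0 and height")
    main_memory_bytes = width * rows_in_main_memory * bytes_per_pixel
    esram_bytes = width * (height - rows_in_main_memory) * bytes_per_pixel
    return main_memory_bytes, esram_bytes


if __name__ == "__main__":
    # Assumed target: 1920x1080, 4 bytes per pixel, top third (360 rows) is sky.
    main_bytes, esram_bytes = split_target(1920, 1080, 4, 1080 // 3)
    print(f"regular memory: {main_bytes} bytes")
    print(f"ESRAM: {esram_bytes} bytes, fits: {esram_bytes <= ESRAM_BYTES}")
```

A single target of this size fits in ESRAM either way; the split only starts to matter once several render targets, shadow maps and MSAA buffers compete for the same 32 MB.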

The more excuses you throw, the more I make you look like a blind lemming.

Like I already told you, all the things that need fast bandwidth go on ESRAM. If you have something like a dead sky, that goes on DDR3. See how he says it works for any D3D resource: cars, textures, buffers?

Oh look again no CPU access to ESRAM.

lol again you're making excuses and ignoring facts, using ESRAM as an anchor for your flawed argument.

And no..... not most of the data is on the ESRAM, and not everything that could use the write/read speeds gets thrown onto the ESRAM pool, only the few top priority items.

And again you're ignoring facts: the AMD employee was wrong, plain and simple, to claim X1 can't do HUMA when it can.....

The Verge reported, after the statement, on all topics about X1 and PS4, and AMD seems to have included the XB1 in HUMA support.....

"That might sound suspiciously vague, but we spoke to AMD and it's actually true. The AMD chips inside the PlayStation 4 and Xbox One take advantage of something called Heterogeneous Unified Memory Access (HUMA), which allows both the CPU and GPU to share the same memory pool instead of having to copy data from one before the other can use it. Diana likened it to driving to the corner store to pick up some milk, instead of driving from San Francisco to Los Angeles. It's one of AMD's proposed Heterogeneous System Architecture (HSA) techniques to make the many discrete processors in a system work in tandem to more efficiently share loads."

You're the one making excuses and ignoring facts, using outdated data and bias......

You don't need ESRAM to use HSA/HUMA, you stupid cow. It's funny you keep calling me a lem when you keep on being wrong on that account and many others.

"an AMD spokesperson made inaccurate statements regarding the details of our semi-custom APU architectures."

It means what it says.

This could also be because of the VR headset, needing some extra resources? But anyways, it's great they had some plan for futureproofing. Even though I doubt one CPU core will do much difference in most games, maybe in utilizing gpGPU compute better??

@HalcyonScarlet:

Don't know why pointing out facts about your behaviour around here gets you so angry. You should work on that. At least try to be consistent. You came in this thread like an angry bull with a raging hardon and attacked the ps4 for what now? You really are just a bitter lem that dropped Microsoft once you saw the 2013 reveal and are now hiding behind pc as you try to come to terms with your abandonment by ms which btw is all in that small little head of yours. Don't worry when you grow up you don't get rejection issues from toys.

lol again you're making excuses and ignoring facts,

And no..... not most of the data is on the ESRAM, and not everything that could use the write/read speeds gets thrown onto the ESRAM pool, only the top priority items.

And again you're ignoring facts: the AMD employee was wrong, plain and simple, to claim X1 can't do HUMA when it can.....

The Verge reported back in June, after the statement, on all topics AMD, and seems to have included the XB1 in HUMA support.....

"That might sound suspiciously vague, but we spoke to AMD and it's actually true. The AMD chips inside the PlayStation 4 and Xbox One take advantage of something called Heterogeneous Unified Memory Access (HUMA), which allows both the CPU and GPU to share the same memory pool instead of having to copy data from one before the other can use it. Diana likened it to driving to the corner store to pick up some milk, instead of driving from San Francisco to Los Angeles. It's one of AMD's proposed Heterogeneous System Architecture (HSA) techniques to make the many discrete processors in a system work in tandem to more efficiently share loads."

You're the one making excuses and ignoring facts, using outdated data and bias......

You don't need ESRAM to use HSA/HUMA, you stupid cow. It's funny you keep calling me a lem when you keep on being wrong.

"an AMD spokesperson made inaccurate statements regarding the details of our semi-custom APU architectures."

It means what it says.

And what it does is that it gives you very very high bandwidth output, and read capability from the GPU as well. This is useful because in a lot of cases, especially when we have as large content as we have today and five gigabytes that could potentially be touched to render something, anything that we can move to memory that has a bandwidth that’s on the order of 2 to 10 x faster than the regular system memory is gonna be a huge win.

So this is where you put things that you gonna read a lot like a shadow map, put things that you draw to a lot, like your back buffer… We have resource creation settings that allow you to put things into there, and don’t have to all reside in the ESRAM, there can be pieces of it that can reside in regular memory as well. So for example if I’m a racing game, and I know that the top third of my screen is usually sky and that sky doesn’t get touched very much, great, let’s leave that in regular memory, but with the fast memory down here we’re gonna draw the cars. This works practically for any D3D resource there is, buffers, textures of any flavors… There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that.

Read more: http://wccftech.com/microsoft-xbox-esram-huge-win-explains-reaching-1080p60-fps/#ixzz3t0YUE3lX

Go make excuses elsewhere. Anything that needs fast bandwidth goes on ESRAM, which is basically everything these days except baked crap and non-dynamic shit like DEAD skyboxes.

http://wccftech.com/amd-huma-playstation-4-graphics-boost-xbox-analysis-huma-architecture/

It was damage control after someone at AMD let the cat out of the bag.

“We decided to lock [both iterations] at the same specifications to avoid all of the debates and stuff,” is apparently what senior producer Vincent Pontbriand told Videogamer.com.

NEXT ASSASSIN'S CREED UNITY / 10 OCT 2014

UBISOFT REFUTES ASSASSIN'S CREED UNITY DOWNGRADE CLAIMS

"Let’s be clear up front: Ubisoft does not constrain its games."

BY LUKE KARMALI Ubisoft has released a new statement on Assassin's Creed Unity's technical specs, resolutely denying it would ever hold back one version of the game in order to placate owners of other platforms.

The publisher saw backlash earlier this week when senior producer Vincent Pontbriand revealed that Assassin's Creed Unity will run at 900p and 30 frames per second on PlayStation 4 and Xbox One to "avoid all the debates and stuff." Ubisoft later clarified that the locked resolution and frame rate on both consoles weren't made "to account for any one system."

Everyday damage control is what it was after screwing up. The fact is, you blind and biased lemming, that what AMD describes as HUMA is not the solution on Xbox One. Only when data is on DDR3 is there coherency; when data is on ESRAM, which is ALL THE TIME, there is none, and the CPU has no way to read any changes done there because it has no access to it. Only the GPU can see it.

That is not what AMD describes here.

This is not what the Xbox One has, but it is what the PS4 does have, and Mark Cerny explained it in high detail while MS didn't. This is the same company that bragged about Tiled Resources as if the PS4 didn't have it; I am sure it would have bragged about HUMA too, though not before changing its name like they did with Partially Resident Textures.

That could be a possibility. VR takes more CPU and GPU resources to render two images. That extra CPU core, depending on what is free for the dev to use, can make the difference in stable performance. 6 cores at 1.6 GHz isn't enough to feed its GPU correctly.

lol tomato it's confirmed you're an idiot that ignores facts...... i.e. HSA/HUMA does not require ESRAM.

have a nice day

I ain't even mad, you're the one who got mad at me. Interesting, don't know why you care about what I do, I certainly don't care about you. Never noticed you before.

Also lol with that 'NO ONE is allowed to switch systems unless it's to Playstation' nonsense, get a life. Why are you mad I jumped ship to the PC? I didn't like what Xbox was doing, sorry kid. That's something you're going to have to live with.

lol, at 'anyone who attacks PS is a lem'. Maybe you should gtfo of SWs, this place seems to offend you. You're in the wrong place to call someone out for being a fanboy. Maybe you wanted to go to general discussions or the Playstation forum where everyone holds hands and take wendy walks with each other. I'm a herm and I'll continue to jump on the Playstation and I'll defend the PC, Xbox and Nintendo to do it. And you can just live with that.

I don't understand why you're acting like you're above fanboyism, while in SWs, at the same time as acting like a fanboy, wtf? Take a good hard look at the two words 'SYSTEM WARS', and take your own frustrations and sanctimonious nonsense elsewhere.

Listen, you can continue saying stuff to me, but I have nothing against you, you're just not interesting enough.

Does anybody find it disturbing that the cows are praising an unlocked physical core?!! Apparently it's now worth bragging that your system is now able to use more of the hardware paid for by you the consumer? The whole point of a console is to play games right, what were those other two cores sitting around for? (rhetorical for you warriors out there)

Sony - we're a console for the gamers! Except a significant portion of our CPU, not that, more cores are never helpful, especially when the framerate crashes harder than the ball drop on New Years.

Somebody needs to start a tech based Onion News so we can shame shit like this.

This just in - "Intel locks down an entire core on its Skylake CPUs because it wants users to save power!"

Smh.

Interestingly Intel were once going to release a partially disabled cpu. I don't know if they did it.
