@loco145 said:

@xantufrog: Ah, so you're saying SLI/XF would be transparent to game devs? Sorry, but if it were that easy, Nvidia/AMD would have done it ages ago. Unlike other parallel tasks such as AI, graphics rendering is very sensitive to memory latency. There's a reason they showed their multi-GPU capabilities on a specially coded demo (one that already supports SLI/XF) and not on Assassin's Creed Odyssey.

As far as I know, the technology to make several GPUs act as one for graphics rendering does not exist. And if Google invented it, they failed to mention it at GDC 2019.

You're so busy being upset by this that you couldn't properly read my post.

First, I said "cool, this has Crossfire-like tech. I wonder if that means games coded to support it will lead to a bleed-over, whereby we get better Crossfire support in PC games, since devs are doing it anyway for Google's platform."

Then, I mused "or maybe that won't be the case, because I imagine they'll try to handle parallelization on the server side, so that multiple GPUs can be used without any change to the game code."

I don't know whether that second option is true. I didn't say it was. But for the record, I definitely think it's possible. You're overselling the mystique behind this. As I already said, it's not very difficult for me to submit processes in parallel to run on multiple GPUs. Mind you, I'm doing this at the code level. But what Google could do to get there without explicit SLI/Crossfire support is intercept the render calls and distribute the computations over multiple GPUs. To me, that implies a CPU-intensive task, which is exactly what a server is good at.
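For anyone unfamiliar with what "submitting processes in parallel to multiple GPUs" looks like at the code level, here is a toy sketch of alternate-frame-style scheduling, where frame i goes to GPU i mod N. It is purely illustrative: `render_frame` and `distribute_frames` are hypothetical names, the "GPUs" are just thread-pool workers, and no real GPU or Stadia API is involved. It only works this cleanly because each frame is treated as independent, which is exactly the assumption the latency argument above is about.

```python
from concurrent.futures import ThreadPoolExecutor


def render_frame(frame_index: int, gpu_id: int) -> str:
    # Hypothetical stand-in for a GPU render job; no real GPU API is used.
    return f"frame {frame_index} rendered on gpu {gpu_id}"


def distribute_frames(num_frames: int, num_gpus: int) -> list[str]:
    # Alternate-frame-style assignment: frame i goes to GPU i % num_gpus.
    # One worker per simulated GPU; frames are assumed to be independent.
    with ThreadPoolExecutor(max_workers=num_gpus) as pool:
        futures = [
            pool.submit(render_frame, i, i % num_gpus) for i in range(num_frames)
        ]
        # Collect results in submission order, i.e. display order.
        return [f.result() for f in futures]


if __name__ == "__main__":
    for line in distribute_frames(6, 3):
        print(line)
```

In a real renderer, frames share state (previous-frame buffers for temporal effects, streamed textures), so splitting them across GPUs means moving data between devices, which is where the memory-latency cost comes in.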

The journalists don't know the answer or the limits, so I won't pretend to either. The guy from PCGamesN put it well:

The fact the Stadia system is in the datacentre means it is going to be able to allocate multiple GPUs to a gaming instance is mind-boggling, and assumedly means not just two, but potentially as many GPUs as are needed to offer the level of gaming performance the end user needs for a particular game. Maybe it will just need to use a single one of the 10.7 TFLOP GPUs, or maybe it's greedy and needs three.

Quite how Google and AMD have been able to create this server-side CrossFire analogue is obviously beyond me, or I'd be working at Google and not about to be made redundant in my position as a PC hardware journalist.

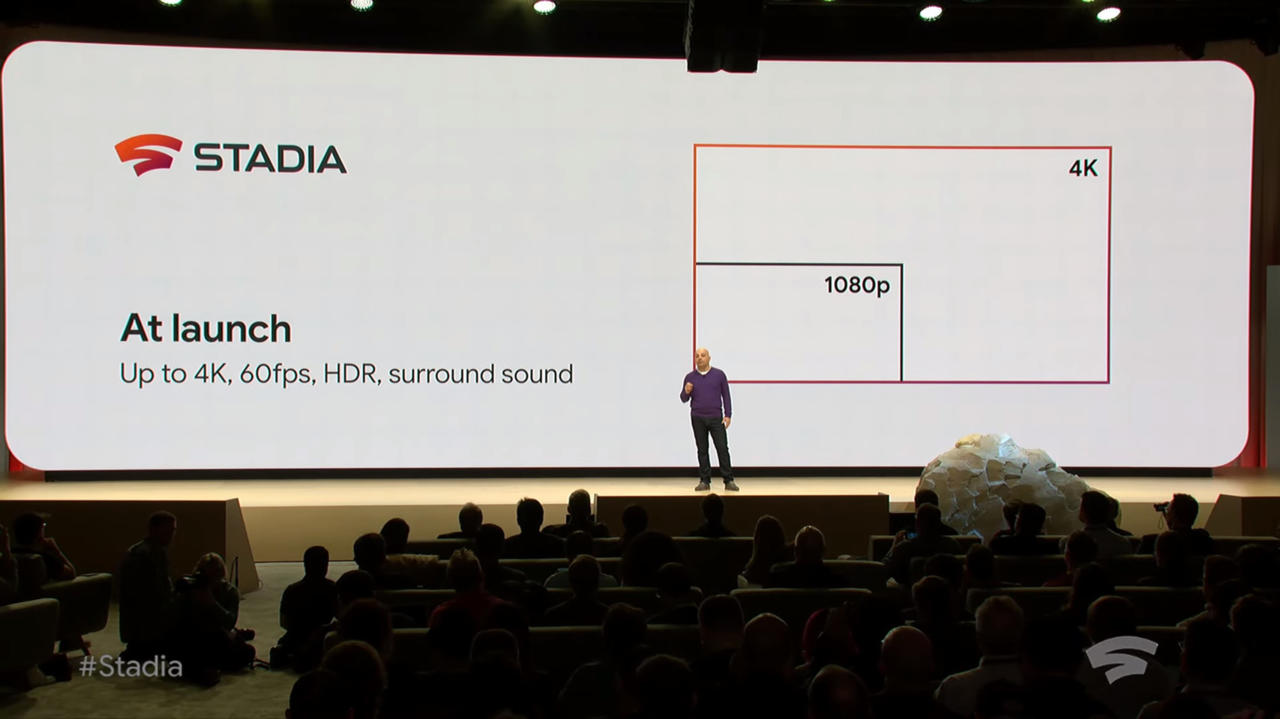

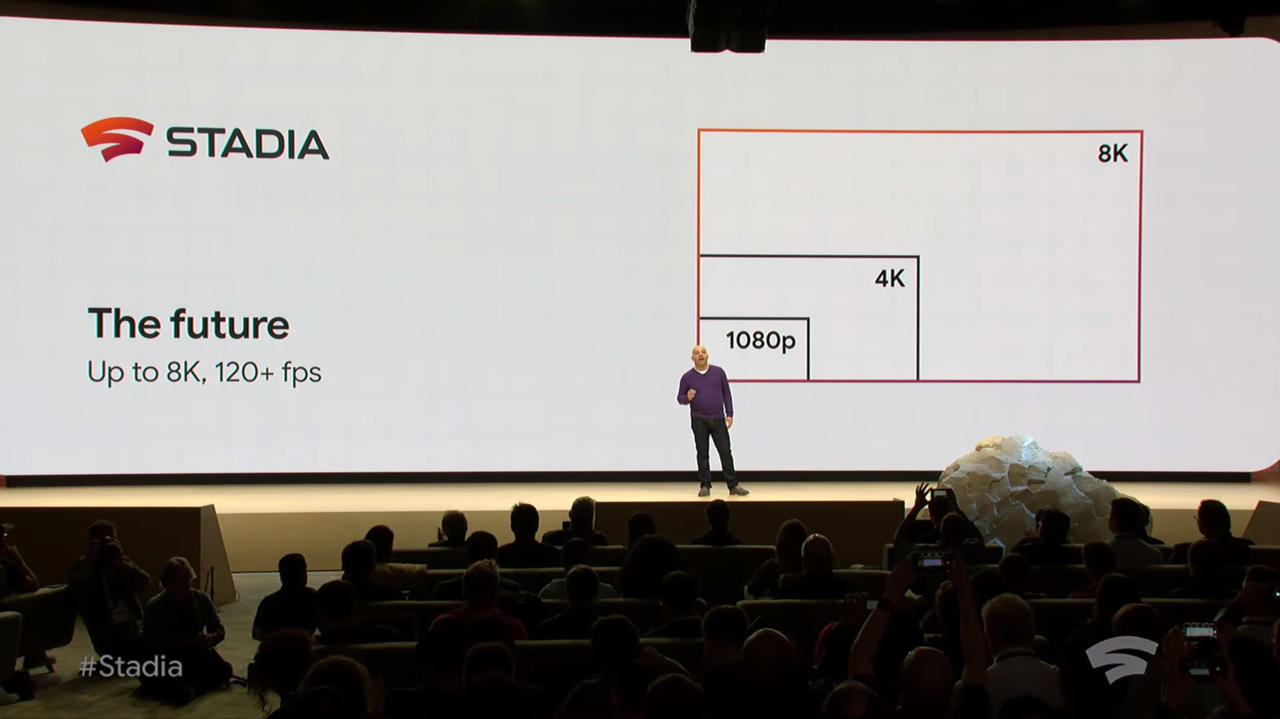

There is simply no way Google would be talking about 4K at 60 fps, or even 8K, if they were limited to a single 10.7 TF GPU. It's just not going to happen.
