You’re interpreting their quote incorrectly

Nope, you are.

@GioVela2010: It doesn’t make sense though. If the Xbox One X were the best-looking version, they’d simply say it’s the best looking without adding "smoothest". The PC version is the smoothest and also the best looking. Otherwise their sentence makes no sense.

If you're one of the few people who have trouble interpreting simple sentences due to a rather unhealthy and long-standing fanboy bias, it's easy to see what DF actually meant by looking at a screenshot of the 1X version and PC version.

At that point, it's quite clear.

For someone who can't see the difference between 30 and 60 fps...

Yes, sentences can be difficult.

It’s easy to see my interpretation is the correct one when they make the following statements about the X1X version.

“HDR is a night and day upgrade to SDR”

“This is how it always should have looked”

“HDR is a truer more correct representation of the artwork”

“SDR suddenly looked dull by comparison”

“Gives consoles a very tangible advantage”

“HDR leaves it with a distinct advantage, over even PC”

Doesn't this all boil down to HDR Witcher 3 being better on Xbox, but not by enough to be better than PC overall?

Those quotes are from a completely separate article. It's easy to see you are being deceptive.

In that video you keep using, he even says it's only the best place to play it on consoles. It happens to have an advantage over PC, but it isn't better than PC overall.

Because it's not. It looks worse. It runs worse.

Yes. He knows this. The video also makes it clear.

It's an advantage, but it's still only the best console version. They say this in the video for Blood and Wine, and in a completely separate article by a different author for the base Witcher 3.

The screenshot and fps differences are more than enough to show what DF means.

It boils down to looking best on X1X, being the smoothest, best looking on PC.

NOT smoothest AND best looking on PC.

What? Lmao, you get worse every gen.

It was funny at first when you said your plasma made the Xbox, Xbox 360, PS2 and PS3 look better than PC. It was kind of funny when you said 30 fps was best. Now this is just sad; you sound like you're having a stroke.

I can't say I read this the same way.

They seem to say HDR is better on Xbox, i.e. better than SDR, even going as far as to say that it's an advantage over PC. Well yes, that seems true: HDR > SDR.

But then they also mention that PC version is better than Xbox version.

So HDR > SDR, but not enough to be better than PC version overall.

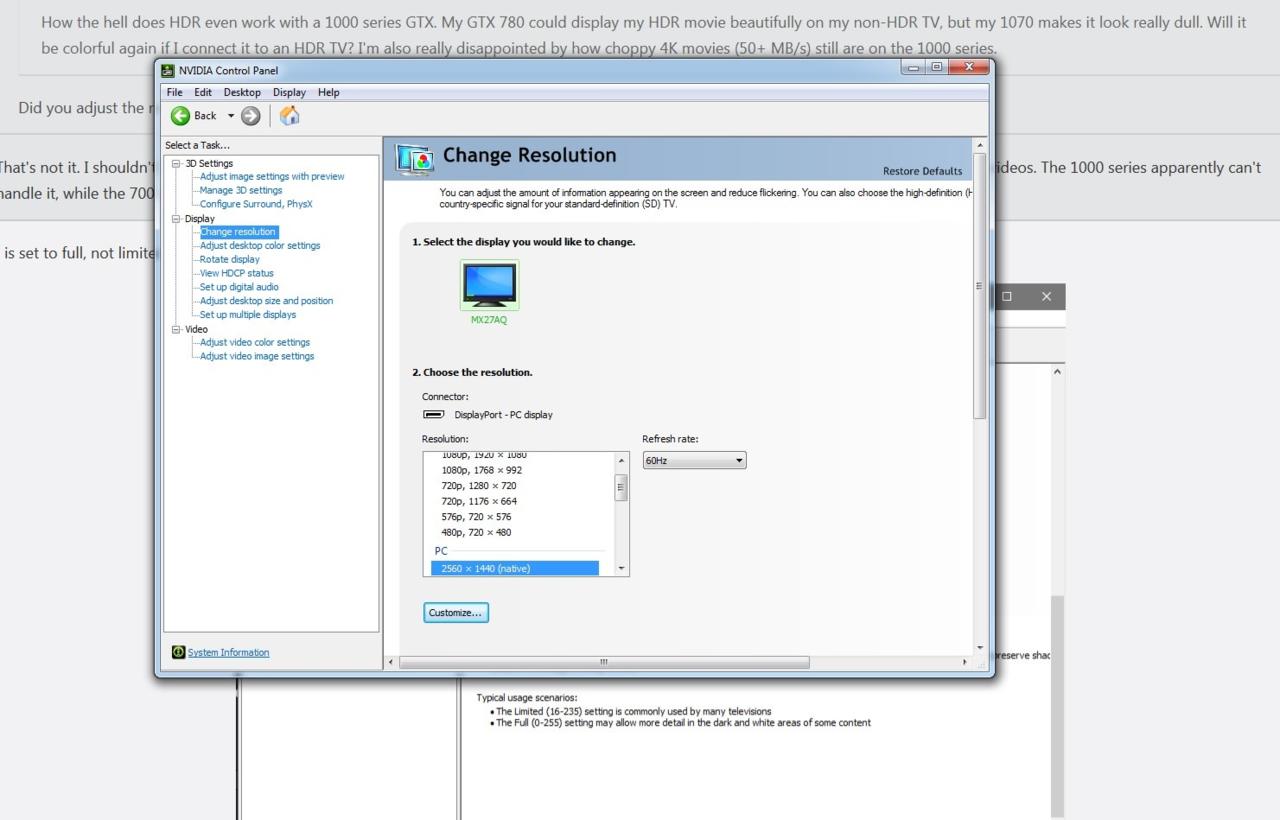

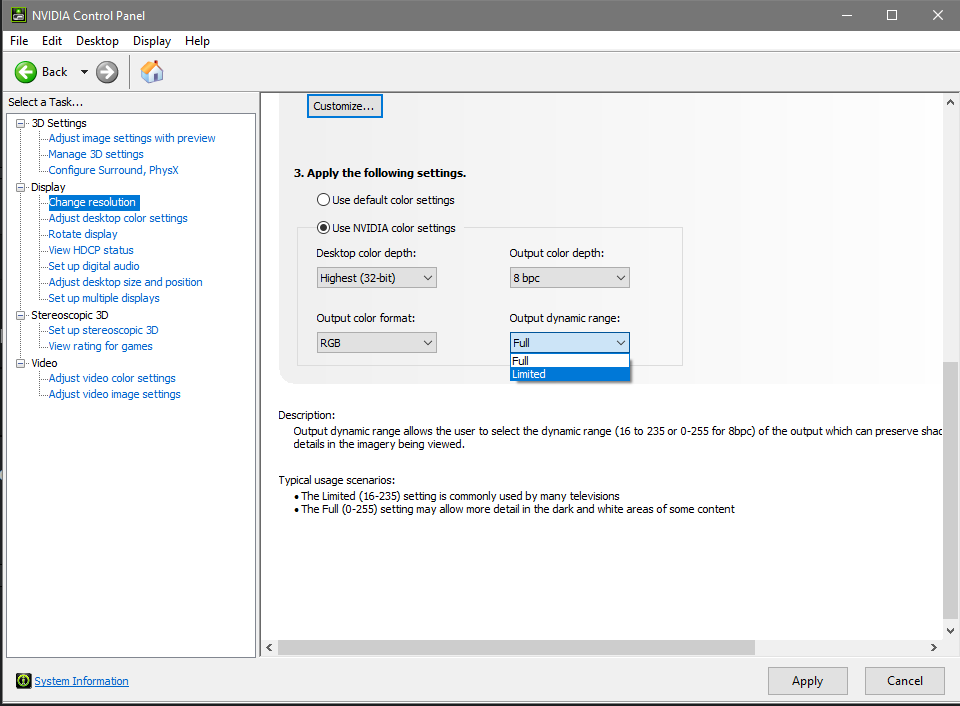

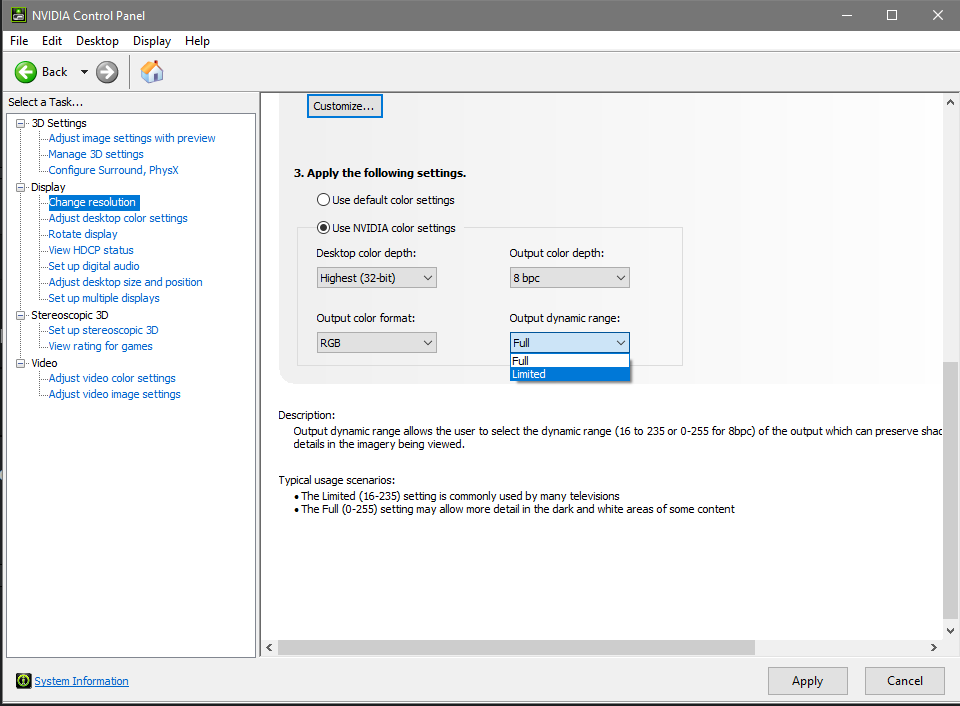

How the hell does HDR even work with a GTX 1000-series card? My GTX 780 could display my HDR movie beautifully on my non-HDR TV, but my 1070 makes it look really dull. Will it be colorful again if I connect it to an HDR TV? I'm also really disappointed by how choppy 4K movies (50+ MB/s) still are on the 1000 series.

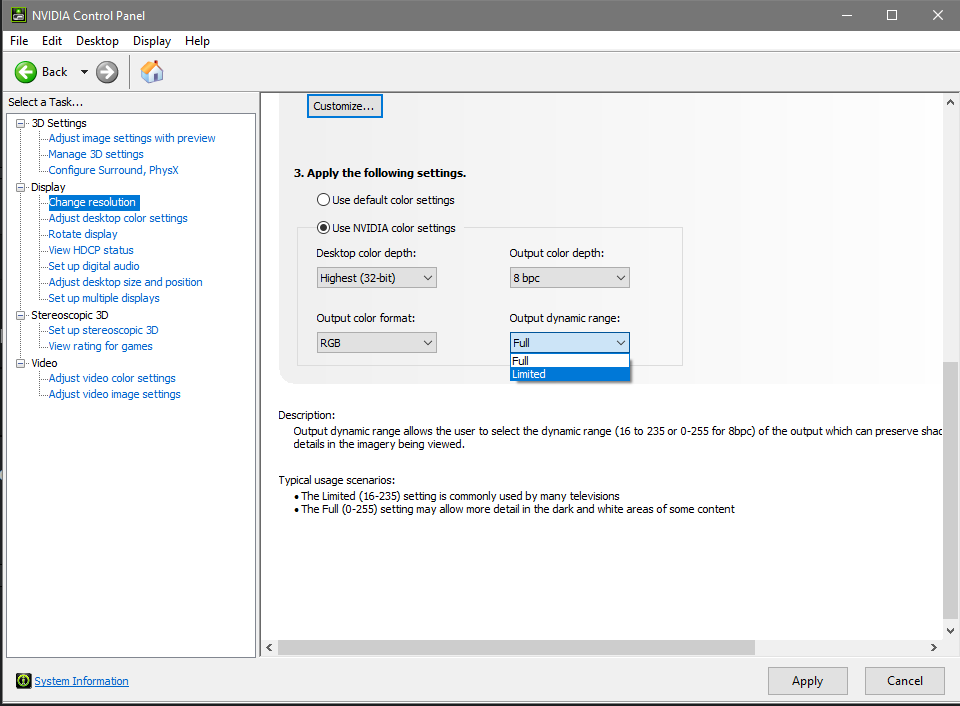

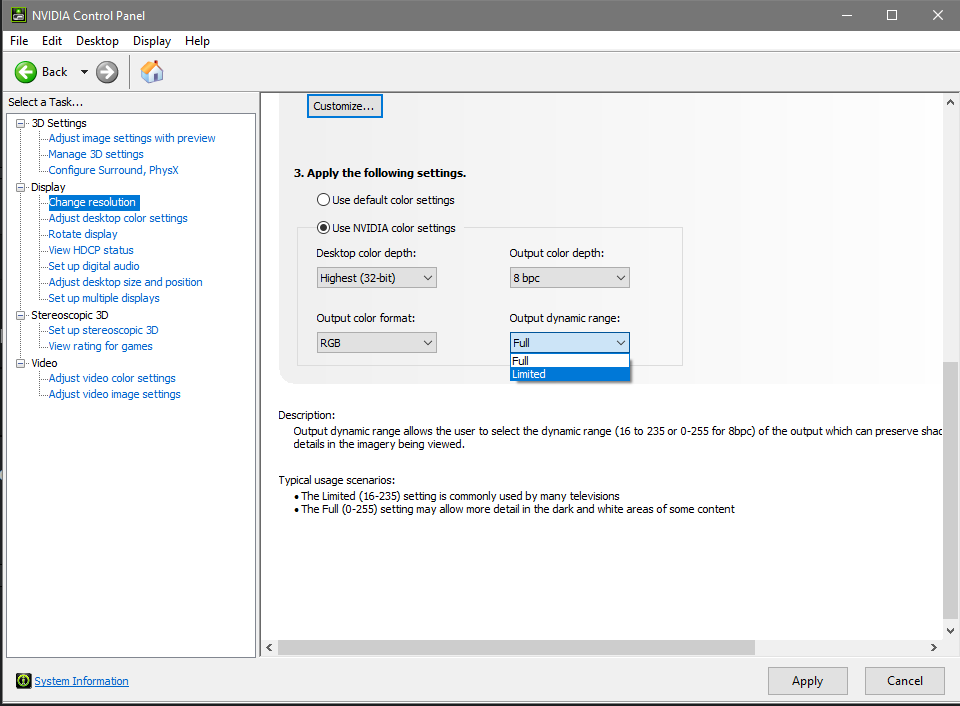

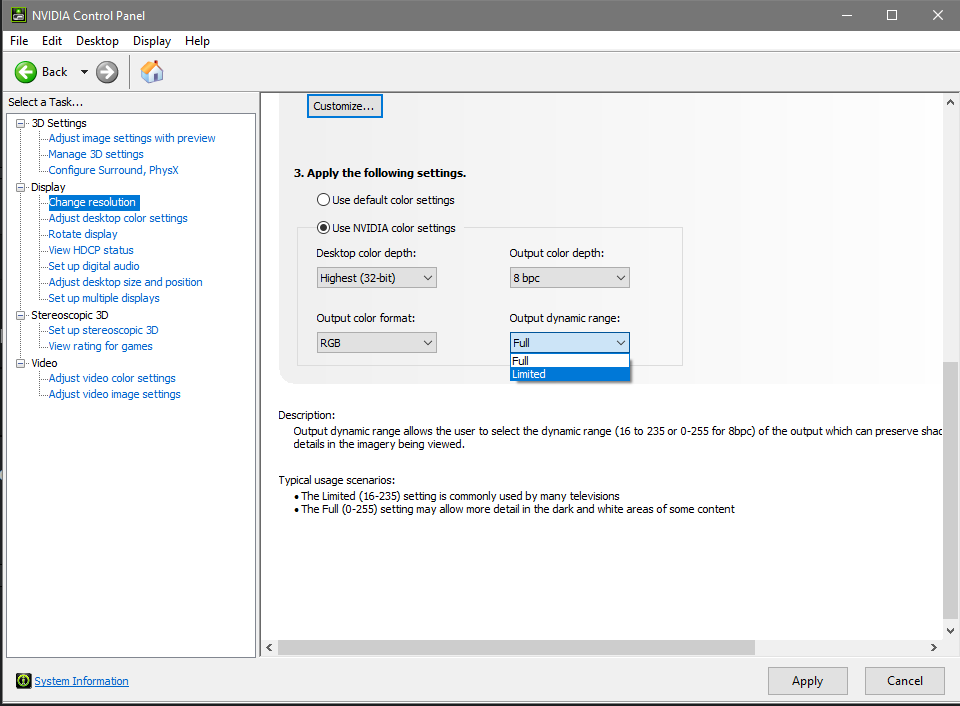

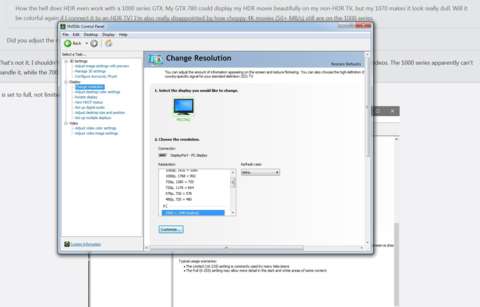

Did you adjust the NVIDIA color settings in the Control Panel?

That's not it. I shouldn't have to change the colors for one video. I know it has SOMETHING to do with BT.2020, the color primaries used for HDR videos. The 1000 series apparently can't handle it, while the 700 series can.
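One plausible explanation for the "dull" look, offered as an assumption rather than a diagnosis of the GTX 1070: if a player hands BT.2020-encoded colors to a BT.709/sRGB display without converting them, every color comes out less saturated than intended. A minimal Python sketch of the conversion step, using the commonly published linear-light BT.2020-to-BT.709 matrix (the inverse of the BT.709-to-BT.2020 matrix in ITU-R BT.2087). The function names and the sample pixel are hypothetical, and a real player would also undo the transfer curve before the matrix and gamut-map instead of clamping:

```python
# Linear-light BT.2020 RGB -> linear-light BT.709 RGB (rounded to 4 decimals).
# This is the inverse of the BT.709 -> BT.2020 matrix in ITU-R BT.2087.
BT2020_TO_BT709 = [
    [1.6605, -0.5876, -0.0728],
    [-0.1246, 1.1329, -0.0083],
    [-0.0182, -0.1006, 1.1187],
]


def bt2020_to_bt709(rgb):
    """Convert one linear BT.2020 RGB triple to linear BT.709 RGB.

    Results outside [0, 1] are colours BT.709 cannot show; this sketch simply
    clamps them, where a real player would gamut-map.
    """
    out = []
    for row in BT2020_TO_BT709:
        value = sum(coeff * channel for coeff, channel in zip(row, rgb))
        out.append(min(max(value, 0.0), 1.0))
    return out


def saturation(rgb):
    """Crude saturation measure: spread between the largest and smallest channel."""
    return max(rgb) - min(rgb)


# A moderately saturated red, stored as BT.2020 values (hypothetical pixel).
pixel_2020 = [0.5, 0.2, 0.1]

# No conversion: the BT.2020 numbers are displayed as if they were BT.709.
unconverted = pixel_2020
# Proper conversion: the same colour re-expressed in BT.709 primaries.
converted = bt2020_to_bt709(pixel_2020)

print("without conversion:", unconverted, round(saturation(unconverted), 3))
print("with conversion:   ", [round(c, 3) for c in converted], round(saturation(converted), 3))
```

The converted pixel has a noticeably larger spread between channels, so skipping the conversion is one way a BT.2020 video can end up looking flat on an SDR screen.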

This is set to full, not limited, right? It should be set to full.
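For reference, "limited" (video) range puts black at code 16 and white at 235 in 8-bit, while "full" range uses 0-255. If one end of the chain sends limited and the other expects full, blacks turn grey and the picture looks washed out. A minimal Python sketch of the 8-bit mapping for the luma/RGB channels; the function names are hypothetical, and chroma uses 16-240 in limited range, which this sketch ignores:

```python
def limited_to_full(code):
    """Expand an 8-bit limited-range code (black 16, white 235) to full range (0-255)."""
    scaled = (code - 16) * 255 / (235 - 16)
    # Codes below 16 or above 235 ("blacker than black" / "whiter than white") are clipped.
    return min(max(round(scaled), 0), 255)


def full_to_limited(code):
    """Compress an 8-bit full-range code (0-255) into limited range (16-235)."""
    return round(16 + code * (235 - 16) / 255)


# Black encoded as limited range is code 16; a full-range display that shows it
# without expanding it first renders dark grey instead of black.
print(full_to_limited(0), limited_to_full(full_to_limited(0)))
```

Expanding code 16 back to 0 is exactly the step that goes missing when the output range and the display's expected range disagree.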

I'll use the argument that console gamers use when talking about PC hardware and how many people actually have high-end PCs.

How many console gamers actually have:

You're looking at $3K for a 1000-nit HDR panel and Atmos speakers.

Gio... I know you have an HDR OLED TV, but which one? HDR is not equal on all TVs. If it's under 700 nits, it's not what those comments of praise are based on; those comments come from 1000-nit panels.

OLED is super and HDR is a game changer in display technology, but it's not mainstream yet. TV tech and monitor tech rarely cross paths, which is why there were no plasma monitors or 144Hz TVs.

Monitors will catch up eventually, but consoles will pretty much always be targeting 30 fps, which is why most PC gamers have no interest in console gaming outside of exclusives.

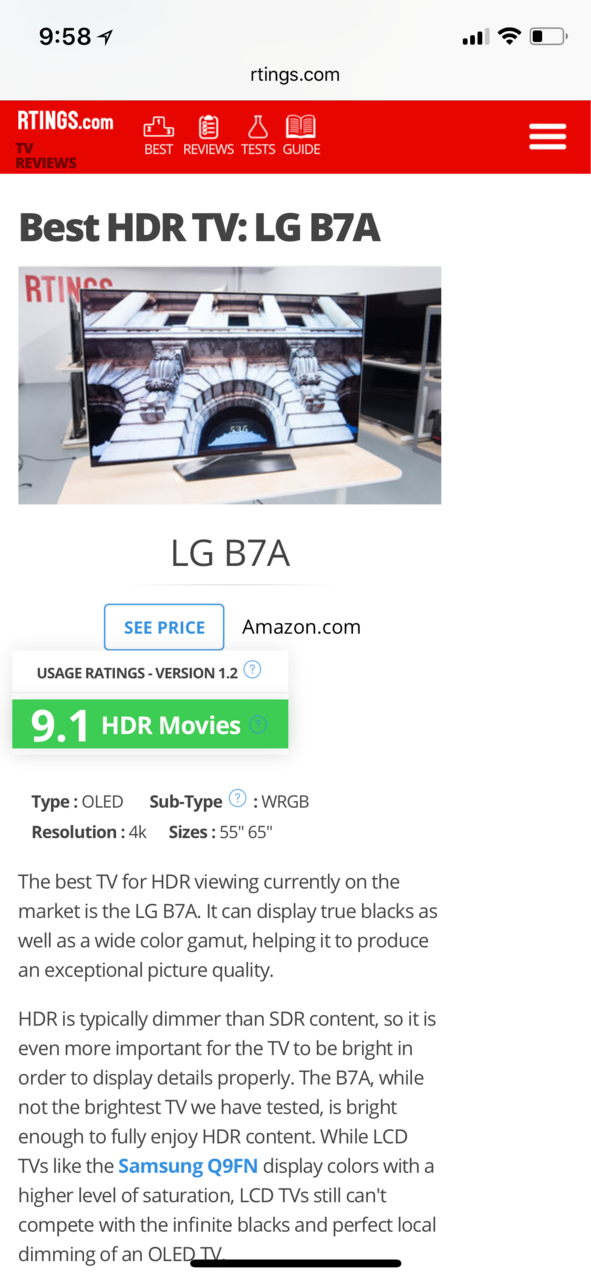

No OLED does 1000 nits, and they’re still considered the industry standard for HDR because the blacks are perfect.

Therefore the range between an OLED’s deepest blacks and brightest whites is actually wider than on any LED/LCD, despite the LED getting brighter.

HDR is overrated. Overpriced TVs with a SweetFX mod built in, LOL. Only noobs can fall for that. On PC I can reach the same effect with ShadderFX mods for free.

Lol no, you can slightly approximate the effect with things like SweetFX or ReShade, but saying you can get the SAME effect just tells us that you have never seen proper HDR before and/or don't know what it does.

I'll take the word of ReShade modders, who say they can replicate HDR easily with ReShade 3, over yours. Not slightly, but very closely.

On a non-HDR display, the final color palette is based on 24 bits, i.e. an 8-bit integer per RGB primary. That's 16,777,216 colors. 64-bit floating-point RGBA framebuffers (four packed 16-bit channels) get truncated to 24-bit integer color.

On an HDR display, the final color palette is based on 30 bits, i.e. 10 bits per RGB primary. That's 1,073,741,824 colors.

GPUs' internal floating-point rendering outpaced 1990s display technology.
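The color counts above are just 2 raised to the total number of bits, and the "truncation" step is a quantization of each floating-point channel to an integer code. A minimal Python sketch (names and sample values are hypothetical; it rounds to the nearest code rather than truncating, and skips the tone mapping a real renderer would apply first) showing why 10 bits per channel can keep two nearby gradient shades apart where 8 bits merges them:

```python
def palette_size(bits_per_channel, channels=3):
    """Distinct colours available with a given integer bit depth per RGB channel."""
    return 2 ** (bits_per_channel * channels)


def quantize(value, bits):
    """Map a float channel in [0, 1] to an n-bit integer code.

    Values above 1.0 are clipped; this sketch rounds to the nearest code and
    does no tone mapping or dithering.
    """
    max_code = 2 ** bits - 1
    clipped = min(max(value, 0.0), 1.0)
    return round(clipped * max_code)


print(palette_size(8))   # 16777216 (24-bit colour)
print(palette_size(10))  # 1073741824 (30-bit colour)

# Two neighbouring shades in a smooth gradient (hypothetical values).
a, b = 0.5000, 0.5015
print(quantize(a, 8), quantize(b, 8))    # same 8-bit code
print(quantize(a, 10), quantize(b, 10))  # different 10-bit codes
```

Merged neighbouring shades are what show up as visible banding in skies and dark gradients on 8-bit output.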

OLED is not the HDR standard; HDR is heavily affected by how many nits the panel can produce.

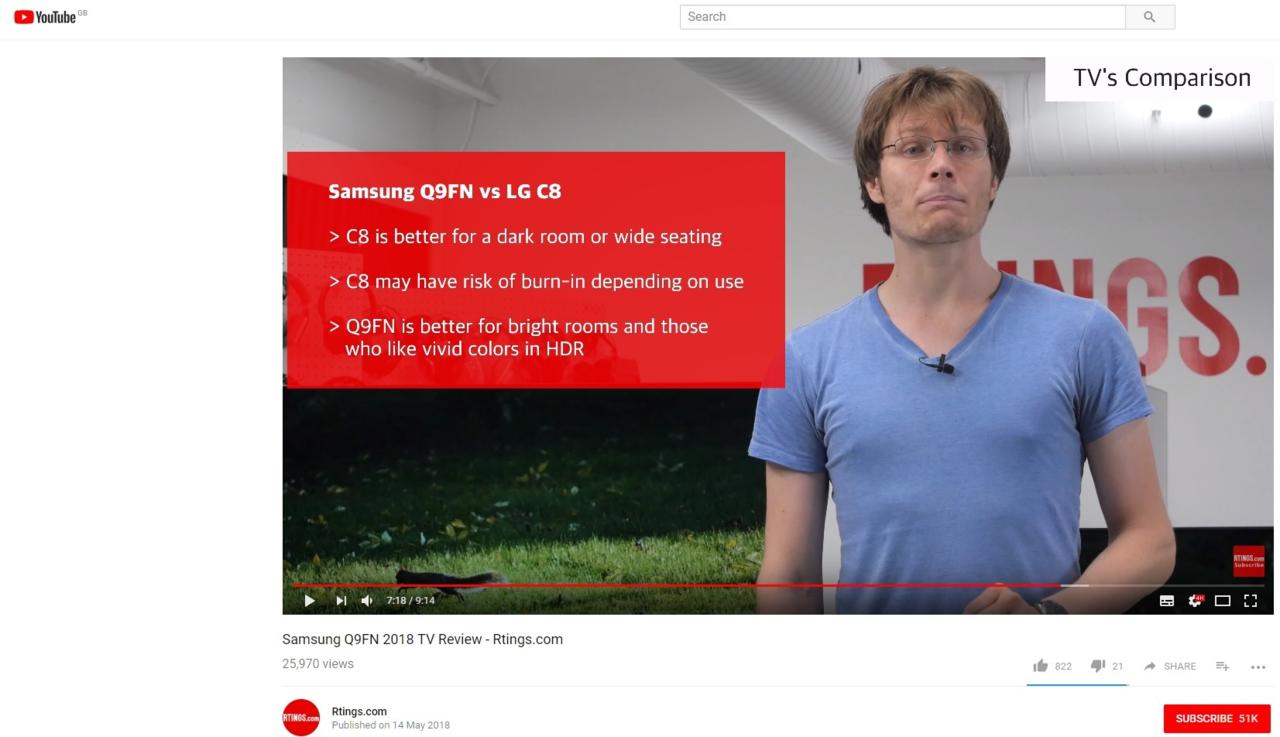

Most, if not all, review sites say that Samsung's QLED panels, the Q9F in particular, have set the bar for HDR quality.

There is no industry standard for HDR because there is no single definitive HDR format. Multiple formats are currently in use, like HDR10, Dolby Vision and HLG, along with the new HDR10+, which closes the gap between Dolby Vision and HDR10. The format war has yet to begin.

All panels have pros and cons. With OLED you gain perfect blacks and better contrast but lack nits; with QLED you get better nit levels and more HDR impact but lose black depth.

According to engineers, OLEDs can reach 1K nits but won't surpass that by much, so future OLEDs will have superb HDR capabilities, BUT QLED can reach 2K nits with ease.

I know this because I am currently looking to buy a new TV and am stuck between the Sony A1 OLED and the Q9F... If you want the best HDR, OLED is not the place to go.
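For context on the nit figures: HDR10 and HDR10+ encode brightness with the SMPTE ST 2084 "PQ" curve, which maps a normalized 0-1 signal to absolute luminance up to 10,000 nits (HLG uses a different, relative curve). A minimal Python sketch of the PQ EOTF and its inverse using the ST 2084 constants; the function names are hypothetical. It is handy for seeing where a 700-nit or 1000-nit panel's peak sits on the signal range:

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_to_nits(signal):
    """PQ EOTF: normalised signal in [0, 1] -> absolute luminance in nits (cd/m^2)."""
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def nits_to_pq(nits):
    """Inverse EOTF: luminance in nits -> normalised PQ signal in [0, 1]."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


for nits in (100, 700, 1000, 2000, 10000):
    print(f"{nits:>5} nits -> PQ signal {nits_to_pq(nits):.3f}")
```

Because PQ is absolute, any part of the signal above a panel's own peak has to be tone-mapped by the TV, and how that is done is up to each manufacturer.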

My monitor from 2014 is 10-bit as well: 1 billion colours. So technically it can replicate it.

https://www.benq.com/en/monitor/designer/pd3200q/specifications.html

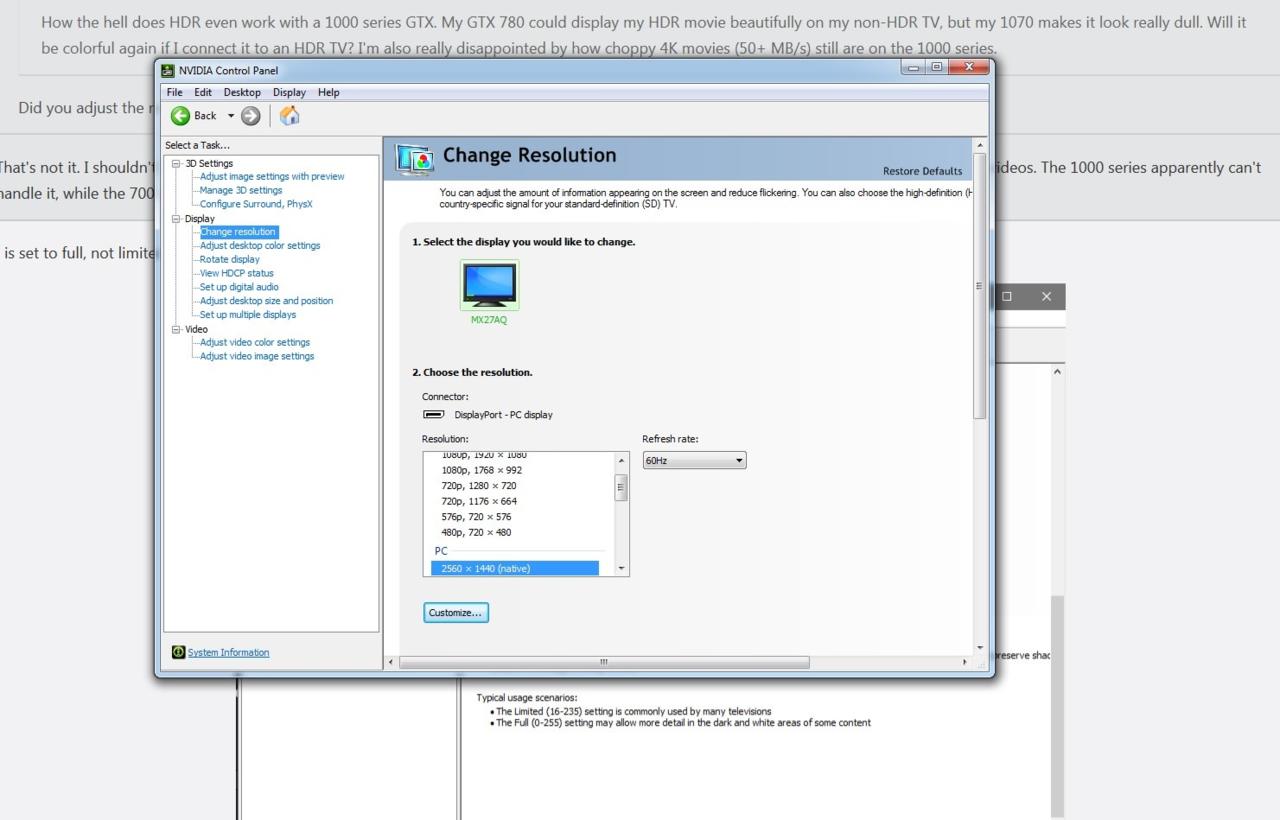

Mine doesn't look like that; it looks like this:

I really hope it looks like that and plays this video without any issue when I have my 4K TV. I also hope it knows not to saturate normal videos.

Try scrolling down in that window. ;)

@DragonfireXZ95: Oh. Mine is already on Full Dynamic Range. I just don't get why my old graphics card didn't have any issues with the color range of that video.

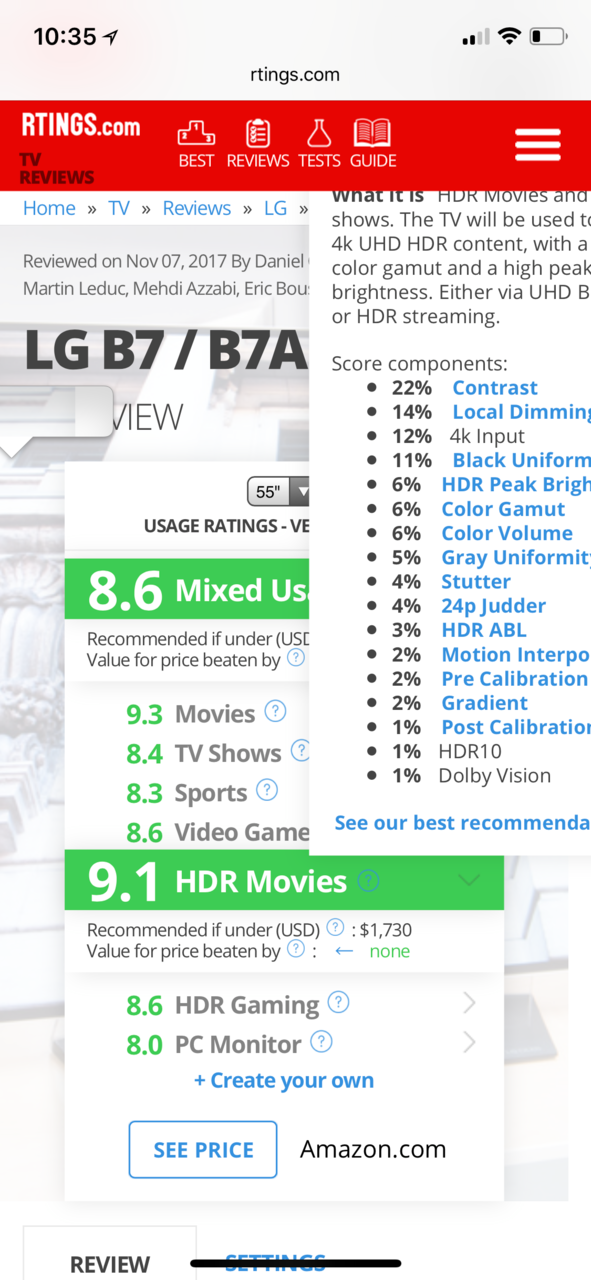

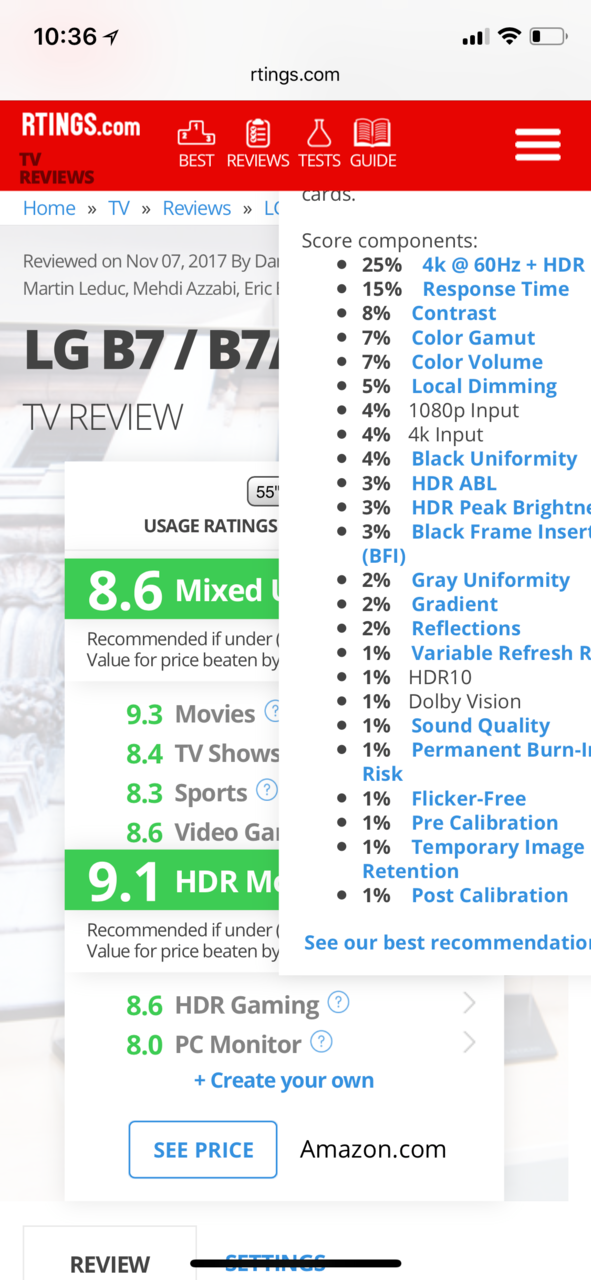

Both Rtings.com and Digital Foundry still think OLED is the definitive HDR experience. The better the contrast ratio, the wider the dynamic range will be.

Rtings.com is the go-to source for HDTV reviews; theirs are by far the most in-depth.
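The contrast-ratio point can be put in numbers: dynamic range in photographic stops is log2(peak luminance / black luminance), so a lower black level widens the range just as a higher peak does, and a true zero black makes the ratio unbounded. A minimal Python sketch; the function names are hypothetical and the panel figures are invented for illustration, not measurements of any real TV:

```python
import math


def contrast_ratio(peak_nits, black_nits):
    """Static contrast ratio; a black level of zero makes it unbounded."""
    return math.inf if black_nits <= 0 else peak_nits / black_nits


def dynamic_range_stops(peak_nits, black_nits):
    """Dynamic range in photographic stops (doublings of light) from black to peak."""
    ratio = contrast_ratio(peak_nits, black_nits)
    return math.inf if math.isinf(ratio) else math.log2(ratio)


# Invented figures for illustration only, not measurements of any real TV.
panels = {
    "bright LCD": (1500.0, 0.05),
    "dimmer LCD": (750.0, 0.05),
    "zero-black panel": (700.0, 0.0),
}
for name, (peak, black) in panels.items():
    print(f"{name}: {contrast_ratio(peak, black):.0f}:1, "
          f"{dynamic_range_stops(peak, black):.1f} stops")
```

Doubling peak brightness adds exactly one stop, whereas pushing the black level toward zero adds stops without limit, which is the trade-off being argued over in this thread.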

Check the DirectX Diagnostic Tool to see if you're getting true HDR.

https://support.microsoft.com/en-us/help/4040263/windows-10-hdr-advanced-color-settings

I said earlier that my monitor doesn't have HDR. Still, this BT.2020 video looked beautiful on my GTX 780.

@tryit: Oh, c'mon, really? Because PC gamers spend most of their time showing off their rigs and talking about the tech of the games, their settings, resolution, fps. C'mon, if there's one thing hermits do, it's being bothered by technical details.

That was true in 2008; now it's all about the games, because we have more great titles now than ever before.

@tryit: No, it's not. From here to Reddit, every PC thread becomes a spec and benchmark fest. This thread has it, and every other thread on this forum has it too.

Fair enough, I need to do a better job of bringing those PC gamers up to modern times.

Some of them are like those TV viewers who still haven't tried Netflix.

My rig isn't anything to brag about, but gaming at 1440p/60fps+ is all I really need. It's high-end, but I rarely brag about it in System Wars.

Gotta congratulate Gio for making hermits go down this spiral, really amusing to see.

Which spiral is that?

Specs are fun.

Mine:

LG 4K OLED B7A

Dynaudio Excite LCR Speakers x3

Aperion Surround Speakers x2

Polk VT60 Ceiling Speakers x4

Yamaha RX-A3070

Ultimax UM-18 Subwoofer + 2400W Amp

Ryzen 2700X 8-core/16-thread CPU

NZXT X72 CPU Cooler (Push/Pull)

EVGA GTX 1080 FTW2

Asrock X470 AC/SLI

Corsair Air 540 Case

Corsair MP500 M.2 SSD 480GB

Kingston A1000 M.2 SSD 960GB

Team Dark Pro DDR4 3200 14CL

Corsair HX750w PSU

NZXT Sentry Fan Controller

Nice.

You get to play the better version of 99% of video games. Substantially so for anything involving online multiplayer. Fun indeed.

Is Gio still arguing over that TW3 quote?

Good lord.

Doesn't have any other straws to grasp at.

@GioVela2010: You forgot to mention your $3,000 AV cabinet.

2k

@GioVela2010: Gio... or anyone else who has done some comparisons:

What are your favorite HDR implementations? Games/movies/TV shows that you think provide the most visual benefit over their non-HDR counterparts.

I hear a lot of people say FFXV is a good one. I also see people saying The Witness is another one.

It’s basically for single-player games, and maybe VR in the future. I hate keyboard and mouse, but I know I’d get destroyed using a joypad in multiplayer games against people using KB+mouse. Plus, all my friends and family are on XBL.

So the X1X gets the multiplayer games and the games with HDR or Atmos.

Sure we get HDR; we had it back in Half-Life 2: Lost Coast :P

In all seriousness, I like how it's always a guy who hates on PC complaining about the lack of _______ on PC.

Meanwhile, all the legit PC game players are too busy enjoying everything else that is awesome in PC gaming.
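The Lost Coast joke points at a real distinction: that game's "HDR" was HDR rendering, where lighting is computed in a high-range floating-point buffer and then tone-mapped down to an ordinary SDR signal, whereas the HDR in this thread is about the display receiving a wider signal. A minimal Python sketch of tone mapping, using the simple Reinhard operator L/(1+L) as a stand-in; this is not a claim about Valve's actual tone mapper, and the names and scene values are hypothetical:

```python
def reinhard(luminance, exposure=1.0):
    """Reinhard tone-mapping operator: compress unbounded luminance into [0, 1)."""
    scaled = luminance * exposure
    return scaled / (1.0 + scaled)


def to_8bit(value):
    """Quantise a value to an 8-bit SDR code, clipping anything outside [0, 1]."""
    return round(min(max(value, 0.0), 1.0) * 255)


# Hypothetical relative scene luminances: shadow, mid-grey, lamp, sun glint.
scene = [0.05, 0.18, 4.0, 50.0]

clipped = [to_8bit(v) for v in scene]                # no tone mapping
tone_mapped = [to_8bit(reinhard(v)) for v in scene]  # Reinhard first, then quantise
print("clipped:    ", clipped)
print("tone mapped:", tone_mapped)
```

Without tone mapping, the lamp and the sun glint both clip to 255; with it, they land on different codes, which is how HDR rendering kept highlight detail on SDR screens.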

I wasn’t aware Richard Leadbetter from Digital Foundry hates PC

Was referring to you, actually, GioTrolla, and how you take things out of context. More specifically, how you take an objective article written by a journalist and twist it into subjective, cynical opinion.

@GioVela2010: Really? Where do they say that?

As far as everyone in the industry is concerned, there are pros and cons to every TV panel technology; there is no definitive winner, especially with HDR. Even the site you prefer lists pros and cons for each TV, with the Q9F and the new Q9FN offering better brightness and colors in HDR than an LG C8 OLED panel. Also, for motion, e.g. for someone who watches a lot of sport or plays a lot of fast-paced games, the Sony A1 destroys LG OLED motion processing.

This is essentially all I'm getting at: pros and cons. Just like monitor technologies, TV panels offer pros and cons; there is no definitive winner, and no one who reviews TVs ever says there is.

So I get that you love your TV and OLED technology, but stop; let's not start a panel fanboy war.

Most reviewers say the Q9FN's local dimming makes it near impossible to tell it apart from OLED unless you're right up against the screen looking for it or you're in a pitch-black room. The only issue is that the Q9FN is aggressive with local dimming, and in some scenes it loses small bright highlights against a black background, like a night sky or space scenes.

Sorry, but Rtings still has OLED as #1 for HDR.

Brightness only accounts for 6% of their HDR Movies score and 3% of their HDR Gaming score.

In both instances, contrast is much more important than brightness.
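Assuming the overall score is a plain weighted sum of category scores (an assumption; the post only gives the brightness weights), a category's weight caps how much it can move the result. A minimal Python sketch with a hypothetical 2-point brightness difference on an assumed 0-10 scale; `score_change` is a hypothetical helper:

```python
def score_change(weight, category_delta):
    """Change in a weighted-sum overall score when one category score changes."""
    return weight * category_delta


BRIGHTNESS_WEIGHT_HDR_MOVIES = 0.06  # figure quoted in the post above
BRIGHTNESS_WEIGHT_HDR_GAMING = 0.03  # figure quoted in the post above

# Hypothetical: one TV scores 2 points higher on brightness (0-10 scale assumed).
print(round(score_change(BRIGHTNESS_WEIGHT_HDR_MOVIES, 2.0), 2))  # 0.12
print(round(score_change(BRIGHTNESS_WEIGHT_HDR_GAMING, 2.0), 2))  # 0.06
```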
