I'm trying to find out how much difference there will actually be between the two. I tried reading the forums and other places, but it's a sh!t show tbh when it comes to comparing them.

So how much do CUs matter, or is it clock speed, or a combo of both?

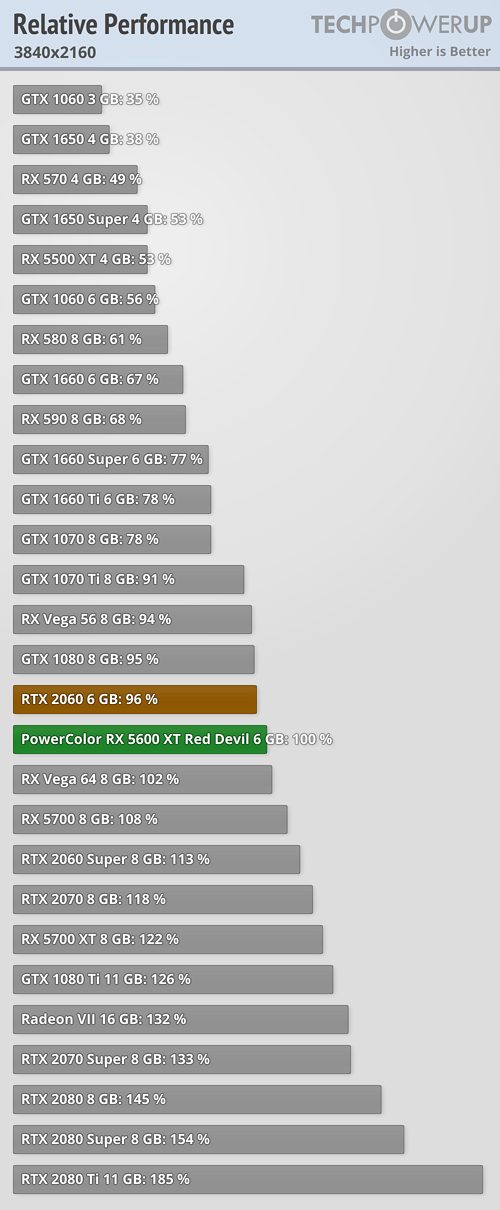

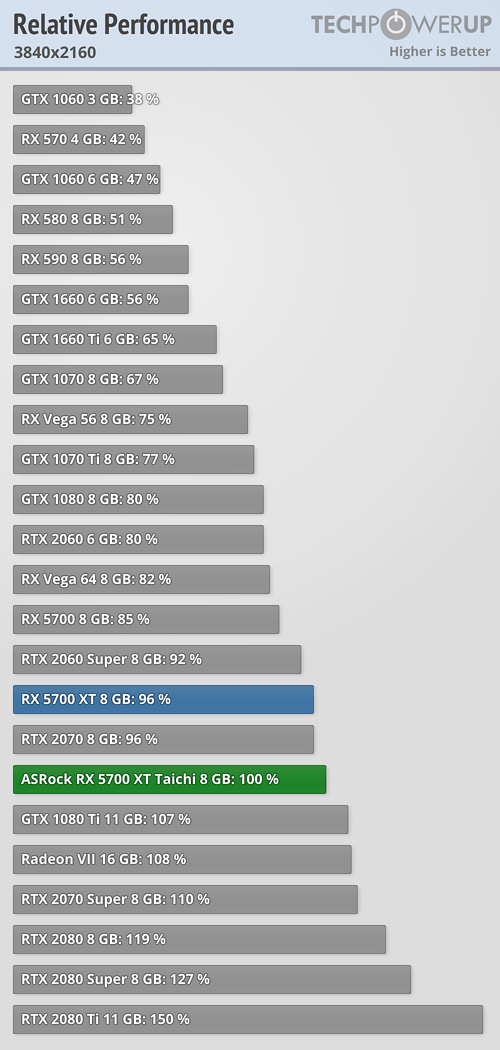

Clock speed seems to be an important factor when comparing older GPUs with similar CU counts. Take the 5700 and its XT version: there's a 10 fps gain (at 1440p) for only 4 more CUs and a 100 MHz clock increase, which works out to about a 1.5 TFLOP increase. That doesn't seem like a whole lot, and it's almost the same difference as between the Xbox and PS5.
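For anyone wondering where the TFLOP numbers come from, here's a rough sketch of the usual paper calculation: CUs times shaders per CU times operations per clock times clock speed. The 64 shaders per CU and 2 FLOPs per clock figures are the commonly quoted ones for these AMD GPUs, and the console CU counts and clocks below are my assumptions taken from the public spec announcements, not from the images in this post. The function name `peak_tflops` is just made up for illustration.

```python
# Theoretical peak FP32 throughput, as usually quoted on spec sheets.
# Assumption: 64 shader lanes per CU, 2 FLOPs per lane per clock
# (one fused multiply-add counts as two operations).
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Return the paper TFLOPs figure for a given CU count and clock in GHz."""
    gflops = compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz
    return gflops / 1000.0


# Assumed console figures (publicly announced peak clocks, not measured).
ps5 = peak_tflops(36, 2.23)
xsx = peak_tflops(52, 1.825)

print(f"PS5:        {ps5:.2f} TFLOPs")
print(f"Xbox:       {xsx:.2f} TFLOPs")
print(f"Difference: {xsx - ps5:.2f} TFLOPs")

# On paper, CUs and clock trade off one-for-one:
# 36 CUs at 2.0 GHz gives the same figure as 40 CUs at 1.8 GHz.
same_a = peak_tflops(36, 2.0)
same_b = peak_tflops(40, 1.8)
```

So on paper it really is a combo: the formula only sees CUs multiplied by clock. It says nothing about memory bandwidth, caches, or how well a game keeps all the CUs busy, which is probably why people say FLOPs alone don't settle it.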

Also, I've heard FLOPs don't necessarily matter either.

The CPU difference between the two is 0.3 GHz. In my experience, overclocking doesn't give a huge gain, but it's still noticeable nonetheless.

Then Cerny said that they removed the bottlenecks as much as they could. Does this mean that the motherboard clock speed is increased as well, which could improve the system as a whole?

Then there's programming. Won't that make a huge difference?

Or is it all just a case of waiting until release, then comparing and judging on pure performance?

*Still pretty amateur at this level, so non-trolling answers are appreciated.
