Please no fanboys.

Just speak sense. No fanboy logic.

Be open-minded and try not to be biased toward the PS4 or the Xbox One.

Thanks

Why did Sony go with GDDR5 RAM, and why did MS go with DDR3?

Both companies are rich and far from stupid, and a lot of money is at stake, so why make these choices?

The following leaves the Xbox One's ESRAM out of the picture.

A computer (server or console) doesn't need a large pool of GDDR5 memory to perform at a high level.

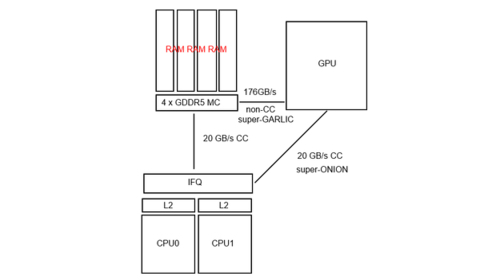

The only reason Sony went with one unified pool of GDDR5 memory is that the CPU and GPU sit on the same package and share a single memory controller.

MS has the same dilemma as Sony: one memory controller limits you to one type of memory, since each memory type needs its own controller.

So you either go with cheaper DDR3 or with more expensive GDDR5.

The problem with using only DDR3 is that your GPU needs faster memory to do its work. Sony solved this problem by going all GDDR5. 4 GB would have been the economical choice, but they wanted 8 GB, mainly with system functions in mind.

MS decided to solve the problem another way: go with 8 GB of the more economical (and easily available) memory, and deal with the bandwidth problem using DMEs (Data Move Engines) and a fast L4 cache. The DMEs plus the L4 cache act like an HOV lane reserved for GPU work.
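To picture the "HOV lane" idea, here is a toy sketch in Python. It is only an illustration under my own assumptions, not how either console actually manages memory: it assumes a 32 MiB fast pool (the size of the Xbox One's ESRAM), uncompressed 1080p render targets at 4 bytes per pixel, and a hypothetical greedy `place` function that puts the most important GPU buffers in the fast lane until it's full and sends everything else to main memory.

```python
# Toy model of a small fast memory pool acting as an "HOV lane" for GPU buffers.
# Assumptions (hypothetical, not from the post): a 32 MiB fast pool,
# 1080p targets at 4 bytes/pixel, and greedy placement in priority order.

FAST_POOL_BYTES = 32 * 1024 * 1024  # assumed fast-pool size (32 MiB)


def target_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size in bytes of one uncompressed render target."""
    return width * height * bytes_per_pixel


def place(buffers: list[tuple[str, int]]) -> tuple[list[str], list[str]]:
    """Greedily put (name, size) buffers into the fast pool in the given order.
    Anything that doesn't fit falls back to the slower main memory."""
    free = FAST_POOL_BYTES
    fast, slow = [], []
    for name, size in buffers:
        if size <= free:
            fast.append(name)
            free -= size
        else:
            slow.append(name)
    return fast, slow


rt = target_bytes(1920, 1080)  # 8,294,400 bytes, about 7.9 MiB per target
buffers = [
    ("color", rt),
    ("depth", rt),
    ("normals", rt),
    ("albedo", rt),
    ("textures", 512 * 1024 * 1024),  # hypothetical 512 MiB of textures
]
fast, slow = place(buffers)
print(fast)  # ['color', 'depth', 'normals', 'albedo']
print(slow)  # ['textures']
```

The point is the trade-off: a few bandwidth-hungry 1080p buffers fit in the fast lane, but bulky data like textures still has to live in the slower DDR3.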

So we have two companies that faced the same problem but chose to tackle it in different ways achieving the same results.

Sony chose the brute force method and MS chose to make a few adjustments.

Sony went with 8 GB mainly for system functions; compared with 4 GB, the extra memory will have a negligible effect on graphics.

PS4 graphics would have been fine with 4 GB of memory, but with everything they want to do with the OS (new dashboard, sharing, Gaikai, etc.), more memory was necessary.

I personally think GDDR5 is, in a way, a bit of a bad choice. The PS4 suffers somewhat from GDDR5 latency, although Mark Cerny said in an article that it's "NOT MUCH OF A FACTOR". But in that article he only talks about the GPU, for which higher latency isn't a problem at all. What I was wondering is why he didn't talk about the CPU. Logically, the OS and other CPU work might be affected by the latency.

The 8 GB of GDDR5 was included for more storage space. The PS4's GPU won't come close to using half of that; the other 4 GB is for game data and OS functions. You'll have game logic and data just sitting in half of your expensive memory while your GPU barely needs 3 GB of it, and I'm being generous. GDDR5 is basically treated like cache memory: whatever goes in there isn't meant to sit there for very long.

It's really like buying a 1 TB SSD and storing mostly family pictures and documents on it.

But it still fixes the problem.

I don't want to sound biased at all. I love my PS4. But I think MS came up with the better idea: an efficient, well-designed solution to the same problem, engineering high-speed traffic lanes to its memory.

Bringing ESRAM into the Xbox One adds a few complications, not for the hardware but for developers.

The ESRAM makes things a bit harder for the devs.

Reportedly, a test showed that the ESRAM does boost the Xbox One's bandwidth to 192 GB/s.

I'm not sure what the PS4's bandwidth is, but I think 192 GB/s is still fast even if the PS4's is higher.
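For a rough comparison, peak bandwidth is just the bus width in bytes times the number of transfers per second. The sketch below works that out in Python. The bus widths and transfer rates are the commonly reported specs (PS4: 256-bit GDDR5 at 5500 MT/s; Xbox One: 256-bit DDR3-2133), which I'm supplying as assumptions; the 192 GB/s ESRAM number is the one reported above.

```python
# Peak memory bandwidth = bytes moved per transfer x transfers per second.
# Assumed specs (commonly reported, not from the post):
#   PS4:      256-bit GDDR5 bus at 5500 MT/s
#   Xbox One: 256-bit DDR3-2133 bus at 2133 MT/s


def peak_bandwidth_gbs(bus_width_bits: int, mega_transfers_per_sec: float) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_sec / 1000  # MB/s -> GB/s


ps4_gddr5 = peak_bandwidth_gbs(256, 5500)
xb1_ddr3 = peak_bandwidth_gbs(256, 2133)
esram_quoted = 192.0  # GB/s, the reported ESRAM figure

print(f"PS4 GDDR5:      {ps4_gddr5:.1f} GB/s")  # 176.0
print(f"Xbox One DDR3:  {xb1_ddr3:.1f} GB/s")   # 68.3
print(f"ESRAM (quoted): {esram_quoted:.1f} GB/s")
```

Keep in mind these are theoretical peaks, and the ESRAM is small (32 MB), so its bandwidth only helps with whatever fits in it. Everything else runs at DDR3 speed.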

Apparently, some game developers who had access to the console before launch claimed that the Xbox One's bandwidth is higher than even MS expected.

But GDDR5 is still very strong. I wonder whether DDR3 plus ESRAM actually comes close to it.
