@elessarGObonzo said:

@KHAndAnime: I just played through the first few hours and then the last few hours of Assassins of Kings in the couple of days before Wild Hunt came out. I can guarantee Wild Hunt looks a bit better @ 1080p with everything turned all the way up. Not as much better as people were thinking it would, but still a bit better. Besides that, there's more going on in any given scene and a much greater draw distance in Wild Hunt.

I'm not sure how it compares to The Witcher 2 because I don't have it installed and I haven't played it in a long time. I briefly played The Witcher 3, and this screenshot pretty much sums up my graphical complaints about the game (yes, max settings).

Ten seconds in, the game is throwing early PS3-quality assets at you... and you don't have to look hard for them (a cutscene takes place at this exact spot, facing the camera toward these exact leaves).

The best part of the graphics must be what... the character models, maybe? If that's the case, and the game is supposed to look great, why do random NPC slaves in Mordor look way more detailed?

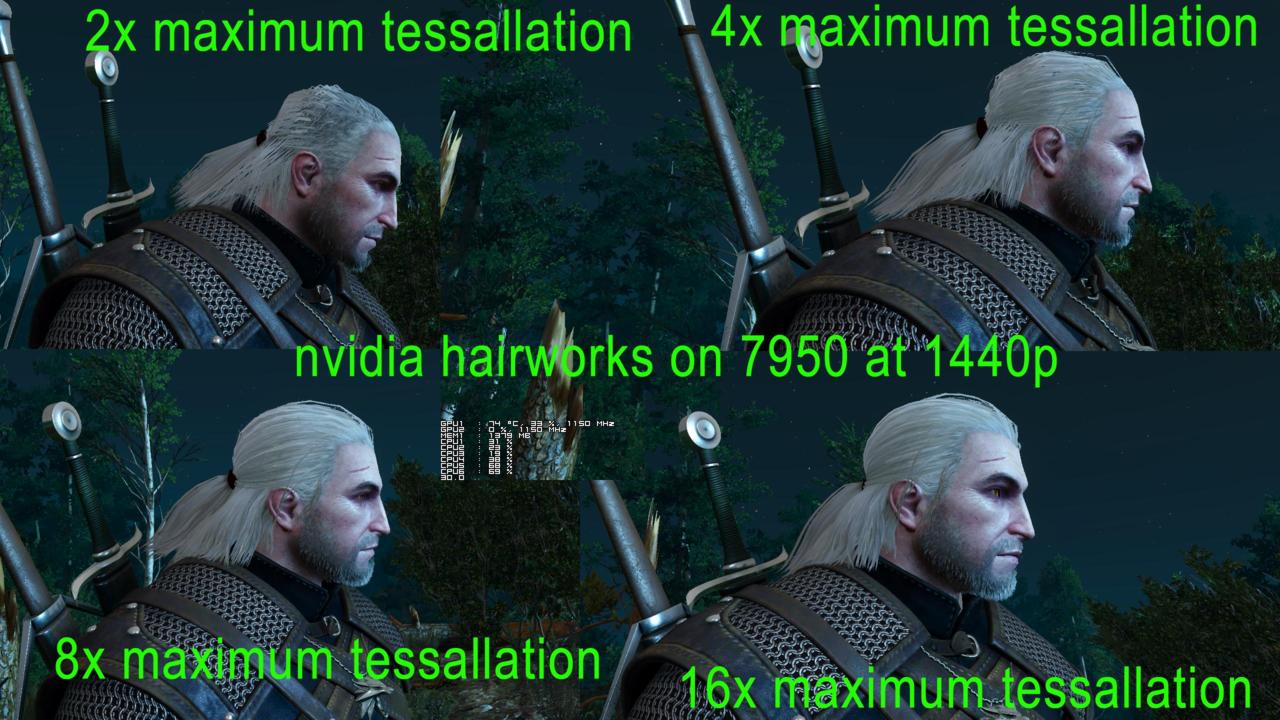

Here, you can see the texture of their skin revealed in the light, as well as their veins, their bone outlines, the freckles on their shoulders, etc. In comparison, Geralt in that previous screenshot looks rather flat. His textures are really soft and very blurry in spots (like the skin on his arm), and the different pieces of clothing aren't casting any shadows on each other (like the necklace or the rope wrapped around Geralt's chest piece). That sort of stuff sticks out to me like a sore thumb, and I feel like you must have quite the biased eye to think The Witcher 3 isn't inconsistent-looking graphically. The game's style looks so splotchy, blurry, aliased, and distorted.

Comparing those two screenshots, in the former I feel like I'm looking at a game that was released very early in a generation, while in the latter it feels like I'm looking at a game that was released much later in that generation. The smooth, extra-detailed lighting, tessellation, and variety of shaders really bring the Mordor protagonist to life. Unlike Geralt's model, where there's a distinct lack of shadows, the Mordor dude has a very polished look. These same techniques are why I think even the NPCs in Mordor have the upper hand over Geralt graphically.

I'm not saying The Witcher 3 always looks as bad as that screenshot and never looks better than that (or than Mordor). But from the couple of hours I spent with the game, I couldn't help but feel that it often looks as bad as that screenshot. In many circumstances, the game's assets come together very well. In other circumstances, they don't mix well and it looks like something is missing (particularly in the lighting department), as in the screenshot I used.

And I haven't even gotten started on the game's animations and physics. They must've hired the folks from Bethesda or something, because they perfectly nailed the awkward ragdoll where NPCs randomly fly up into the air after you kill them and then float to the ground with no sense of acceleration. They also nailed many different awkward animations, some of my favorites being the spastic, clunky jump and the climb animation (usually involving Geralt massively clipping through the edge he climbs).
