The Witcher 3 PC Ultra Runs At 1080p 60FPS On GTX 980

Everyone's like "I wonder if my 970 will run it on Ultra" and I'm just sitting here hoping my 650 Ti Boost will be able to have some things on High.

Lol check my Radeon 5770. :D

Kinda sucks that I really can't see myself wasting 800 bucks on a new computer, quite frankly not even 400 bucks on a new console. Just gonna truck on with my 6-year-old rig till she burns out. Luckily I only have a 1280x1024 monitor, so I can still play most games that aren't top of the line pretty decently.

Gaming is an expensive hobby lol, and I'm really not into it enough right now to see myself wasting that much money on anything.

I can think of a lot more expensive hobbies and quicker ways to blow through money. I was in Vegas recently. In one night... nice steak dinner, limo, bottle service at the club... I could have bought a nice video card with the money I spent in one night. I think gaming is very affordable. The entertainment per dollar is unmatched.

I went on a 3-week backpacking trip for the money it would cost me to build a new PC :)

I can relate, but one thing that has really piqued my interest in hardware upgrades is all the new VR stuff that's soon to be released.

Even then it may not be worth it though. Hard to tell.

Day one patch added higher settings for PC.

High settings are now the old Ultra, and the new Ultra is a bump up.

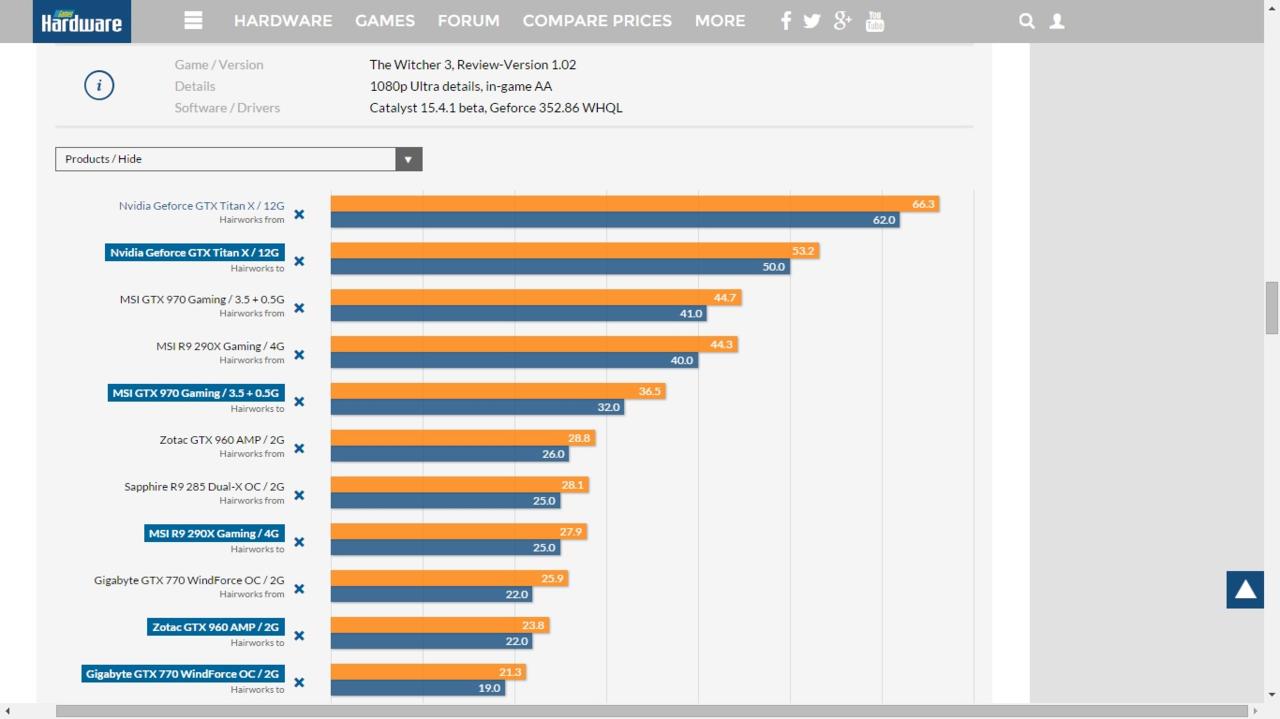

Some benchmarks were released for the game, and it looks like you need at least a 970 to get over 30fps, since even the 290X averages below 30fps with max settings at 1080p.

Glad I have 2 970s so I can max the game at 1440p.

Settings that read "HairWorks from" mean HairWorks is disabled, and "HairWorks to" means it's enabled.

@RyviusARC: if this is true I'm screwed.... fuck Nvidia and their stupid shit :((((

Well, it seems this was right...

From Nvidia

| Resolution and settings | GPU |
| --- | --- |
| 1920x1080, Low settings | GTX 960 |
| 1920x1080, Medium settings | GTX 960 |
| 1920x1080, High settings | GTX 960 |
| 1920x1080, Uber settings | GTX 970 |
| 1920x1080, Uber settings w/ GameWorks | GTX 980 |
| 2560x1440, Uber settings | GTX 980 |
| 2560x1440, Uber settings w/ GameWorks | GTX TITAN X, or 2-Way SLI GTX 970 |
| 3840x2160, Uber settings | GTX TITAN X, or 2-Way SLI GTX 980 |
| 3840x2160, Uber settings w/ GameWorks | 2-Way SLI GTX 980 or GTX TITAN X |

Test system: Intel i7-5960X, 16GB DDR4 RAM, The Witcher 3: Wild Hunt Game Ready GeForce GTX driver

Also, since hardly any sites have messed with the settings:

The Witcher 3: Wild Hunt Graphics, Performance & Tweaking Guide

You guys are talking as if a GTX 970 won't be able to run the game lol. I mean, I'm sure there will be ways to adjust settings to get 60 fps with next to no visual degradation. Tweaking really hasn't even started yet. A lot of PC folks don't even have that kind of power. I've held off on a new build partly because of Witcher 3, but also because of all the games that are becoming VRAM whores. One great thing about all the "downgrades" resulting from consoles (especially in this case) is that they help lower the requirements needed to max the game. Without DX12, Mantle, or OpenGL, devs aren't going to give a crap about reducing VRAM loads or optimizing for PC. The douchebags know they'll do better on console. So many games look great on PS4 and could perform equally well on PC if the devs made the effort or cared... but they don't. I have my doubts about CD Projekt Red as well.

Just tried with my 780 Ti. Looks like this card is now obsolete; this game is killing it at Ultra with HairWorks. Getting between 20-45fps at the start at 1440p. All the new games this year will make Kepler a dead horse. I was waiting for the 980 Ti, but I might just have to bite the bullet and pick up a Titan X, since I really want to play this game decently. I heard a rumour that the 980 Ti will be faster than the Titan X but cheaper. What a dilemma.

I'm kind of cheesed that a 780 Ti has become such crap all of a sudden.

Wait for the 980 Ti. Rumors say it might be released soon to counter whatever AMD has to offer.

Also, the 980 Ti will have 6GB of VRAM, but it will have higher clocks, not to mention that Nvidia will let Asus, MSI, Gigabyte, etc. use custom coolers.

The difference could be big. Imagine 10% higher clocks plus another 10% from factory-overclocked cards.
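Rough arithmetic, assuming the two 10% gains stack multiplicatively on the baseline clock (a simplification; frame rate rarely scales one-for-one with clock speed):

$$1.10 \times 1.10 = 1.21$$

So a factory-overclocked card would land roughly 21% above the baseline clock, slightly more than a flat 20%.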

SLI 780s here. Playing at 2K with everything on High and getting FPS in the low 50s with HairWorks off. Very disappointing. The game looks nice, but it's nothing revolutionary. GTA looks better AND runs much better.

Nvidia is screwing the 7 series owners to force them to buy 9 series cards. What a terrible business model. I will never buy an Nvidia card again after this slap in the face. The 280X is owning the 780 when they're not even in the same league.

Played Witcher 3 for about 60-70 mins so far. Grass is definitely out of place in the game. Maxed out, it runs pretty smoothly... I can tell, even with G-Sync, that it's not high FPS. But at least the stuttering is cut down because of it.

Nvidia's been kinda shady, I feel like

I'd hope it runs decently after not one but two graphical downgrades. There was the 2013 version that looked pretty awesome. Then in 2014 they released a new build showing some serious downgrades. Then again in 2015 they showed yet another version with even more downgrades.

The game is pretty demanding. I ran it with a 960 and it goes below 30 fps at times, although that could be because it needs more optimizing, since I didn't really see anything demanding other than Nvidia HairWorks (I'll probably turn that off on my next run so the frame rate isn't so choppy).

The game looks and plays great on High, and Ultra isn't much of a difference. Kinda wish I had gotten a 970 instead, but I think I'll live for now.

You know, I used to think this whole conspiracy about Nvidia ditching old devices (or even crippling their performance on purpose) was a bunch of crap. But a 960 outperforming a 780? Yeah, I think I should invest in a tinfoil hat.

Same here.

1440p Ultra for me. But I think I'll hold out for Arkham Knight to satisfy my need for beautiful graphics.

Okay. So here's what I got:

- i7-5820K at 4.1GHz

- 32GB of DDR4 RAM

- Titan X

- Acer 28" 4K monitor with G-Sync

Ultra settings with GameWorks features:

- 1080p - 60fps

- 1440p - 55 to 60fps

- 4K - 30fps

By the way, playing at 1440p is beautiful, but at 4K it's stupid ridiculous!

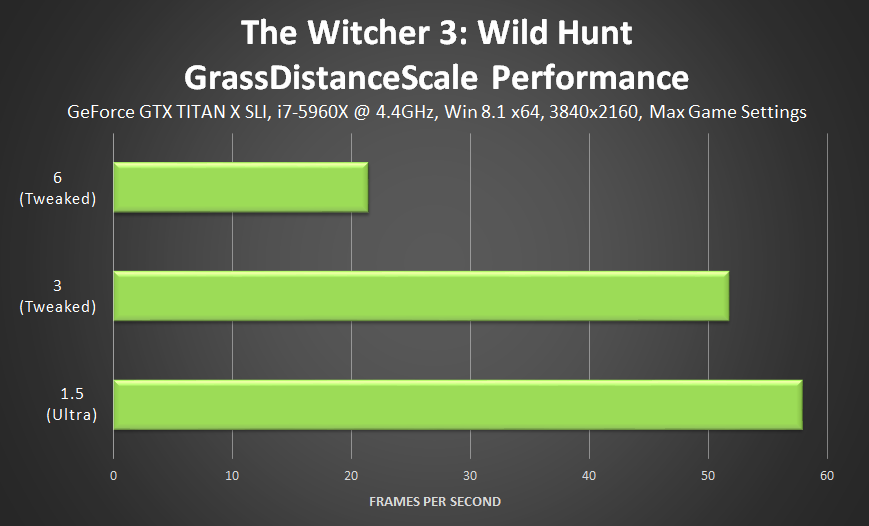

I left that setting at 3, and performance was still great along with all the other tweaks listed on the Nvidia page.

I didn't find 6 worth it because the improvement was not as much as moving from 1.5 to 3.

Game was running so well maxed at 1440p that I forced some settings beyond ultra.

1440p, everything maxed with no AA, and no vsync, what is the fps range and average?

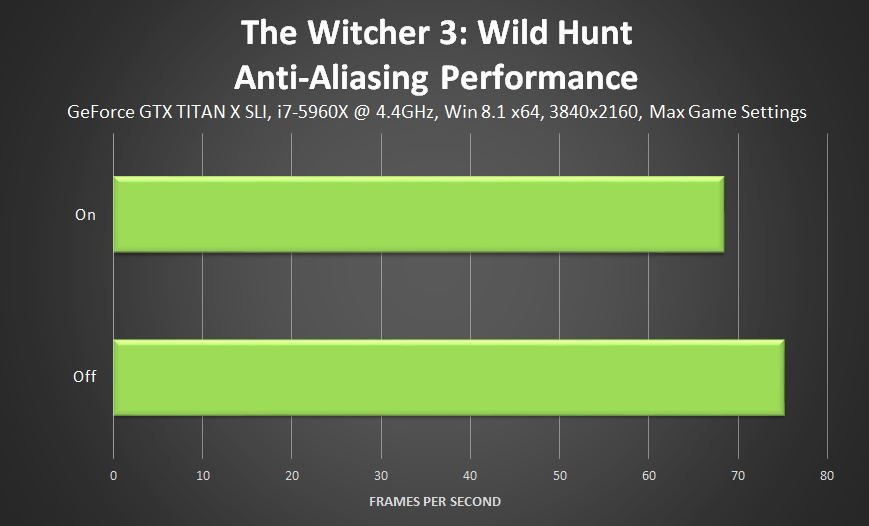

The game uses post-process AA, which has no effect on frame rate.

Not sure what I get without V-Sync, but with it I'm getting around 50fps, and that's with tweaked settings that go beyond Ultra.

I believe 60fps average should be possible with regular ultra settings at 1440p.

I like to keep 60 fps minimum. I guess I will find out tomorrow.

Meh. The Witcher games have always been pretty ugly - this one is no exception. I can only imagine the barrage of crap recycled animations we're about to endure.

lol KH.... always good for a troll.

wtf???

0.o

And unfortunately I'm right again. The game releases and many people are really disappointed that the graphics didn't live up to their expectations. Happened with Witcher 2 too. The pre-release screenshots weren't very impressive, and the game was even downgraded from those. I mean, we can pretend the game looks great...but c'mon. It really doesn't. Graphically it reminds me of an MMORPG. The grass has that gross, brightened, pasty look past 10m away... reminds me of Oblivion or something.

No you're wrong again:

"The game releases and many people are really disappointed that the graphics didn't live up to their expectations"

- No, people are disappointed that CD Projekt showed one graphics build but released the game with another

"Happened with Witcher 2 too."

- Witcher 2 looked better with each new piece of footage, and even better in the final game

"I mean, we can pretend the game looks great...but c'mon. It really doesn't."

- You need glasses

It does use post-process AA, but it's CD Projekt's own solution, and that does have a noticeable performance hit. I've actually found it to be a pretty good AA solution, definitely better than crap like FXAA or TXAA.

- No, people are disappointed that CD Projekt showed one graphics build but released the game with another

What difference does it make? None - it's the same difference: people are disappointed with Witcher 3's graphics. People thought they were going to look better than what was delivered. You're referring to older screenshots that used a better graphics build. The most recent screenshots released reflected the current state of the game's graphics.

- Witcher 2 looked better with each new piece of footage, and even better in the final game

If you think so. The trailers and pre-release screenshots looked great, but when the game released, many people (including myself) were laughing at the animations and NPC detail quality. Very poor.

- You need glasses

If my vision were blurrier, wouldn't that mean lower-detail objects would look equivalent to higher-detail objects? Maybe I'm not the one who needs glasses. If your vision is blurry and no low-detail elements pop out to you, that's fine. The low-quality elements of this game's GFX stick out to me like a sore thumb - and have since long before launch.

I'm not sure how I can be objectively wrong about something that's subjective. I just think the animations look blatantly clunky and many elements of the graphics look dated. If you disagree, that's fine. I'm sure the game's fine, but if it weren't ugly or disappointing, nobody would be pointing out downgrades from the earlier builds in the first place. There are parts of the game that look great, but as a whole, visually, it's a little weak for a 2015 title. Often good graphics are enough alone to convince me to pick up titles. Especially considering I'd like to "benchmark" the beauty of my new monitor - but Witcher 3 never really convinced me.

Don't want to slight the game though... I'm sure it's great. Just not my taste.

There's a difference between people not happy with the downgrade issue and not happy with the graphics.

I haven't seen one person complain that the game looks ugly (except you, of course); the game looks great. What people are disappointed with is that it could have looked even better (and was shown looking better).

I'm not necessarily saying the game is outright ugly. Just visually disappointing. It doesn't excel, that's for sure.

You don't really have to look hard to find people disappointed with the graphics. Even in the other review roundup thread, the guy in the YouTube video says that in some cases it looks worse than The Witcher 2. How would that not be disappointing? Some of the people complaining about the graphics being downgraded are also complaining that the graphics are generally disappointing - they're not mutually exclusive groups.

so much troll... lol

I doubt it looks worse than Witcher 2 in parts, but I'll have to judge for myself when I get to play it this week.

I know one thing for sure: just watching HD gameplay videos (even after the downgrade), it's one of the best-looking RPGs on the market (like Witcher 1 and Witcher 2 were at the time). CD Projekt didn't push the tech as far as they could this time, but they still came out with a beautiful product (not to mention that I'm sure the game will be great, since 1 and 2 were).

And this "Often good graphics are enough alone to convince me to pick up titles", lol.... said like a true console player.

Ignorance is bliss I guess. When people are saying Witcher 2 looks better in many aspects, well...that's just kinda lame. I'm not going to harp on it though.
