@rmpumper said:

@techhog89 said:

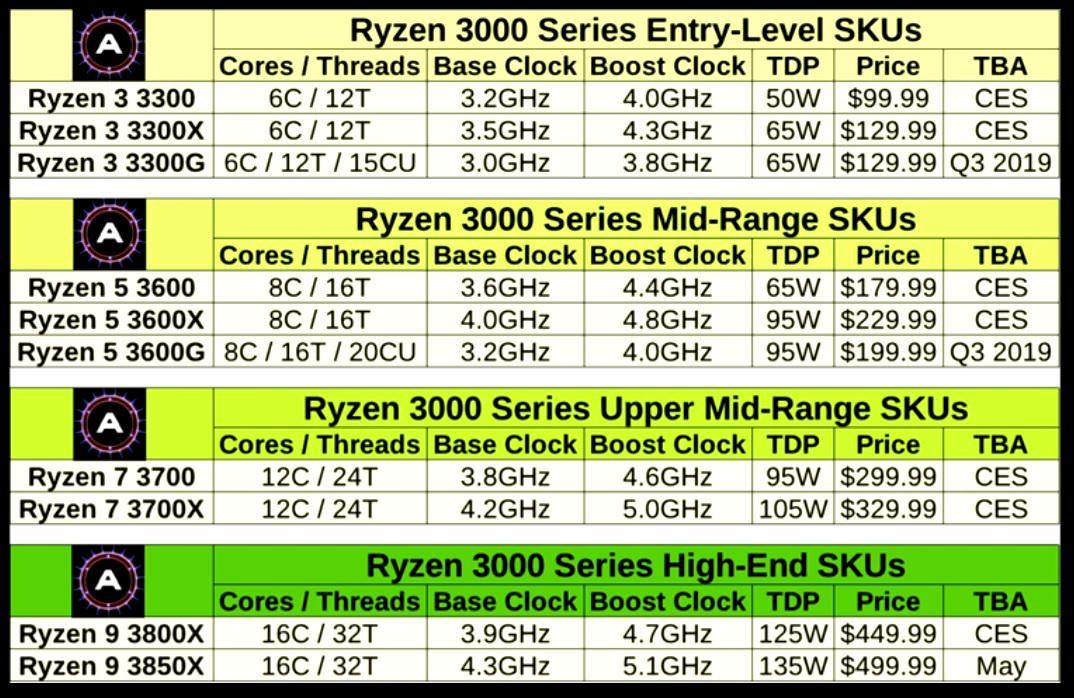

The whole report is fake. These won't be the SKUs we see.

This is a new architecture on a new node. They'll break the barrier. It's just a question of by how much.

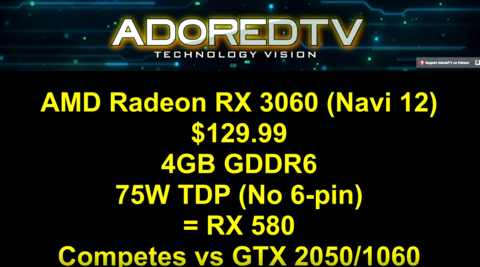

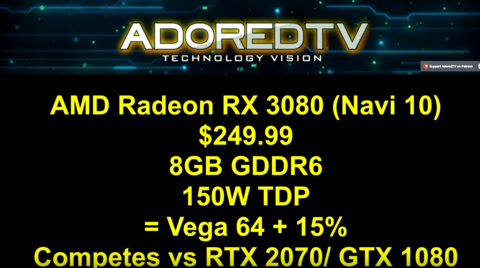

None of these GPUs, if they did exist, are powerful enough for decent ray tracing. Besides that, the current method is Nvidia exclusive and patented.

@rmpumper said:

You can tell it's fake just by looking at the TDP of RX3080.

1080 TDP: 180W (~170W in reality); 2070: 175W (~200W in reality); while the current AMD 590 is rated at 175W (220W average in reality) but is only a bit faster than a 120W 1060 6GB. So are you telling us that AMD will skip two gens, beat Nvidia in power efficiency, and then sell the 2070 equivalent for half the price? What a fucking joke.

This is actually possible. The RTX series is on 12nm while this is on 7nm. If RTX were on 7nm the 2070 would be closer to 120W, if not less. It's the RTX series that didn't jump as much as usual.

GTX 10xx are 14nm, RX 5xx are 14nm (590 some kind of fake 12nm), but Nvidia is twice as efficient. So nm does not mean anything when the whole architecture is shit.

Why are you looking at Nvidia?

It's a 7nm Vega card with an updated architecture, most likely. Original Vega = 14nm (massive difference).

Want to see how much more you can do with it? Here's something from a chief technical officer at a company that makes 7nm CPUs, who stated the following a year ago. He also says 5GHz will be reached relatively easily with time.

While a move from 14 nm to 7 nm was expected to provide, at the very best, a halving in the actual size of a chip manufactured in 7 nm compared to 14 nm, Gary Patton is now saying that the area should actually be reduced by up to 2.7 times the original size. To put that into perspective, AMD's series processors on the Zeppelin die and 14 nm process, which come in at 213 mm² for the full, 8-core design, could be brought down to just 80 mm² instead. AMD could potentially use up that extra die space to either build in some overprovisioning, should the process still be in its infancy and yields need a small boost; or cram it with double the amount of cores and other architectural improvements, and still have chips that are smaller than the original Zen dies.
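As a rough sanity check on the figures in that quote, here is a minimal sketch of the arithmetic. It assumes the whole die shrinks uniformly by the quoted factor, which real chips don't do (I/O and analog blocks scale worse than logic), and it treats "double the cores" as simply doubling the shrunk die area. The constant and function names are made up for illustration.

```python
# Figures taken from the quote above; everything else is an assumption.
ZEPPELIN_AREA_MM2 = 213.0   # full 8-core Zeppelin die on 14 nm
CLAIMED_SCALING = 2.7       # "up to 2.7 times" area reduction (best case)
NAIVE_SCALING = 2.0         # the "halving" that was originally expected


def scaled_area(area_mm2: float, factor: float) -> float:
    """Die area after an idealized, uniform shrink by `factor`."""
    return area_mm2 / factor


shrunk = scaled_area(ZEPPELIN_AREA_MM2, CLAIMED_SCALING)
halved = scaled_area(ZEPPELIN_AREA_MM2, NAIVE_SCALING)

# Assumption: doubling the cores roughly doubles the shrunk die area.
doubled = 2 * shrunk

print(f"2.7x shrink:      {shrunk:.1f} mm^2")
print(f"2.0x shrink:      {halved:.1f} mm^2")
print(f"double the cores: {doubled:.1f} mm^2 (original: {ZEPPELIN_AREA_MM2:.0f} mm^2)")
```

Under those assumptions the 2.7x case lands just under the 80 mm² in the quote, and even a doubled-up die stays well below the original 213 mm².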

So in short, the 7nm process is carrying their entire Ryzen line, and soon the GPU line with it.

It's clear AMD (GPU) is no longer chasing the top end. Most likely they are not pushing performance up at all with the die shrink, so power consumption will take a massive nosedive. And yes, if Nvidia went from 14nm to 12nm and its next card is another 12nm part, or anything larger than 7nm, then AMD is indeed skipping a few gens.

The fact that their GPU architecture is clearly worse, as you mention, is also probably the reason they don't care much about pushing high-end GPUs on 7nm. Or maybe they have other priorities, as dedicated high-end GPUs are hardly their priority or business right now.

It could also be that a higher TDP means lower efficiency and makes it harder for them to reach top-end performance, something they struggled with before, which is another reason it makes zero sense to push performance on those 7nm Vega chips.

I wouldn't be shocked if they actually underclocked some of those chips to get an even lower TDP.
