For those worried: yes, 10GB of VRAM is enough for 4K gaming

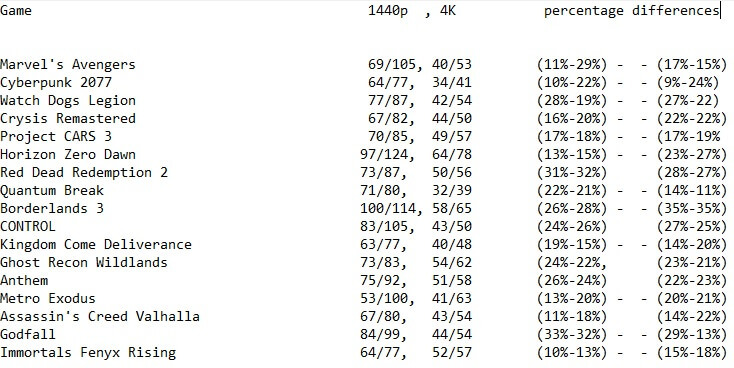

Call of Duty Warzone (with Ray Tracing enabled) was one of the games that pushed our VRAM usage to 9.3GB. On the other hand, Watch Dogs Legion required 9GB at 4K/Ultra (without Ray Tracing). Additionally, Marvel’s Avengers used 8.8GB during the scene in which our RTX2080Ti was using more than 10GB of VRAM (we don’t know whether Nixxes has improved things via a post-launch update; we’ll find out when we re-benchmark the RTX2080Ti in the next couple of days). Furthermore, Crysis Remastered used 8.7GB of VRAM, and Quantum Break used 6GB. All the other games used between 4GB and 8.5GB of VRAM.

So, right now there isn’t any game in which the RTX3080 is bottlenecked by its VRAM. Even Cyberpunk 2077, a game that pushes next-gen graphics, had no VRAM issues at 4K/Ultra settings. However, we obviously can’t predict the future.

Source

DSO's test of 17 demanding games also found that Nvidia likes blowing hot air: the RTX3080 isn't really that much better than the RTX2080Ti, despite what Nvidia claimed in its super limited benchmark scenarios.

In conclusion, the NVIDIA GeForce RTX3080 appears to be an amazing 1440p graphics card. For 4K/Ultra gaming, though, you’ll either need DLSS or highly optimized PC games. Owners of OC’ed RTX2080Ti GPUs should also not worry about the RTX3080, as the performance difference between them is not that big.
