It's because GCN hit its limit of 64 CUs, and Navi is GCN-based. That's why DF focused on it as well: AMD's TFLOP figures come from multiplying the chip's CU count by 64 (shaders per CU), then by 2 (FLOPs per clock) and by the clock speed.

If the PS5 were using AMD's next-gen architecture, we wouldn't know a thing. But since it's using GCN-based Navi, which tops out at 64 CUs, there's really nothing to focus on other than core clocks and CU count, because those two specifications are what determine a GCN chip's TFLOP count.
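As a rough sketch of that arithmetic, assuming the usual GCN layout of 64 stream processors per CU and one fused multiply-add (2 FLOPs) per processor per clock. The function name `gcn_tflops` is hypothetical, and the result is a theoretical peak, not measured performance:

```python
def gcn_tflops(cu_count: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 TFLOPs for a GCN-style GPU."""
    shaders_per_cu = 64   # stream processors per GCN compute unit
    flops_per_clock = 2   # one fused multiply-add counts as 2 FLOPs
    flops_per_second = cu_count * shaders_per_cu * flops_per_clock * clock_mhz * 1e6
    return flops_per_second / 1e12


if __name__ == "__main__":
    # 64 CUs is the GCN ceiling discussed above
    print(f"64 CU @ 1500 MHz: {gcn_tflops(64, 1500):.3f} TFLOPs")
```

Only two inputs move the headline number, which is why the discussion keeps coming back to CU count and clock.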

But given how bottlenecked the CUs are on current chips, they can get massive gains without even touching the CUs... and we don't know what they're doing to the rest of the chip.

They need to start raising the number of ROPs/TMUs relative to CUs before they even think about total CU count.

They could do just that and, as DF mentioned, they could also increase the die size to boost the CU count. The problem with either option is time and money: you would need several months of R&D just to design such a custom chip, and mass-producing it would cost even more.


Adding more ROPs and TMUs would not be a custom solution for the consoles; it's something AMD need to start doing across their whole product lineup.

Thus it should filter down to the console SoCs naturally.

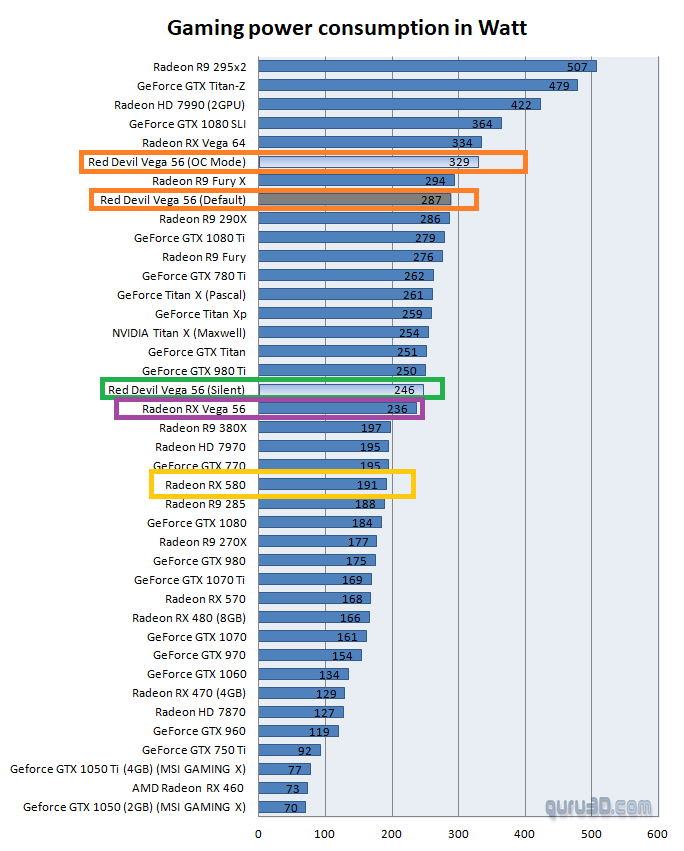

290X = 64 ROPS

Fury X = 64 ROPS

Vega 64 = 64 ROPS

780 Ti = 48 ROPS

980 Ti = 96 ROPS

1080 Ti = 88 ROPS

AMD need to make massive changes to their architecture.

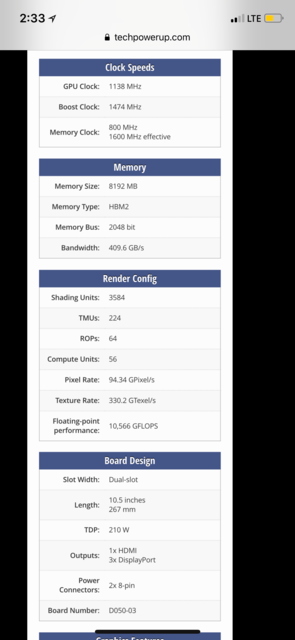

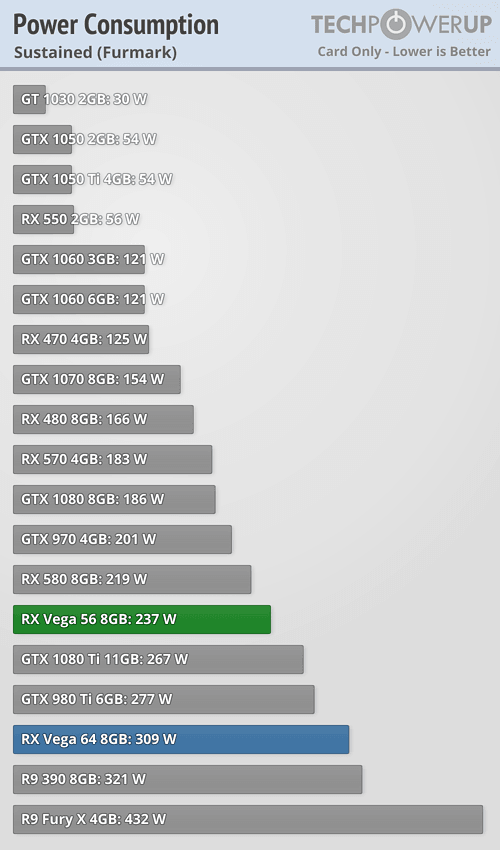

Running Vega 56 and Vega 64 at the same clock speeds shows no additional performance for Vega 64 despite its extra CUs, because the ROPs/TMUs are holding the shader cores back (both cards have 64 ROPs).
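To see why the extra CUs don't show up, compare how the two theoretical peaks scale when both cards run at the same clock: Vega 56 has 56 CUs and Vega 64 has 64, but both have 64 ROPs. A sketch at an assumed common clock of 1500 MHz (the clock is an illustrative assumption; the formulas are the standard peak-rate ones):

```python
CLOCK_MHZ = 1500  # assumed common clock for both cards (illustrative)


def peak_tflops(cus: int, clock_mhz: float) -> float:
    # 64 shaders per CU, 2 FLOPs (one FMA) per shader per clock
    return cus * 64 * 2 * clock_mhz / 1e6


def peak_gpix(rops: int, clock_mhz: float) -> float:
    # one pixel per ROP per clock, in GPixel/s
    return rops * clock_mhz / 1e3


vega56 = {"cus": 56, "rops": 64}
vega64 = {"cus": 64, "rops": 64}

compute_gain = peak_tflops(vega64["cus"], CLOCK_MHZ) / peak_tflops(vega56["cus"], CLOCK_MHZ) - 1
fill_gain = peak_gpix(vega64["rops"], CLOCK_MHZ) / peak_gpix(vega56["rops"], CLOCK_MHZ) - 1

if __name__ == "__main__":
    print(f"Vega 64 vs Vega 56 at {CLOCK_MHZ} MHz:")
    print(f"  shader throughput: +{compute_gain:.1%}")
    print(f"  pixel fill rate:   +{fill_gain:.1%}")
```

The shader peak rises by about 14% while the fill-rate peak does not move at all, so any workload limited by the ROPs sees no benefit from the extra CUs.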

Simply bumping up the CU count in a next-generation console SoC won't be as effective as it should be unless they also fix the unbalanced nature of the chip.
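A toy model of the imbalance argument, and it is only that: assume each frame needs a fixed amount of shader work and a fixed number of pixel writes, and the frame rate is set by whichever stage is slower. The workload constants `SHADER_TFLOP_PER_FRAME` and `GPIXELS_PER_FRAME` are invented for illustration, not measured from any game:

```python
# Hypothetical per-frame workload (illustrative numbers, not measurements)
SHADER_TFLOP_PER_FRAME = 0.10   # TFLOP of shader work per frame
GPIXELS_PER_FRAME = 1.2         # gigapixels written by the ROPs per frame


def fps(cus: int, rops: int, clock_mhz: float) -> float:
    """Frame rate under a simple 'slowest stage wins' bottleneck model."""
    tflops = cus * 64 * 2 * clock_mhz / 1e6        # GCN-style peak shader rate
    gpix_per_s = rops * clock_mhz / 1e3            # peak ROP fill rate
    shader_time = SHADER_TFLOP_PER_FRAME / tflops  # seconds per frame if shader-bound
    rop_time = GPIXELS_PER_FRAME / gpix_per_s      # seconds per frame if ROP-bound
    return 1.0 / max(shader_time, rop_time)


if __name__ == "__main__":
    for cus in (40, 48, 56, 64):
        print(f"{cus} CU / 64 ROP @ 1500 MHz: {fps(cus, 64, 1500):5.1f} fps")
    print(f"56 CU / 96 ROP @ 1500 MHz: {fps(56, 96, 1500):5.1f} fps")
```

With these made-up numbers the chip stops scaling once the ROPs become the slower stage, and only adding ROPs lifts the ceiling again. Real GPUs overlap work far less cleanly, so this shows the shape of the argument rather than real-world gains.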

290X = 64 ROPS non-tile cache architecture

Fury X = 64 ROPS non-tile cache architecture with lower latency external memory.

Vega 64 = 64 ROPS at 1500 MHz, tile cache architecture, 4 MB L2 cache. Unknown whether Polaris delta compression is applied at this memory level.

----------

780 Ti = 48 ROPS non-tile cache architecture.

980 Ti = 96 ROPS at 1076 MHz, tile cache architecture, ~3 MB L2 cache with Maxwell delta compression.

1080 = 64 ROPS at ~1800 MHz, tile cache architecture, ~2 MB L2 cache with improved Pascal delta compression.

1080 Ti = 88 ROPS at ~1800 MHz, tile cache architecture, ~3 MB L2 cache with improved Pascal delta compression. The 1080 Ti's L2 cache bandwidth (>1 TB/s) is superior to the 980 Ti's (~600 GB/s).

Titan XP = 96 ROPS tile cache architecture
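Peak pixel fill rate is simply ROP count × clock, so the ROP/clock pairs listed above can be compared directly. A minimal sketch using the clocks quoted in this post (theoretical peaks only, ignoring compression and cache effects; Titan XP is left out because no clock is given for it, and `fill_rate_gpix` is a hypothetical helper name):

```python
def fill_rate_gpix(rops: int, clock_mhz: float) -> float:
    """Theoretical peak fill rate in GPixel/s (one pixel per ROP per clock)."""
    return rops * clock_mhz / 1000


# ROP counts and clocks as quoted in the list above
cards = {
    "Vega 64": (64, 1500),
    "980 Ti": (96, 1076),
    "1080": (64, 1800),
    "1080 Ti": (88, 1800),
}

if __name__ == "__main__":
    for name, (rops, mhz) in cards.items():
        print(f"{name:8s} {fill_rate_gpix(rops, mhz):6.1f} GPixel/s")
```

On these figures Vega 64 has the lowest peak fill rate of the four, even below the older 980 Ti.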

AMD needs to lower the CU count and raise clock speeds. For example, the GTX 1080 is roughly equivalent to a 40 CU GCN chip (2560 shaders = 40 × 64) running at ~1800 MHz, with its 64 ROPS scaled by that clock speed.
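The GTX 1080 comparison works out as follows, treating its 2560 shaders as 40 GCN-style CUs of 64 lanes each and using the ~1800 MHz figure above, with Vega 64 at 1500 MHz for contrast. This is a paper comparison of theoretical peaks under those assumptions, not a benchmark:

```python
def peaks(shaders: int, rops: int, clock_mhz: float) -> tuple[float, float]:
    """Return (peak FP32 TFLOPs, peak fill rate in GPixel/s)."""
    tflops = shaders * 2 * clock_mhz / 1e6   # one FMA (2 FLOPs) per shader per clock
    gpix = rops * clock_mhz / 1e3            # one pixel per ROP per clock
    return tflops, gpix


chips = {
    "GTX 1080": (2560, 64, 1800),   # 2560 shaders = 40 x 64, i.e. "40 CUs"
    "Vega 64": (4096, 64, 1500),    # 64 CUs x 64 shaders
}

if __name__ == "__main__":
    for name, (shaders, rops, mhz) in chips.items():
        tflops, gpix = peaks(shaders, rops, mhz)
        flops_per_pixel = tflops * 1e3 / gpix  # shader FLOPs available per ROP pixel
        print(f"{name:8s} {shaders // 64} CU-equiv  {tflops:6.3f} TFLOPs  "
              f"{gpix:6.1f} GPixel/s  {flops_per_pixel:5.0f} FLOPs/pixel")
```

The last column is the balance the post is talking about: the 1080 pairs its shaders with proportionally more ROP throughput (80 FLOPs per pixel written versus 128 for Vega 64).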

Vega 56/64's extra CUs are wasting power. AMD should have gone with a 44 CU setup with 64 ROPS and spent the extra TDP headroom on higher clock speeds.
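One way to put numbers on the 44 CU / 64 ROP suggestion is to ask what clock such a chip would need to match Vega 64's peak shader throughput, and what fill rate it would get as a side effect. The sketch assumes Vega 64 at 1500 MHz as the reference and uses hypothetical names (`clock_to_match`, `mhz_44`, `fill_44`). It does not model power, so whether such a clock fits in the TDP is left open:

```python
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_CLOCK = 2  # one FMA per clock


def clock_to_match(target_tflops: float, cus: int) -> float:
    """Clock in MHz that a GCN-style chip with `cus` CUs needs to reach target_tflops."""
    return target_tflops * 1e6 / (cus * SHADERS_PER_CU * FLOPS_PER_SHADER_CLOCK)


# Reference: Vega 64 (64 CUs) at an assumed 1500 MHz
vega64_tflops = 64 * SHADERS_PER_CU * FLOPS_PER_SHADER_CLOCK * 1500 / 1e6
mhz_44 = clock_to_match(vega64_tflops, 44)
fill_44 = 64 * mhz_44 / 1e3  # 64 ROPs at that clock, in GPixel/s

if __name__ == "__main__":
    print(f"44 CU needs ~{mhz_44:.0f} MHz to match Vega 64 @ 1500 MHz")
    print(f"  fill rate at that clock: {fill_44:.1f} GPixel/s (Vega 64: 96.0)")
```

Under this arithmetic the narrower chip keeps the same shader peak while its 64 ROPs gain roughly 45% more fill rate, purely from the higher clock.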
