Whilst Nvidia are slimy, greedy assholes in their own right, it looks like AMD isn't in a position to brag about being pro-consumer either.

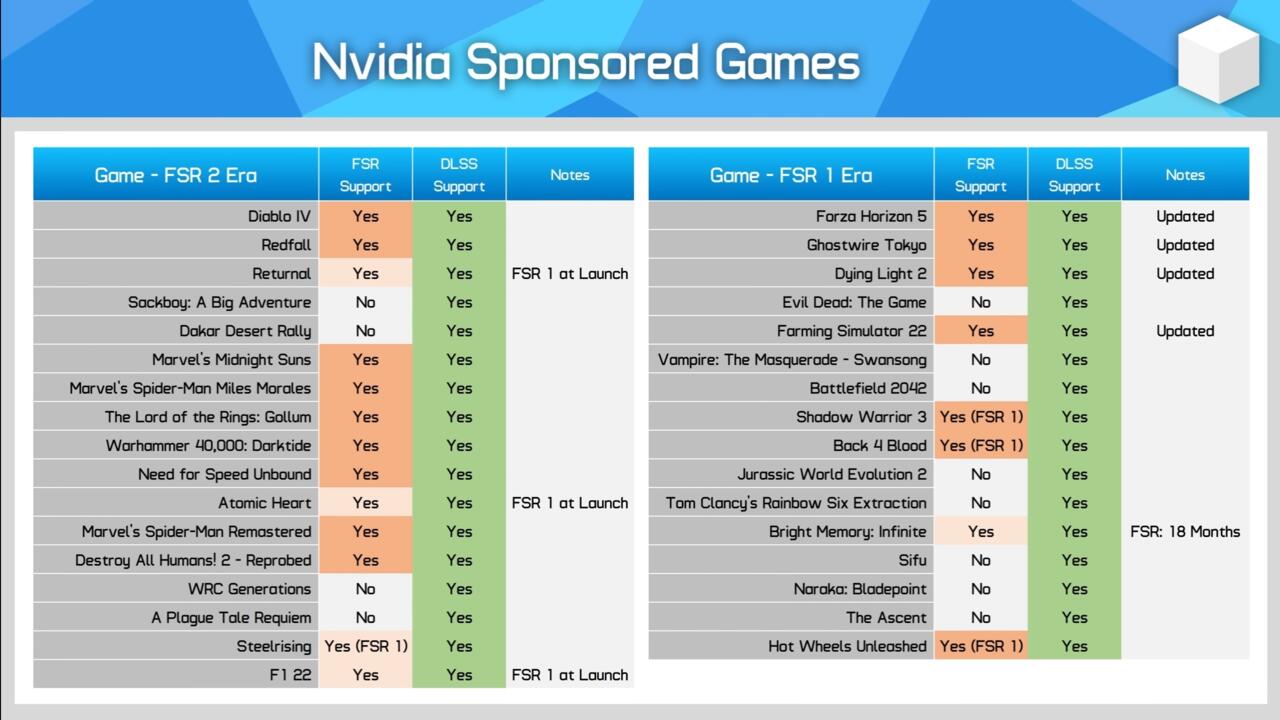

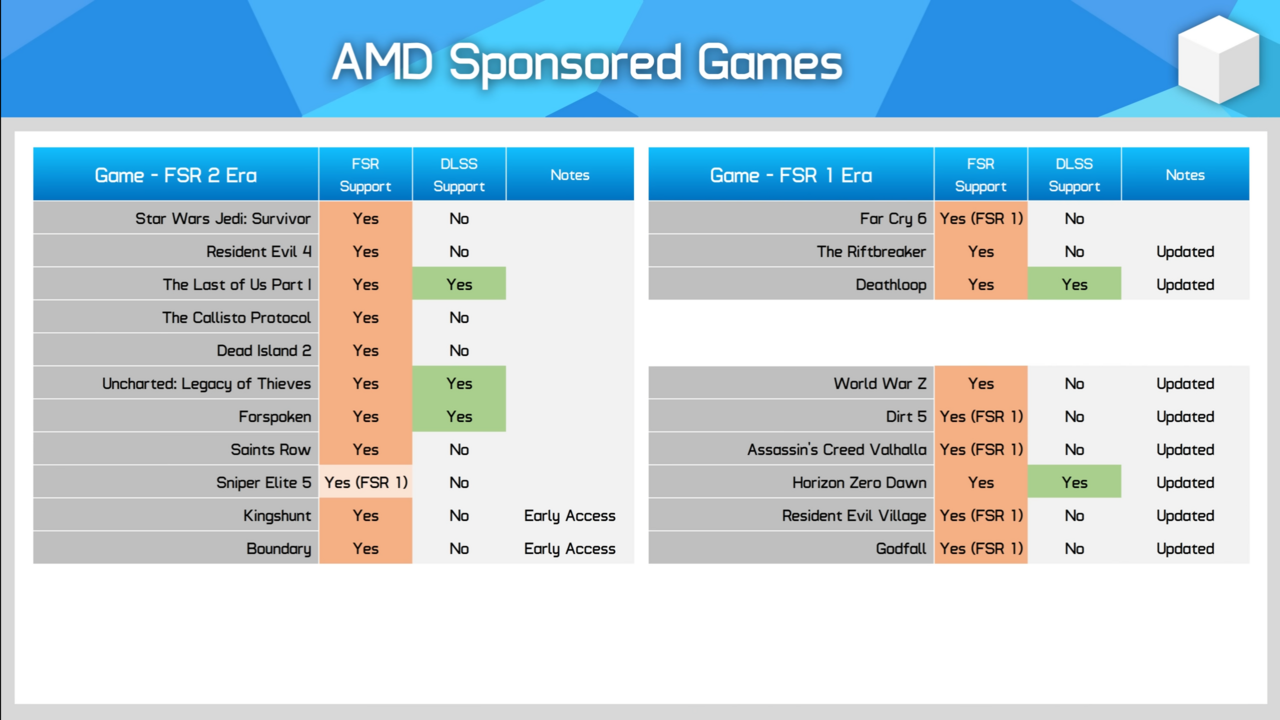

Most Nvidia-sponsored titles ship with both DLSS and FSR, but the same isn't true for AMD-sponsored titles, which tend to ship with FSR only. This all blew up after a Wccftech article surfaced and Bethesda announced an AMD partnership.

And yes, Nvidia has never blocked FSR from its sponsored games, and AMD cards can even use ray tracing in them, albeit with much worse performance.

> NVIDIA does not and will not block, restrict, discourage, or hinder developers from implementing competitor technologies in any way. We provide the support and tools for all game developers to easily integrate DLSS if they choose and even created NVIDIA Streamline to make it easier for game developers to add competitive technologies to their games.
>
> Keita Iida, vice president of developer relations, NVIDIA

> AMD FidelityFX Super Resolution is an open-source technology that supports a variety of GPU architectures, including consoles and competitive solutions, and we believe an open approach that is broadly supported on multiple hardware platforms is the best approach that benefits developers and gamers. AMD is committed to doing what is best for game developers and gamers, and we give developers the flexibility to implement FSR into whichever games they choose.
>
> AMD Spokesperson to Wccftech

Even AMD's PR answer is really bad: it never actually says whether AMD blocks DLSS in its sponsored games.