@ronvalencia said:

SPU is a DSP not a CPU. I already corrected you with an IBM quote.

7th SPU handles DRM related services.

From http://public.dhe.ibm.com/software/dw/power/pdfs/pa-cmpware1/pa-cmpware1-pdf.pdf

What GPU runs at 3.2 GHz, you MORON...

Even better, what 2005 GPU ran at 3.2 GHz?

Cell is a CPU with CPU clock speeds. It is a hybrid, and nothing you quote there from IBM claims that Cell is a GPU. The SPEs are part of Cell, and Cell is a CPU. No GPU runs at 3.2 GHz, even less so in 2005 when Cell came out.

DSPs (digital signal processors) are good at some GPU tasks because of how good they are at repeating the same task over and over in loops, which suits some jobs like Folding@home, for example, as well as other medical applications outside gaming.

Digital signal processing (DSP) is the use of digital processing, such as by computers, to perform a wide variety of signal processing operations. The signals processed in this manner are a sequence of numbers that represent samples of a continuous variable in a domain such as time, space, or frequency.

Digital signal processing and analog signal processing are subfields of signal processing. DSP applications include audio and speech signal processing, sonar, radar and other sensor array processing, spectral estimation, statistical signal processing, digital image processing, signal processing for telecommunications, control of systems, biomedical engineering, seismic data processing, among others.

Digital signal processing can involve linear or nonlinear operations. Nonlinear signal processing is closely related to nonlinear system identification[1] and can be implemented in the time, frequency, and spatio-temporal domains.
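
To make the "same task over and over in loops" point concrete, here is a minimal sketch of a moving-average filter in Python. It only illustrates DSP-style processing of a sequence of samples; the function name `moving_average` and the sample values are made up for this example and are not taken from any Cell or SPE code.

```python
def moving_average(samples, window):
    """Simple FIR low-pass filter: each output is the mean of `window` consecutive inputs."""
    if window <= 0 or window > len(samples):
        raise ValueError("window must be between 1 and len(samples)")
    out = []
    # The same accumulate-then-scale pattern repeats for every output sample,
    # with no data-dependent branching inside the loops.
    for i in range(len(samples) - window + 1):
        acc = 0.0
        for k in range(window):
            acc += samples[i + k]
        out.append(acc / window)
    return out


signal = [0.0, 4.0, 8.0, 4.0, 0.0, 4.0]  # hypothetical sampled signal
smoothed = moving_average(signal, 2)
```

The inner loop does identical work on every iteration, which is the kind of regular, predictable workload that signal processors are built around.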

A graphics processing unit (GPU), occasionally called visual processing unit (VPU), is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles. Modern GPUs are very efficient at manipulating computer graphics and image processing, and their highly parallel structure makes them more efficient than general-purpose CPUs for algorithms where the processing of large blocks of data is done in parallel. In a personal computer, a GPU can be present on a video card, or it can be embedded on the motherboard or—in certain CPUs—on the CPU die.

http://digitalcommons.uri.edu/cgi/viewcontent.cgi?article=1002&context=theses

A DSP and a GPU are not the same.

So stop comparing the damn G80 vs Cell. Cell is a CPU and the DSPs are part of it. No GPU runs at 3.2 GHz; in fact modern ones run at 1300 MHz or a little more at stock, and in 2005 when Cell was ready GPU clocks were around 550 MHz, not even close to the DSPs on Cell.

I remember how Major Nelson called the SPEs useless DSPs, lol how wrong he was..lol

@skektek said:

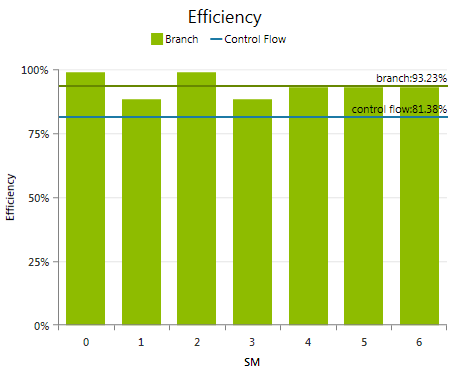

The Cell presented 2 difficulties: most of the cores lacked branch prediction, and most of its performance relied on parallelization at a time when parallelization was not a solved problem.

DSPs are bad at branch prediction, which is funny because Ronvalencia quotes a Sony hater from Beyond3D claiming you have to move branching to the SPEs, which is a joke..lol

@ronvalencia said:

From http://public.dhe.ibm.com/software/dw/power/pdfs/pa-cmpware1/pa-cmpware1-pdf.pdf

The collection of processors in a Cell Broadband Engine™ (Cell/B.E.) processor displays a DSP-like architecture. This means that the order in which the SPUs.

Notice IBM didn't state that the SPU is CPU-like.

For PS3's Cell BE, there's 1 PPE (SMT) + 7 SPUs, hence 8 threads. The 8th SPU is disabled for yield reasons.

An SPU thread is not the same as a PPE thread.

The Cell Broadband Engine Architecture integrates an IBM PowerPC processor element (PPE) and eight synergistic processor elements (SPEs) in a unified system architecture. The PPE provides common system functions, while the SPEs perform data-intensive processing.

What is a PowerPC processor?

It's a damn CPU, you buffoon. There are x86 CPUs and there are PPC CPUs, and they are all classified as CPUs.

So yeah, Cell was a hybrid. Comparing Cell to the G80 is a joke: there are tasks where Cell murders the G80 because that GPU can't run CPU processes. Cell is composed of a PPC and SPEs in 1 chip, and DSPs are not even GPUs, so you can't make that comparison. The only real reason you compare Cell to the G80 is blind fanboyism and your hate for anything Sony.

Find me 1 CPU that in 2005 or 2006 beat Cell in every single regard.
