With Nvidia announcing Resizable BAR support today to counter AMD's Smart Access Memory, it got me thinking about how PC hardware is now a step or two ahead of the consoles in architectural efficiency. We all thought the consoles' I/O decompression was unique to them, only for Nvidia to announce RTX IO and Microsoft to bring DirectStorage to PC.

7th generation consoles (Xbox 360, PS3 and Wii) and older had an optimization advantage: PC hardware that was on par or even somewhat faster on paper would be unable to keep up with them. But since the consoles moved to x86 and practically off-the-shelf PC parts, that advantage has shrunk rapidly. Throughout most of the 8th generation, PCs with hardware similar to what is in the consoles were able to run games at similar settings and performance. The advantage got even smaller once the DirectX 12 and Vulkan APIs were introduced, which significantly reduced CPU overhead and allowed lower-level programming.

As Juub pointed out in a similar thread about 2 months ago, optimization on consoles nowadays is less about efficiency in code and architecture and more about cutting back and tweaking visual effects to exactly what the consoles can handle. A prime example of this is Red Dead Redemption 2 on Xbox One X. In order to run at 4K on the One X, the game uses several graphical settings at a fidelity lower than the PC version's low settings, and most of the rest at low or medium. Gamers Nexus even showed in their $500 GTX 1060 PC vs PS5 video that it was somewhat difficult to mimic the PS5 graphics of Dirt 5 on the PC version, because some things, such as spectators, were completely removed from the PS5 version while still being present on PC.

With the introduction of DLSS, RTX GPUs can render games at a lower internal resolution and upscale the result, getting image quality close to the higher output resolution along with the performance benefit of the lower internal one. For example, in Watch Dogs Legion an RTX 2060 Super can play at Xbox Series X settings (ray tracing on consoles is lower than the PC version's low) at native 4K above 30fps, but with DLSS enabled that same GPU can play the game on Ultra settings above 30fps with hardly any sacrifice in image quality. The upcoming survival horror game The Medium is also going to support DLSS and, according to the developers, will have an RT quality mode that is exclusive to DLSS. That means the mode either won't be on Xbox Series S|X at all or will run there at a low resolution and framerate.
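To put rough numbers on the "lower internal resolution" part, here is a minimal Python sketch. The per-axis scale factors are the commonly cited values for the DLSS 2.x modes and are an assumption here, not something measured in this thread; the function names are made up for illustration.

```python
# Per-axis render scale for each DLSS 2.x mode.
# ASSUMPTION: commonly cited values, not taken from any figure in this post.
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Return the (width, height) the GPU actually renders before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(out_w: int, out_h: int, mode: str) -> float:
    """Fraction of the output pixel count that is rendered natively."""
    w, h = internal_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        share = pixel_fraction(3840, 2160, mode)
        print(f"{mode:>17}: {w}x{h} ({share:.0%} of the 4K pixel count)")
```

Under those assumed scales, a 4K output in Quality mode is rendered at 1440p, well under half the pixels of native 4K, which is where the headroom for higher settings comes from.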

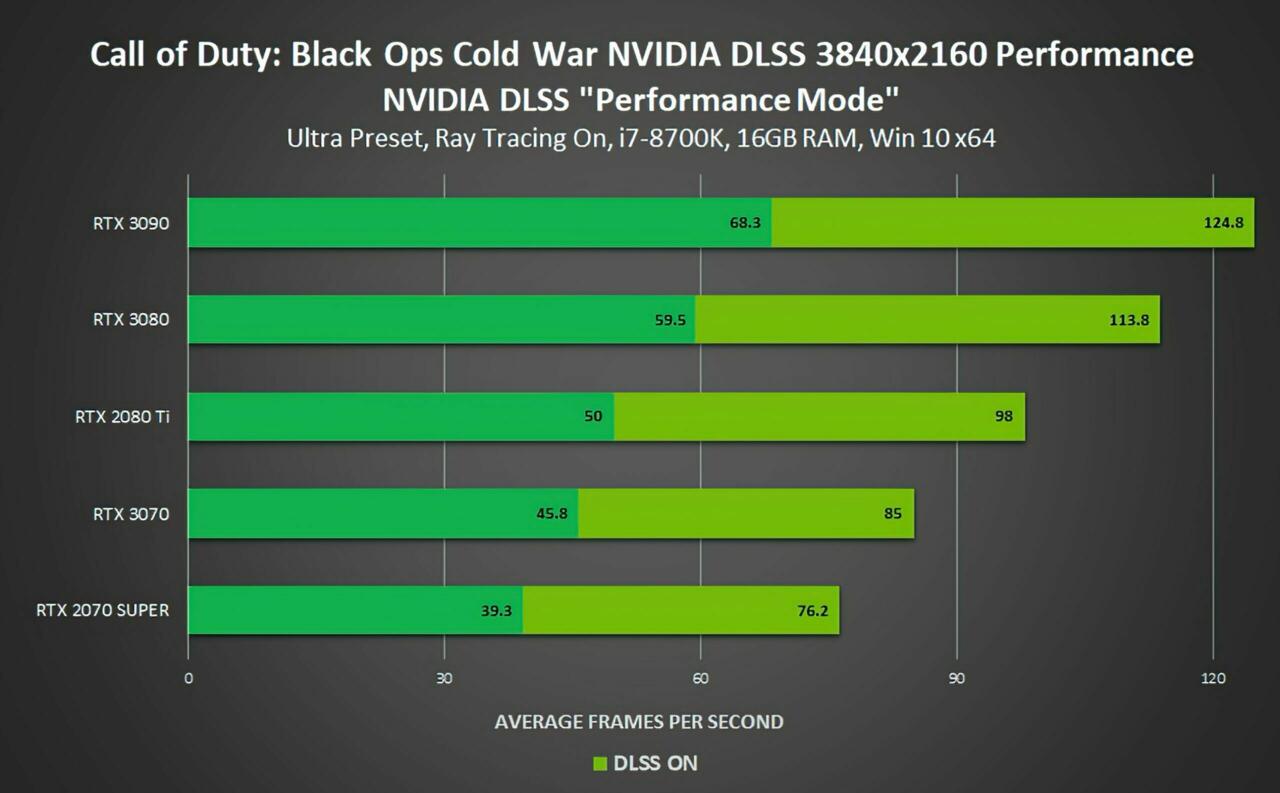

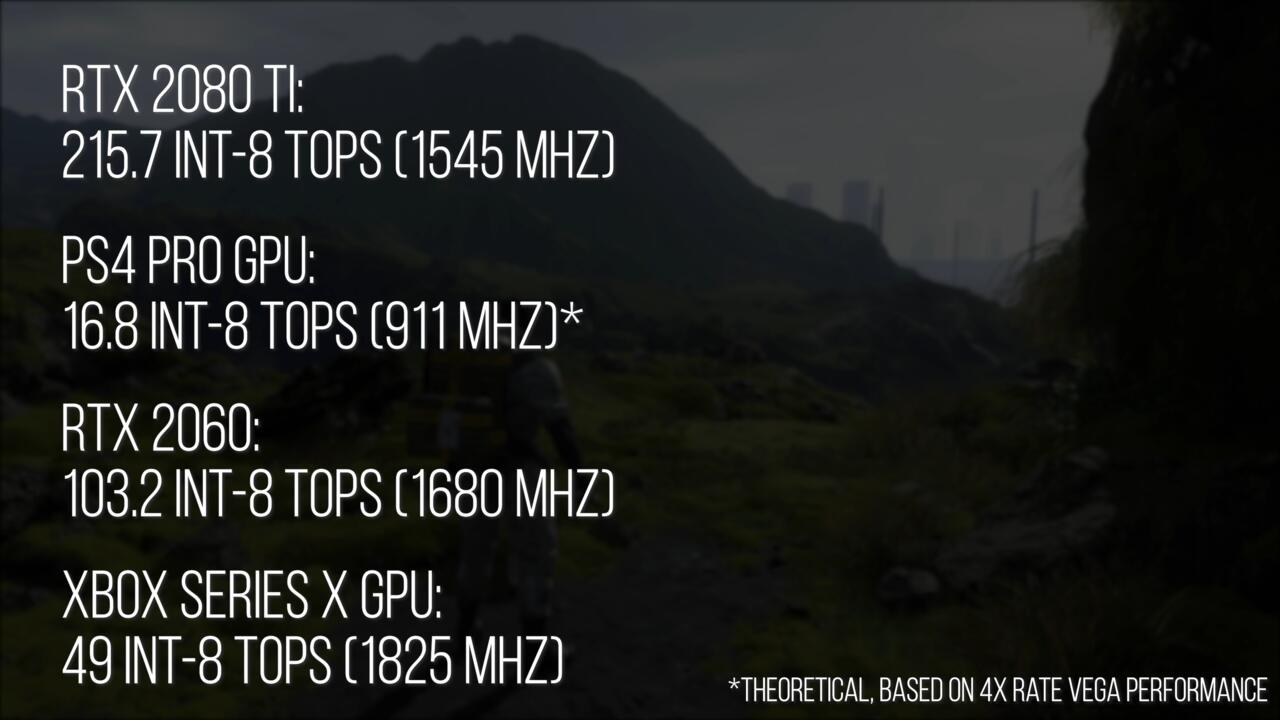

With support for DLSS continuing to grow through adoption in major and minor titles, console users can't really downplay it as something only a small handful of games will use. DLSS is a big game changer: it can offer as much as an 85% boost in framerate in COD Cold War and a 60% boost in Cyberpunk 2077. DLSS makes 4K at 90+fps on high/max settings an actual possibility on PC. The PS5 and Xbox Series S|X don't have an answer to DLSS despite being capable of machine learning. The Xbox Series X's machine learning performance is half that of an RTX 2060. It also doesn't help that the consoles don't have dedicated hardware for machine learning, which means resources have to be taken away from other tasks to do it, so they won't get the same performance benefit as an Nvidia RTX card, which has Tensor cores for machine learning.

This video showing off AMD's SAM shows that, depending on the game, you can gain at least a 10% performance boost, going as high as 19%. We should expect similar results from Intel's and Nvidia's Resizable BAR support.

DLSS and Resizable BAR together could make for a wicked combo of PC performance boosts.
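As a back-of-the-envelope sketch of what that combo could look like, the Python below stacks the percentages quoted in this post. Two loud assumptions: the 50fps baseline is hypothetical, and the gains are treated as simply multiplying together, which no benchmark here confirms (real games may gain less if they end up CPU-bound).

```python
def apply_uplift(base_fps: float, *uplifts: float) -> float:
    """Apply each fractional uplift (0.85 means +85%) on top of the previous one.

    ASSUMPTION: gains compound multiplicatively; this is an illustration,
    not a measured result.
    """
    fps = base_fps
    for uplift in uplifts:
        fps *= 1.0 + uplift
    return fps


BASE_FPS = 50.0                    # hypothetical native framerate
DLSS_UPLIFT = 0.85                 # best case quoted above for COD Cold War
REBAR_UPLIFT_RANGE = (0.10, 0.19)  # SAM range quoted above, assumed to carry over

if __name__ == "__main__":
    dlss_only = apply_uplift(BASE_FPS, DLSS_UPLIFT)
    print(f"DLSS only: {dlss_only:.1f} fps")
    for rebar in REBAR_UPLIFT_RANGE:
        combined = apply_uplift(BASE_FPS, DLSS_UPLIFT, rebar)
        print(f"DLSS + {rebar:.0%} from Resizable BAR: {combined:.1f} fps")
```

Swap in your own baseline and per-game percentages; the point is only that two separate boosts stack on each other rather than just adding up.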
