@04dcarraher said:

@Xtasy26 said:

@04dcarraher said:

@Xtasy26:

Again, do not ignore the excessive resolutions and settings being used by many of those people. Many are simply pushing more than any single GPU can handle at a good frame rate, i.e. 4K, massive amounts of AA, etc.

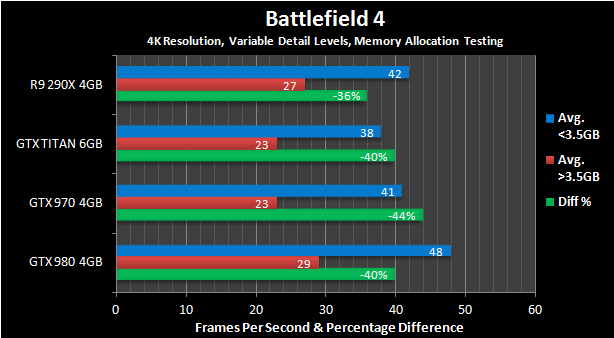

What you don't seem to understand is that the difference in processing power and VRAM between the 970 and the 290 series will not make any real difference in future games below 4K. Neither GPU has enough processing power for 4K and/or massive amounts of AA. It does not matter if a future game can allocate 4GB of VRAM. When future games arrive they will be more demanding on processing power, not just VRAM usage, and by the time 4GB isn't enough for 1080p gaming both the 970 and the 290X will be out of the picture.

Some people may want to use their GPUs for 3 - 4 years. If you go over to nVidia's GeForce forums, you'll find people who put down $330 for a GPU they expected to be a full 4GB card. This is especially true for those who bought SLI setups of GTX 970s, who were even more pi$$ed off as they wanted their new GPUs to last for a while.

Heck, my HD 6970 has been going for a little over 4 years now.

lol, again, 3.5GB vs 4GB with similar GPU processing power is not what you should be aiming for for 3-4 years of use at or above 1440p. It is not going to make any real difference in the end, and saying it will is a bald-faced lie. A prime example is the GTX 680 vs the 7970, which is the same type of difference with an even bigger 1GB gap, and yet the 680 performs on par with the 7970 at 1440p with 4xAA in BF4, or even in Dragon Age Inquisition at 1440p. Heck, even in Assassin's Creed Unity at 1080p the 7970 was a whole 4 fps faster than the 680, and yet the 7970 still cannot even manage a 30 fps average.

The problem is that some people are having issues with stuttering even in current games like Assassin's Creed Unity.

If it's stuttering in certain games NOW, what about future games, even at 1080p? What about those who bought the 970 now thinking they could upgrade to a 1440p or even a 4K monitor in the future? Given that what they thought was a full-blown 4GB card isn't one, they may not be able to do so, and they may now have to sell their graphics card and get a "full 4GB card".

I mean, a quick look at the GeForce forums thread, which is now over 330 pages long, shows problems with stuttering to the point where it becomes really annoying, which I can imagine.

https://forums.geforce.com/default/topic/803518/geforce-900-series/gtx-970-3-5gb-vram-issue/16/

"After playing Assassins creed unity for a couple of mins, in the most extreme areas of town full of people, fires, guards Vram maxed there with Ultra settings - FXAA it refuses to go any higher than 3558

.....

I was able to hit 4037 with MSAA 8x But there was stuttering...the FPS were starting to dip cause the memory being pushed that high tanks the FPS due to the performance issues at high Vram load."

This is just on page 16. There are many more examples.

I think the main problem is that people feel they were lied to, as they thought they had gotten a full 4GB card. To give an analogy: I am looking to buy a car now, and I am only looking at cars with V6 engines of at least 3.2L (I am a car enthusiast and only consider high-end cars with V6 engines within certain specs); I don't even bat an eye at V4 engine cars. Now suppose I bought a car advertised as having a V6 engine, only to find out later that people are experiencing performance issues at certain high speeds (akin to the GTX 970 running at high resolutions or in games with high memory allocations), and the company comes out and says that at certain speeds one of the cylinders operates at half frequency, so it's technically more like a "V5" engine. In the GTX 970's case, when it runs games with high memory allocations or at high resolutions, it goes from 192GB/sec to 28GB/sec on the slow 512MB segment. As a consumer I wouldn't be too happy. I would have gotten a car that doesn't have that issue and operates like a full-blown V6, or in this case a full-blown 4GB card like the R9 290X/GTX 980.
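To put rough numbers on that bandwidth drop, here is a minimal sketch in Python. It uses only the figures quoted above (192GB/sec for the fast 3.5GB, 28GB/sec for the slow 512MB) and assumes, as a simplification, that the two segments are read one after the other and never at the same time. The function name `effective_bandwidth` is made up for illustration; this is back-of-the-envelope arithmetic, not a model of how the driver actually schedules memory.

```python
# Figures as quoted in this post; the poster's numbers, not measurements.
FAST_BANDWIDTH_GBPS = 192.0   # fast 3.5GB segment
SLOW_BANDWIDTH_GBPS = 28.0    # slow 512MB segment


def effective_bandwidth(fast_gb, slow_gb,
                        fast_bw=FAST_BANDWIDTH_GBPS,
                        slow_bw=SLOW_BANDWIDTH_GBPS):
    """Average GB/sec when fast_gb and slow_gb are read back to back.

    Assumption: the segments are accessed sequentially, so the total
    time is simply the sum of the time spent in each segment.
    """
    total_gb = fast_gb + slow_gb
    if total_gb <= 0:
        raise ValueError("need a positive amount of data to read")
    total_time = fast_gb / fast_bw + slow_gb / slow_bw
    return total_gb / total_time


if __name__ == "__main__":
    # Game that stays inside the fast 3.5GB segment.
    print(f"3.5GB used: {effective_bandwidth(3.5, 0.0):.1f} GB/sec")
    # Game that touches the full 4GB, including the slow 512MB.
    print(f"4.0GB used: {effective_bandwidth(3.5, 0.5):.1f} GB/sec")
```

Under that assumption, reading the slow 512MB takes about as long as reading the entire fast 3.5GB, which is why touching the last half gigabyte hurts far more than its size suggests.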

I especially feel bad for a guy who bought a GTX 970 in Brazil, where it cost him more than a GTX 980 does elsewhere: a GTX 970 can cost $750 - $800+ there, as Brazil is a low-volume market. He is upset because he can't return his graphics card. People like him may be using their graphics cards for several years, as high-end graphics cards are very expensive there, and it's not fair to him since he bought what he thought was a full-blown 4GB graphics card.
