@musicalmac

The machine learning APIs on both iOS and Android are open for developers to use in their apps. So while a side-by-side comparison using similar third-party apps isn't really possible at the moment, we'll likely see developers jumping on board over the next year.

In the case of Huawei, machine learning will be used for a number of features. The first is the camera: using image recognition, it optimizes the camera settings and the picture based on what is in the scene. For example, it can tell the difference between food, cats, dogs, people, etc. and adjust the image accordingly. Huawei has also worked with Microsoft to pre-load its translator software, which translates images and words between dozens of languages while offline. They're also using the NPU for device optimization based on how the user uses the phone. This would include adjustments to improve battery life, optimize storage, manage multiple processes and ensure that there's no performance degradation over time.

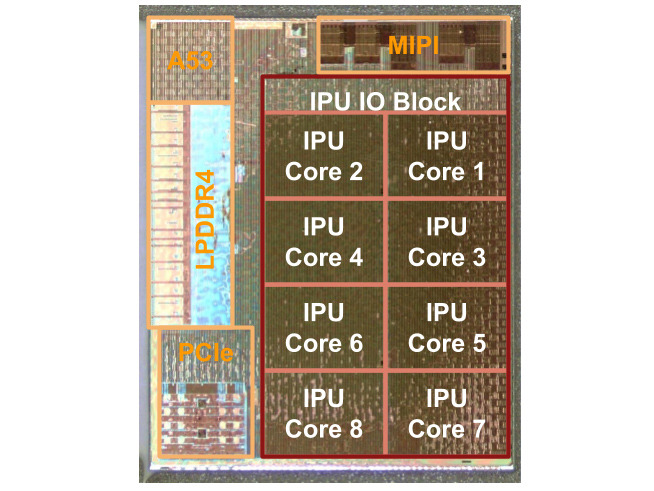

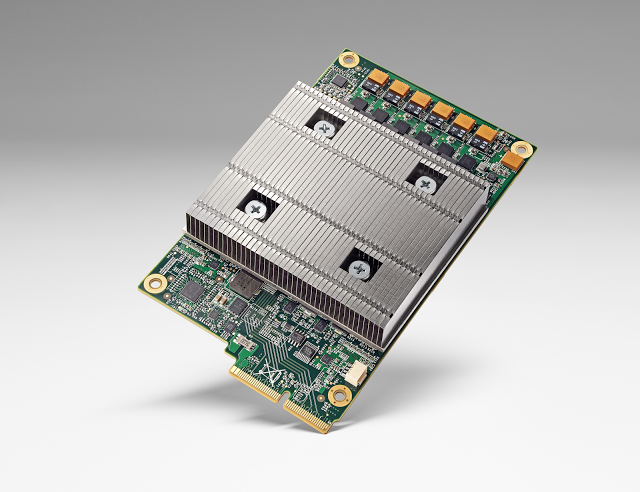

From my understanding, the Pixel Visual Core (PVC) is currently inactive, and Google's first major use for it will be HDR+ with Android 8.1 in the coming weeks. This is also something they'll open up to third-party apps shortly after. The camera on the Pixel 2 / 2 XL is actually quite unusual in that every pixel is split into two, meaning that each shot captures double the amount of data. Data is critical for Google, and the raw performance of the PVC lets it crunch that data in a very short amount of time. The data can be used for camera features such as HDR+ or portrait mode. It also benefits image recognition and AR applications.

While the CPU compute on the A11 is definitely higher than on the Kirin 970 or Snapdragon 835, the graphics performance is only slightly better. So I wouldn't exactly call it a case of stunted performance. That being said, the performance of any app or function that can leverage machine learning will be immensely higher on the PVC than on the A11. Raw performance alone is ~5x in favor of Google (~3.2x for Huawei). The PVC is a separate chip from the Snapdragon 835, whereas the machine learning hardware in the Kirin 970, like that in the A11, is part of the SoC. CPU performance for machine learning is almost insignificant compared to the GPU, let alone dedicated hardware.
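For anyone wondering where the ~5x and ~3.2x figures come from, here's a rough sketch of the arithmetic. The throughput numbers are an assumption on my part: they're the vendor-quoted peak figures as I recall them from the launch announcements, they aren't measured benchmarks, and the vendors don't all count "operations" the same way, so treat the ratios as ballpark only.

```python
# Vendor-quoted peak throughput, in trillions of operations per second.
# ASSUMPTION: these are marketing peak figures recalled from launch
# announcements, not measurements, and the units are not strictly comparable.
PEAK_TOPS = {
    "Apple A11 Neural Engine": 0.6,
    "Huawei Kirin 970 NPU": 1.92,
    "Google Pixel Visual Core": 3.0,
}


def ratio_vs(baseline, peaks):
    """Return each chip's peak throughput as a multiple of the baseline chip."""
    base = peaks[baseline]
    return {name: round(value / base, 2) for name, value in peaks.items()}


ratios = ratio_vs("Apple A11 Neural Engine", PEAK_TOPS)
for name, ratio in ratios.items():
    print(f"{name}: {ratio}x")
```

Peak numbers like these say nothing about how well a given model actually maps onto the hardware, so real-world gaps could be smaller or larger.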

You also need to take into account the fact that Google is just better at this than Apple. Looking at Google Assistant vs Siri, it's easy to see that Apple's offering is well behind. There's a lot on the software side that, even without the dedicated hardware, allows Google to offer offline features such as a limited Google Assistant and music recognition. Outside of Face ID, we haven't really seen anything major from Apple that leverages Core ML and the on-board machine learning hardware.

--

Drastically improving the performance of graphics features, such as ray tracing, is something that also becomes possible with machine learning hardware. I'd be curious to see whether smartphones ever utilize that for games, AR or other 3D applications.
