The Future of Gaming Tech Looks Brighter Than Ever

As our current stable of consoles begins to fade into obsolescence, the PC market has already defined what we can expect from the future of console gaming and development.

The moment Watch Dogs and Star Wars 1313 entered our field of view at E3, we were teased with a glimpse into the future of gaming tech with demos exhibiting real-time elements that we usually see in prerendered cutscenes and film. It was clear that the fidelity onscreen was a step above what we're used to. LucasArts has already said that Star Wars 1313 is intended for the next generation of hardware, and that much is obvious once the game is seen in action. Watch Dogs was confirmed for release on the Xbox 360 and PlayStation 3, but those versions will not reflect the same tech exhibited onstage, likely sacrificing lighting, texture resolution, and particle effects in the transition.

Both of these titles ran on high-end PCs at E3, with Star Wars 1313 (and Watch Dogs, presumably) using Nvidia's latest GPU (the Kepler-based GTX 680), a card that's opening doors for major advances in graphics and computation.

But if you try to pinpoint what made Watch Dogs and Star Wars 1313 look so impressive, a quick visual analysis will reveal that lighting and particles are key factors contributing to the perceived fidelity on display. Truthfully, a properly lit environment with 750K polygons looks more authentic than a poorly illuminated environment at twice the polygon count, especially in motion. These elements are only now becoming a reality in the realm of real-time rendering, and it's changing the balance of power in the gaming arms race. Where polygons were once king, they've been usurped by techniques that, in essence, require a different type of artistic prowess. This new direction is going to have a major impact on our expectations for AAA titles.

Just as delivering these techniques requires advanced hardware, a new breed of software is needed to implement them. Leading the charge is Epic's workhorse, Unreal Engine 4. The real-time demo Epic showed at E3 was nothing short of a revelation. After running a scripted cutscene, the Epic developer driving the demonstration began altering the scene in real time, shattering our assumptions about what can and can't be done on the fly. Light bounced off every surface and, depending on the shaders in use, appropriately illuminated the surrounding objects. This technique, aptly named global illumination, is nothing new to film, but it's a first for gaming. Once you see it in action, it's clear that these developments will have to define the coming generation of consoles if Sony and Microsoft hope to keep up with the PC market.
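
To make "light bouncing off every surface" concrete, here is a minimal Python sketch of one of the oldest formulations of diffuse global illumination, the radiosity equation B = E + ρFB, solved one bounce at a time. This is not the technique Unreal Engine 4 uses. The three patches, their reflectance values, and the coupling factors are hypothetical, chosen so that one patch (a "ceiling") has no direct line of sight to the light source and can only be lit by bounced light.

```python
# Toy radiosity solver illustrating diffuse global illumination.
# NOT Unreal Engine 4's method; patch layout and numbers are hypothetical.

def solve_radiosity(emission, reflectance, coupling, bounces=50):
    """Iterate B = E + rho * F @ B, adding one bounce of light per pass.

    emission[i]     -- light emitted by patch i (non-zero only for light sources)
    reflectance[i]  -- fraction of incoming light that patch i reflects (0..1)
    coupling[i][j]  -- how strongly light leaving patch j reaches patch i
    """
    n = len(emission)
    radiosity = list(emission)  # before any bounce: only emitters are lit
    for _ in range(bounces):
        radiosity = [
            emission[i]
            + reflectance[i] * sum(coupling[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
    return radiosity


if __name__ == "__main__":
    # Patches: 0 = lamp, 1 = floor, 2 = ceiling (hidden from the lamp).
    emission = [1.0, 0.0, 0.0]
    reflectance = [0.0, 0.5, 0.5]
    coupling = [
        [0.0, 0.4, 0.0],  # lamp (reflects nothing, so this row has no effect)
        [0.4, 0.0, 0.3],  # floor sees the lamp and the ceiling
        [0.0, 0.3, 0.0],  # ceiling sees only the floor
    ]
    # Direct light only: the ceiling stays completely dark.
    print(solve_radiosity(emission, reflectance, coupling, bounces=1))
    # With bounced light, the floor indirectly illuminates the ceiling.
    print(solve_radiosity(emission, reflectance, coupling, bounces=50))
```

With a single pass the ceiling receives nothing; with repeated passes it picks up light reflected from the floor. That indirect contribution is what separates global illumination from direct lighting alone.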

Clearly, the results speak for themselves, but beyond Unreal Engine 4, the hardware powering the demo deserves mention. The backbone of the machine was Nvidia's GTX 680, which represents a significant stride in delivering more performance in a smaller, more efficient package. To illustrate its prowess, consider another Epic demo that evolved between its debut at GDC '11 and its rebirth at GDC '12: the real-time Samaritan demo, built in Unreal Engine 3.

The point is that this demo, which ran at GDC '12 on a single GTX 680 (the same hardware behind the E3 Elemental demo), debuted in 2011 running on three GTX 580s. In broad terms, one GTX 680 can perform as well as a top-of-the-line, triple-SLI rig from 2011. This drives down cost, obviously, and the GTX 680 is compact enough to cram into a diminutive Shuttle PC, as Epic did for its presentations. That's good news for those of us who shudder at the thought of a full tower beside our desks.

Manufacturing processes continue to shrink year over year, and that accounts for much of the 680's performance, but Nvidia has done its fair share of legwork in the meantime. Regarding the Samaritan demo, the 2012 version used 76 percent less VRAM than the prior iteration--not a figure to be taken lightly. This is due in no small part to Nvidia's pioneering of FXAA (Fast Approximate Anti-Aliasing), a new antialiasing technique that taxes the GPU's shader cores rather than its memory. Using FXAA initially required developers to build it into their games' code, but thanks to a recent driver update, you can now enable FXAA through the Nvidia Control Panel, boosting performance in hundreds of PC titles. It also means Microsoft and Sony could adopt FXAA in the future, provided their hardware can back it up. And because FXAA is a post-processing technique, it could even improve 3D "classics" played through backward compatibility.
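
The 76 percent figure covers the whole Samaritan demo, but a back-of-the-envelope calculation shows why multisampling is so memory-hungry. This Python sketch assumes the following:

* a 1920x1080 frame;
* 4 bytes per color sample (RGBA8);
* 4 bytes per depth/stencil sample (24-bit depth plus 8-bit stencil);
* one extra single-sample color target for FXAA to write its result into.

All of these are illustrative assumptions, and the sketch models a single render target rather than an engine's full set, so it will not reproduce Epic's number.

```python
# Rough framebuffer memory: 4x MSAA vs. a post-process (FXAA-style) pass.
# All byte sizes are illustrative assumptions, not figures from Epic or Nvidia.

def framebuffer_bytes(width, height, msaa_samples=1, color_bytes=4, depth_bytes=4):
    """Memory for one color target plus one depth/stencil target.

    With MSAA, every pixel stores `msaa_samples` color and depth samples,
    plus a single-sample "resolved" color buffer that is actually displayed.
    """
    pixels = width * height
    total = pixels * (color_bytes + depth_bytes) * msaa_samples
    if msaa_samples > 1:
        total += pixels * color_bytes  # resolved (averaged) output image
    return total


def fxaa_bytes(width, height, color_bytes=4, depth_bytes=4):
    """FXAA runs on the finished single-sample image; assume it needs one
    extra color target to write the filtered result into (an assumption)."""
    base = framebuffer_bytes(width, height, 1, color_bytes, depth_bytes)
    return base + width * height * color_bytes


if __name__ == "__main__":
    mib = 1024 * 1024
    print(f"no AA:   {framebuffer_bytes(1920, 1080) / mib:.2f} MiB")
    print(f"4x MSAA: {framebuffer_bytes(1920, 1080, msaa_samples=4) / mib:.2f} MiB")
    print(f"FXAA:    {fxaa_bytes(1920, 1080) / mib:.2f} MiB")
```

Under these assumptions, 4x MSAA needs roughly 71 MiB, while the FXAA path needs roughly 24 MiB. A deferred renderer that keeps several render targets multisampled would widen that gap further. That is one plausible reason swapping MSAA for FXAA frees so much memory.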

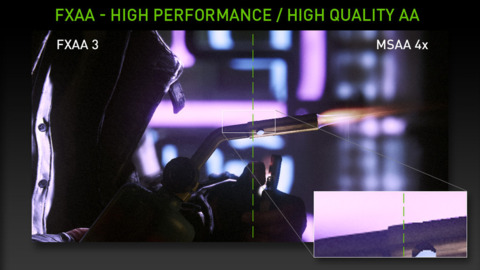

There is a trade-off when using FXAA, but it's one most people will likely accept. Essentially, once the environment and characters are rendered, jaggies and all, FXAA takes that output and works its magic. The previous king of antialiasing, MSAA (Multi-Sample Anti-Aliasing), has to account for every polygon and edge prior to rendering the image, sacrificing frames per second along the way. It's technically the more accurate technique of the two, but the minor discrepancies in antialiasing discrimination present in FXAA are overshadowed by the decrease in resource usage, and increase in frame rates. Overall, FXAA is hyper-efficient relative to other methods, and in most cases produces better results. Here is an image illustrating the differences between MSAA and FXAA, albeit in an isolated sample.
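
The core idea of post-process antialiasing can be sketched in a few lines of Python. This is a heavily simplified toy, not Nvidia's FXAA algorithm, which also estimates edge direction and searches along the edge. The function name, the four-neighbour contrast test, and the 0.1 threshold are assumptions for illustration. The filter sees only the finished image, here a grid of brightness values. It blends just the pixels that sit on a high-contrast edge and leaves flat areas alone.

```python
# Toy post-process antialiasing "in the spirit of" FXAA.
# Hypothetical simplification: NOT Nvidia's actual FXAA algorithm.

def simple_post_aa(image, contrast_threshold=0.1):
    """image: 2D list of brightness (luma) values in [0, 1], i.e. the
    already-rendered frame. Returns a new, filtered image; border pixels
    are copied unchanged."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]  # read from `image`, write to `out`
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            c = image[y][x]
            n, s = image[y - 1][x], image[y + 1][x]
            west, east = image[y][x - 1], image[y][x + 1]
            lo = min(c, n, s, west, east)
            hi = max(c, n, s, west, east)
            if hi - lo < contrast_threshold:
                continue  # low local contrast: not an edge, skip cheaply
            # Edge pixel: blend halfway toward the average of its neighbours.
            out[y][x] = 0.5 * c + 0.5 * (n + s + west + east) / 4
    return out


if __name__ == "__main__":
    # A hard diagonal "staircase" edge between black (0) and white (1).
    staircase = [
        [0, 0, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 1],
        [0, 1, 1, 1],
    ]
    for row in simple_post_aa(staircase):
        print(row)
```

Pixels along the staircase pick up intermediate shades, while the flat black region is untouched. The same contrast test also illustrates the trade-off described above. Because the filter only sees final pixel values, a sharp, high-contrast detail inside a texture would be softened exactly like a genuine polygon edge.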

With all of these factors in mind, it's easy to see how the next leap in console tech will be defined by more than advances in polygon counts and resolution. Some industry professionals bemoan a new generation, and there's a lot of truth to the notion that function should precede form in the realm of gaming. For some (including the hardware manufacturers), the market needs occasional stimulation--breadcrumbs that lead to a brighter, globally illuminated future. For better or worse, we've created an economy of performance, where games are valued primarily for their ability to impress their worth upon us in as little time as possible. Hopefully, these powers will be put to good use and help developers flex their creativity, rather than dictate it.

Got a news tip or want to contact us directly? Email news@gamespot.com