Nvidia's Kepler GTX 680: Powering the Next Gen

Quicker, quieter, and more power efficient, the GTX 680 is the new "world's fastest" GPU.

At GDC 2011, Epic Games (maker of Gears of War) unveiled Samaritan, an eye-popping technical demo that showed what was possible with Unreal Engine 3 and a seriously hardcore PC. It was--and still is--an impressive demo, showcasing smoothly tessellated facial features, point light reflections, and judicious use of movie-style bokeh. The demo was so impressive that Epic decided to show it again at this year's GDC, with vice president Mark Rein reiterating that Samaritan is its vision for the next generation--a "screw you" to the naysayers predicting that graphical prowess will play second fiddle to features and functionality.

The problem was, Samaritan didn't exactly run on your average gaming PC, requiring three Nvidia GTX 580 GPUs at a cost of thousands of dollars, as well as a power supply that brought Greenpeace members out in a cold sweat. And this left us wondering: if it took so much power to run the demo, what chance would the next generation of console and PC gamers have to experience it?

The answer, it turns out, was also unveiled at this year's GDC in the form of Nvidia's brand-new GTX 680 GPU, a single one of which easily powers the Samaritan demo. Aside from a few optimisations from Epic, most of that comes down to the new 28nm Kepler architecture that the 680 is based on. It features a new Streaming Multiprocessor (SMX) design, GPU Boost, new FX and TX Anti-aliasing (FXAA/TXAA) technology, Adaptive VSync, support for up to four monitors (including 3D Vision Surround), and--most interestingly of all--much reduced power consumption with an increased focus on performance per watt.

Multiple acronyms aside, just what do you get if you plonk down your hard-earned cash for a GTX 680? Quite a bit as it goes: 1,536 CUDA Cores, 128 Texture Units, 32 ROP Units, 1GHz Base Clock, 2GB GDDR5 RAM @ 6GHz, 128.8 GigaTexels/sec Filtering Rate, 28nm Fabrication, 2x Dual Link DVI, 1x HDMI, 1x DisplayPort, 2x 6-pin Power Connectors, and a 195 Watt TDP.
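
For the curious, the filtering rate in that list can be roughly sanity-checked from the other numbers. The sketch below assumes one filtered texel per texture unit per clock, and it assumes the "1GHz" base clock is a rounded 1006MHz; neither assumption is spelled out in the spec list, so treat this as back-of-the-envelope arithmetic rather than Nvidia's own method.

```python
# Rough check of the quoted texture filtering rate.
TEXTURE_UNITS = 128      # from the spec list
BASE_CLOCK_MHZ = 1006    # assumption: the exact clock behind the rounded "1GHz"


def texel_rate_gtexels(texture_units: int, clock_mhz: float) -> float:
    """Peak filtering rate, assuming one texel per texture unit per clock."""
    return texture_units * clock_mhz / 1000.0


rate = texel_rate_gtexels(TEXTURE_UNITS, BASE_CLOCK_MHZ)
print(f"{rate:.1f} GigaTexels/sec")  # 128.8 GigaTexels/sec
```

Plug in a flat 1000MHz instead and you get 128.0, which is why the rounded clock and the quoted rate don't quite line up.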

Based on specs alone, the 680 is a more powerful beast than its predecessor, but it's also more practical. The four display outputs mean you can drive four monitors (at up to 4K 3840x2160 resolution!) at once from a single card. That goes for 3D Vision Surround too, meaning you don't have to splurge on an SLI setup if you're into giving yourself a headache. Also notable is the reduced power consumption of the card, which has a TDP of 195 Watts, compared to 250 Watts in a GTX 580, meaning you only need two six-pin connectors, and you can run it from a much smaller power supply.

The reduced TDP also results in reduced heat, meaning it's easier to keep cool. The reference card has an all-new cooling setup that's much kinder on your ears; a godsend for anyone that ever had to endure the jet engine sounds of the GTX 400 series. It features special acoustic dampening material around the fan, a triple heat pipe design, and a redesigned fin stack that has been shaped for better airflow. Of course, this being a reference card, you can expect manufacturers to come up with their own crazy cooling solutions once they start shipping 680s.

Another neat feature of the 680 is a new hardware-based H.264 video encoder called NVENC. If you've ever tried to encode H.264 video, you'll know that it's a time-consuming process. Previous GTX cards sped up encoding using the GPU's CUDA cores, but doing so increased power consumption. And so, in keeping with Nvidia's new power-saving attitude, the NVENC encoder consumes much less power, while also being four times as fast.

That means 1080p videos encode up to eight times faster than real time, depending on your chosen quality setting, so a 16-minute-long 1080p, 30fps video takes approximately 2 minutes to complete. Unfortunately, software developers need to incorporate NVENC support in their software, so at launch you're limited to using CyberLink's MediaEspresso. Support for CyberLink PowerDirector and ArcSoft MediaConverter is promised for a later date.
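
If you want to work out how long your own footage might take, the sum is simple enough. This is a minimal sketch that takes the "up to eight times faster than real time" figure at face value; the function name is made up for illustration, and real results depend on the quality setting.

```python
def encode_minutes(video_minutes: float, speed_vs_real_time: float) -> float:
    """Estimated encode time for a clip at a given multiple of real-time speed."""
    if speed_vs_real_time <= 0:
        raise ValueError("speed must be positive")
    return video_minutes / speed_vs_real_time


# The 16-minute 1080p example at the best-case 8x speed.
print(f"{encode_minutes(16, 8):.0f} minutes")  # 2 minutes
```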

One other notable improvement to the GTX 680 comes in the form of improved PhysX performance. And, if you're anything like us, then nothing gets you more excited than realistic cloth animations and individually animated strands of hair. To demonstrate the 680's improved performance, Nvidia has put together a tech demo featuring a very hairy ape in a wind tunnel. Each strand of its fur is individually animated, with PhysX processing each movement in real time.

Nvidia also put together a demo called Fracture, which features three destructible pillars. Instead of scripted animations, it uses PhysX to calculate the destruction of an object in real time. Depending on what force the pillar is struck with and taking into account the environment and any previous damage, it falls apart in an amazingly realistic way. The obvious application for this tech is in action games, where gunfire could accurately damage buildings.

The improvements to PhysX aren't just part of a tech demo either. The PC version of Gearbox Software's upcoming Borderlands 2 is set to support many PhysX enhancements. These include water that reacts accurately to a player's movements, rippling and splashing around the environment as you walk through it. Borderlands 2 also makes use of PhysX to render destruction. For example, fire a rocket launcher into the ground, and huge chunks of earth and gravel fly into the air. The resulting debris settles on the floor, where you can kick it around by walking through it.

So those are the top-line improvements to Kepler, but there's plenty more tech to get stuck into below, where we take a more in-depth look at Nvidia's latest architecture. Or, if you just want to get straight to some benchmarks, skip ahead to the Benchmarks section.

A Closer Look at Kepler

SMX Design

Since the GTX 400 series, Nvidia GPUs have been based on the Fermi architecture, which introduced DirectX 11 and OpenGL 4.0 support, as well as a new pipeline technology for improved tessellation performance. Kepler builds upon Fermi, but instead of simply increasing clock speeds to achieve better performance, it actually decreases them in favour of having more processing (CUDA) cores operating at lower speeds. It's much like Intel's transition from the "hotter than the surface of the sun" architecture of the Pentium 4, to the much more power-efficient Core architecture.

The 680's CUDA cores reside within the SMX. Most GPU functions are performed by the SMX, including pixel and geometry shading, physics calculations, texture filtering, and tessellation. Each SMX contains 192 CUDA cores, which is six times as many as in each of Fermi's multiprocessors, and the 680 contains eight SMX blocks for a total of 1,536 cores. This increase provides two times the performance per watt of Fermi. What that means for you is a graphics card with much greater performance at reduced power consumption of 195W compared to 250W in a GTX 580. The reduction is so great that the card requires only two six-pin power connectors. It also means less heat is generated, and smaller power supplies such as those in Alienware's console-sized X51 desktop can be used.
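
The numbers in that paragraph hang together, as a quick bit of arithmetic shows. Note that the 32-cores-per-multiprocessor figure for Fermi is an assumption implied by the "six times" claim rather than something stated outright here.

```python
SMX_BLOCKS = 8
CORES_PER_SMX = 192
CORES_PER_FERMI_SM = 32   # assumption: implied by the "six times as many" claim

GTX_680_TDP_W = 195
GTX_580_TDP_W = 250

total_cores = SMX_BLOCKS * CORES_PER_SMX
core_ratio = CORES_PER_SMX / CORES_PER_FERMI_SM
tdp_saving = 1 - GTX_680_TDP_W / GTX_580_TDP_W

print(f"Total CUDA cores: {total_cores}")              # Total CUDA cores: 1536
print(f"Cores per SMX vs Fermi: {core_ratio:.0f}x")    # Cores per SMX vs Fermi: 6x
print(f"TDP reduction vs GTX 580: {tdp_saving:.0%}")   # TDP reduction vs GTX 580: 22%
```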

GPU Boost

Another performance-pushing feature of the 680 is GPU Boost, which dynamically overclocks the GPU from the base clock speed of 1GHz. That's possible thanks to some clever monitoring of the GPU's Thermal Design Power (TDP). Many games and applications don't tax the GPU to its maximum, leaving some TDP headroom available. In those cases the clock speed of the GPU is increased on the fly, typically by around 5 percent, but sometimes by as much as 10 percent, giving you a boost in performance.

What's neat is that the feature is entirely automated, being integrated into the 680 drivers and hardware, so you get better performance with little effort on your part. That's not to say performance junkies can't push things further; like all previous GeForce cards, the 680's base clock can be overclocked as far as you're willing to push it.
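
To get a feel for the idea, here's a toy model of a headroom-based boost. It is emphatically not Nvidia's algorithm, which isn't described in detail here: the function name, the linear mapping from spare power to extra clock speed, and the 10 percent cap (borrowed from the "as much as 10 percent" figure above) are all assumptions made for illustration.

```python
def boosted_clock_mhz(base_mhz: float, board_power_w: float,
                      tdp_w: float = 195.0, max_boost: float = 0.10) -> float:
    """Toy model: turn unused TDP headroom into extra clock speed.

    Assumes (hypothetically) that each 1% of spare power allows 1% more
    clock, capped at max_boost. Real hardware is far more involved.
    """
    headroom = max(0.0, (tdp_w - board_power_w) / tdp_w)
    boost = min(max_boost, headroom)
    return base_mhz * (1.0 + boost)


# A game that maxes out the card gets no boost; lighter loads get more.
for power in (195.0, 185.25, 150.0):
    print(f"{power:6.2f}W -> {boosted_clock_mhz(1000.0, power):.0f}MHz")
# 195.00W -> 1000MHz
# 185.25W -> 1050MHz
# 150.00W -> 1100MHz
```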

FXAA

One of the key features in getting Samaritan to run on just a single graphics card is FXAA, Nvidia's own custom anti-aliasing. Good anti-aliasing is important if you don't want your games to look like a jaggy mess around the edges of objects, with most modern games using some form of it. The most common is multi-sample anti-aliasing (MSAA), which was used extensively in the first Samaritan demo. While MSAA produces some beautiful results, it's a bit of a resource hog.

Nvidia's FXAA produces similar (if not better) results. It's a pixel-shader image filter that runs alongside other post-processing effects like motion blur and bloom, and it uses much less of the GPU's resources. That means a performance hit of around 1ms per frame or less, resulting in frame rates that are around two times higher than with 4xMSAA.
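
To put that 1ms figure in context, here's a rough sketch of what a fixed per-frame cost does to your frame rate. The 60fps starting point is just an example, and the function name is made up for illustration.

```python
def fps_after_cost(base_fps: float, extra_ms: float) -> float:
    """Frame rate after adding a fixed per-frame cost in milliseconds."""
    frame_ms = 1000.0 / base_fps
    return 1000.0 / (frame_ms + extra_ms)


# A game running at 60fps spends about 16.7ms on each frame.
# Adding roughly 1ms of FXAA work nudges that down only slightly.
print(f"{fps_after_cost(60.0, 1.0):.1f}fps")  # 56.6fps
```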

While FXAA has been around for some time, it has previously been dependent on developers implementing it: if the game you'd bought didn't support it, you were out of luck. With the GTX 680, FXAA can be turned on from the Nvidia control panel, making it compatible with most games.

TXAA

And, as if that weren't enough AA talk for you, the Kepler architecture also features TXAA, which is a brand-new film-style AA technique that works exclusively on the GTX 680. It's a mix of hardware anti-aliasing, a custom CG film-style AA resolve, and--in the case of TXAA 2--an optional temporal component for better image quality.

Like FXAA, it also requires much less processing power, resulting in better performance. TXAA offers similar visual quality to 8xMSAA, but with the performance hit of 2xMSAA, while TXAA 2 offers image quality that is superior to 8xMSAA, but with the performance hit of 4xMSAA.

It's certainly impressive but will require game developers to support it in future titles, so you won't be able to go TXAA crazy from day one. That said, the developers of MechWarrior Online, The Secret World, Eve Online, and Borderlands 2 have all promised to support the technology, as have BitSquid, Slant Six Games, Crytek, and Epic with its upcoming Unreal Engine 4.

Adaptive VSync

If you get annoyed by screen tearing or random stuttering in your favourite games, then Adaptive VSync is for you. VSync is the process of presenting new frames in step with your monitor's refresh rate, that is, at up to 60fps for your typical 60Hz monitor. The problem is, if you suddenly hit a particularly taxing area in your game that causes the frame rate to drop, rather than simply decreasing slightly, it drops right down to 30fps. This causes noticeable stuttering.

You might imagine the solution is to simply turn VSync off, but that presents its own problems. With VSync off, new frames are presented immediately, which causes a visible tear line onscreen at the switching point between old and new frames. It's exacerbated at higher frame rates, where the tearing gets bigger and more distracting.

Nvidia's solution to this predicament is Adaptive VSync, which switches VSync on and off on the fly. If your frame rate drops below 60fps, VSync is automatically disabled, thus preventing any stuttering. Once you hit 60fps again, VSync is turned back on to reduce screen tearing.

Like FXAA, Adaptive VSync doesn't require developer support, so you can simply turn it on in the Nvidia control panel when needed.
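
The difference between the two modes can be sketched with a little arithmetic. This is a simplified model, not Nvidia's driver logic: it assumes classic double-buffered VSync, where a finished frame has to wait for the next refresh, and the function names are invented for illustration.

```python
import math


def vsync_fps(render_ms: float, refresh_hz: float = 60.0) -> float:
    """Effective frame rate with plain VSync (assumes double buffering).

    A frame that misses one refresh waits for the next, so the frame
    rate snaps to the refresh rate divided by a whole number.
    """
    interval_ms = 1000.0 / refresh_hz
    intervals = max(1, math.ceil(render_ms / interval_ms))
    return refresh_hz / intervals


def adaptive_vsync_fps(render_ms: float, refresh_hz: float = 60.0) -> float:
    """Effective frame rate with the on/off switching described above."""
    raw_fps = 1000.0 / render_ms
    if raw_fps >= refresh_hz:
        return refresh_hz   # VSync on: capped at the refresh rate, no tearing
    return raw_fps          # VSync off: frames shown as soon as they're ready


# A frame that takes 20ms to render (50fps without VSync):
print(vsync_fps(20.0))           # 30.0
print(adaptive_vsync_fps(20.0))  # 50.0
```

In this model a small dip from 60fps to 50fps costs you half your frame rate with plain VSync, while the adaptive approach just lets the game run at 50fps.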

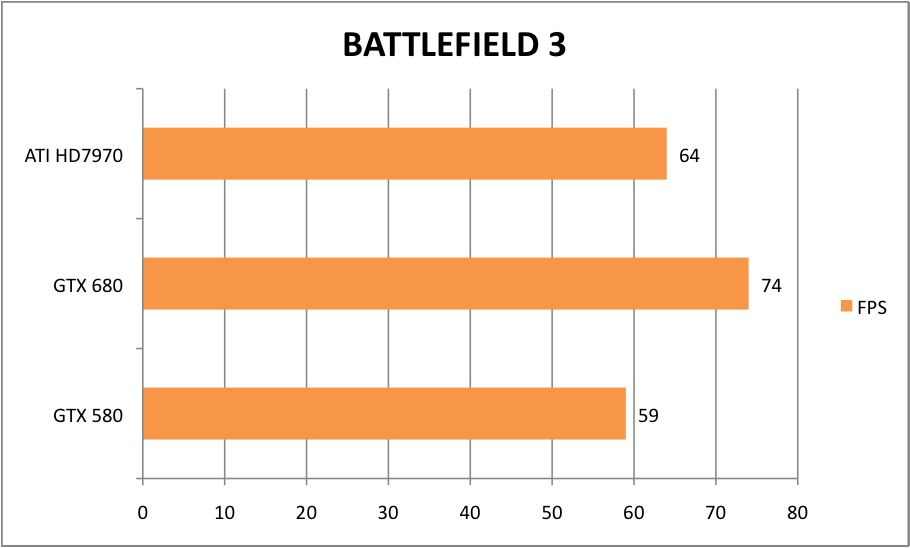

Benchmarks

Because the release of a new graphics card wouldn't be complete without some benchmarks and bar charts, we've rounded up three of the most GPU-taxing games we could find to put the GTX 680 through its paces. We also nabbed a GTX 580 and an AMD Radeon HD 7970 for some added competition. Each game was run at maximum settings, with AA enabled and at a 1080p resolution. For the Unigine Heaven benchmark, tessellation was set to extreme.

The games were tested using the following system:

Intel Core i7 2700K 3.5GHz

16GB Crucial Ballistix Sport DDR3 RAM

ASRock Extreme 4 Gen3 Motherboard

Corsair Force Series GT SSD

Corsair H100 Liquid Cooling

Corsair HX 850 PSU

But What Does It All Mean?

Judging by our very orange bar charts, it's easy to see what a great performer the GTX 680 is. Not only is it a step up from its predecessor, but it also outperforms AMD's high-end Radeon HD 7970 by some margin.

What the charts don't tell you, though, is just how damn quiet the 680 is. There's a very noticeable difference in volume between it and the other cards, particularly when it's running at full whack. If your gaming PC lives under the TV in the living room, or you have it in the same room as your significant other, you'll definitely appreciate the decreased volume.

There's a price to pay for all that quiet power, though, with the GTX 680 retailing at around £429, which is pretty much the same price as AMD's Radeon HD 7970. With its greater performance, quiet cooling, support for four monitors, much-reduced power consumption, and a bunch of new technologies under the hood, the GTX 680 is easily the better choice. Plus, if you're into headaches, Nvidia's 3D Vision Surround is much more widely supported than AMD's solution, and it performs better too.

The question is, does anyone actually need a card like the GTX 680? After all, a previous-generation GTX 580 can run pretty much anything you throw at it at maximum settings. And while Nvidia hasn't announced anything just yet, it's likely there will be midrange 600 series cards to follow later in the year at a much cheaper price point.

But if Epic's Samaritan demo really is what the next generation of games is going to look like--maybe even more so with Unreal Engine 4--then cards like the GTX 680 are just what the gaming industry needs to push through technological advances and create experiences that can astound, and make us more immersed in our favourite games than ever before. And with its advances in power consumption, there's a chance--however slim--that something like it might just make its way into a next-generation console.

You might not need a 680 just yet, but as soon as a game that looks as good as Samaritan hits, you'll definitely want one.

