ATI Radeon HD 2000 Series Hands-On

Find out about ATI's DirectX 10 Radeon HD 2000 series video card lineup.

[UPDATE: Added new release information and benchmark results for the Radeon HD 2600 and HD 2400 series cards.]

AMD may have released its new DirectX 10-compatible ATI Radeon months after the Windows Vista launch, but the delay hasn't affected PC enthusiasts because most of the highly anticipated DirectX 10 games, such as Crysis and BioShock, aren't going to arrive until the second half of the year. Yes, we've seen DirectX 10 versions of Lost Planet and Company of Heroes, but we can't expect modified DirectX 9 games to compete against new games developed for DirectX 10. Subtle shadowing changes and extra rocks on the ground aren't going to help Microsoft sell more copies of Vista.

Those holding off on DirectX 10 Windows Vista upgrades in anticipation of upcoming DirectX 10 games can choose from two brands of video cards. Nvidia's DX10-compatible GeForce 8 series has been available since the end of last year. AMD released the first member of its ATI Radeon HD 2000 GPU lineup, the ATI Radeon HD 2900 XT, this past May. The midrange Radeon HD 2600 and the entry-level Radeon HD 2400 cards arrive in early July. All the Radeon HD 2000 series cards feature full DirectX 10 compatibility, image quality enhancements, and hardware-accelerated high-definition video support.

| | Radeon HD 2900 XT | Radeon HD 2600 XT | Radeon HD 2600 Pro | Radeon HD 2400 XT | Radeon HD 2400 Pro |
|---|---|---|---|---|---|
| Price: | $399 | $149 (GDDR4), $119 (GDDR3) | $89 – 99 | $75 – 85 | $50 – 55 |
| Stream Processing Units: | 320 | 120 | 120 | 40 | 40 |
| Clock Speed: | 740MHz | 800MHz | 600MHz | 700MHz | 525MHz |
| Memory: | 512MB (GDDR3) | 256MB (GDDR4, GDDR3) | 256MB (GDDR3, DDR2) | 256MB (GDDR3) | 128 – 256MB (DDR2) |
| Memory Interface: | 512-bit | 128-bit | 128-bit | 64-bit | 64-bit |
| Memory Speed: | 825MHz | 800MHz (GDDR3), 1100MHz (GDDR4) | 400 – 500MHz | 700 – 800MHz | 400 – 500MHz |
| Transistors: | 700 million | 390 million | 390 million | 180 million | 180 million |
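
The memory interface and memory speed rows combine into a theoretical peak bandwidth figure. The sketch below is a rough calculation, not an ATI specification. It assumes the listed memory speeds are base clocks and that each memory type moves two transfers per clock (double data rate), which the table does not state. The function name and the card dictionary are illustrative only.

```python
def peak_bandwidth_gbs(bus_width_bits, memory_clock_mhz, transfers_per_clock=2):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    Assumes double-data-rate memory (two transfers per clock) by default.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# (bus width in bits, memory clock in MHz), taken from the table above.
cards = {
    "Radeon HD 2900 XT": (512, 825),
    "Radeon HD 2600 XT (GDDR4)": (128, 1100),
    "Radeon HD 2600 XT (GDDR3)": (128, 800),
    "Radeon HD 2400 XT": (64, 800),  # upper end of the 700 - 800MHz range
}

for name, (bus_width, clock) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(bus_width, clock):.1f} GB/s")
```

Under these assumptions, the 512-bit interface is what separates the HD 2900 XT from the rest of the line: its memory clock is close to that of the GDDR3 HD 2600 XT, but the bus is four times as wide.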

The Radeon HD 2600 and Radeon HD 2400 cards will be available in the usual Pro and XT variants, but there will be only a single XT card at the HD 2900 level at launch. The XT cards generally have faster core and memory clock speeds than the Pro versions. The model numbers indicate relative GPU strength. For example, the Radeon HD 2900 has 320 stream processing units, while the Radeon HD 2600 and Radeon HD 2400 have 120 and 40 stream processing units, respectively. On-board memory starts at 128MB to 256MB on the 2400, sits at 256MB on the 2600, and reaches 512MB on the 2900. Board manufacturers will likely offer more memory options as the GPU line matures. Several manufacturers are also working on special Radeon HD 2600 XT "Gemini" cards that will have two 2600 XT GPUs on a single card. ATI hasn't announced a launch date for the dual-GPU "Gemini" board yet, but the company has stated that the suggested pricing will be in the $189-$249 range.

The Radeon HD 2000 GPU is ATI's first unified shader architecture for the desktop, but it's actually a second-generation design. ATI's first unified shader was the "Xenos" GPU built for the Xbox 360. Older, nonunified shader designs had separate hardware shaders dedicated to pixel or vertex processing. This made GPUs inefficient, because a game's pixel-to-vertex workload ratio shifts from scene to scene and rarely matches the fixed pixel-to-vertex shader ratio built into the hardware, leaving one group of shaders idle while the other is overloaded. The unified shader architecture gives the GPU more flexibility by allowing all the shaders to process pixel, vertex, and now, with DirectX 10, geometry work. Nvidia also switched over from traditional shaders to the unified shader approach in its current GeForce 8 GPU.
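
The efficiency argument can be illustrated with a toy model. This is a sketch under stated assumptions, not a description of any real GPU's scheduler. The unit counts (24 pixel shaders, 8 vertex shaders, 32 unified shaders) and the workload numbers are hypothetical. The model also assumes every unit finishes one unit of work per time step and ignores geometry work.

```python
def frame_time_fixed(pixel_work, vertex_work, pixel_units, vertex_units):
    """Dedicated shaders: the frame waits on whichever pool finishes last."""
    return max(pixel_work / pixel_units, vertex_work / vertex_units)


def frame_time_unified(pixel_work, vertex_work, total_units):
    """Unified shaders: any unit can take any work, so the load spreads evenly."""
    return (pixel_work + vertex_work) / total_units


# Hypothetical hardware: 24 pixel + 8 vertex units versus 32 unified units.
PIXEL_UNITS, VERTEX_UNITS = 24, 8
UNIFIED_UNITS = PIXEL_UNITS + VERTEX_UNITS

# Hypothetical (pixel_work, vertex_work) per frame; each totals 960 units.
workloads = [(720, 240), (480, 480), (240, 720)]

for pixel_work, vertex_work in workloads:
    fixed = frame_time_fixed(pixel_work, vertex_work, PIXEL_UNITS, VERTEX_UNITS)
    unified = frame_time_unified(pixel_work, vertex_work, UNIFIED_UNITS)
    print(f"pixel={pixel_work} vertex={vertex_work}: "
          f"fixed={fixed:.1f} steps, unified={unified:.1f} steps")
```

In this model the two designs tie only when the workload happens to match the hardware's 3:1 split. As the mix drifts toward vertex work, the fixed design slows down while the unified design's frame time stays constant.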

ATI created a new Ruby "Whiteout" tech demo to show off what the Radeon HD 2900 XT can do. In the new demo, the Ruby model has 200,000 triangles, and the entire video averages more than 1 million triangles per frame. In comparison, the Ruby from the Radeon X1000 series demo, "The Assassin," only has 80,000 triangles, and the demo averages just over 500,000 triangles per frame. The new Ruby also has 128 facial animation targets compared to four for the older models, which allows for more realistic facial expressions.

The Radeon HD 2900 XT will also feature the return of the voucher. Each card will come with a code for Valve's Half-Life 2: The Black Box, which includes Half-Life 2 Episode 2, Portal, and Team Fortress 2. It'll be a great value if the game ships on time. Several years ago, ATI partnered with Valve to include vouchers for the original Half-Life 2 with high-end Radeon 9000 series cards, but some consumers ended up waiting years to cash in because of development delays.

ATI Radeon HD 2000 Series

The Radeon HD 2900 XT has a dual-slot design with a cooler that pulls air from within the case and exhausts it out the back of the card. Each card can draw up to 215 watts of power at standard clock speeds. AMD recommends having a 550W power supply unit for a single card and a 750W power supply for a dual-card CrossFire setup. But company representatives have indicated that these recommendations are on the cautious side, as they've certified power supply units as low as 400W for the Radeon HD 2900 XT.

The card has two power connectors, a 6-pin PCI-E connector and a new 8-pin PCI-E connector. Most new power supply units will have the 8-pin cable, but older units might only have 6-pin cables. That doesn't mean potential owners will need to buy a new power supply. You can plug a 6-pin cable into the 8-pin socket to get the card to run. However, you will need an 8-pin cable if you want to enable overclocking, because the 8-pin connector can supply twice as much power as a 6-pin connector.
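
The overclocking restriction makes sense once the power budget is added up. The sketch below uses the connector limits from the PCI Express specifications (75W from the slot, 75W from a 6-pin cable, 150W from an 8-pin cable). Those figures come from the specification, not from ATI. It also assumes that a 6-pin cable plugged into the 8-pin socket delivers only a 6-pin cable's rated power. The 215W draw is the figure quoted earlier for standard clock speeds.

```python
# Rated limits from the PCI Express specifications (not figures from ATI).
SLOT_WATTS = 75
CONNECTOR_WATTS = {"6-pin": 75, "8-pin": 150}

# Maximum draw at standard clock speeds, as quoted in this article.
CARD_DRAW_WATTS = 215


def available_watts(cables):
    """Total rated power from the slot plus the attached cables."""
    return SLOT_WATTS + sum(CONNECTOR_WATTS[cable] for cable in cables)


def headroom_watts(cables, draw=CARD_DRAW_WATTS):
    """Rated power left over after the card's stock draw."""
    return available_watts(cables) - draw


setups = {
    "6-pin + 8-pin": ["6-pin", "8-pin"],
    "6-pin + 6-pin (6-pin cable in the 8-pin socket)": ["6-pin", "6-pin"],
}

for label, cables in setups.items():
    print(f"{label}: {available_watts(cables)}W available, "
          f"{headroom_watts(cables)}W headroom")
```

With two 6-pin cables, the rated budget barely covers the stock draw, which leaves almost nothing for higher clocks. The 8-pin cable is what opens up meaningful headroom.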

Power will not be a concern for the midrange and entry-level Radeon cards. The Radeon HD 2600 XT sports a single-slot design and no external power connectors. It's about as long as a Radeon HD 2900 XT, but doesn't have the power draw of its older sibling. The Radeon HD 2600 Pro has a shorter board and a basic cooling unit. The Radeon HD 2400 XT is even more compact with its "L" shaped board. Several card manufacturers are also designing fanless 2600 and 2400 cards with massive heatsinks for quiet operation. The Radeon HD 2900 XT, 2600 XT, and 2400 XT cards all have the CrossFire connectors for dual-card support. We noticed that our Radeon HD 2600 Pro didn't have the CrossFire card connectors, but ATI representatives told us that the Pro can also support CrossFire if the video card manufacturer chooses to add the connectors to the board.

(From left to right: ATI Radeon HD 2600 XT, ATI Radeon HD 2600 Pro, ATI Radeon HD 2400 XT.)

Image Quality Improvements

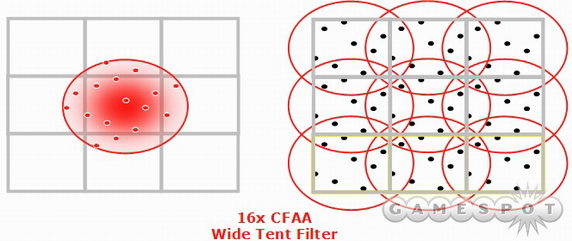

The Radeon HD 2000 series offers several image quality improvements over the previous Radeon X1000 line. There's new custom filter anti-aliasing (CFAA) support that allows for programmable filters, which can be updated with new video card drivers. New CFAA modes include "narrow tent" and "wide tent" filters that sample neighboring pixels in addition to sampling within the primary pixel to create smoother blends. The drivers also offer an additional "edge detect" filter that determines if a pixel is lined up on an edge and gives additional weight to samples along that same edge. The new CFAA modes are a mix and match of the various tent and edge detect filtering options.
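
A tent filter can be sketched in a few lines. This is a generic illustration of the idea, not ATI's implementation. The sample positions, the radii chosen for the "narrow" and "wide" cases, and the function names are all hypothetical, since the exact sample patterns and weights the driver uses are not described here. Each sample's weight falls off linearly with its distance from the pixel center, and a larger radius pulls in more of the neighboring pixels.

```python
import math


def tent_weight(distance, radius):
    """Linear falloff: 1.0 at the pixel center, 0.0 at the radius and beyond."""
    return max(0.0, 1.0 - distance / radius)


def resolve_pixel(samples, center, radius):
    """Blend samples into one value using tent weights.

    samples: list of ((x, y), value) pairs, with value in [0.0, 1.0].
    """
    total_weight = 0.0
    total_value = 0.0
    for (x, y), value in samples:
        distance = math.hypot(x - center[0], y - center[1])
        weight = tent_weight(distance, radius)
        total_weight += weight
        total_value += weight * value
    return total_value / total_weight if total_weight else 0.0


# A white pixel (value 1.0) covering [0, 1] x [0, 1], with black neighbors.
center = (0.5, 0.5)
samples = [
    ((0.25, 0.25), 1.0),   # inside the primary pixel
    ((0.75, 0.75), 1.0),   # inside the primary pixel
    ((-0.25, 0.5), 0.0),   # left neighbor
    ((1.25, 0.5), 0.0),    # right neighbor
]

narrow = resolve_pixel(samples, center, radius=1.0)  # hypothetical "narrow tent"
wide = resolve_pixel(samples, center, radius=1.5)    # hypothetical "wide tent"
print(f"narrow tent: {narrow:.3f}, wide tent: {wide:.3f}")
```

In this example the wide tent produces a darker result than the narrow tent because the black neighbor samples carry more weight. That is the smoother blend described above, and it comes at the cost of some sharpness.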

The ATI Radeon HD 2000 also has a programmable hardware tessellation unit that can transform a basic polygon model into a highly detailed model by recursively subdividing existing triangles into a more accurate shape. The tessellation unit will let games use high-quality models with much lower bandwidth overhead. The Xbox 360 graphics have a similar tessellation unit, which Rare used in

High-Definition Video

ATI has equipped the Radeon HD 2000 series with several new Avivo features to aid high-definition video playback. All GPUs, including the Radeon HD 2400 and the Radeon HD 2600, have a built-in unified video decoder chip designed to accelerate VC-1 and H.264 decoding for smooth HD-DVD and Blu-ray playback with minimal CPU utilization. Moving all the work to the Radeon GPU will let system owners watch HD content on systems that have less powerful CPUs.

All that decoding power won't be of use if the card can't actually output video because of copy protection restrictions. ATI has added all the necessary features to get that copy-protected, high-definition content onto any HDCP-compliant screen. ATI has embedded the necessary encryption keys into every chip to ensure HDCP video compliance right out of the box. Radeon HD 2000 cards will also be able to provide full-resolution content to dual-link HDCP displays. Nvidia's GeForce 8 GPUs are also HDCP-ready, but it's only enabled if the video card manufacturer chooses to add an encryption ROM to the board.

ATI will also offer a DVI-to-HDMI adapter to provide Radeon HD 2000 HDMI output support. However, simply creating an HDMI adapter and attaching it to a regular video card wouldn't be of use because it would only carry a video signal. ATI has integrated an audio controller directly into the new chip to allow the card to output both audio and video over HDMI.

The ATI Radeon HD 2900 XT is available in stores now, and the Radeon HD 2600 and Radeon HD 2400 cards should reach online retailers in early July.

The Radeon HD 2900 XT's $400 price places it in direct competition with the Nvidia GeForce 8800 GTS 640MB, not the GeForce 8800 GTX. However, Nvidia isn't going to let ATI beat up on its six-month-old GTS without a response. Team Nvidia is releasing a new overclocked GeForce 8800 GTS GPU to greet ATI's new Radeon. The EVGA "Superclocked" GeForce 8800 GTS 640MB we tested yielded around five to ten percent more frames per second than our reference GeForce 8800 GTS 640MB. Nvidia will slot the overclocked GTS in the $400 spot and the regular GTS will settle into the $350 area. Our performance testing confirms that the ATI Radeon HD 2900 XT is comparable to the GeForce 8800 GTS. The cards traded wins across all of our tests. Frame rates were close in most games, except in S.T.A.L.K.E.R., where the GTS blew past the XT.

With its estimated $149 retail price, the Radeon HD 2600 XT with GDDR4 memory will likely sit somewhere between the $129 GeForce 8600 GT and the $175 GeForce 8600 GTS. The Radeon HD 2600 XT CrossFire setup won all the midrange tests--as it should, since it'll cost around $300 to put together a matching pair of Radeon HD 2600 XTs. The single Radeon HD 2600 XT outperformed the GeForce 8600 GT and GTS cards in Company of Heroes, but fell behind both cards in S.T.A.L.K.E.R. and could only tie the less expensive GeForce 8600 GT in Oblivion.

The $99 Radeon HD 2600 Pro should be compared to the GeForce 8500 GT, which currently sells for $90-$110 online. Following the game preferences exhibited by the midrange cards, the Radeon HD 2400 XT has the advantage in Company of Heroes, the GeForce takes S.T.A.L.K.E.R., and both cards draw even in Oblivion.

System Setup: Intel Core 2 X6800, Intel 975XBX2, EVGA nForce680i SLI, 2GB Corsair Dominator CM2X1024 Memory (1GB x 2), 160GB Seagate 7200.7 SATA Hard Disk Drive, Windows XP SP2, Windows Vista Ultimate. Graphics Cards: ATI Radeon HD 2900 XT 512MB, ATI Radeon X1950 XTX 512MB, Nvidia GeForce 8800 GTX 768MB, Nvidia GeForce 8800 GTS 640MB. Graphics Driver: ATI Catalyst 7.4, ATI Catalyst beta 8-37-4-070419a, Nvidia Forceware 158.22, Nvidia Forceware beta 158.43.

System Setup: Intel Core 2 X6800, Intel 975XBX2, 2GB Corsair Dominator CM2X1024 Memory (1GB x 2), 160GB Seagate 7200.7 SATA Hard Disk Drive, Windows XP SP2, Windows Vista. Graphics Cards: GeForce 8600 GT 256MB, GeForce 8500 GT 256MB, Radeon HD 2600 XT 256MB, Radeon HD 2600 Pro 256MB, Radeon HD 2400 XT 256MB. Graphics Driver: beta Catalyst 8.38.9.1, Forceware 158.22 (Windows XP), Forceware 158.24 (Windows Vista).

We used the same application test suite in our Windows Vista testing. We actually ran several Call of Juarez and Lost Planet tests, but we determined that DirectX 10 isn't suitable for benchmarking yet. The ATI video card driver we used was unable to run the Lost Planet application even when previous drivers worked fine. Call of Juarez could run, but we took a pass on this one due to the game's ATI launch event ties and the ongoing dispute between Nvidia and Techland. We also saw an uncomfortable amount of performance variation between the different drivers we tested for each card. When manufacturers ask you to use specific Vista drivers for each game, it probably means that the platform isn't reliable for performance comparison. We'll revisit DirectX 10 performance after the high-profile games start shipping later this year.

The ATI Radeon HD 2900 XT looks to be a compelling value in the $400 DirectX 10 video card category. It's not a GTX killer, but it compares well to similarly priced GeForce 8800 GTS cards in 3D performance, and the advanced HDCP video support with HDMI output makes the Radeon HD 2900 XT--and the rest of the Radeon HD 2000 line, for that matter--extremely attractive for media applications. The Radeon HD 2600 XT lived up to its $149 price point by performing as well as its GeForce 8600 GTS and 8600 GT competition. The Radeon HD 2400 XT's CrossFire performance was somewhat disappointing, but anyone willing to spend $150-$175 on graphics should be getting a single-card solution anyway.

System Setup: Intel Core 2 X6800, Intel 975XBX2, EVGA nForce680i SLI, 2GB Corsair Dominator CM2X1024 Memory (1GB x 2), 160GB Seagate 7200.7 SATA Hard Disk Drive, Windows XP SP2, Windows Vista Ultimate. Graphics Cards: ATI Radeon HD 2900 XT 512MB, ATI Radeon X1950 XTX 512MB, Nvidia GeForce 8800 GTX 768MB, Nvidia GeForce 8800 GTS 640MB. Graphics Driver: ATI Catalyst 7.4, ATI Catalyst beta 8-37-4-070419a, Nvidia Forceware 158.22, Nvidia Forceware beta 158.42.

