'Second, it is not the fu**ing SDK. I don't care what they say, it is fu**ing PR. Let's see if Star Wars Battlefront is 1080p because of the latest SDK; then I'll say yes, I lost.'

So Konami are lying?

You know what, tormentos, I am done with you. Even when people post evidence to debunk your claim, you just call the evidence lies or PR speak.

I am getting fed up with you calling credible game developers, who have more knowledge of coding games in their little finger than you have in your whole body, liars or wrong, when you have probably never even coded a rubbish 8-bit game.

What I cannot stand is people who don't know how to do the job claiming they know better than people who DO know how to do the job.

You are NOT an expert when it comes to hardware, and you probably have zero coding qualifications. You unload boats, for crying out loud, so please stop making out that you know better than the people at Konami about the Xbone's hardware. You don't. They have access to Xbone dev kits; you don't.

I was pretty sure a new SDK wouldn't increase resolutions; I thought the best they would get is an improvement in development. Check my posting history, because you are not the only one that was wrong. I was wrong as well.

Unlike you, I just don't have a problem admitting I was wrong, and I am no game developer, so I am not going to sit there and say Konami have no clue what they are talking about or that they are lying, because they know how to code games and I don't.

The closest I ever got to coding was a book I had for my Commodore 64: you copied the lines of code in the book and, hey presto, Space Invaders.

It is PR, just like Rebellion did, and if you can't see that, that is your problem.

The fact is Rebellion said the same in February 2014, that they were getting 1080p because of a faster SDK. After that there has been a barrage of 900p games on Xbox One, so the whole new SDK means little. The fact is any game can be 1080p on Xbox One; it is just the quality and frames that determine whether it can be reached, and MGS5 and PES should never have been 720p.

The funny thing is I posted evidence of another developer stating the same more than a year ago, and the disparity continues. It will not end, because the PS4 has more juice: to get resolution parity the Xbox One must give up frames, and to gain frame parity it must give up resolution and sometimes even quality.

You believe whatever the hell you want. DX12 will be Sony's 4D, mark those words, and 3 years from now, when games still come in at lower resolution on Xbox One, I will be here quoting you.

So little you learned from last gen: 720p minimum with 4xAA, 4D, 120FPS. It is like you can't learn. The next game with sub HD graphics is getting you quoted: you, hdcarraher and Stormyjoke.

Stupid - the SDK update improved the game performance. End of story. You lose... again.

Yes, he did - your own f*cking link to his comments said so. Do you do selective reading?

It won't last forever - Christ, more and more games are going to 1080p on Xb1. What the f*ck is the matter with you, do you even read game articles???

Yeah, on a game that should have been 1080p since day 1; even a freaking 7750 ran it at 1080p, so I lost shit, and you holding on to Diablo 3 and Destiny was a total joke. You lost, and after those 2 a barrage of sub-1080p games have hit, so in other words resolution gate continues, contrary to what you claimed.

Dude, more and more games continue to be sub HD on Xbox, idiot. Where the fu** have you been?

Recently.

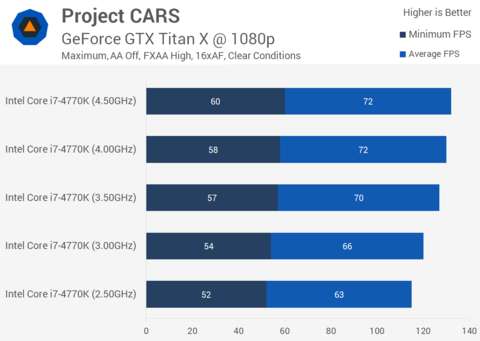

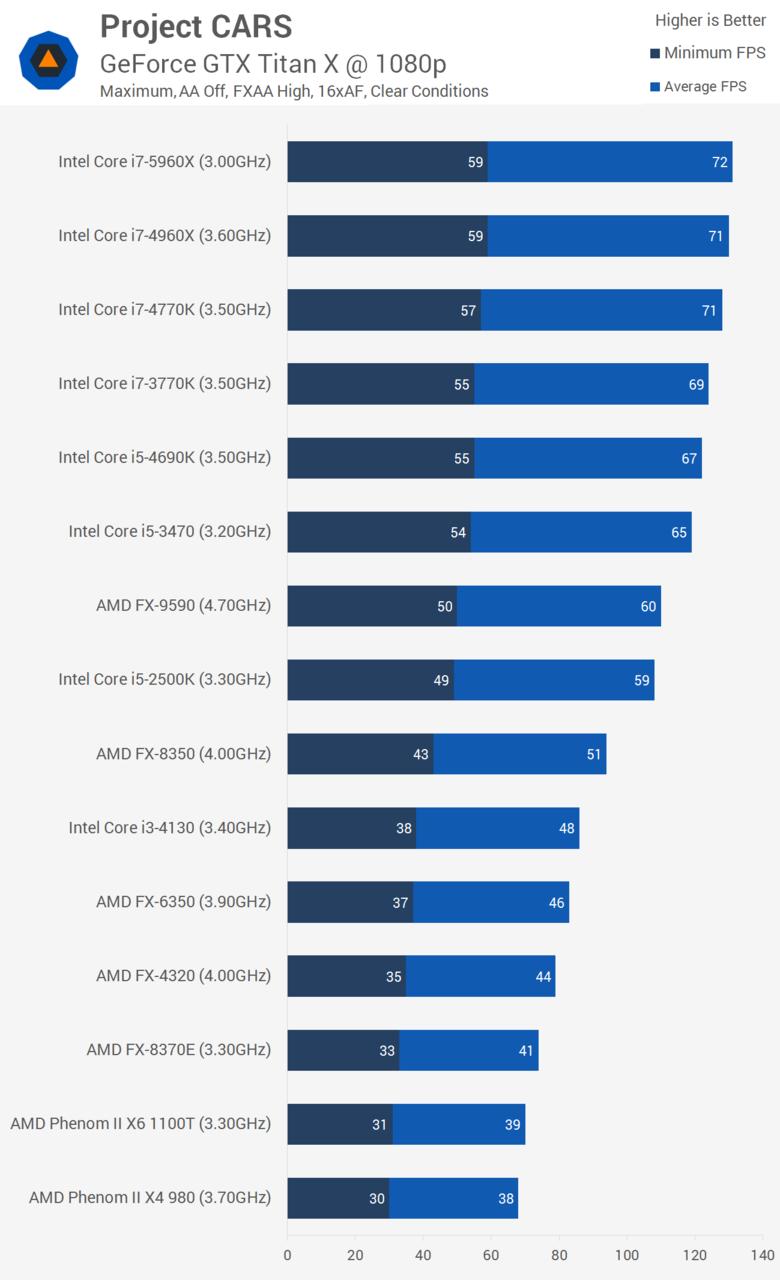

Project Cars.

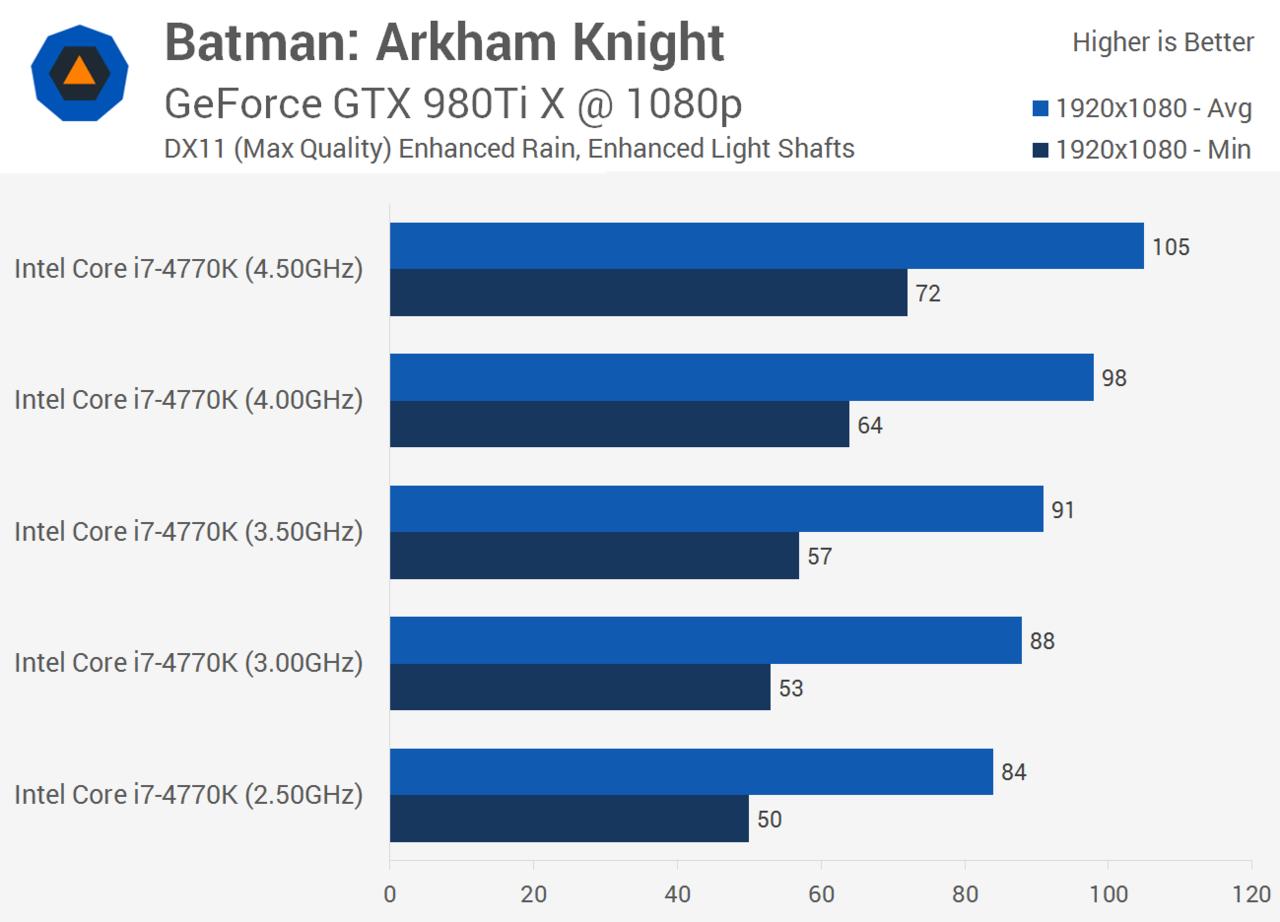

Batman

BFH

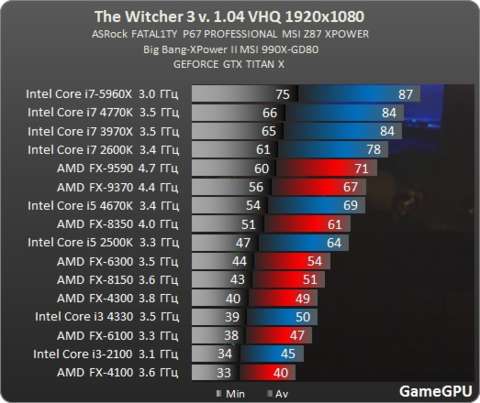

The witcher 3

F1 2015

Mortal Kombat X

Dying Light

These games all released this year so far, all inferior resolution-wise on Xbox One; one is even 720p, which should not even exist, since the SDK that made Diablo 3 and SE3 1080p should have done the same by your logic....lol

Damn tormentos is getting destroyed from every possible DirectXion.

There is not even one here which can do that.

This is all I will say to you, you blind biased lemming....

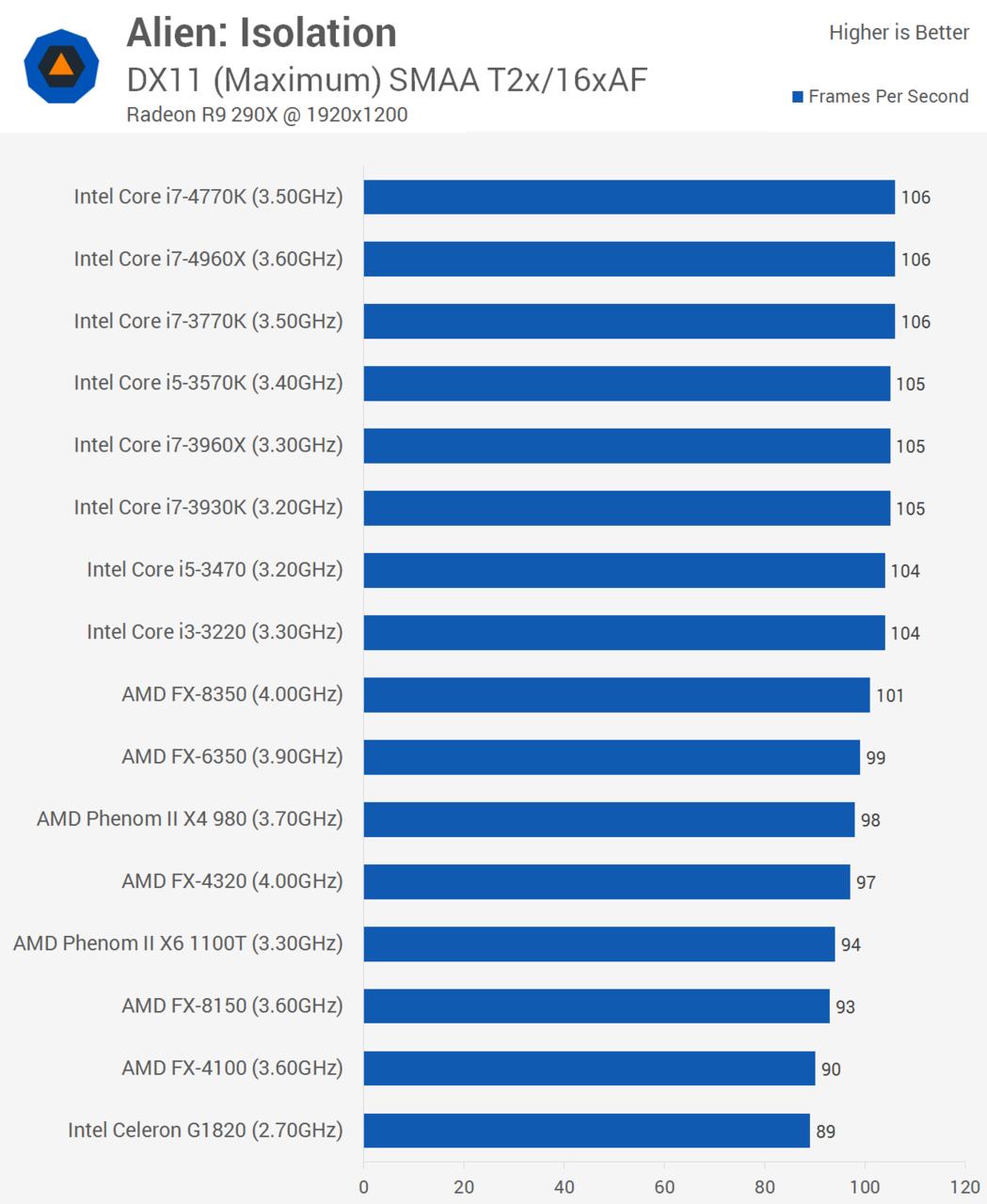

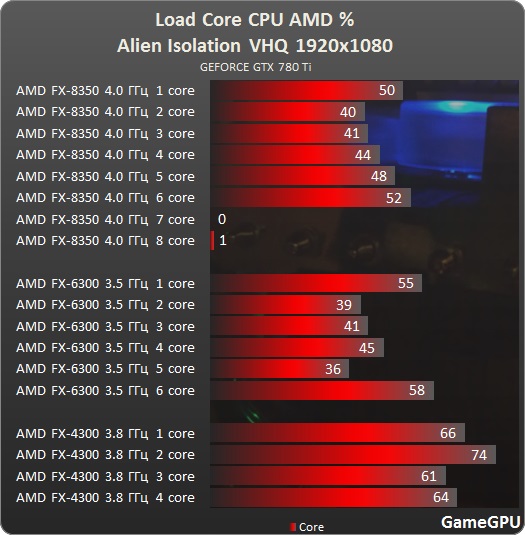

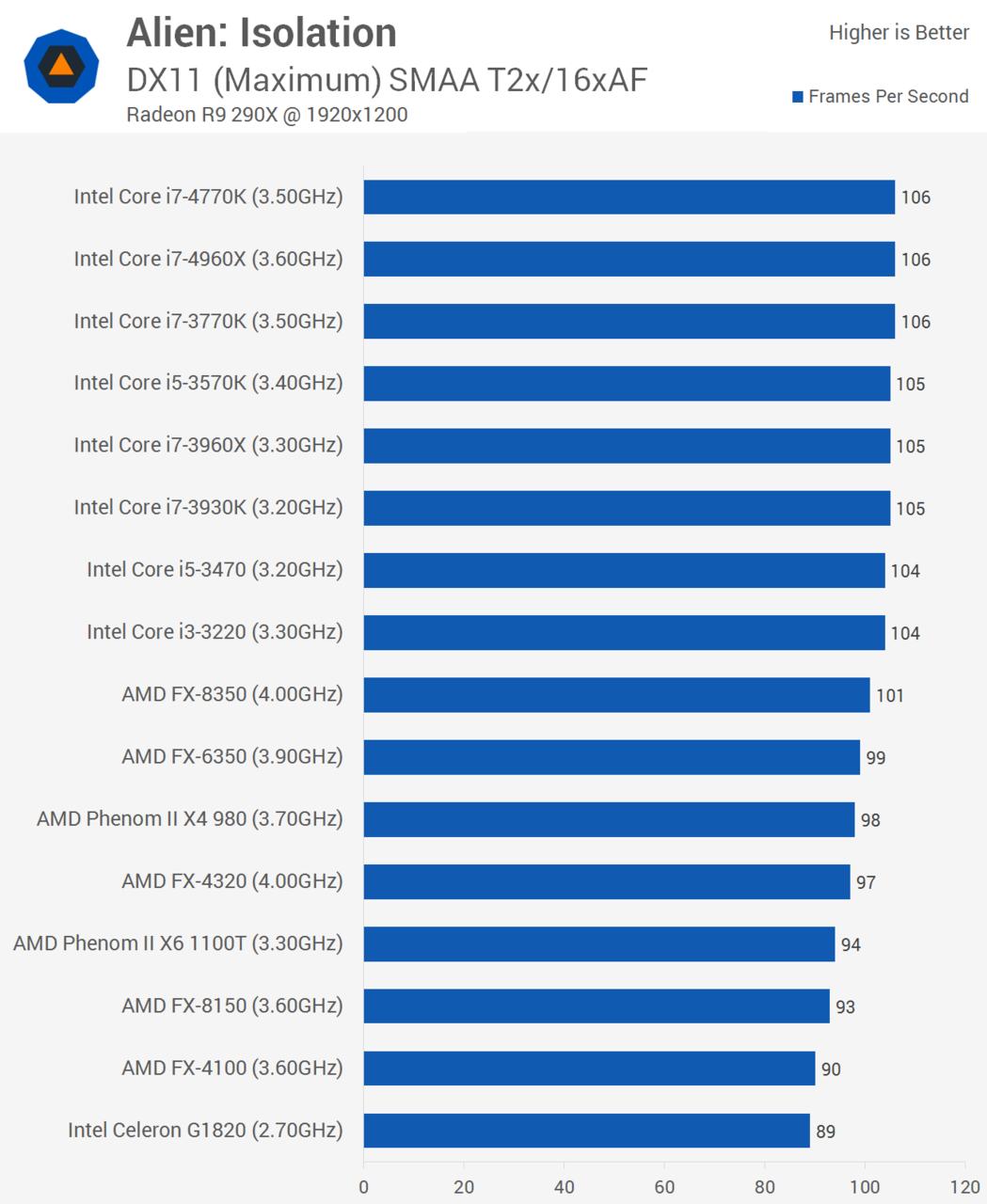

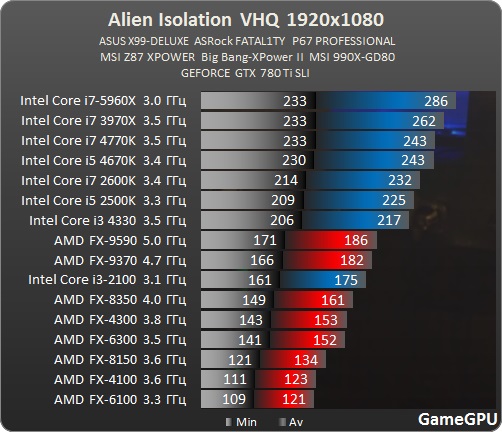

CPUs with four or more cores need not apply -- Alien: Isolation is seeking an affordable dual-core Core i3 processor but will also accept lowly Celeron, FX or Phenom II chips. Of course, that could change when running either SLI or Crossfire, but throwing that kind of GPU power at Alien: Isolation is a waste.

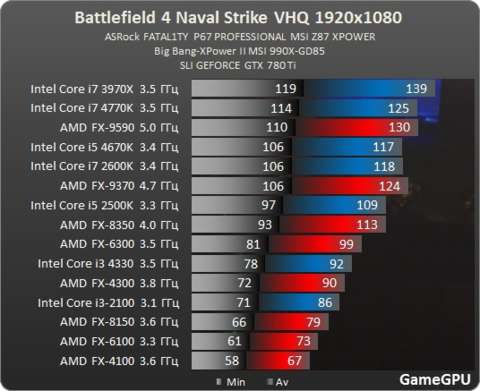

This is what Techspot says about the crappy game you call CPU intensive, you idiot. As you can fu**ing see, even a damn Celeron G1820 is enough to feed a damn R9 290X at 89FPS, just 17 frames shy of the top-of-the-line CPU in the test. Even my crappy FX6350 runs it 7 frames behind a CPU which costs 3 times what mine cost, what the fu**...

And tell me that Celeron has a better time feeding that R9 290X than the XBO's 6 cores at 1.75GHz have feeding a damn 7770-like GPU, so I can completely and utterly tag you as a total lost cause and an utter fanboy who argues with me just to go against the current.

http://www.techspot.com/review/903-alien-isolation-benchmarks/page5.html

AI is not, I repeat, is not CPU intensive...

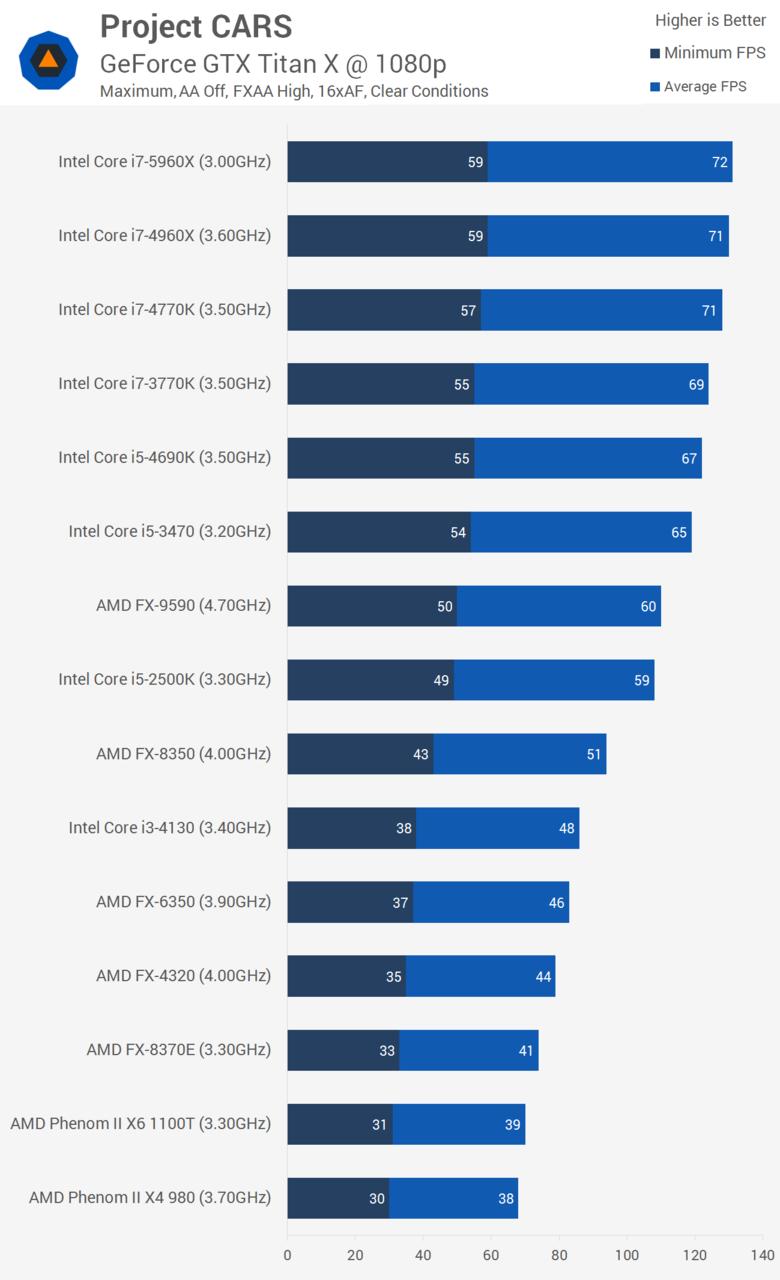

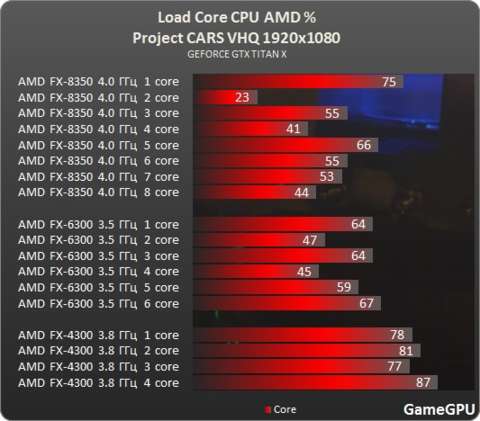

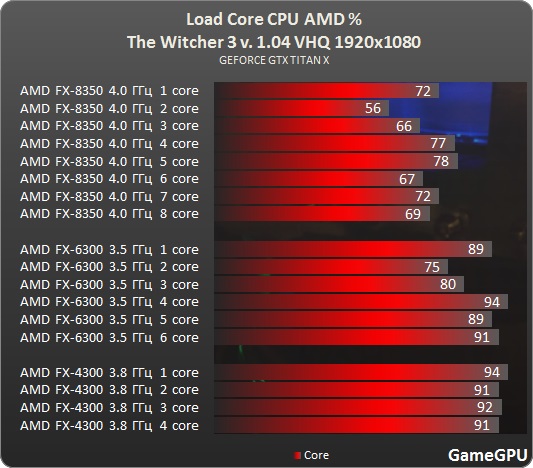

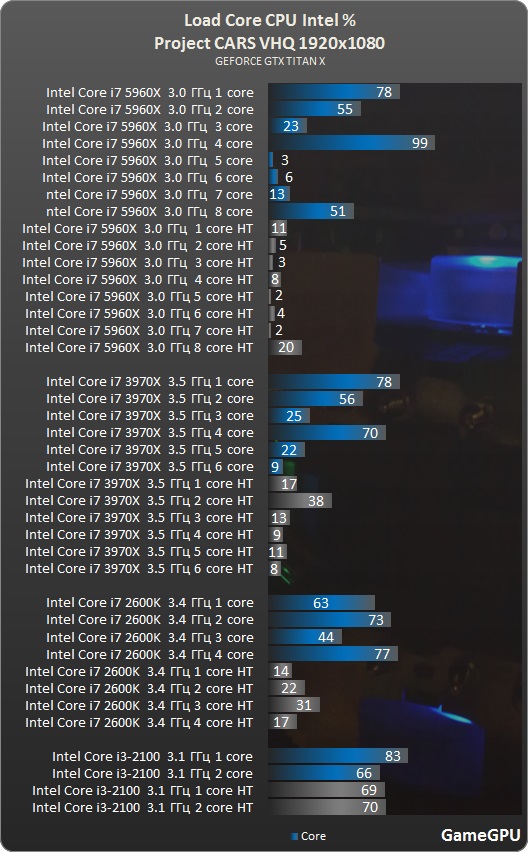

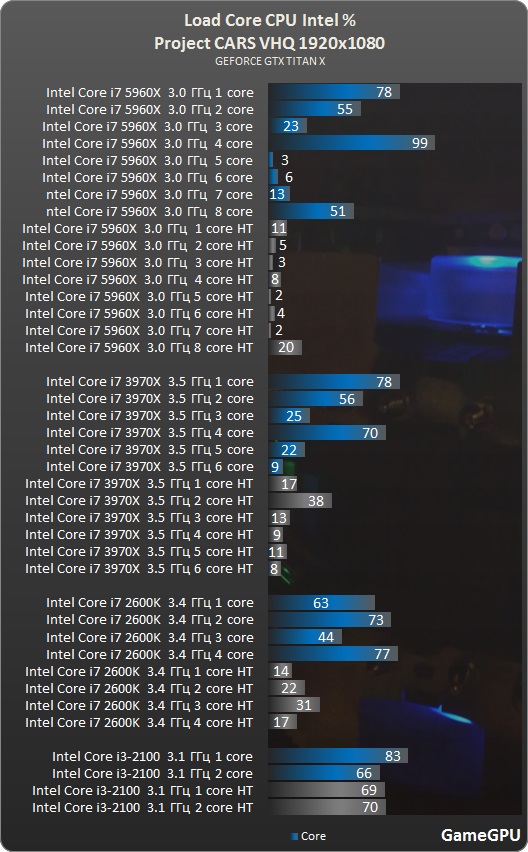

Just for fun, which has the bigger CPU impact, I wonder? Yet the PS4 with 6 cores beats the Xbox One with 7 on a game that is more CPU intensive. Mind you, this test is on a Titan X at 1080p.

Hhhmm.. Konami didn't say anything about TIME fixing anything. They specifically said the NEW SDK is what provided the fix. So either you're a blind fanboy who didn't read the article or you're damage controlling. Microsoft had already announced that DX12 & Win10 would launch to devs in July 2015.

http://wccftech.com/microsoft-windows-10-os-expected-launch-july-2015-reveals-amd-earnings-call/

Don't think it's that hard to put two and two together. Konami didn't say anything about having fixed their engine or optimized it better. I don't think DX12 is a simple call to an SDK, especially when the hardware in the XB1 has never fully utilized it. Tomb Raider isn't using DX12 and neither is Forza 6 (which runs at 1080p 60fps with weather effects). So if this new SDK doesn't have DX12 on it, as you trolls keep claiming, I can only imagine what will happen when it arrives. I'm sure we'll know the whole story before the year is over.

It is PR, blackace, nothing more.
