What I want to know is whether 1080p vs. 1080i makes a difference, because I sure as hell can tell the diff between 30 and 60 fps... it's obvious.

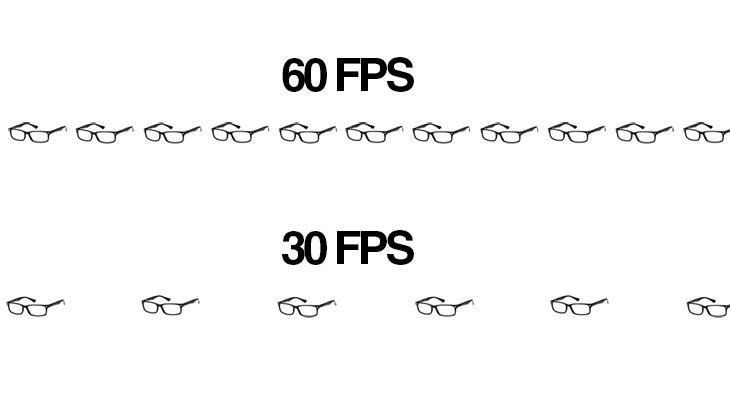

Some say the human eye can't see the difference: 30 fps vs. 60 fps

1080i is different from 1080p.

Within the designation "1080i", the i stands for interlaced scan. A frame of 1080i video consists of two sequential fields of 1920 horizontal and 540 vertical pixels. The first field consists of all odd-numbered TV lines and the second of all even-numbered lines. Consequently, the horizontal lines of pixels in each field are captured and displayed with a one-line vertical gap between them, so the lines of the next field can be interlaced between them, resulting in 1080 total lines. 1080i differs from 1080p, where the p stands for progressive scan and all lines in a frame are captured at the same time. In native or pure 1080i, the two fields of a frame correspond to different instants (points in time), so motion portrayal is good (50 or 60 motion phases per second). This is true for interlaced video in general and can easily be observed in still images taken from fast-motion scenes (without appropriate deinterlacing). However, when 1080p material is captured at 25 or 30 frames/second, it is converted to 1080i at 50 or 60 fields/second, respectively, for processing or broadcasting. In this situation, both fields in a frame do correspond to the same instant. The field-to-instant relation is somewhat more complex for 1080p at 24 frames/second converted to 1080i at 60 fields/second, as explained in the telecine article.

http://en.wikipedia.org/wiki/1080i
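To make the field structure in that passage concrete, here is a minimal Python sketch. It assumes a frame is nothing more than a list of 1080 scan lines; `split_fields` and `weave` are illustrative names, not functions from any video library. It splits a progressive frame into an odd field and an even field of 540 lines each, then interleaves ("weaves") them back.

```python
def split_fields(frame):
    """Split a progressive frame (a list of scan lines) into two fields.

    TV lines are numbered from 1, so the odd field holds lines 1, 3, 5, ...
    (Python indices 0, 2, 4, ...) and the even field holds lines 2, 4, 6, ...
    """
    return frame[0::2], frame[1::2]


def weave(odd_field, even_field):
    """Interleave two fields back into one full frame ("weave")."""
    if len(odd_field) != len(even_field):
        raise ValueError("both fields must have the same number of lines")
    frame = []
    for odd_line, even_line in zip(odd_field, even_field):
        frame.append(odd_line)   # lines 1, 3, 5, ...
        frame.append(even_line)  # lines 2, 4, 6, ...
    return frame


# A 1080-line frame, with each line labelled by its TV line number.
frame = [f"line {n}" for n in range(1, 1081)]
odd, even = split_fields(frame)
print(len(odd), len(even))        # 540 540
print(weave(odd, even) == frame)  # True
```

The weave is only lossless here because both fields were cut from the same frame, i.e. the same instant (the 25/30 frames/s case in the passage). With native 1080i the two fields come from different instants, and the same weave leaves "combing" on anything that moved between them, which is what deinterlacing tries to hide.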

Thanks, I read that... and still don't get it lol, but thanks anyway. I ask because one of my TVs is 1080i and another is 1080p.

@clyde46: Wow. For the 1,000th time: there is a difference, I just don't believe it's that huge. 480 to 1080 is huge.

720p=1080i

1080p >> 1080i

Better?

Those GIFs both run at lower than 30 fps on my PC, and I'll bet on most others. You can, and always will be able to, see the difference between 30 fps and 60 fps. Claiming that there is little or no difference is just staying ignorant at this point.

It's sad that in 2014 there are still people denying there is a difference all because of console fanboyism.

You have to view them on the actual linked site, where they are hosted as HTML5 video, not GIFs.

BINGO... the link is there for a reason. I even stated in the OP that the site has better-quality videos.

Why are your GIFs so shit? Seriously, there are like a hundred other comparisons that are better than what you have.

Here's a better question: why did you just look at the GIFs and not read anything in the opening post, or check the link?

These aren't really my GIFs... that isn't my site... I just brought the debate to the board.

@tymeservesfate: DD-214 and records are public record. But I'm not putting my full name on this board.

Agreed, you shouldn't.

And I see your point completely... I don't see how anyone can argue against you after that answer lol. You shot some of these guns for real... while we're talking video games lol. Especially if those are your shooting range numbers.

@JangoWuzHere:

I play PC all the time. I guess it's relative to what one considers huge. I don't consider the difference huge; the difference from 480p to 1080p is huge, IMO. But to each their own.

It's double the pixel density...your eyes must be pretty terrible

Oh well, I guess so. Still shot 35/40, and 4/4 at 300 m, no scope, in the US Army. But if people really see differently, then OK.

LMAO...that answer should shut anybody up in this thread XD.

Umm no, just because you can't see the difference doesn't negate all the studies and reports that say there is a difference.

I'm just saying... if those are his shooting range numbers, then his eyes are fine. I'm not openly picking one side or the other, but that does put a little more weight behind his words.

@tymeservesfate: It sucks, because I needed 36/40 to qualify Expert; I was Sharpshooter because I missed one. What I miss most now is going to ranges, especially being a New Yorker, where gun laws suck.

On topic, I do know there are differences in resolution and fps. I've been playing through the Arkham games on my new PC, and they are beautiful. But I still loved them when I originally played them on PS3/360. I can't bring my PC to my brother's this weekend, but I'll still play Arkham on my old 360 that he has. I guess if people are into all the nuances and stuff, that's cool. I just have fun playing.

I'm also skeptical of Hz and FPS being mixed together. Perhaps we should go back to the basics, when framerates merely indicated how much reserve horsepower a system has to run a game fluidly.

Anyway, I grew up gaming when PC games rarely reached 24 fps, so 30 fps is playable enough for me. Unfortunately, YouTube drops a lot of frames during uploading, so this video seems jerkier than the original.

Dropping frames isn't really a YouTube problem; that's a recording and encoding problem. I always try to record at 60 fps whenever possible, because if you do drop a frame or two, it's fixable.

It's probably a re-encoding problem when uploading a video to YT.

I see subtle differences between 30 fps and 60 fps, both in motion and in how the mouse feels, which goes hand in hand with the idea that the more reserve a PC has, the smoother the game will be.

But I don't really see the correlation with refresh rates, apart from tearing. I haven't had an LCD monitor that went past 75 Hz, but I had 21" and 22" CRT/aperture-grille monitors that could do 120 Hz a little over a decade ago. The framerates didn't feel any different whether I was playing a game at 1024x768 @ 85 Hz or 1024x768 @ 120 Hz, and I certainly never noticed any image quality differences like the ones some people here have posted about before.
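One link between refresh rate and frame rate besides tearing is frame pacing: how many refreshes each rendered frame stays on screen. Here is a minimal Python sketch under a deliberately simplified model (an assumption, not how any particular driver or display works): vsync is on, the refresh rate is fixed, every frame takes exactly the same time to render, and each refresh shows the newest finished frame. `refreshes_per_frame` is a hypothetical helper written for this illustration.

```python
from collections import Counter


def refreshes_per_frame(fps, refresh_hz, n_refreshes):
    """How many consecutive refreshes each rendered frame stays on screen.

    Simplified vsync model (an assumption): frame k is finished at exactly
    k / fps seconds, and refresh i (at i / refresh_hz seconds) shows the newest
    finished frame, i.e. frame floor(i * fps / refresh_hz). Integer division
    keeps that index exact. Frames that are never shown are not counted.
    """
    shown = [(i * fps) // refresh_hz for i in range(n_refreshes)]
    counts = Counter(shown)
    return [counts[k] for k in sorted(counts)]


print(refreshes_per_frame(30, 60, 8))   # [2, 2, 2, 2]  even cadence
print(refreshes_per_frame(45, 60, 8))   # [2, 1, 1, 2, 1, 1]  uneven cadence
print(refreshes_per_frame(60, 120, 8))  # [2, 2, 2, 2]
```

When the frame rate divides evenly into the refresh rate, every frame is held for the same number of refreshes; when it doesn't (45 fps on 60 Hz here), some frames stay up twice as long as others, which can read as judder even though the average frame rate is higher than 30.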

Why do people keep posting these? You realize there's a huge difference between watching a GIF/video and what you actually see and feel when you're immersed in a game...

First of all, both those gifs are running on what seems like 20 fps. >__>

And second, there's even a clear difference between 60 and 120 fps. If someone can't see the difference between 30 and 60, then there's something wrong with their eyes... or monitor.

This is a better comparison. I can see the difference, personally:

This is a pretty good illustration actually. When you look at 30fps first, then 60, you don't see much of a difference but going back, you realize how smooth 60 was. Probably explains why a lot of console gamers say they can't see the difference while I can't play GTA 5 without getting dizzy.

Who cares about seeing the difference? I can't see much of a difference between the two, but I can sure as hell feel it in anything with high-sensitivity input. M&K in an FPS at 30 fps is very noticeable.

This is a big part of it. Especially when the debate gets into 60+ territory. I can see the difference between 30 and 60 like night and day, but above 60, the improvement gets less visible to me. However, I can feel the difference between 60 and 80 or 100, and it's glorious.
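One concrete way to see why high-sensitivity input "feels" different is frame time, the interval between consecutive frames, which is simply 1000 / fps milliseconds. A minimal Python sketch (`frame_time_ms` is a hypothetical helper; frame time is only one part of total input latency, and this does not model the rest of the input-to-display pipeline):

```python
def frame_time_ms(fps):
    """Interval between consecutive frames, in milliseconds."""
    return 1000.0 / fps


for fps in (30, 60, 80, 100, 144):
    print(f"{fps:>3} fps -> {frame_time_ms(fps):5.2f} ms per frame")
```

Going from 30 to 60 fps cuts the gap between frames by about 16.7 ms, while going from 60 to 100 fps cuts it by only about 6.7 ms, which fits the experience above of a big jump to 60 and smaller, more "felt than seen" gains beyond it.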

When you are actually playing the game you can see/feel the difference between 60fps and 120fps.

As for 30 fps vs. 60 fps, the difference is just massive; in fact, it's not even enjoyable to play games at 30 fps.

I just got two 144 Hz monitors. Peasants be jelly of my framerates over 60.

Honestly I noticed it when comparing after a few takes, but it was hardly anything to freak out about imo.

Weirdly enough, I came into this thread thinking there would be a more noticeable difference (still a small one, though) than what I just saw, since I'd never seen in-game tests before, but I guess not. Kind of strange, since I've been gaming at 60 fps for a while now, but I guess I never really compared them and only saw it as better than 30 just because. I think it's blown out of proportion, tbh, which is also what I thought before coming in here.

Now below 30 fps is very noticeable to me, especially around the 20's area.

There's a link to videos in the OP... I even said they're better quality than the GIFs -_-

@StormyJoe: When playing on my PS3, or even my computer, on a 60-inch plasma TV, I can notice a difference between 720p and 1080p. I must have amazing eyes, since so many people can't seem to see with clarity. 720p in any game on my 60-inch is a blurry, jagged mess unless I crank the anti-aliasing up or use supersampling. 1080p is far better, clearing up the image and making it far crisper. I can even take it a step higher on my 1440p monitor and get an even clearer and crisper image. Even on it, sitting back a few feet, I can notice a change between the three resolutions. There is a difference, and a noticeable one, for both resolution and frame rate. There is no real debate on this, just people on forums who don't know much about anything, or who have horrible eyes, talking out their asses about resolutions and frame rates.

Interestingly enough, the only people who talk about 1080p being such an incredible difference, are cows.

Look, I am a technophile; I have thousands and thousands of dollars tied up in my home theater, and I can admit there isn't a big difference. I am not saying there is "no difference", but it's negligible. You people talk as though it's the difference between VHS and Blu-ray. So yes, your eyes defy medical science; perhaps you are an X-Man and don't know it.

Agreed. I am not a technopredator...............guy............thing. But I guess my eyes are bad. I don't see this MASSIVE difference from 1080p to 720p, and some claim it's huge from 900p to 1080p. It's just favoritism that makes people exaggerate.

It's a colloquialism for someone who only buys high end electronics.

Here's the definition

You don't have to be a technowhatever to see that there are a lot more pixels being rendered on screen. Go play a PC game and switch from 720p to 1080p. The difference is large.
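The pixel arithmetic behind that is easy to check. A minimal Python sketch, assuming the usual 16:9 frame sizes for the resolutions mentioned in this thread (`RESOLUTIONS` and `pixel_count` are illustrative names):

```python
# Frame sizes (width, height) for the resolutions discussed in this thread.
RESOLUTIONS = {
    "720p": (1280, 720),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
}


def pixel_count(name):
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


base = pixel_count("720p")
for name in RESOLUTIONS:
    pixels = pixel_count(name)
    print(f"{name:>5}: {pixels:>9,} pixels = {pixels / base:.2f}x 720p")
```

1080p renders 2,073,600 pixels per frame: 2.25 times as many as 720p (1.5 times in each direction) and 1.44 times as many as 900p. How much of that extra detail a viewer actually resolves depends on screen size and viewing distance, which is the other half of the argument here.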

Yeah, because your eyes are 12 inches from the screen...

so everyone should look at buying a PS4 instead of Xbone? Bloody brilliant mate!

Most current gen looking games seem to be struggling to get above 30fps.

@StormyJoe: That's not true at all, even with a desk. My PC's hooked up to my 50" plasma, however. I sit about 8 feet away, and the difference between 720 and 1080 is night and day.

Whatever, man. You are full of crap, dude; there is no way it is "night and day". At that distance, the human eye can barely tell the difference, even if you have better than 20/20 vision.
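The disagreement can at least be framed with geometry. A common rule of thumb, treated here as an assumption since real acuity varies by person and by content, is that 20/20 vision resolves detail down to about 1 arcminute. This minimal Python sketch (`pixel_arcminutes` is a hypothetical helper) computes how large one pixel row appears on the 50" 16:9 screen viewed from 8 feet mentioned above.

```python
import math


def pixel_arcminutes(diagonal_in, lines, distance_in, aspect=(16, 9)):
    """Visual angle of one pixel row, in arcminutes.

    Assumes a flat screen with the given aspect ratio, viewed head-on from
    distance_in inches; diagonal_in is the screen diagonal in inches.
    """
    w, h = aspect
    screen_height = diagonal_in * h / math.hypot(w, h)
    pixel_height = screen_height / lines
    return math.degrees(math.atan(pixel_height / distance_in)) * 60


# 50-inch 16:9 screen viewed from 8 feet (96 inches).
for lines in (720, 1080):
    print(lines, round(pixel_arcminutes(50, lines, 96), 2))
# 720 1.22
# 1080 0.81
```

Under that rule of thumb, a 720p row (about 1.2 arcminutes) sits just above the 1-arcminute threshold and a 1080p row (about 0.8) just below it, so this setup lands right around the point where the extra 1080p detail stops being fully resolvable. The model ignores anti-aliasing, scaling, and contrast, all of which also affect perceived sharpness.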

The only difference between 1080i and 1080p is that 1080i sends half an image per frame and 1080p sends the full image. Since most displays accept video input at a max of 60 Hz, what this effectively means is that 1080i will cap out at 30 fps while 1080p can achieve up to 60 fps. If you are viewing content at 30 fps or lower, there is effectively no difference between the two.

So if I'm playing a game that runs at 60 fps on a 1080i TV, you're telling me that it will go from 60 fps to 30 fps?

Pretty much, yes. The 1080i TV receives half the image, then receives the next half, then combines both halves to show you a single full image. It's receiving half frames 60 times a second but combining them to show you full frames 30 times a second, whereas the 1080p TV receives full frames 60 times a second and displays 60 fps. Basically, TVs with 1080i input cannot display more than 30 fps.

That said, a 30 fps game looks the same on 1080i as on 1080p. You aren't lowering the framerate or performance in any way; I want to make that clear. 1080p is only better, never worse.

I'm under the impression 1080i is usually found only on 720p TVs. I still have an old 720p TV and it does 1080i. It shouldn't be a concern to those who own full HD TV sets.

1080 is the number of lines of resolution. It's impossible for a 720p display to be 1080i.

@StormyJoe: No... no crap in this guy. 720p does not look nearly as good as 1080 from the 8-foot distance I sit from the TV. It's very obvious. Why can't people accept that?

IDK, because I read about such things? Humans cannot discern 1080p from 720p in any meaningful way at that distance. It's medical science. Can you tell the difference? Maybe. Is it as big a difference as you are proposing? No, and it really can't be, because you are not an eagle, hawk, or other raptor.

Umm..., most 720p TVs can display 1080i.

No, they can't; every 1080i input is 1080p output. HDTVs don't output interlaced content. A 720p display, regardless of input, can only display 720 lines of resolution; the display doesn't have the pixels to display 1080.
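Whatever signal comes in, a panel with only 720 rows of pixels has to resample a 1080-line picture down to 720 rows before showing it. A minimal Python sketch of that step for a single column of values, assuming plain linear interpolation (real TV scalers use their own, usually more elaborate, filters; `resample_lines` is a hypothetical helper):

```python
def resample_lines(lines, out_count):
    """Linearly resample per-line values to out_count lines.

    Output line j maps to source position j * (n_in - 1) / (out_count - 1);
    the value is interpolated between the two nearest source lines. The
    position is split with integer arithmetic so the end lines map exactly.
    """
    n_in = len(lines)
    if n_in < 2 or out_count < 2:
        raise ValueError("need at least two input and two output lines")
    out = []
    for j in range(out_count):
        num = j * (n_in - 1)
        i, rem = divmod(num, out_count - 1)  # integer and fractional parts
        frac = rem / (out_count - 1)
        nxt = min(i + 1, n_in - 1)
        out.append(lines[i] * (1 - frac) + lines[nxt] * frac)
    return out


# A 1080-line vertical gradient squeezed onto a 720-line panel.
source = [float(n) for n in range(1080)]
scaled = resample_lines(source, 720)
print(len(scaled), scaled[0], scaled[-1])  # 720 0.0 1079.0
```

Each output row blends neighbouring source rows, so fine vertical detail from the 1080-line signal is averaged away rather than shown.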

Any 720p TV can upscale to 1080i. My 10 year old LG LCD could do it.
