@04dcarraher said:

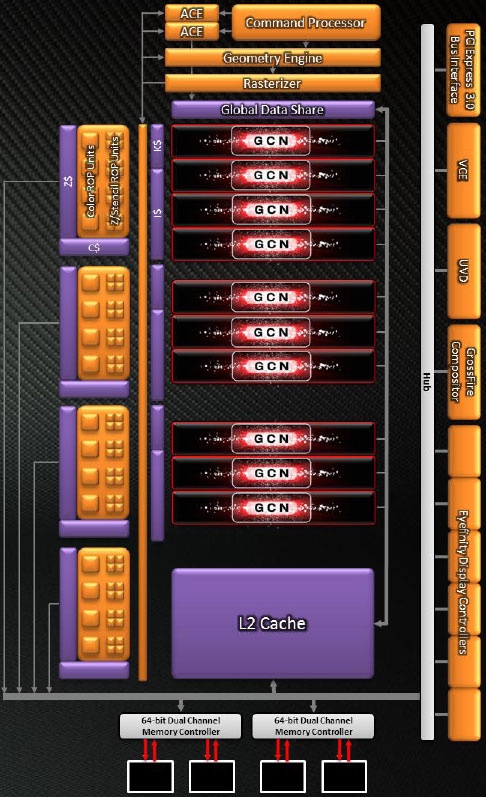

Sony’s PlayStation 4 design docs reveal that the "PlayStation 4 OS uses two out of the 8 cores, and a whopping 3.5 GB of RAM." "Also, 2 of the Jaguar CPU's 8 cores are always going to be off limits as well, meaning developers will only be able to use 75% of the CPU's resources." That 512 MB of flexible memory that brings the PS4's pool to 5 GB is a paging file. The OS and features will not shrink to the degree the PS3 OS has, because of the complexity and all the features embedded into it.
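For anyone who wants to check the arithmetic behind those quotes, here is a minimal sketch in Python. Every figure is taken from the post above (8 cores, 2 reserved, 3.5 GB for the OS, 512 MB flexible, 5 GB pool); the function and variable names are made up for illustration, and the "implied total" only holds under the poster's reading that the flexible memory is a paging file.

```python
# Figures as quoted in the post; none of them are verified here.
TOTAL_CORES = 8
OS_CORES = 2
OS_RAM_GB = 3.5
FLEXIBLE_RAM_GB = 0.5   # the "512 MB flexible memory"
GAME_POOL_GB = 5.0      # pool size claimed once flexible memory is counted


def cpu_share_for_games(total_cores: int, os_cores: int) -> float:
    """Fraction of CPU cores left for developers."""
    return (total_cores - os_cores) / total_cores


def direct_game_ram(pool_gb: float, flexible_gb: float) -> float:
    """Game memory that is not part of the flexible portion."""
    return pool_gb - flexible_gb


cpu_share = cpu_share_for_games(TOTAL_CORES, OS_CORES)
direct_gb = direct_game_ram(GAME_POOL_GB, FLEXIBLE_RAM_GB)
# Assumption: direct game RAM plus the OS reservation is all the physical RAM.
implied_total_gb = direct_gb + OS_RAM_GB

print(f"CPU available to games: {cpu_share:.0%}")
print(f"Direct game RAM: {direct_gb} GB")
print(f"Implied physical RAM: {implied_total_gb} GB")
```

So the 75% figure is just 6 of 8 cores, and the 5 GB pool is 4.5 GB of direct memory plus the 512 MB flexible portion.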

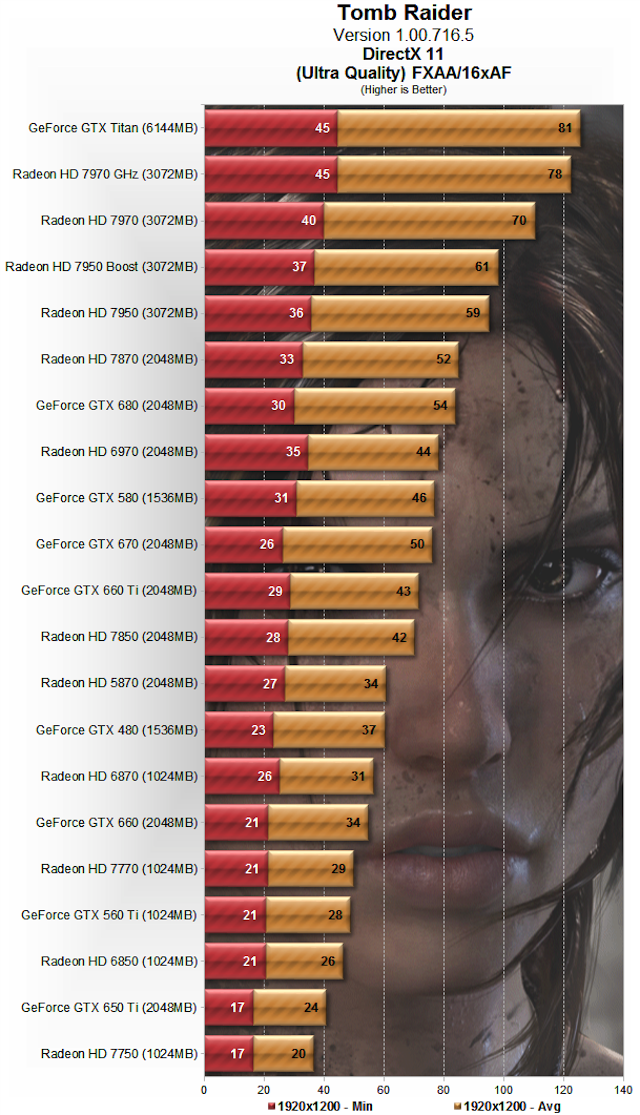

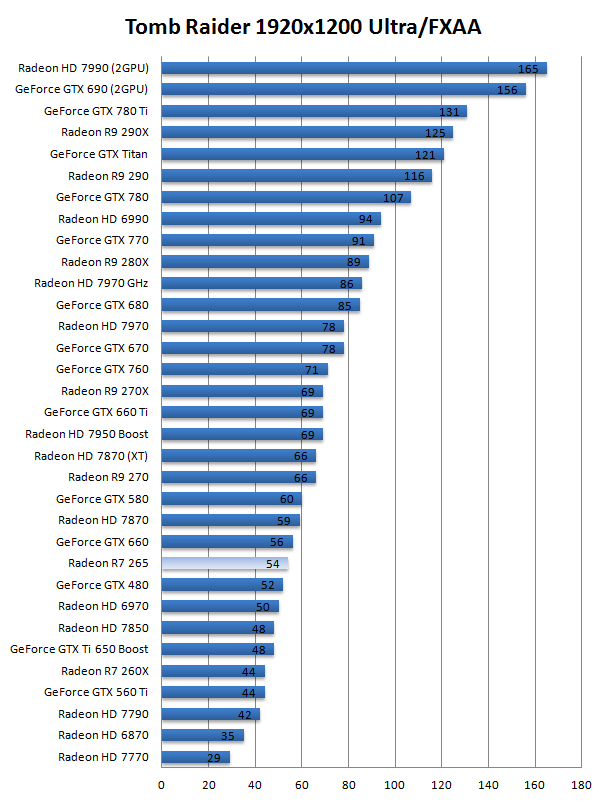

Please prove that the one dev spent as much time and effort coding TR as the other dev did for PS4. Fact is that even a piss-poor 7770 on the PC version can run TR beyond 34 fps, averaging 40 fps at 1920x1200 with FXAA.

You missed the point: going from 50-60 rapidly into the 40s, 30s or whatever is worse than going from 30 to 25, which means they should have set a frame rate limit on PS4. And again, the X1 runs TR at native 1080p: "Tomb Raider: Definitive Edition both run at native 1080p". Also, saying the X1 is using half resolution and effects is BS; the differences are slight at best. As for the "inconsistency in the PS4 experience," the massive frame rate drops are an issue with PS4.
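One way to put numbers on that point is to convert frame rates to frame times, since the change in milliseconds per frame is what you actually feel. This is only a sketch: the 60, 30 and 25 fps values are taken from the ranges mentioned above, and the helper names are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds."""
    return 1000.0 / fps


def frame_time_swing_ms(fps_high: float, fps_low: float) -> float:
    """Extra milliseconds per frame when dropping from fps_high to fps_low."""
    return frame_time_ms(fps_low) - frame_time_ms(fps_high)


# Uncapped case: a drop from 60 fps down to 30 fps.
big_drop = frame_time_swing_ms(60, 30)
# Capped case: a drop from 30 fps down to 25 fps.
small_drop = frame_time_swing_ms(30, 25)

print(f"60 -> 30 fps adds {big_drop:.1f} ms per frame")
print(f"30 -> 25 fps adds {small_drop:.1f} ms per frame")
```

The uncapped drop changes frame time by roughly two and a half times as much as the capped one, which is the argument for a frame rate limit.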

I never claimed 5-10 fps at the same resolution... I do believe you have me mixed up with Crypt_MX, since Crypt_MX is the one who stated the 5-10 fps: "The REAL power difference can be best judged by comparing the two lowest framerates which are 24 and 33, giving the PS4 a 9fps advantage (Which is what I've been guessing since day one, PS4=5-10 more fps)."

Just like you claim I said the PS4 won't use more than 4 GB, when in fact I was saying that the GPU will not use 4 GB but only 2-3 GB. And then every time you ignore that fact.

Once again link...

Because the documents didn't say that, it was the Guerrilla postmortem, and only DF claimed it; the rest of the sites just reused the news.

Dude, the only reason Sony reserved so much memory was to play it safe, period. The PS4 OS runs on the same source the PS3 one runs on, and it needs 50 MB on PS3; it's not that demanding. Sony played it safe. You don't need 3 GB to run Netflix and a bunch of apps; cell phones with 1 GB do it all the time, using way weaker CPUs too.

That depends on the quality setting. At the minimum setting tested, which is High, it can; at the middle setting, which is Ultra, the 7770 runs at 29 FPS and drops to 21 FPS. Sound familiar?

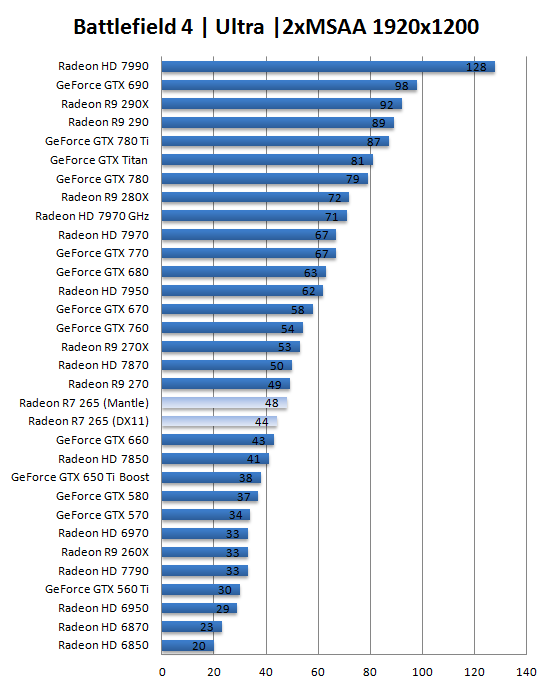

The Xbox One version does 30 and drops to 24 FPS, but unlike the test done on the 7770, which used the exact same settings on all video cards when it got 29 FPS, on Xbox One it has lower-resolution textures, lower-quality effects, some effects done at half resolution, and cut-scenes that change to 900p because the Xbox One can't keep up even in cut-scenes, since they are done in-engine and are not videos.

That is performance under the 7770's. Why do you think that is? Oh yeah, like I told you like 1000 times, the Xbox One had a GPU reservation of 10%, which leaves the Xbox One with 1.18 TF, even lower than the 7770. Add to that that the test system on which the 7770 was tested wasn't using an 8-core Jaguar; it was using a damn

- Intel Core i7-3960X Extreme Edition (3.30GHz)

So yeah, when you join all those factors, the Xbox One will perform under the 7770. Worse, the Xbox One wasn't even modified to use compute to aid the CPU like the PS4 was.
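The 1.18 TF figure can be reproduced with a quick calculation. A rough sketch: the 10% reservation is the number claimed above, while the 1.31 TFLOPS peak for the Xbox One and the 1.28 TFLOPS peak for the 7770 (GHz Edition) are commonly cited spec figures that are assumed here, not taken from this thread. Peak TFLOPS is also only a loose proxy for game performance.

```python
# Assumed peak figures (not from this thread): commonly cited specs.
XBOX_ONE_PEAK_TFLOPS = 1.31
HD7770_PEAK_TFLOPS = 1.28
# Reservation claimed in the post above.
GPU_RESERVATION = 0.10


def usable_tflops(peak_tflops: float, reservation: float) -> float:
    """Peak throughput left for games after a fractional system reservation."""
    return peak_tflops * (1.0 - reservation)


xbox_usable = usable_tflops(XBOX_ONE_PEAK_TFLOPS, GPU_RESERVATION)

print(f"Xbox One usable: {xbox_usable:.2f} TFLOPS")
print(f"Below the 7770's peak: {xbox_usable < HD7770_PEAK_TFLOPS}")
```

Under those assumptions the reserved Xbox One GPU lands at about 1.18 TFLOPS, slightly below the 7770's paper figure.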

To begin with, let's address the differences between the two versions of the Definitive Edition on offer. PlayStation 4 users get a comfortably delivered 1080p presentation backed up with a post-process FXAA solution that has minimal impact on texture quality, sporting decent coverage across the scene, bar some shimmer around more finely detailed objects. Meanwhile the situation is more interesting on the Xbox One: the anti-aliasing solution remains unchanged, but we see the inclusion of what looks like a variable resolution framebuffer in some scenes, while some cut-scenes are rendered at a locked 900p, explaining the additional blur in some of our Xbox One screenshots. Curiously, the drop in resolution doesn't seem to occur during gameplay - it's only reserved for select cinematics - suggesting that keeping performance consistent during these sequences was a priority for Xbox One developer United Front Games.

For the most part the main graphical bells and whistles are lavished equally across both consoles, although intriguingly there are a few areas that do see Xbox One cutbacks. As demonstrated in our head-to-head video below (and in our vast Tomb Raider comparison gallery), alpha-based effects in certain areas give the appearance of rendering at half resolution - though other examples do look much cleaner. We also see a lower-quality depth of field in cut-scenes, and reduced levels of anisotropic filtering on artwork during gameplay. Curiously, there are also a few lower-resolution textures in places on Xbox One, but this seems to be down to a bug (perhaps on level of detail transitions) as opposed to a conscious downgrade.

http://www.eurogamer.net/articles/digitalfoundry-2014-tomb-raider-definitive-edition-next-gen-face-off

Owned...lol

Hahahahaaaaaaaaaaaaaaaaaaaaaaaaaaaa So because the PS4 dropped once to 33 FPS, somehow we must take that and compare it to the Xbox One's minimum drop to 24 to claim the difference is 9 FPS?

When neither game spent more than 2 seconds at those frame rates, and on average they run much higher? Hahaha, yeah, let's ignore that what has always been used in the industry to determine frame rate difference is the average and not the minimum.

So since the 7770 drops to 21 and the 7850 to 28, the real difference is 7 frames between both cards, even though most of the time both cards are running with a 13 FPS difference on average?

My god, you really are butthurt about losing your argument. You claimed even worse: 9 FPS. The average gap is 20, and in many instances it grows to 30 FPS, but I guess you didn't see those moments, right? Which, funnily enough, are way more abundant than the 33 FPS drop.

And yeah, you claimed it at the same resolution, and you even held on to a supposed PS4 reservation. In many instances there is a difference not only in frames but also in quality, and the difference was as small as 9 FPS for just one moment; most of the time it is 20 and sometimes even 30, which is a devastating 100% difference in frames.
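To make the minimum-versus-average argument concrete, here is a small sketch. The two traces are HYPOTHETICAL, invented purely for illustration; only the minimums (33 and 24) and the roughly 20 FPS average gap are figures from this thread, and the function names are made up.

```python
from statistics import mean


def gap_by_minimum(a: list[int], b: list[int]) -> float:
    """Difference between the lowest frame rate seen in each trace."""
    return min(a) - min(b)


def gap_by_average(a: list[int], b: list[int]) -> float:
    """Difference between the mean frame rates of the two traces."""
    return mean(a) - mean(b)


def percent_lead(fast: float, slow: float) -> float:
    """How much higher `fast` is than `slow`, as a percentage of `slow`."""
    return (fast - slow) / slow * 100.0


# HYPOTHETICAL per-second samples, not measured data.
ps4_trace = [55, 50, 48, 33, 52, 56]
x1_trace = [30, 30, 30, 24, 30, 30]

min_gap = gap_by_minimum(ps4_trace, x1_trace)
avg_gap = gap_by_average(ps4_trace, x1_trace)
lead_at_60_vs_30 = percent_lead(60, 30)

print(f"Gap by minimum: {min_gap} FPS")
print(f"Gap by average: {avg_gap} FPS")
print(f"60 vs 30 FPS lead: {lead_at_60_vs_30:.0f}%")
```

With traces shaped like these, a single dip produces the 9 FPS minimum gap while the average gap sits at 20, and a 30 FPS lead over a 30 FPS baseline is where the 100% figure comes from.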
