Poll: NVIDIA RTX 3000 series is a great price, but is NVIDIA skimping on video memory? (35 votes)

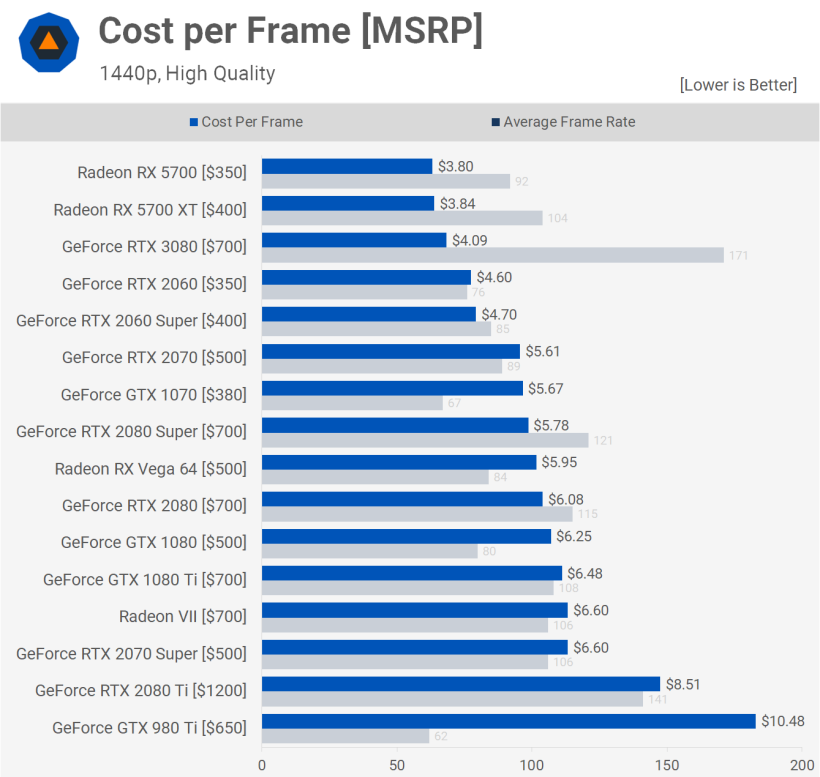

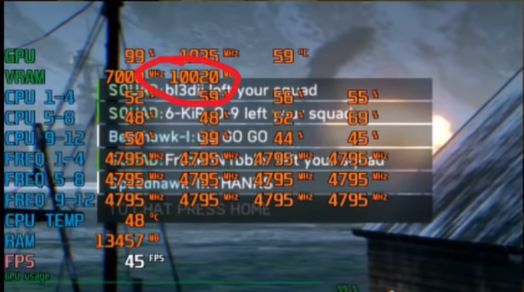

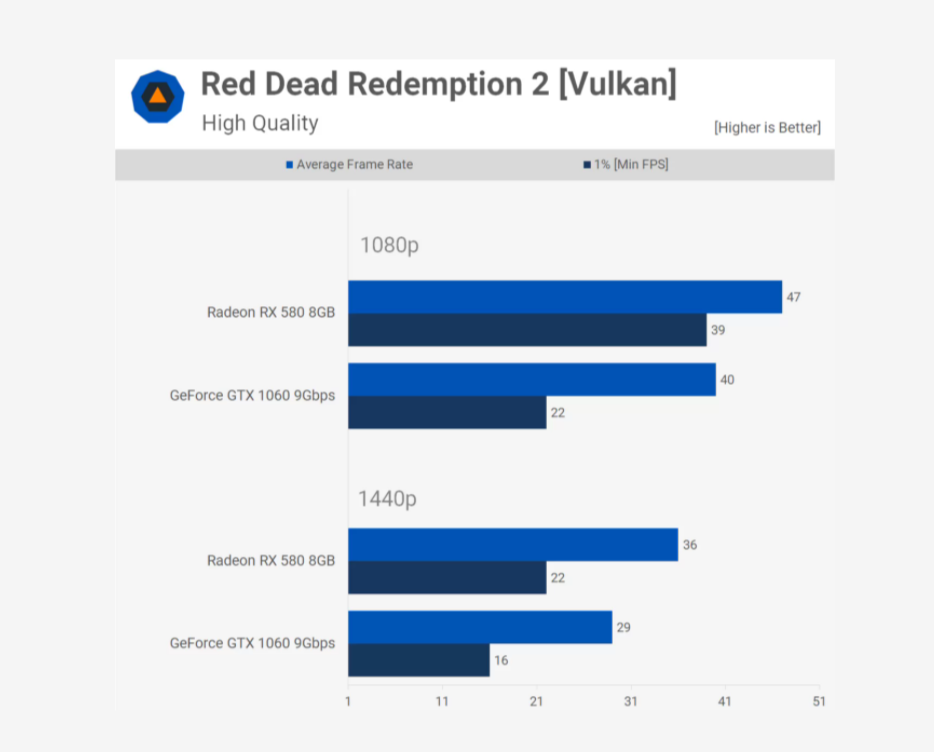

I was absolutely surprised by the price/performance of the new RTX 3000 series, only to be disappointed to find that the RTX 3070 offers just 8 GB and the RTX 3080 just 10 GB. 8 GB seems so 2016. I am looking to jump to 4K, and that's definitely not enough to future-proof it. I know this from experience: as much as I like my GTX 1060 6GB, I can't max out DOOM Eternal at 1080p, because Ultra Nightmare needs roughly 6.6 GB of video memory at that resolution (Tom's Hardware lists 6766 MiB at 1080p). The GTX 1060 is fast enough to run it at decent frame rates, but it lacks the memory for that setting. The RX 580 8GB doesn't have this problem, which makes me think I should have gone with AMD and its 8 GB of video memory. I went with the 1060 6GB because it was widely available at the time and NVIDIA had the best price/performance/power. I want to jump to 4K, but games are already pushing 11 GB maxed out. Heck, some games are pushing 13 GB maxed out at 4K.
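For anyone who wants to check the numbers, here is a rough sketch of the arithmetic. It assumes the "GB" printed on a card means GiB (1024 MiB), and it treats the 11 GB figure for 4K as 11 GiB. The function name `headroom_mib` and the card table are made up for illustration. The 6766 MiB figure is the Tom's Hardware number quoted above.

```python
MIB_PER_GIB = 1024


def headroom_mib(card_gib, required_mib):
    """Return spare video memory in MiB; a negative value is a shortfall."""
    return card_gib * MIB_PER_GIB - required_mib


# DOOM Eternal, Ultra Nightmare at 1080p, as quoted from Tom's Hardware.
DOOM_ULTRA_NIGHTMARE_1080P_MIB = 6766

# Card capacities in GiB (assumption: marketing "GB" means GiB).
cards = {"GTX 1060 6GB": 6, "RX 580 8GB": 8, "RTX 3070": 8, "RTX 3080": 10}

for name, gib in cards.items():
    spare = headroom_mib(gib, DOOM_ULTRA_NIGHTMARE_1080P_MIB)
    status = "fits" if spare >= 0 else "short"
    print(f"{name}: {status} by {abs(spare)} MiB")
```

By the same math, a 10 GB card facing an 11 GB game at 4K would be a full 1024 MiB short.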

I really want to get an RTX 3000 series card (above the 3070), but its memory is making me want to wait and see what comes from AMD. I don't want to get stuck in the same situation, running out of video memory at 4K a couple of years down the road.

So, what do you all say: is NVIDIA skimping on video memory?
