Volta-Based Tesla V100 GPU Shatters Barrier of 120 Teraflops

NVIDIA today launched Volta™ -- the world's most powerful GPU computing architecture, created to drive the next wave of advancement in artificial intelligence and high performance computing.

Volta, NVIDIA's seventh-generation GPU architecture, is built with 21 billion transistors and delivers the equivalent performance of 100 CPUs for deep learning.

It provides a 5x improvement over Pascal™, the current-generation NVIDIA GPU architecture, in peak teraflops, and 15x over the Maxwell™ architecture, launched two years ago. This performance surpasses by 4x the improvements that Moore's law would have predicted.

Breakthrough Technologies

The Tesla V100 GPU leapfrogs previous generations of NVIDIA GPUs with groundbreaking technologies that enable it to shatter the 100 teraflops barrier of deep learning performance. They include:

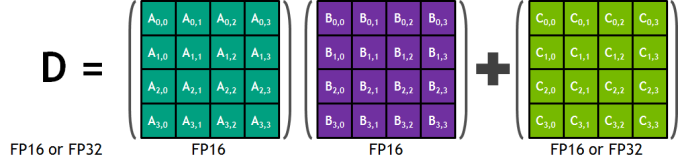

- Tensor Cores designed to speed AI workloads. Equipped with 640 Tensor Cores, V100 delivers 120 teraflops of deep learning performance, equivalent to the performance of 100 CPUs.

- New GPU architecture with over 21 billion transistors. It pairs CUDA cores and Tensor Cores within a unified architecture, providing the performance of an AI supercomputer in a single GPU.

- NVLink™ provides the next generation of high-speed interconnect linking GPUs, and GPUs to CPUs, with up to 2x the throughput of the prior generation NVLink.

- 900 GB/sec HBM2 DRAM, developed in collaboration with Samsung, achieves 50 percent more memory bandwidth than previous generation GPUs, essential to support the extraordinary computing throughput of Volta.

- Volta-optimized software, including CUDA, cuDNN and TensorRT™ software, which leading frameworks and applications can easily tap into to accelerate AI and research.
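
As a rough sanity check on the 120 teraflops figure quoted for the 640 Tensor Cores, here is a back-of-the-envelope sketch. The per-core rate (64 fused multiply-adds per clock) and the boost clock of about 1.455 GHz are assumptions that do not appear in the announcement above, so treat the result as illustrative rather than an official derivation.

```python
# Back-of-the-envelope estimate of peak Tensor Core throughput.
TENSOR_CORES = 640            # from the announcement
FMA_PER_CORE_PER_CLOCK = 64   # assumption: not stated in the announcement
FLOPS_PER_FMA = 2             # one multiply plus one add
BOOST_CLOCK_HZ = 1.455e9      # assumption: approximate boost clock


def peak_tensor_teraflops(cores, fma_per_clock, clock_hz):
    """Return peak throughput in teraflops for the given configuration."""
    return cores * fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


v100_teraflops = peak_tensor_teraflops(
    TENSOR_CORES, FMA_PER_CORE_PER_CLOCK, BOOST_CLOCK_HZ
)
print(f"Estimated peak: {v100_teraflops:.1f} teraflops")
```

Under those assumptions the estimate lands just under 120 teraflops, which fits the headline number if it is rounded up.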

Source

The V100 will first appear inside Nvidia's bespoke compute servers. Eight of them will come packed inside the $150,000 (~£150,000) DGX-1 rack-mounted server, which ships in the third quarter of 2017. A 250W PCIe slot version of the V100 is also in the works (probably priced at around £10,000), as well as a half-height 150W card that's likely to feature a lower clock speed and disabled cores.

Source

Will the PS5 even be 1/10 as powerful?
