Disclaimer: TFLOPS aren't actually BS per se, but using them as a measurement of gaming performance doesn't really work well, even within the same architecture in some cases.

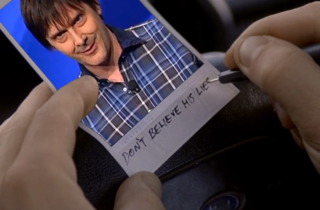

So, Nvidia just announced their new RTX 3000 GPUs, which show INSANE performance increases on paper. The TFLOPS numbers have gone up more than anyone could have imagined, and going by the numbers alone, even the RTX 2080 Ti and Xbox Series X look low-end next to the weakest of the new GPUs. So I made a thread mocking people over how this all looks, and someone replied that the 3070 is "twice as powerful" as the PS5. However, it's really not that simple. There's some magic going on here that you all need to be aware of, and once RDNA 2 cards are reviewed it will become very clear that TFLOPS are almost completely meaningless to gamers.

So first of all, what even is a FLOP? Well... on its own, that word doesn't mean much in this context. FLOPS is an acronym that stands for "FLoating point Operations Per Second." Basically, it's a number that says how much raw computing power a processor has. So you might think that you run some kind of program to measure this number, right? Usually, no. In reality, it's typically calculated using a formula. There are different formulas for different types of processors and different kinds of FLOPS, but the one commonly used for single-precision (FP32) operations on a modern GPU is as follows:

GFLOPS = Shader count x Clockspeed in GHz x 2

Note: Nvidia calls shaders "CUDA cores" and AMD calls them "Stream Processors" or "SPs."

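Obviously that's a very simplified version of the concept, but it's enough for the point being made here. (The x2 is there because each shader can do one fused multiply-add per clock, which counts as two floating point operations.) If you doubt that it's really that simple, let's calculate the RTX 3070's TFLOPS: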

5888 x 1.725 GHz x 2 = 20,313.6 GFLOPS, or 20.3 TFLOPS

Now, we know that the 3070 is faster than the 2080 Ti, so let's see where that falls.

4352 x 1.545 GHz x 2 = 13,447.68 GFLOPS, or 13.4 TFLOPS

Seems pretty clear-cut then, huh? Thanks to a higher clock speed and many more shaders, the 3070 is a whopping 51% faster than the 2080 Ti!
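For anyone who wants to check the arithmetic, here's the same formula as a tiny Python function, using the shader counts and boost clocks quoted above. It's just the paper formula; it doesn't measure anything about real performance.

```python
def fp32_tflops(shaders: int, clock_ghz: float) -> float:
    """Peak single-precision TFLOPS: shaders x clock (GHz) x 2, divided by 1000.

    The x2 is because one fused multiply-add per clock counts as two FLOPs.
    """
    return shaders * clock_ghz * 2 / 1000


rtx_3070 = fp32_tflops(5888, 1.725)     # rated boost clock
rtx_2080_ti = fp32_tflops(4352, 1.545)  # rated boost clock

print(f"RTX 3070:    {rtx_3070:.1f} TFLOPS")
print(f"RTX 2080 Ti: {rtx_2080_ti:.1f} TFLOPS")
print(f"Paper advantage: {(rtx_3070 / rtx_2080_ti - 1) * 100:.0f}%")
```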

... But then why didn't Nvidia claim that it's 51% faster? And why would they charge so little for such a massive performance bump? Well, there are several factors. One that some of you might immediately think of is IPC. Maybe Ampere's IPC was reduced compared to Turing's to make room for more cores? Well, that's not it. In fact, the IPC is actually higher! How can I say that? Because the 3070 actually has fewer cores than the 2080 Ti, yet it's still faster. See, the shaders aren't really the true cores of a GPU; they're just part of the core. For Nvidia, the cores are the Streaming Multiprocessors, better known as SMs. The 2080 Ti has 68 SMs, while the 3070 has just 46.
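Here's that reasoning as rough arithmetic. It assumes the 3070 at least matches the 2080 Ti in games, and it treats "SM count x boost clock" as a crude stand-in for how much SM work each card can issue per second; both are simplifying assumptions, not measurements.

```python
# SM counts and rated boost clocks quoted in the post.
SM_3070, CLOCK_3070_GHZ = 46, 1.725
SM_2080_TI, CLOCK_2080_TI_GHZ = 68, 1.545

# Crude "SM-cycles per second" for each card, in billions.
sm_cycles_3070 = SM_3070 * CLOCK_3070_GHZ
sm_cycles_2080_ti = SM_2080_TI * CLOCK_2080_TI_GHZ

# Assumption: the 3070 performs at least as well as the 2080 Ti in games.
# Then each 3070 SM must get at least this much more work done per clock.
required_per_sm_gain = sm_cycles_2080_ti / sm_cycles_3070

print(f"3070:    {sm_cycles_3070:.2f} billion SM-cycles/s")
print(f"2080 Ti: {sm_cycles_2080_ti:.2f} billion SM-cycles/s")
print(f"Required per-SM, per-clock gain: {(required_per_sm_gain - 1) * 100:.0f}%")
```

Under that assumption, each Ampere SM has to do roughly a third more per clock than a Turing SM (about 32%), even after accounting for the 3070's higher clock.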

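So, how is it possible that the 3070 has more CUDA cores if those are part of the SM and the 2080 Ti has more SMs? It's pretty simple, actually. The 20 series has 64 CUDA cores per SM, while the 30 series has 128 per SM. While the CUDA cores do contribute to performance, games use so many other parts of the SM that doubling the per-SM count doesn't raise game performance anywhere near as much as it raises TFLOPS. (On Ampere, half of those 128 units share a datapath with the integer units, so they can only do FP32 math on clocks when that datapath isn't busy with integer work, and games do a fair amount of integer work.)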
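A quick way to see how fewer SMs can still add up to more CUDA cores, using the SM counts and per-SM figures from this post. The "peak" per-SM figure is a best case that assumes every FP32 unit does a fused multiply-add every single clock, which real games don't come close to.

```python
def cuda_cores(sms: int, cores_per_sm: int) -> int:
    """Total FP32 units ("CUDA cores") = SM count x FP32 units per SM."""
    return sms * cores_per_sm


def peak_flops_per_sm_per_clock(cores_per_sm: int) -> int:
    """Best case: each FP32 unit does one fused multiply-add (2 FLOPs) per clock."""
    return cores_per_sm * 2


for name, sms, per_sm in (("RTX 2080 Ti (Turing)", 68, 64), ("RTX 3070 (Ampere)", 46, 128)):
    print(f"{name}: {sms} SMs x {per_sm} = {cuda_cores(sms, per_sm)} CUDA cores, "
          f"{peak_flops_per_sm_per_clock(per_sm)} peak FP32 FLOPs per SM per clock")
```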

"So you're saying that Nvidia lied! The REAL performance is 10.15 TFLOPS! Weaker than PS5 confirmed!"

Yeah, no. 20.3 TFLOPS is still accurate. It's just that shader TFLOPS capture so little of what the GPU does in a game that they're pretty much meaningless to us. Using them as an absolute comparison for gaming performance doesn't really get us anywhere. It's a poor metric, especially when comparing consoles that have custom hardware for certain things. In addition, while the shaders don't usually contribute much to gaming on their own, certain games can make heavy use of them and actually see a large boost from this change. Many GPU-accelerated programs may make great use of them as well. Thus, we can't claim that the figure is fake or misleading, or that Nvidia pulled a trick here. It's just that the figure is pretty much arbitrary for gaming.

"Okay, fine. But I just noticed that you used boost clocks for your calculations! Did Nvidia do that too?"

Yes but-

"FALSE ADVERTISING! ONLY 17.7 TFLOPS THAT PERFORMS LIKE 8.8 TFLOPS CONFIRMED!"

Let me finish, please. Yes, but boost in 2020 is vastly different from what it was in 2013, and that applies to the PS5 as well. Under typical loads like gaming, modern CPUs and GPUs (outside of laptops) don't boost for a little while and then drop back to base. In fact, since 2014's Maxwell, Nvidia GPUs often run a few hundred MHz above the rated boost clock in games without any overclocking! Basically, the GPUs have limits on power usage and temperature, and they'll boost higher when they're running cool and have headroom for more power. AMD and Intel are adopting similar technology in their CPUs and GPUs as well, though Nvidia's is more aggressive.
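To make the "boost until you hit a limit" idea concrete, here's a toy governor in Python. To be clear, this is NOT how Nvidia's GPU Boost is actually implemented: the 15 MHz step, the 2.1 GHz ceiling, the 230 W and 83 °C limits, and the linear power/temperature models are all made-up numbers for illustration. The only real figures are the 3070's 5888 CUDA cores and its 1.5 GHz base / 1.725 GHz rated boost clocks, which is also where the shouted 17.7 TFLOPS comes from.

```python
from typing import Callable


def pick_clock_mhz(
    base_mhz: int,
    ceiling_mhz: int,
    step_mhz: int,
    power_w: Callable[[int], float],
    temp_c: Callable[[int], float],
    power_limit_w: float,
    temp_limit_c: float,
) -> int:
    """Toy boost governor (hypothetical, NOT Nvidia's GPU Boost).

    Starts at base and keeps stepping up while the next step would stay
    within both the power and temperature limits. Never drops below base.
    """
    clock = base_mhz
    while clock + step_mhz <= ceiling_mhz:
        candidate = clock + step_mhz
        if power_w(candidate) > power_limit_w or temp_c(candidate) > temp_limit_c:
            break  # the next step would exceed a limit, so stay here
        clock = candidate
    return clock


def fp32_tflops(shaders: int, clock_mhz: int) -> float:
    """Peak FP32 TFLOPS: shaders x clock x 2 FLOPs (one FMA) per clock."""
    return shaders * clock_mhz * 2 / 1_000_000


# Made-up linear models: power draw and temperature rise with clock speed.
def power_model_w(mhz: int) -> float:
    return 0.12 * mhz


def temp_well_cooled_c(mhz: int) -> float:
    return 40 + 0.015 * mhz


def temp_poorly_cooled_c(mhz: int) -> float:
    return 60 + 0.015 * mhz


CUDA_CORES_3070 = 5888
BASE_MHZ, RATED_BOOST_MHZ = 1500, 1725  # RTX 3070 rated clocks
CEILING_MHZ, STEP_MHZ = 2100, 15        # hypothetical
POWER_LIMIT_W, TEMP_LIMIT_C = 230, 83   # hypothetical

print(f"base:        {fp32_tflops(CUDA_CORES_3070, BASE_MHZ):.1f} TFLOPS")
print(f"rated boost: {fp32_tflops(CUDA_CORES_3070, RATED_BOOST_MHZ):.1f} TFLOPS")
for label, temp_model in (("well cooled", temp_well_cooled_c),
                          ("poorly cooled", temp_poorly_cooled_c)):
    mhz = pick_clock_mhz(BASE_MHZ, CEILING_MHZ, STEP_MHZ, power_model_w, temp_model,
                         POWER_LIMIT_W, TEMP_LIMIT_C)
    print(f"{label}: settles at {mhz} MHz -> {fp32_tflops(CUDA_CORES_3070, mhz):.1f} TFLOPS")
```

With these made-up numbers, the well-cooled card settles at 1905 MHz (above the rated boost) and the poorly cooled one at 1530 MHz, but neither falls below base. That's the sense in which base is a worst case rather than the typical clock.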

Enter the PS5 and its supposed "9.2 TFLOPS." The PS5 can't boost past its announced maximum, of course, but the idea that it'll typically run at some lower "base" clock is inaccurate and ultimately irrelevant. The PS5's clocks are governed by power and load balancing between the CPU and GPU. Some of you are taking that to mean that only one of them can run at full clocks at a time and that devs will be forced to find the right balance, but that's not the case. Really, it's just a way to manage power usage.

That one dev who said they had to drop the CPU to 3.0GHz to get the full speed out of the GPU likely wouldn't have benefited much if the clocks were fixed at full boost, since it was load balancing that made this happen, not just power balancing. How do I know this? Because that's how AMD SmartShift works. The CPU clock dropped because the game they're making pushes the GPU hard and doesn't push the CPU much. Since the game is GPU-bottlenecked while the CPU has headroom to spare, power is diverted to the GPU's Compute Units (CUs, AMD's equivalent of SMs, with 64 SPs each), which are the parts actually being stressed. If the GPU is the bottleneck and already running at full clocks, a faster CPU wouldn't make much of a difference; it would just use more energy while still sitting there not doing much. It's possible this could cause more stutters compared to the XSX, but a good developer should be able to work around that.

So, basically, the PS5 will run at full speed when it needs to, just like Nvidia GPUs run as fast as they can. I know this won't stop the trolls, but either way the difference between the PS5 and XSX won't be that big. In relative terms, it'll actually be smaller than the gap between the PS4 Pro and the One X. The PS5's dedicated hardware for things like audio and decompression makes this even less relevant, since that's work the CPU no longer has to handle, which makes the difference in CPU speeds more or less moot.
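Here's the console gap worked out with the same peak-FP32 formula, using CUs x 64 SPs as the shader count. The CU counts and clocks are the publicly announced specs (PS5: 36 CUs at up to 2.23 GHz; Series X: 52 CUs at 1.825 GHz; PS4 Pro: 36 CUs at 911 MHz; One X: 40 CUs at 1.172 GHz), and the 2.0 GHz PS5 row is only there to show where a figure like "9.2 TFLOPS" comes from. These are paper numbers, not measured game performance.

```python
SPS_PER_CU = 64  # stream processors per compute unit


def fp32_tflops(cus: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS: CUs x 64 SPs x clock (GHz) x 2, divided by 1000."""
    return cus * SPS_PER_CU * clock_ghz * 2 / 1000


def gap_percent(bigger: float, smaller: float) -> float:
    """How much higher the bigger figure is, in percent."""
    return (bigger / smaller - 1) * 100


consoles = {
    "PS5 (max 2.23 GHz)": fp32_tflops(36, 2.23),
    "PS5 (at 2.0 GHz)": fp32_tflops(36, 2.0),
    "Xbox Series X": fp32_tflops(52, 1.825),
    "PS4 Pro": fp32_tflops(36, 0.911),
    "Xbox One X": fp32_tflops(40, 1.172),
}
for name, tf in consoles.items():
    print(f"{name}: {tf:.2f} TFLOPS")

xsx_vs_ps5 = gap_percent(consoles["Xbox Series X"], consoles["PS5 (max 2.23 GHz)"])
onex_vs_pro = gap_percent(consoles["Xbox One X"], consoles["PS4 Pro"])
print(f"Series X over PS5:  {xsx_vs_ps5:.0f}%")
print(f"One X over PS4 Pro: {onex_vs_pro:.0f}%")
```

On paper, the Series X's lead over the PS5 works out to about 18%, versus about 43% for the One X over the PS4 Pro. The absolute gaps are similar (roughly 1.9 vs 1.8 TFLOPS), so the gap is smaller only in relative terms.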

TL;DR Shut up about TFLOPS. Let the games do the talking. Also, boost clocks aren't opportunistic anymore and base clocks are just a worst case.
