MSAA should be abandoned everywhere in favor of better frame rate, resolution, or environment quality. You only use MSAA if you have nothing else to turn up.

New Xbox One Update Boosts Console GPU Power

@tormentos: Yes, but that's if you're comparing it to the PS4. You don't have to compare the two consoles every waking moment; this 10% increase will be huge for the Xbox One (relative to its prior state). The games have been pretty good thus far, and we have seen what it is capable of in its handicapped state. Now it's time to wait and see what a 10% increase in GPU looks like.

How is 10% huge when the 40 to 50% advantage the PS4 has over it is small?

Analogy: the Xbox One jumps from 1180 GFLOPS to 1280 GFLOPS. That 10% is considered huge, yet the real difference is 100 GFLOPS.

1840 GFLOPS (PS4) - 1280 GFLOPS (XBO) = 560 GFLOPS... considered small.

Want to know what you can expect from that 10%? 2 or 3 more frames, or 100 more lines of resolution, depending on the game.
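For reference, the percentages behind this exchange can be checked with a few lines of Python. The GFLOPS figures are the ones quoted in this thread, not verified hardware specs, and `percent_gain` is a hypothetical helper name.

```python
def percent_gain(old: float, new: float) -> float:
    """Relative increase from `old` to `new`, in percent."""
    return (new - old) / old * 100.0

# GFLOPS figures as quoted in this thread (assumed, not official specs).
XBO_BEFORE = 1180.0  # Xbox One with the Kinect GPU reservation
XBO_AFTER = 1280.0   # after the reservation is freed
PS4 = 1840.0

print(f"XBO boost:           {percent_gain(XBO_BEFORE, XBO_AFTER):.1f}%")
print(f"PS4 over XBO after:  {percent_gain(XBO_AFTER, PS4):.1f}%")
print(f"PS4 over XBO before: {percent_gain(XBO_BEFORE, PS4):.1f}%")
```

With these inputs the boost is about 8.5%, and the PS4's lead is about 44% after the boost (about 56% before it).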

I think you're confusing my post. Ignore the PS4 for a minute, I know that's hard for you to do. As I said and put in quotes, "relative to its prior state," a 10% increase in graphics processing unit bandwidth is a huge increase. How that 10% is used depends on the developer. If the game is GPU-bound, the increase in bandwidth will definitely make a difference; if it is CPU-bound, it will not make as much of a difference. However, not every increase in power or efficiency means +fps or +resolution; those are only two aspects of the final image. In short, without getting too long-winded, 10% is better than 0.
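The GPU-bound vs CPU-bound point can be illustrated with a toy model. This sketch assumes CPU and GPU work fully overlap, so the frame time is set by whichever side is slower; real engines also have sync points and memory limits, and the millisecond costs below are hypothetical.

```python
def frame_time_ms(cpu_ms: float, gpu_ms: float) -> float:
    """Frame time when CPU and GPU work overlap (pipelined): the slower side wins."""
    return max(cpu_ms, gpu_ms)

def fps(cpu_ms: float, gpu_ms: float) -> float:
    return 1000.0 / frame_time_ms(cpu_ms, gpu_ms)

def fps_after_gpu_speedup(cpu_ms: float, gpu_ms: float, speedup: float) -> float:
    """Frame rate after the GPU becomes `speedup` times faster (1.10 = +10%)."""
    return fps(cpu_ms, gpu_ms / speedup)

# Hypothetical per-frame costs in milliseconds, for illustration only.
cases = {"GPU-bound": (20.0, 33.0), "CPU-bound": (33.0, 20.0)}

for name, (cpu, gpu) in cases.items():
    before = fps(cpu, gpu)
    after = fps_after_gpu_speedup(cpu, gpu, 1.10)
    print(f"{name}: {before:.1f} fps -> {after:.1f} fps")
```

In the GPU-bound case the 10% speedup moves about 30.3 fps to about 33.3 fps; in the CPU-bound case the frame rate does not move at all.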

The reason people say the difference between the PS4 and Xbox One is small is that, despite those big numbers and percentages, the results are not too far off. Most of it comes down to development. The percentages tossed around are used to make the PS4 sound way more powerful than it is. It is indeed more powerful, but the numbers change depending on which aspect you want to talk about that day. At the end of the day it's about image quality, and both systems are putting out images that are not that far apart.

The differences between PS4 and Xbox One are demonstrably large: 1080p vs 720p in some cases, 30fps vs 50fps in others. These are large differences on a technical level; each person here can argue how much these differences mean to them when playing games, and everyone is entitled to their opinion. There is definitely a disconnect between large technical differences and the resulting IQ improvements those differences produce.

What is important is that you use the same method for comparison. If you are using technical differences and say that Xbox One + 10% is a huge improvement, then you must also say that Xbox One + 50% is a gigantic improvement. If you are using IQ output and say that Xbox One + 50% is a small increase, then you must also say that Xbox One + 10% is a tiny increase. Both methods are perfectly valid, but if you are trying to be impartial you cannot mix and match them to say that Xbox One + 10% is huge but Xbox One + 50% is small.

I personally use technical differences when comparing in debates like this, as they are objective; the IQ method is a lot more subjective, but it does have its place.

My theory roughly matches Sniper Elite 3's (proper tiling example) results, i.e. both versions of this shooter run at 1920x1080, with the X1 having a slightly lower frame rate than the PS4.

What results? That game is not out yet, so we have no empirical evidence with which to support any theory. In fact, without any empirical evidence in support you cannot have a theory; all you have is a hypothesis at best and conjecture at worst. You are basing that statement on dev comments stating that the PS4 is at 60FPS and the Xbox One is a little lower. The statement is vague and open to a lot of interpretation, and we have no clue as to whether the IQ settings are the same for both versions. Based on your past success, perhaps we should wait for the game to release and for performance comparisons to be published before concluding that it supports your 'theory'.

Prior to launch I had a hypothesis that the PS4 would be 40-50% more powerful than the Xbox One. I suggested this would manifest itself in either an IQ disparity, a frame rate disparity or a combination of the two. Now, 7 months after launch we have empirical evidence that supports this with the likes of COD:G, BF4, ACIV, Watch_Dogs, Tomb Raider etc.

560 GFLOPS / 60 fps = extra ~9.3 GFLOP budget per frame.

1840 GFLOPS / 60 fps = ~30.7 GFLOP budget per frame.

1280 GFLOPS / 60 fps = 21.3 GFLOP budget per frame.

If the target graphics quality requires 30 GFLOP per frame:

1280 GFLOPS / 42 fps = ~30.5 GFLOP budget per frame.
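The budget arithmetic above can be sketched as two small functions. This assumes frame cost is purely ALU-bound and that each GPU sustains its peak GFLOPS, which real games never do; the function names are hypothetical.

```python
def gflop_per_frame(gflops: float, fps: float) -> float:
    """GFLOP available per frame at a given frame rate (peak, ideal utilisation)."""
    return gflops / fps

def fps_for_cost(gflops: float, cost_gflop_per_frame: float) -> float:
    """Frame rate reachable if every frame costs `cost_gflop_per_frame` GFLOP."""
    return gflops / cost_gflop_per_frame

for label, gflops in [("PS4", 1840.0), ("XBO", 1280.0), ("gap", 560.0)]:
    print(f"{label}: {gflop_per_frame(gflops, 60):.1f} GFLOP per frame at 60 fps")

# Hold the PS4's full 60 fps per-frame workload fixed and run it on the XBO figure.
ps4_cost = gflop_per_frame(1840.0, 60)
print(f"XBO at the PS4's per-frame cost: {fps_for_cost(1280.0, ps4_cost):.1f} fps")
```

Holding the PS4's 60 fps workload fixed, the Xbox One figure comes out at roughly 41.7 fps, which is where the 42 fps line above lands.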

I think it all depends on the engine; I have to see the game's visuals first. Some games can run at 1080p. Look at Wolfenstein: it is 1080p but has dynamic resolution. The Xbox One version falls very close to 720p pixel density, while the PS4 version also has it but never goes below 900p.

Oh, I can completely ignore it.

100 GFLOPS is hardly anything; it will give you a few more frames or something like that. It is not enough for anything big.

You don't get it either, then. A 10% increase is huge for the Xbone when NOT comparing it to the PS4. In other words, take the PS4 out of the equation, open your goddamn eyes and look at what he is saying. He's not even saying it will be on par with the PS4, you moron; he's just claiming that a 10% increase is good for the Xbone.

Why do you idiots always have to compare consoles to each other? Why don't you judge the 10% increase on its own merits instead of comparing it to something else all the time?

It appears that both you and tormentos actually need lessons in understanding things in the correct context, because both of your replies have no bearing on what the other guy was trying to say, and you both look really stupid, lol.

Ignoring the PS4, it's still nothing, dude. 10% is nothing; it would yield just a few more frames, that is it, period. Even completely ignoring the PS4, 100 GFLOPS more is basically nothing. The Xbox One GPU lost way more than that in its downgrade from 1.79 TF, which is what the stock 7790 has, to 1.3 TF. 100 GFLOPS is 3 or 4 frames, with luck.

Let's put it this way.

1180 GFLOPS / 30 FPS = 39.3 GFLOP per frame

The 10% boost adds 100 GFLOPS: 100 / 39.3 = 2.5 more frames.

And that is on an optimal system without any bottlenecks, which the Xbox One isn't.
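The same estimate as a small Python function, assuming frame rate scales linearly with GFLOPS and nothing else is a bottleneck (the "optimal system" caveat above). The function name is hypothetical.

```python
def extra_fps(base_gflops: float, base_fps: float, extra_gflops: float) -> float:
    """Extra frames per second from `extra_gflops`, assuming fps scales linearly with GFLOPS."""
    cost_per_frame = base_gflops / base_fps  # GFLOP each frame consumes
    return extra_gflops / cost_per_frame

print(f"{extra_fps(1180.0, 30.0, 100.0):.2f} extra fps from a 30 fps baseline")
print(f"{extra_fps(1180.0, 60.0, 100.0):.2f} extra fps from a 60 fps baseline")
```

Because the gain is a fixed fraction (about 8.5%) of the baseline, it works out to roughly 2.5 fps from 30 fps and roughly 5 fps from 60 fps, in the ideal case only.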

It was stated that PS4's The Order was memory-bandwidth limited at 1920x1080p with 4x MSAA. Higher memory bandwidth, with a large enough frame buffer, has benefits for certain workloads.

On the PC, we can set the detail settings to fit the graphics card's specs, e.g. increase MSAA and/or shadows if the card has additional memory bandwidth.

The bottom line is that pure ROP comparisons are flawed. Color ROP fill rates include memory write operations. You haven't factored in my Radeon HD 2900 ROP example, which has multiple I/O ports.

Microsoft has already shown 16 ROPs with read and write operations hitting 150+ GB/s of memory bandwidth. Lower color bit depth will allow more ROPs to be used within 176 GB/s of memory bandwidth.
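A rough sketch of how much bandwidth ROPs can demand, assuming one pixel per ROP per clock with no compression or caching. The ROP counts and clocks (16 ROPs at 853 MHz for Xbox One, 32 ROPs at 800 MHz for PS4) are commonly cited figures assumed here, not numbers from this thread.

```python
def rop_bandwidth_gbs(rops: int, clock_mhz: int, bytes_per_pixel: int) -> float:
    """Peak memory traffic in GB/s if every ROP touches one pixel per clock."""
    # ROPs * MHz = Mpixels/s; * bytes = MB/s; / 1000 = GB/s
    return rops * clock_mhz * bytes_per_pixel / 1000

consoles = {"XBO": (16, 853), "PS4": (32, 800)}  # assumed ROP count, clock in MHz
PS4_GDDR5_GBS = 176.0

# Blending reads and writes the colour: 8 bytes for 32-bit colour, 4 bytes for 16-bit.
for name, (rops, mhz) in consoles.items():
    for label, bpp in [("32-bit blend", 8), ("16-bit blend", 4)]:
        print(f"{name} {label}: {rop_bandwidth_gbs(rops, mhz, bpp):.1f} GB/s")
```

Under these assumptions, 32 ROPs blending 32-bit colour would want about 204.8 GB/s, more than the 176 GB/s available, while 16-bit colour drops that to about 102.4 GB/s, which is the post's point about colour bit depth.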

The Xbox One would be GPU-limited at 1920x800 with all the post-process effects The Order has plus 4x MSAA, and I think bandwidth-starved as well. 4x MSAA will fill that ESRAM in an instant and will require more.

MS already claims ESRAM is 140 to 150 GB/s effective on actual running code, which is less than the PS4 has, so I think it will also bandwidth-starve the Xbox One.

In fact, I think ESRAM is 10MB short for 1080p with 4x MSAA, and that's without taking GPU power into account, which I think will be the biggest problem. A 7850 OC will work better at 156 GB/s than a 1.3 TF 7790 will at 200 GB/s no matter what; the lack of power will hurt it regardless of having more bandwidth, which the Xbox One doesn't have either way, since MS already confirmed that.
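For a sense of scale, here is a sketch of the memory multisampled render targets need. It assumes one RGBA8 colour target plus one 32-bit depth/stencil buffer, no MSAA compression, and nothing else resident in ESRAM; real deferred renderers often use several targets and other formats, so this is an illustration, not a measurement of any game.

```python
MIB = 1024 * 1024
ESRAM_MIB = 32  # Xbox One ESRAM capacity

def msaa_target_mib(width: int, height: int, samples: int, bytes_per_sample: int) -> float:
    """Size of one uncompressed multisampled render target in MiB."""
    return width * height * samples * bytes_per_sample / MIB

# Assumed formats: RGBA8 colour (4 bytes/sample) + D24S8 depth/stencil (4 bytes/sample).
for w, h in [(1920, 1080), (1920, 800)]:
    colour = msaa_target_mib(w, h, 4, 4)
    depth = msaa_target_mib(w, h, 4, 4)
    total = colour + depth
    print(f"{w}x{h} 4x MSAA: colour {colour:.1f} + depth {depth:.1f} "
          f"= {total:.1f} MiB vs {ESRAM_MIB} MiB ESRAM")
```

Under these assumptions, colour alone at 1080p 4x MSAA is about 31.6 MiB, and colour plus depth is about 63.3 MiB at 1080p and about 46.9 MiB at 1920x800, so neither fits in 32 MiB at once.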

Jr. Hardware Engineers getting owned.

What he is saying there is that just killing the Kinect reservation would not make Xbox One games already out run better. The 10% has to be programmed for; since that power wasn't accounted for, games already out can't take advantage of it the way they would on PC.

Either way, 10% is basically 3 more frames, with luck.

Exactly: 10% is huge, 40 to 50% is small...

Hell, people ask where the 40 to 50% difference is. Tomb Raider has as big as a 100% gap in frame rate versus the Xbox One version. Using a PC chart, an average of 50FPS vs 30FPS is the difference between a 7770 and a 7870 on PC in Tomb Raider, and the gap grows to 7770 vs 7950 when the PS4 actually runs the code at 60FPS, which happens in many instances: 60 vs 30 is a whopping 100% gap. Oh, and the PS4 version also has better-looking effects, which are scaled down on Xbox One, and 1080p cutscenes, which are 900p on Xbox One. That is quite a huge gap.

The pixel difference alone in Ghosts and MGS5 is more than 100% as well, and some people still ask where the so-called 40 to 50% is, when in reality the PS4 is showing even bigger gaps than 40 to 50%.
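The percentages in these two posts come from simple ratios. A short Python sketch, assuming Ghosts and MGS5 compare 1080p on PS4 against 720p on Xbox One (the resolutions commonly reported for those versions; the post itself does not state them). The helper names are hypothetical.

```python
def pct_more(a: float, b: float) -> float:
    """How much larger `a` is than `b`, in percent."""
    return (a / b - 1.0) * 100.0

def pixels(width: int, height: int) -> int:
    return width * height

print(f"50 vs 30 fps:  {pct_more(50, 30):.0f}% more")
print(f"60 vs 30 fps:  {pct_more(60, 30):.0f}% more")
print(f"1080p vs 720p: {pct_more(pixels(1920, 1080), pixels(1280, 720)):.0f}% more pixels")
print(f"1080p vs 900p: {pct_more(pixels(1920, 1080), pixels(1600, 900)):.0f}% more pixels")
```

1080p has 125% more pixels than 720p, which is the "more than 100%" above; 60 vs 30 fps is exactly 100%, and 1080p vs 900p cutscenes is 44% more pixels.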

Already doing 1080p. By April-May next year, everything announced will be 1080p 30/60. Greatness begins at E3. Phil Spencer is going to show gamers what a genius maestro can do at the E3 conference.

I can't wait until the Phil Spencer cult crashes and burns.

LMAO!!!!!

But seriously, the only issue I see with DC is the car count. 50 just isn't enough these days, and a game with such detail and atmosphere should have more, especially since FH2 will have hundreds, or at least 200+.

The photo will have to be a consolation prize for dat no clowd powwa. :(

I get it; it's just that nobody cares on this board. Most games are multiplatform and the Xbone will be getting the worst of the bunch. If you want to praise the Xbone with no comparisons, run to the Xbone board.

That doesn't make you any less stupid for not getting things in the correct context, dude.

'Lem logic thinking they will be even better than PS4'

Where did the guy say it would be better than the PS4? He didn't.

So tell me, how is he using lem logic? He's not.

End result, your reply just looks plain dumb when you understand the context of what he wrote.

You didn't 'get it' at all; you are just damage controlling because you made yourself look like a tool, lol.

@tormentos That doesn't change the basic fact that, yet again, your answer was completely out of context compared to what the other user posted, and hence you looked like a tool. That is all, have a nice day :)

And by the way, GameSpot managed to get Watch Dogs running on a dual-core PC; the story's on the front page. Looks like you got owned by GS, lol.

Again, 100 GFLOPS is nothing: expect 3 more frames or 100 more lines of resolution.

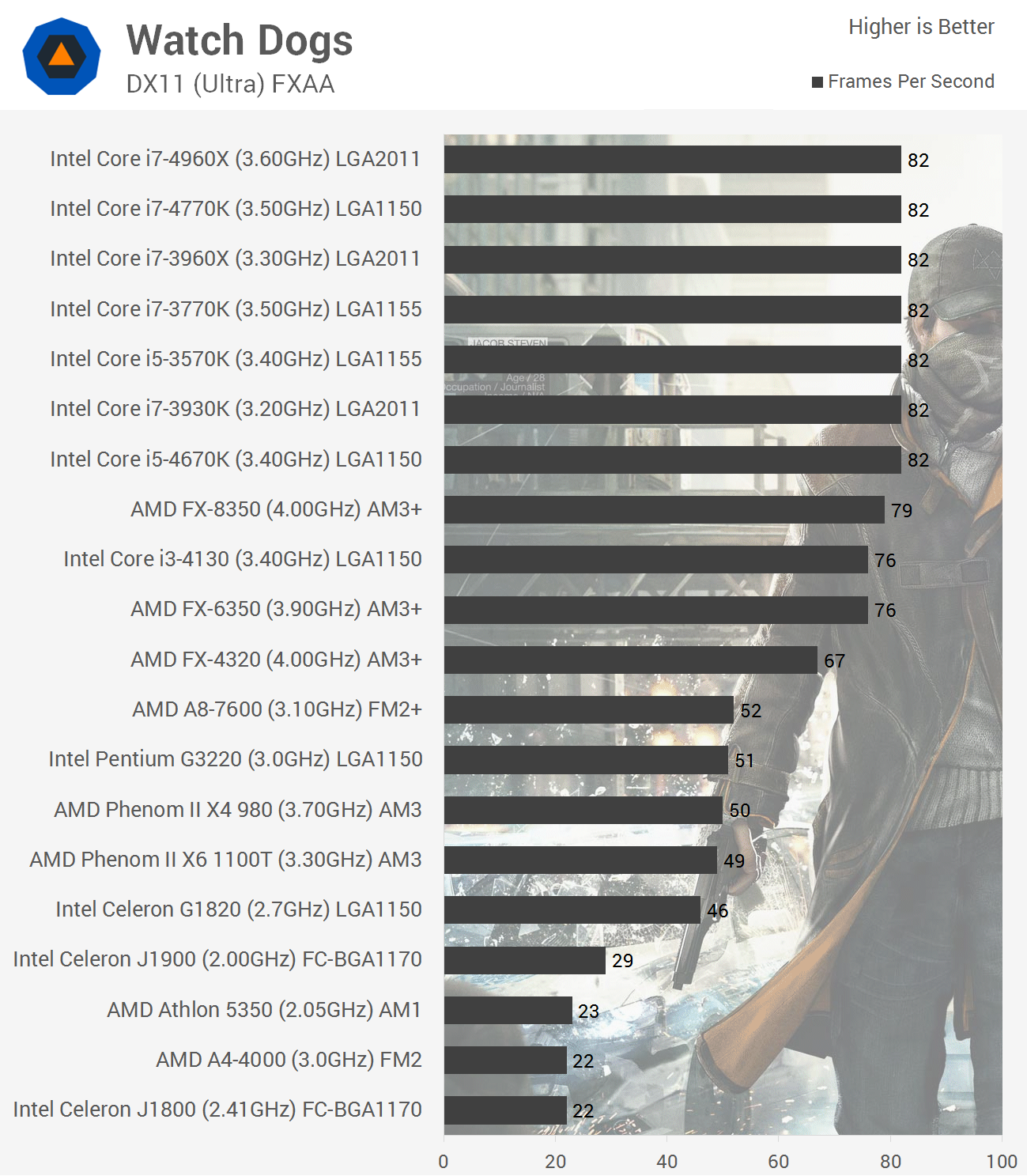

Lo and behold, Watch Dogs runs on our modest Intel PC, even with the lackluster dual-core CPU. But even though it's playable, the results were underwhelming. Never mind the ultra graphics test; Watch Dogs dips below 20fps on low settings. It's telling, as demonstrated in the video above, that there wasn't a dramatic difference in performance between low, medium, and high presets. One look at the Windows task manager illustrates that the CPU, which was always pushing 98 percent or more of its power, is the obvious culprit here. Sure, the GTX 750 Ti isn't a powerhouse, but it's not as flimsy as the Pentium CPU.

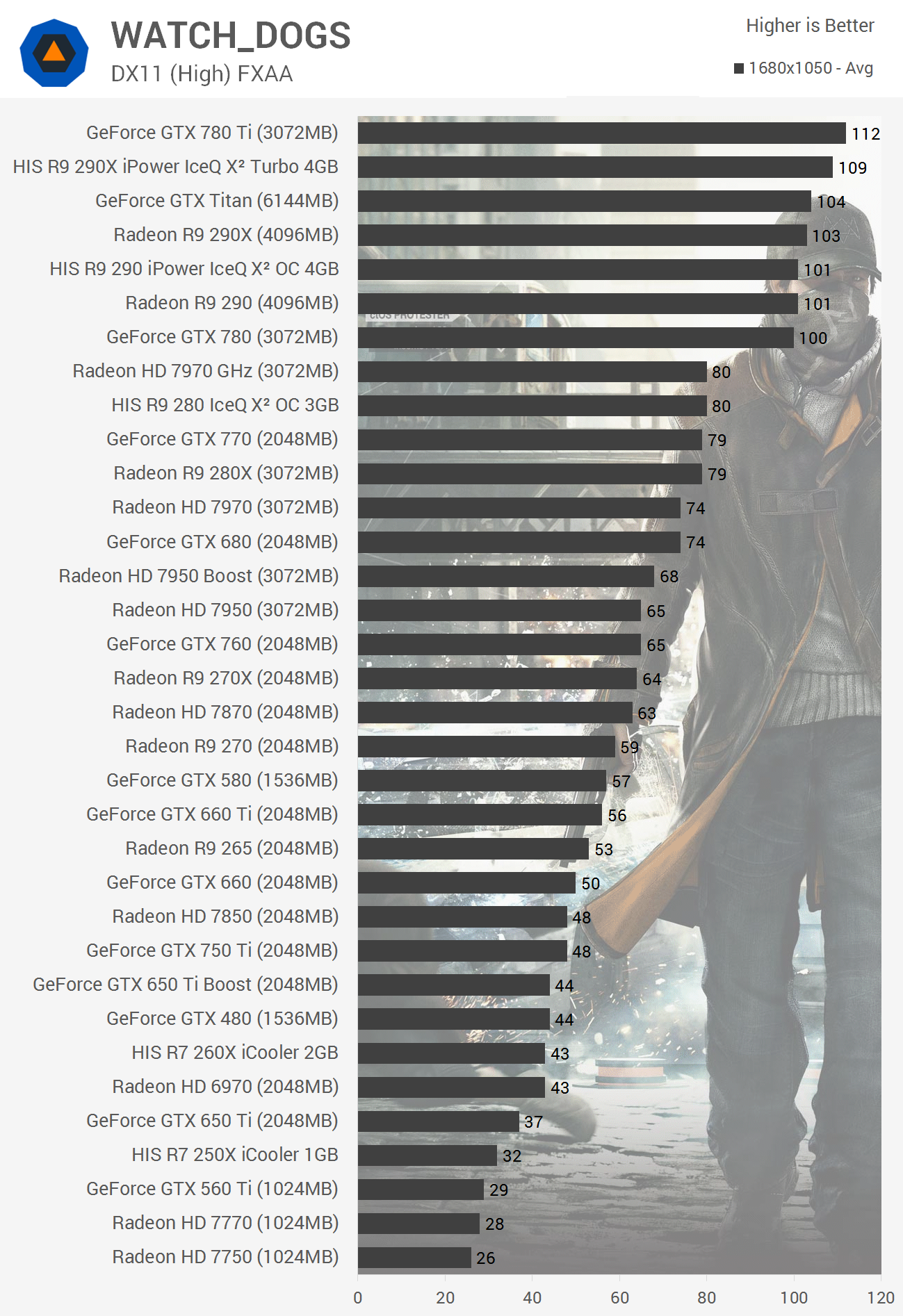

The game looks like a PS3 game, yet it is running on a 750 Ti and they get lower than 20FPS on low quality. Man, that 750 Ti is faster than the 7790 in most tests, and the 7790 is faster than the Xbox One GPU; in fact it is the uncut version of the Xbox One GPU.

And it can't run the game even on low, while looking like a PS3 game.

That card can run BF4 at 1080p at 90FPS on low, 74FPS on medium and 48FPS on high. So getting it to start doesn't mean it runs, dude. It looks like sh** and runs like sh**, and that is assuming someone with such a CPU would go for a 750 Ti in the first place. The most used GPUs on Steam are Intel integrated, so try running Watch Dogs on that when the 750 Ti can barely move it with that CPU. There is a reason they advise you to stay away from dual cores, and now it is proven: even with a good GPU you will still be limited to low because of the CPU bottleneck.

This coming from you, who thought his Phenom 2 quad core was 32-bit..hahahaha

Thank you, I swear I was speaking English, but both those posters seem to be missing the point. 40-50% is a very large difference (I would like to know where this 50% figure is coming from). Saying 50% more power is easy, but what exactly are you saying has 50% more power? (Serious question.) The 10% increase is in GPU bandwidth (speculated). So if you're referring to the PS4's 40% ALU advantage, that is not comparable to the 10% bandwidth increase, as they serve different purposes.

If your metric for measuring the power of the system is the frame rate and resolution of multiplatform games, then you are not making a genuine comparison. The Xbox One has already proven it can run 1080p, 900p, 792p, etc. Those measures would only work if the Xbox One had never run those resolutions.

MS must be getting really desperate.

1 week of Watch Dogs sales on PS4 outsold 12 weeks of Xbone Titanfail sales.

I guess if you're gonna drop out of the industry, you might as well do it with a bang.

Forza, Halo and Gears every year now until the end of the gen. Lower price every 6 months. LOL... It's so pathetic.

It still runs on a dual core, so your 'quad core' minimum statement is obviously wrong. As far as the Phenom 2 is concerned, I got it confused with the Phenom quad core I had in my last PC, which I am assuming is only a first-gen Phenom even though it clearly stated it was a Phenom II X4 on the side of the unit. Bottom line is that I was right: Watch Dogs WILL run on a dual-core device.

http://www.cpubenchmark.net/cpu.php?cpu=AMD+Phenom+9950+Quad-Core.

Pointing out where I went wrong is a puerile way to distract from the fact that you were wrong yourself. News flash, tormentos: me being wrong about something does not make you any less wrong.

The difference between you and me is that I am man enough to admit when I am wrong; you clearly are not :)

It does make you more stupid, though, when I was merely using his quote to put down all the idiot lems in the thread who think it will make any huge difference.

No worries mate, I completely understood what you were trying to say, and the fact that they didn't led me to 2 conclusions:

1. They are either completely lacking in any sort of reading comprehension, or

2. They were making a deliberate effort to make you look like you didn't know what you were talking about, to discredit you.

I get it from tormentos all the time; if I had a quid for every time his replies to my posts were completely out of context, I would be a very rich man, lol.

Be careful with tormentos as well; he will bring up every mistake you made in the past to distract from the mistakes he makes himself. Check out the last sentence in his last reply to me.

No, it needs a damn quad core and up. You apparently don't know what the fu** running means: it doesn't just mean it can physically start. The fact is it will do so badly, and lower than 20FPS on a freaking 750 Ti is a joke when the Xbox One is running it better with a weaker GPU.

No Phenom is 32-bit; all Phenoms are 64-bit.

The game needs a quad core, and GameSpot confirms it.

Less than 20FPS on low settings on a 750 Ti....lol

48FPS on high settings with FXAA on the same 750 Ti, at a little higher than 900p.

This should silence your argument: about 30FPS more just by having a good CPU.

Enjoy... Look at those dual cores..lol

Hell, even the 5350 quad core fared horribly...

Many games can run at 15FPS; that doesn't mean they run. That's unplayable crap.

No it doesn't, but you keep telling yourself that, whatever helps you sleep at night, dude.

Why would I need help when the lems are the ones waking up to bad news practically every day?

Today it was UFC being inferior..LOL

Stop getting upset, dude, it runs on a dual core. Whether or not it runs like crap is irrelevant to me; you claimed it couldn't run on a dual core at all, and clearly it does. Let's just agree to disagree on what actually constitutes 'running' on a PC.

It's also interesting what you said: that would mean my last machine had a 64-bit quad core in it, yet the dual-core Sandy Bridge in my new PC runs rings around it. I am going to research this some more, because it seems strange that a 64-bit dual core would outperform a 64-bit quad core; the only reason I can think of is that my old quad core was somehow damaged.

I can concede I was wrong about the Phenom, it makes no odds to me, but as far as I am concerned Watch Dogs CAN run on a dual core. Very badly, yes, but it still runs.
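A faster dual core beating an older quad core need not mean the quad core was damaged. A sketch using Amdahl's law, a standard model of multi-core scaling; the per-core speeds and the parallel fraction below are hypothetical, not measurements of the Phenom 9950 or any Sandy Bridge part.

```python
def amdahl_speedup(cores: int, parallel_fraction: float) -> float:
    """Amdahl's law: speedup over one core when `parallel_fraction` of the work scales."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)

def relative_throughput(per_core_speed: float, cores: int, parallel_fraction: float) -> float:
    """Per-core speed (relative units) times the multi-core speedup."""
    return per_core_speed * amdahl_speedup(cores, parallel_fraction)

# Hypothetical: the newer dual core is 1.6x faster per core than the old quad core.
for p in (0.5, 0.9):  # hypothetical fractions of the workload that run in parallel
    dual = relative_throughput(1.6, 2, p)
    quad = relative_throughput(1.0, 4, p)
    print(f"p={p}: dual core {dual:.2f}, quad core {quad:.2f}")
```

With these made-up inputs the dual core wins when half the work is parallel (about 2.13 vs 1.60), while at p = 0.9 the quad core edges ahead (about 3.08 vs 2.91), so which chip is faster depends on how well the workload spreads across cores.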

It does make you more stupid, though, when I was merely using his quote to put down all the idiot lems in the thread who think it will make any huge difference.

No it doesn't, but you keep telling yourself that, whatever helps you sleep at night, dude.

Why would I need help when the lems are the ones waking up to bad news practically every day?

Today it was UFC being inferior..LOL

'Every day' is a bit of an exaggeration, but yeah, lots of bad news for lems. Like I care, I'm not a lem.

I mainly game on a Nexus 7 now and I have no intention of getting either a PS4 or an Xbone. Neither is worth the asking price: I don't buy consoles based on power, I buy them based on games, and neither console has enough quality games I can't already get on my 360 to make it worth the money.

You see, I'm not obsessed with power or Grafix. Watch_Dogs on the 360 may not look as pretty as the Xbone or PS4 versions, but it's the same game, so I don't give a flying F***.

I would never spend 400 quid on a console anyway; the most I've paid was 280 quid, and that was for my 360 at launch.

Dear Media: The GPU change was developer facing. Unplugging Kinect does not get you more HP. Devs have to code to the new specs.

— Larry Hryb (@majornelson) June 5, 2014

Jr. Hardware Engineers getting owned.

That's only for the people who were questioning what would happen when a person unplugs Kinect, as in, would it bring better performance, etc.

Forget the power brick. That huge ass fan that's about 1/3 the size of the system will take care of any heat problems. There's a reason it's there. Not because the XB1 is a weak system. Greatness is coming...

Ah, faux-insider is back, one of the many blackace personalities. Can't wait for the damage control when whatever you're expecting doesn't turn up lol. Monday is gonna be a good day on System Wars, hehe ;)

Both consoles will remain with the same power. Only developers will get better at pushing them. PS4 will always be the best.

The i3 4130 is a dual-core CPU and it fares quite well, averaging 76 fps.

And the Xbone will never reach the same performance as the PS4, as you and many others are saying; this should be common knowledge, the gap in GPU power is too big. But when I see rare cases where the PS4 performs about 100% better (like Metal Gear Solid 5 at 720p vs 1080p, both at 60 fps), something must be fishy. If the Xbone version were 800-900p at 60 fps, it would make more sense. Maybe Konami didn't take the time to really make it run well on the Xbone.

http://www.gamespot.com/articles/new-xbox-one-update-boosts-console-gpu-power/1100-6420097/

"Microsoft is looking to close the performance gap between the Xbox One and Sony's more powerful PlayStation 4, and is now giving developers access to more GPU bandwidth with the console's latest software development kit.

"June #XboxOne software dev kit gives devs access to more GPU bandwidth. More performance, new tools and flexibility to make games better," said Xbox boss Phil Spencer on Twitter. Microsoft had previously made rumblings that it was looking to make this the case as soon as possible."

UPDATE: magic is making the X1 more powerful (now it sounds more plausible)

These responses always make me chuckle. I don't care what the mathematical difference in pixels is between 720p, 900p, and 1080p. I have a passing interest in how different the games look. And when I see 720p vs 1080p while playing the game (not staring at screenshots on some website), I really do not notice a difference. When I look at 900p vs 1080p, I don't see a difference at all.

So, all that extra powah of the PS4 being touted by cows really doesn't amount to much. The colors, polygons, textures, and most importantly gameplay of the multiplats of PS4 and Xb1 are the same.

Resolution-gate is a minor issue.
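For reference, here is the pixel arithmetic being dismissed above, as a small Python sketch. It assumes the common 16:9 frame sizes (1280x720, 1600x900, 1920x1080); individual games may render at other sizes.

```python
# Assumed 16:9 frame sizes for the resolutions named in the thread.
RESOLUTIONS = {
    "720p": (1280, 720),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
}


def pixel_count(name: str) -> int:
    """Pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(higher: str, lower: str) -> float:
    """How many times more pixels `higher` renders than `lower`."""
    return pixel_count(higher) / pixel_count(lower)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels")
    print(f"1080p vs 720p: {pixel_ratio('1080p', '720p'):.2f}x")
    print(f"1080p vs 900p: {pixel_ratio('1080p', '900p'):.2f}x")
```

So 1080p pushes 2.25x the pixels of 720p and 1.44x those of 900p; whether that is visible at a normal viewing distance is the subjective part of the argument.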

@tormentos: Yes, but that's if you're comparing it to the PS4. You don't have to compare the two consoles every waking moment; this 10% increase will be huge for the Xbox One (relative to its prior state). The games have been pretty good thus far, and we have seen what it is capable of in its handicapped state; now it's time to wait and see what a 10% increase in GPU looks like.

How is 10% huge when the 40 to 50% the PS4 has over it is small?

Analogy: the Xbox One jumps from 1180 GFLOPS to 1280 GFLOPS, a "10%" that's considered huge, for a real difference of 100 GFLOPS.

1840 GFLOPS (PS4) - 1280 GFLOPS (XBO) = 560 GFLOPS... considered small.

Want to know what you can expect from that 10%? 2 or 3 more frames, or 100 more lines of resolution, depending on the game.
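The arithmetic in the analogy can be checked with a few lines of Python. The GFLOPS figures are the ones quoted in the post, not official specs; measured this way, 1180 to 1280 is about an 8.5% step, while 1840 over 1280 is about 44%.

```python
def percent_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new - old) / old * 100.0


# GFLOPS figures as quoted in the post (not official specifications).
XB1_BEFORE = 1180.0
XB1_AFTER = 1280.0
PS4 = 1840.0

if __name__ == "__main__":
    print(f"XB1 after vs before: +{percent_increase(XB1_AFTER, XB1_BEFORE):.1f}%")
    print(f"PS4 vs updated XB1:  +{percent_increase(PS4, XB1_AFTER):.1f}%")
    print(f"Absolute gaps: {XB1_AFTER - XB1_BEFORE:.0f} vs {PS4 - XB1_AFTER:.0f} GFLOPS")
```

Using the same yardstick on both sides, the update is a single-digit percentage step and the remaining gap is roughly five times larger in both relative and absolute terms.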

I think you're confusing my post. Ignore the PS4 for a minute, I know that's hard for you to do. As I said and put in quotes, "relative to its prior state", a 10% increase in GPU bandwidth is a huge increase. How that 10% is quantified depends on the developer. If the game is GPU-bound, the increase in bandwidth will definitely make a difference; if it is CPU-bound, it won't make as much of a difference. However, not every increase in power or efficiency means +fps or +resolution; those are only two aspects of the final image. In short, without getting too long-winded, 10% is better than 0.

People say the difference between the PS4 and XBOX1 is small because, despite those big numbers and percentages, the results are not too far off. Most of it comes down to development. The percentages tossed around are used to make the PS4 sound way more powerful than it is; it is indeed more powerful, but the numbers change depending on which aspect you want to talk about that day. At the end of the day it's about image quality, and both systems are putting out images that are not that far apart.

The differences between PS4 and Xbox One are demonstrably large: 1080p vs 720p in some cases, 30fps vs 50fps in others. These are large differences on a technical level. Each person here can argue how much these differences mean to them when playing games, and everyone is entitled to their opinion. There is definitely a disconnect between large technical differences and the image quality (IQ) improvements those differences produce.

What is important is that you use the same method for comparison. If you are using technical differences and say that Xbox One + 10% is a huge improvement then you must also say that Xbox One + 50% is a gigantic improvement. If you are using IQ output and say that Xbox One + 50% is a small increase then you must also say that Xbox One + 10% is a tiny increase. Both of these methods are perfectly valid and both of them are correct but if you are trying to be impartial then you cannot mix and match them to say that Xbox One + 10% is huge but Xbox One + 50% is small.

I personally use the technical differences when comparing in debates like this as they are objective, the IQ method is a lot more subjective but it does have its place.

My theory roughly matches Sniper Elite 3's results (a proper tiling example), i.e. both versions of this shooter run at 1920x1080, with the X1 having a slightly lower frame rate than the PS4.

What results? That game is not out yet, so we have no empirical evidence with which to support any theory. In fact, without any empirical evidence in support you cannot have a theory; all you have is a hypothesis at best and conjecture at worst. You are basing that statement on dev comments where they state the PS4 is at 60 FPS and the Xbox One is a little lower. The statement is a bit vague and open to a lot of interpretation, and we also have no clue as to whether the IQ settings are the same for both versions. Based on your past success, perhaps we should wait for the game to release and for performance comparisons to be published before jumping to the conclusion that it supports your 'theory'.

Prior to launch I had a hypothesis that the PS4 would be 40-50% more powerful than the Xbox One. I suggested this would manifest itself in either an IQ disparity, a frame rate disparity or a combination of the two. Now, 7 months after launch we have empirical evidence that supports this with the likes of COD:G, BF4, ACIV, Watch_Dogs, Tomb Raider etc.

Rebellion already stated the rough performance difference between the two versions. Sorry, the games you mentioned are outside Rebellion's stated conditions.

1. For a 60 fps target, with the CUs as the limiter:

1840 GFLOPS / 60 fps = 30.67 GFLOP per-frame budget.

1188 GFLOPS / 60 fps = 19.8 GFLOP per-frame budget.

1188 GFLOPS / 39 fps = 30.46 GFLOP per-frame budget.

Can't you see why the X1 has to reduce its resolution or shader detail when the per-frame budget needs to be around 30.67 GFLOP?

1280 GFLOPS / 42 fps = 30.48 GFLOP per-frame budget.

1320 GFLOPS / 44 fps = 30.0 GFLOP per-frame budget.
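The per-frame budget arithmetic above can be written as a short Python sketch. Like the post, it assumes ALU throughput (GFLOPS) is the only limiter; in practice bandwidth, ROPs and the CPU also bound frame rate. `fps_at_budget` is a hypothetical helper that solves for the frame rate at which a given GPU matches the PS4's 60 fps budget.

```python
def gflop_per_frame(gflops: float, fps: float) -> float:
    """GFLOP available per frame if ALU throughput were the only limit."""
    return gflops / fps


def fps_at_budget(gflops: float, budget_gflop: float) -> float:
    """Frame rate at which `gflops` delivers `budget_gflop` per frame."""
    return gflops / budget_gflop


if __name__ == "__main__":
    ps4_budget = gflop_per_frame(1840, 60)  # PS4 figure from the post
    print(f"PS4 budget at 60 fps: {ps4_budget:.2f} GFLOP/frame")
    for xb1_gflops in (1188, 1280, 1320):  # X1 scenarios from the post
        print(
            f"{xb1_gflops} GFLOPS: {gflop_per_frame(xb1_gflops, 60):.2f} GFLOP/frame at 60 fps, "
            f"same budget as PS4 at {fps_at_budget(xb1_gflops, ps4_budget):.1f} fps"
        )
```

Solved exactly, the break-even rates come out at roughly 38.7, 41.7 and 43.0 fps, close to the rounded 39/42/44 fps used above.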

2. 32 MB of ESRAM is too small for most non-tiled 1920x1080 workloads. Falling back on the ~68 GB/s DDR3 would yield 7770-like results. As Rebellion mentioned, there's a specific workaround for this issue (on both the programmer and runtime/SDK sides). The X1 is not a forgiving machine.
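To see why 32 MB gets tight at 1080p, here is a rough Python sketch that sums render-target sizes. The G-buffer layout is a hypothetical deferred-renderer example chosen for illustration, not Sniper Elite 3's or any specific engine's; real engines vary their formats and may use compression.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # 32 MB of ESRAM, taken as 32 MiB


def target_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Uncompressed size of one render target."""
    return width * height * bytes_per_pixel


# Hypothetical 1080p deferred-rendering layout (bytes per pixel per target).
GBUFFER_LAYOUT = {
    "albedo RGBA8": 4,
    "normals RGB10A2": 4,
    "material RGBA8": 4,
    "HDR lighting RGBA16F": 8,
    "depth/stencil D24S8": 4,
}


def total_bytes(layout: dict, width: int = 1920, height: int = 1080) -> int:
    """Combined size of all targets in `layout` at the given resolution."""
    return sum(target_bytes(width, height, bpp) for bpp in layout.values())


if __name__ == "__main__":
    total = total_bytes(GBUFFER_LAYOUT)
    print(f"One RGBA8 1080p target: {target_bytes(1920, 1080, 4) / 2**20:.1f} MiB")
    print(f"Whole G-buffer: {total / 2**20:.1f} MiB vs ESRAM {ESRAM_BYTES / 2**20:.0f} MiB")
    if total > ESRAM_BYTES:
        print("Does not fit: tile the screen or keep some targets in DDR3")
```

A single 1080p color target (about 7.9 MiB) fits easily, but a fat G-buffer (about 47.5 MiB in this example) does not, which is where tiling or splitting targets between ESRAM and DDR3 comes in.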

Well, I don't know if the prototype 7850 had 32 ROPs as well, but the final version does and the XBone GPU doesn't, so the XBone GPU and the R7 260 are just 7790 derivatives (2 CUs disabled, 12 of 14 active) with 16 ROPs and different clocks. 32 ROPs is one of the things that differentiates the PS4 as a stronger resolution pusher.

I do agree the 7770 and 7850 are pretty much equivalents (of the XBone and PS4 respectively), provided they have higher clocks to compensate for their lower shader counts. The 7770 already has a 1000 MHz base clock, so even a slight OC will probably push it above the XBone. The 7850 would probably need at least 2GB and an overclock to 900 MHz+ to match the PS4. Of course, this is all without counting CPU-bound games.

The X1's 16 ROPs at 853 MHz are effectively 17 ROPs at 800 MHz. ROP count matters less when the ROPs can be memory-bandwidth-limited at high color bit depths, and that's before factoring in the other co-processors within the same ROP unit.
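The clock-normalized ROP figure is simple proportionality, and the same numbers give peak pixel fill rate. A minimal Python sketch, assuming one pixel per ROP per clock (a theoretical peak that ignores the bandwidth limits discussed here); the PS4's 32 ROPs at 800 MHz are included for comparison.

```python
def equivalent_rops(rops: int, clock_mhz: float, reference_mhz: float = 800.0) -> float:
    """ROP count at `clock_mhz` expressed as an equivalent count at `reference_mhz`."""
    return rops * clock_mhz / reference_mhz


def peak_fill_gpix(rops: int, clock_mhz: float) -> float:
    """Theoretical peak color fill rate in Gpixels/s (one pixel per ROP per clock)."""
    return rops * clock_mhz / 1000.0


if __name__ == "__main__":
    print(f"X1: 16 ROPs @ 853 MHz = {equivalent_rops(16, 853):.2f} ROPs @ 800 MHz")
    print(f"7790: 16 ROPs @ 1000 MHz = {equivalent_rops(16, 1000):.0f} ROPs @ 800 MHz")
    print(f"X1 peak fill:  {peak_fill_gpix(16, 853):.2f} Gpix/s")
    print(f"PS4 peak fill: {peak_fill_gpix(32, 800):.2f} Gpix/s")
```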

The prototype 7850's 32 ROPs would be limited to 153.6 GB/s of memory bandwidth, while the Radeon HD 7950 BE's 32 ROPs have 240 GB/s.
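Those bandwidth figures follow from bus width times per-pin data rate. A Python sketch; the bus widths and GDDR5/DDR3 data rates are the commonly published specs for each part, supplied here as assumptions since the thread doesn't list them.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, effective per-pin data rate in Gbps); published specs, assumed here.
MEMORY_CONFIGS = {
    "HD 7850 (GDDR5)": (256, 4.8),
    "HD 7950 (GDDR5)": (384, 5.0),
    "R7-265 (GDDR5)": (256, 5.6),
    "PS4 (GDDR5)": (256, 5.5),
    "X1 main memory (DDR3-2133)": (256, 2.133),
}

if __name__ == "__main__":
    for name, (bits, rate) in MEMORY_CONFIGS.items():
        print(f"{name}: {peak_bandwidth_gbs(bits, rate):.1f} GB/s")
```

The X1's DDR3 line lands at about 68 GB/s, the figure mentioned earlier for falling back from ESRAM.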

Remember, AMD's ROPs are not just color ROPs (for color fill rate, i.e. memory-write-type operations); they also include MSAA and depth/stencil co-processor elements.

Radeon HD 2900's ROP hardware block.

AMD's incoming HBM (replacing GDDR5) can help maximise the existing ROPs' capabilities by giving them the highest memory bandwidth available at the lowest cost within AMD's target price segment.

HBM is AMD/Hynix's stacked-memory solution, standardised through JEDEC.

AMD/Hynix's HBM would weaken the case for Microsoft's cost-reduction approach of 32 MB ESRAM + DDR3, e.g. a future PS4 may have an all-in-one SoC with 8 GB of on-chip HBM. For compatibility reasons, the PS4's HBM would most likely be limited to 176 GB/s.

The Radeon R7-265 has 16 CUs at 900 MHz base with a 925 MHz boost, i.e. 1.843 TFLOPS at 900 MHz, with 179 GB/s of memory bandwidth.
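The TFLOPS figure comes from GCN's layout of 64 shaders per CU, each doing one fused multiply-add (2 FLOPs) per clock. A Python sketch of that formula; the PS4 (18 CUs @ 800 MHz) and X1 (12 CUs @ 853 MHz) configurations are their publicly stated specs, added here for comparison.

```python
SHADERS_PER_CU = 64   # GCN: 64 stream processors per compute unit
FLOPS_PER_CLOCK = 2   # one fused multiply-add per shader per clock = 2 FLOPs


def gcn_gflops(compute_units: int, clock_mhz: float) -> float:
    """Peak single-precision GFLOPS of a GCN GPU."""
    return compute_units * SHADERS_PER_CU * FLOPS_PER_CLOCK * clock_mhz / 1000.0


if __name__ == "__main__":
    r7_265 = gcn_gflops(16, 900)
    ps4 = gcn_gflops(18, 800)
    x1 = gcn_gflops(12, 853)
    print(f"R7-265: {r7_265:.1f} GFLOPS, PS4: {ps4:.1f} GFLOPS, X1: {x1:.1f} GFLOPS")
    print(f"PS4 over X1: +{(ps4 / x1 - 1) * 100:.0f}% FLOPS, +{(18 / 12 - 1) * 100:.0f}% CUs")
```

The R7-265 and PS4 land on the same 1843.2 GFLOPS, and the PS4's lead works out to about 41% in FLOPS versus 50% in CU count, which lines up with the "40-50%" figures argued over in this thread.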

Well, I don't know if the 7850 would benefit from more bandwidth, or if the 7950 can take advantage of the 240 GB/s its 384-bit bus allows (it would use more than 179 GB/s though, I think).

It reminds me of my GT 640 DDR3 (28.8 GB/s BW, 39 GB/s with OC), which matched or exceeded (and rarely came below) a GDDR5 6670. It's also above the 8800 even with such weak bandwidth.

Bottom line is, I think the XB1's and PS4's GPUs are similar enough that we can compare ROP and shader counts, as well as clock speeds, and the PS4 will always come out on top.

The real mystery is Latte.

It was stated PS4's The Order was memory bandwidth limited for 1920x1080p with MSAA 4X. Higher memory bandwidth with enough frame buffer size has benefits for certain workloads.

On the PC, we can set the detail settings to fit the graphics card's specs e.g. increase MSAA and/or shadows if the graphics card has additional memory bandwidth.

The bottom line is, pure ROP comparisons are flawed. Color ROP fill rate includes memory write operations. You haven't factored in my Radeon HD 2900 ROP unit example, which has multiple I/O ports.

Microsoft has already shown 16 ROPs with read and write operations hitting 150+ GB/s of memory bandwidth. A lower color bit depth lets more ROPs be used within 176 GB/s of memory bandwidth.
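A rough way to see the bit-depth point: peak color traffic is ROPs x clock x bytes per pixel, doubled when blending reads the destination back. A Python sketch under those simplifying assumptions; it ignores depth/stencil traffic, color compression and caches, so it is an upper-bound estimate of color traffic only, not a model of Microsoft's 150+ GB/s measurement.

```python
def color_rop_traffic_gbs(rops: int, clock_mhz: float, bytes_per_pixel: int,
                          blending: bool = True) -> float:
    """Peak color-buffer traffic in GB/s if every ROP outputs one pixel per clock.

    With blending, each pixel is read from and written back to memory (2 accesses).
    """
    accesses = 2 if blending else 1
    return rops * clock_mhz * bytes_per_pixel * accesses / 1000.0


if __name__ == "__main__":
    budget = 176.0  # PS4 GDDR5 bandwidth in GB/s, from the thread
    for bpp, label in ((8, "RGBA16F"), (4, "RGBA8"), (2, "RGB565")):
        need = color_rop_traffic_gbs(32, 800, bpp)
        verdict = "over" if need > budget else "within"
        print(f"32 ROPs @ 800 MHz, {label} blended: {need:.1f} GB/s ({verdict} {budget} GB/s)")
```

Under these assumptions, 32 blended ROPs at 32 bits per pixel would want about 205 GB/s, more than 176 GB/s, while a 16-bit format halves that.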

Alright, so the PS4 GPU can use up more bandwidth, that's interesting to hear, but when does it become moot if the rest of the card can't cope with the settings (before we hit a bandwidth wall)? I seriously don't know for sure. I'm also not literate enough on the matter to know the differences between ROPs, I only know as far as ROPs and clockspeed affect pixel rate, which in turn is a factor for higher resolutions.

I also read somewhere that for the 7850 and 7870 in particular, 32 ROPs was kind of overkill for 1080p (considering the rest of the card's power), whereas 7770 and 7790's 16 ROPs was just barely enough for 1080p, saying Nvidia's 24 ROP configuration in some cards was a balanced middle ground, which takes me back again to the GT 640, which has different versions, and mine with lower clockspeed (902mhz), 32 TMUs, 16 ROPs and 28.8GB/s BW is slower than the GT 640 Rev.2 with higher clockspeed (1046mhz), 16 TMUs, 8 ROPs and 40.1GB/s BW. It's tough designing/choosing a balanced card.

This is getting interesting. I surely don't know as much as you do, but I love learning. I do think the PS4 is kind of a simple, brute-force design, and that the XB1 and Wii U approach of a big pool of slower RAM + embedded fast RAM is a more elegant/smart solution with potential benefits for the size/price of the system in the long run. I keep thinking the GDDR5 in the PS4 is wasted for non-GPU operations. I know they went for a simple unified design, but I'm not sure how much more than 2-3GB a "7860" can use before it hits other bottlenecks.

On a side-note, I think MSAA should be abandoned on consoles in favour of SMAA as I think it is a more performance cost-effective measure on a limited system like the consoles'.

I prefer the PS4's design over the Wii U's or X1's. For the X1's silicon budget, Microsoft could have had a 7870 XT-class GPU and waited for an on-chip HBM (stacked memory) solution to lower future costs, i.e. swapping the GDDR5 chips for HBM. Microsoft didn't change its approach and rehashed an Xbox 360-like solution with updated AMD Jaguar and GCN parts.

If Sony had selected ATI for the PS3, it could have had a PS3 that killed the Xbox 360, e.g. nearly dual Xenos GPUs replacing the 6 SPEs and RSX. In the AMD Jaguar and GCN era, you get the PS4 with 18 CUs vs the X1's 12 CUs. You get a larger GPU with Sony's approach.

The 7770's and 7790's memory bandwidths are similar in magnitude. The 7790's 16 ROPs at 1 GHz are effectively 20 ROPs at 800 MHz, but it's gimped by memory bandwidth, i.e. the 7790's 96 GB/s vs the 7850's 153.6 GB/s.

The PS4's GPU solution is roughly similar to the Radeon R7-265, which is the highest in the R7-2xx line. It will be interesting when AMD releases its upcoming mid-range VI GCNs with HBM, e.g. 8 ACEs with 22 CUs** and 32 ROPs.

**Hawaii VI GCN is divided into four 11-CU blocks, each with 16 ROPs, hence a mid-range part may have 22 CUs and 32 ROPs.

Thank you, I swear I was speaking English, but both those posters seem to be missing the point. 40-50% is a very large difference (I would like to know where this 50% difference is coming from). Saying 50% more power is easy, but what exactly are you saying has 50% more power? (Serious question.) The 10% increase is in GPU bandwidth (speculated). So if you're referring to the PS4's 40% ALU advantage, that isn't comparable to the 10% bandwidth increase, as they serve different purposes.

If your metric for measuring the power of the system is the frame rate and resolution of multiplatform games, then you are not making a genuine comparison. The Xbox 1 has already proven it can run 1080p, 900p, 792p, etc. Those measures would only work if the Xbox had never run those resolutions.

From the 50% more CUs..

GPUs on PC also have power differences: the 7870 has 2X the flops of the 7770, yet that doesn't translate into double the frame rate or double the resolution, which is what has been recorded on PS4 vs Xbox One.

50% more power doesn't mean 50% better graphics when it comes to GPUs.

10% is nothing, and the whole 40 to 50% figure was calculated on the total flop count, including the 10% reservation.

Nice blacka4ace.

PS4 outsells Xbox One in April

http://www.cnet.com/news/ps4-outsells-xbox-one-in-april-while-microsoft-leads-in-software/

Meanwhile MS keeps getting owned by a company close to bankruptcy....hahahaha

MS is being beaten by a broke company..hahahahaaaaaaaaaaaaaaaaa

It doesn't run on a dual core, it runs like sh**: lower than 20 FPS on low quality, regardless of having a capable GPU..hahhaahaha

Even some quad cores run it like sh** too..hahahahaaa

Yes, that's because they are different architectures: the Phenom II was an old architecture compared to the more recent Sandy Bridge. But it's not all like you claim..

http://alienbabeltech.com/main/core-i3-2105-vs-phenom-970-x4-the-importance-of-hyperthreading-in-gaming/4/

While the Sandy Bridge was 2 cores, it had HT, which helped, but when the work was heavily multithreaded the Sandy Bridge fell behind the Phenom II quad core. And this is the problem with dual-core CPUs in that game: the AI and graphics are programmed to work best on 4 cores or more, and when you use just 2, the game gets bottlenecked even with a good GPU. Less than 20 FPS at the freaking extreme-low quality on a 750 Ti is a joke; that game runs much faster on the same GPU on high at 1080p with a better CPU.

This game is unplayable on a dual core, even with a good GPU.

Yeah, that is a Haswell CPU, which is basically new tech. The majority of Steam users don't have a dual-core Haswell; they have old AMD and Intel dual cores that are not close to a 2013 Haswell CPU. The argument is based on what the majority on Steam have, and Haswell is relatively new, with a small % of people using it.

Not only is 47% of Steam on dual core, but the largest group, 21%, has clock speeds from 2.3 to 2.69 GHz....

My modified Ford F-150 V8 has 475 lb-ft at the rear wheels. Of raw power.

I still can't beat a 4-cylinder sports car in a race. Does that mean I have less horsepower? No.

Because of setup choices in the vehicles (weight, powertrain, gearing, gas distribution), I lose every time.

Optimization is a huge factor in any device. Raw power does not = Win.

It's how you use that power.

An F-18 fighter jet would beat a 4-cylinder sports car in a straight-line race, i.e. it depends on the magnitude of the raw power.

PS4 is not bloated by Ford F-150 type overheads.

ah poor stormyclown, either blind or just ignoring the differences to be able to worship his piece of plastic, so sad :(

From the 50% more CU..

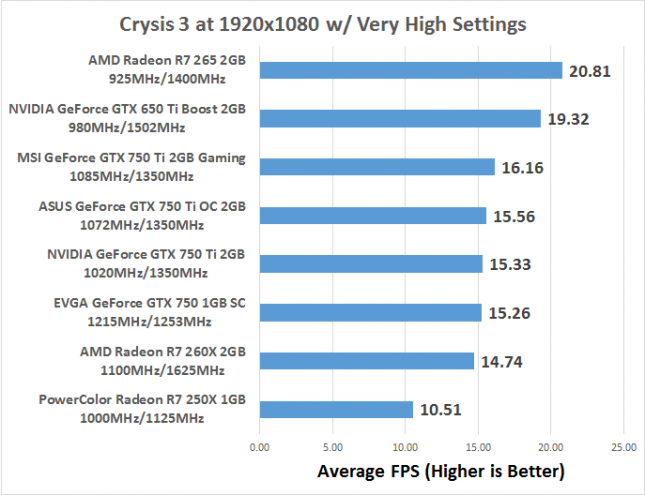

GPU on PC also have power difference the 7870 2X the flops of the 7770 yet it doesn't translate into double frame rate or double resolution,which is the case been recorded on PS4 vs xbox one.

50% more power doesn't mean 50% better graphics when it comes to GPU.

10% is nothing,and the whole 40 to 50% was calculated based on the total flop count including the 10% reservation.

From http://www.legitreviews.com/powercolor-radeon-r7-250x-1gb-video-card-review_137172/5

R7-265 is about 2X over the R7-250X (a renamed 7770) and its 10.51 fps score, i.e. 10.51 fps x 2 = 21.02 fps, close to the 20.81 fps figure.

R7-265 has nearly twice the rasterization units, nearly twice the triangle rate, slightly more than twice the ROPS memory bandwidth, slightly more than twice the memory bandwidth.

-------------

For techpowerup's graph.

The 7770's 46% x 2 = 92%, which is close to the 7870 (2.56 TFLOPS). The 7770 has ~1.28 TFLOPS.

7870 is almost 2X over 7770..

7870 has twice TFLOPS over 7770.

7870 has twice rasterization units over 7770.

7870 has twice triangle rate over 7770.

7870 has twice the ROPS memory bandwidth over 7770.

------------

7950 non-BE has 2.867 TFLOPS with dual rasterization units i.e. similar to 7870.

7950 non-BE has slightly more than twice the TFLOPS over 7770.

7950 non-BE has nearly twice rasterization units over 7770.

7950 non-BE has nearly twice triangle rate over 7770.

7950 non-BE has about 3X ROPS memory bandwidth over 7770.

----------

R9-290X is nearly a quad scale from 7770 i.e. 4X (rasterization units+11 CU+16 ROPS) blocks.

7870 is a dual scale from 7770 i.e. 2X (10 CU+16 ROPS) blocks.

7950/7970 is an odd GCN config since it doesn't directly scale from 7770 building blocks i.e. AMD plans to replace it with VI based mid to high GCNs e.g. 3X block VI GCN** and 2X block VI GCN**.

**2X (rasterization units+11 CU+16 ROPS) = GCN config with 2 rasterization units + 22 CU + 32 ROPS.

3X (rasterization units+11 CU+16 ROPS) = GCN config with 3 rasterization units + 33 CU + 48 ROPS.
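The block arithmetic above can be expressed as a tiny Python sketch. The building block (1 rasterizer + 11 CUs + 16 ROPs) is the post's description of a Hawaii shader engine; `GcnConfig` and `scale` are hypothetical names for illustration, and the 2X/3X results reflect the post's speculation about future parts, not announced products.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GcnConfig:
    """Hypothetical summary of a GCN GPU's front-end and back-end resources."""
    rasterizers: int
    compute_units: int
    rops: int


# One shader-engine-style building block as described in the post.
BLOCK = GcnConfig(rasterizers=1, compute_units=11, rops=16)


def scale(block: GcnConfig, n: int) -> GcnConfig:
    """Configuration made of `n` identical blocks."""
    return GcnConfig(block.rasterizers * n, block.compute_units * n, block.rops * n)


if __name__ == "__main__":
    for n in (2, 3, 4):  # 4 blocks corresponds to Hawaii (R9-290X)
        print(f"{n}X: {scale(BLOCK, n)}")
```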

You're all better off with the superior console, the PlayStation 4. It will outlast the X1, you can be sure of that. So what if it's got a performance boost, it still doesn't make it any better.

The X1 has already lowered its price and removed that God-awful thing you X1 Cock-box fanboys call Kinect, hoping to claw in some new customers (my advice for all of you thinking of purchasing an X1: DON'T, get a PS4; you'll be thankful for the advice I've given you).

PS4 is a raw, straightforward number cruncher? 1 memory pool. 50% easier to program for, going by developers' claims.

X1 needs more hardware-specific optimization code to TRY to reach the same results? Modifications to the code that have to be done to reach peak performance. 2 memory pools.

Jets..Really?

@b4x:

Terrible analogy.

It may be. :p

The hardware setups of the two consoles are not. Certain goals are harder to reach on one than on the other, forcing more optimization on the developer.

A weaker product trying to make up a power gap with software optimizations written for its hardware setup.

@b4x: When you put it like that, I agree with you.

However, the limits of a weaker product are always lower than those of the better product (let's say, if we were comparing the PS4 to a high-end PC).

THAT SAID, the difference between X1 and ps4 is too little for me to notice any difference and give a crap lol (if there is any difference at all)

Just like last gen, or even worse, people are nitpicking to put one console ahead of the other. This is senseless.

I couldn't be happier with both of them =D

854p has never been so close...

Already doing 1080p. By Apr-May next year, everything announced will be 1080P 30/60. Greatness begins at the E3. Phil Spencer is going to show gamers what a genius maestro can do at the E3 conference.

oh look it's "the waiting game" by blackace again, didn't you say the same stupid bs last year with TEH NEW AND MAGICAL SDK!!!!

hahha keep dreaming lem.

Well, even the dual-core 3.0 GHz Pentium G manages an average of over 50 fps, and that's a pretty low-end CPU. I get what you mean, but you shouldn't say that it needs a quad core.

PS4 has a lightweight body with a V18 engine at 8000 RPM, and it doesn't need the X1's ESRAM workarounds. PS4 doesn't have F-150-level overheads.

The X1's ESRAM workaround is an attempt to keep its GPU's co-processor engines running at high efficiency.

On the PS4, the GPU's co-processor engines run at high efficiency thanks to its flat, fast GDDR5 memory layout.

AMD PRT (partially resident textures) doesn't change the CUs' TMU potential, hence there's less need for PRT on the PS4, i.e. it has 5 to 6 GB of usable GDDR5 memory to feed its TMUs before it reverts to PRT (streaming from the hard drive). On the X1, it would need to use PRT and tiling tricks nearly from the start.

So it's confirmed the Xbone is the most powerful current-gen console?

Nope.

Mantle + a prototype 7850 with 12 CUs (1.32 TFLOPS) and 153.6 GB/s of memory bandwidth is still inferior to Mantle + R7-265 with 1.84 TFLOPS and 179 GB/s of memory bandwidth.

R7-265 ~= PS4.
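The figures above follow from the usual GCN peak-throughput arithmetic: each compute unit has 64 shader ALUs, each doing one fused multiply-add (2 FLOPs) per clock, and memory bandwidth is bus width in bytes times per-pin data rate. A minimal sketch; the clocks (860, 900 and 800 MHz), the 16-CU count for the R7 265, the 256-bit bus and the 4.8 / 5.6 Gbps data rates are assumptions chosen to reproduce the quoted numbers, not figures stated in the post.

```python
ALUS_PER_CU = 64   # GCN: 64 shader ALUs per compute unit
FLOPS_PER_ALU = 2  # one fused multiply-add per clock counts as 2 FLOPs


def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Peak single-precision TFLOPS for a GCN GPU."""
    return compute_units * ALUS_PER_CU * FLOPS_PER_ALU * clock_mhz * 1e6 / 1e12


def bandwidth_gbs(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes x per-pin data rate."""
    return bus_bits / 8 * gbps_per_pin


proto_7850 = peak_tflops(12, 860)  # assumed 860 MHz clock
r7_265 = peak_tflops(16, 900)      # assumed 16 CUs at 900 MHz
ps4 = peak_tflops(18, 800)         # assumed 18 CUs at 800 MHz

print(f"Prototype 7850: {proto_7850:.2f} TFLOPS, {bandwidth_gbs(256, 4.8):.1f} GB/s")
print(f"R7 265:         {r7_265:.2f} TFLOPS, {bandwidth_gbs(256, 5.6):.1f} GB/s")
print(f"PS4:            {ps4:.2f} TFLOPS")
print(f"R7 265 / prototype compute: {r7_265 / proto_7850:.2f}x")
```

On these assumptions the R7 265 has roughly 1.4x the prototype's peak compute and about 1.17x its bandwidth, while 18 CUs at 800 MHz and 16 CUs at 900 MHz land on the same 1.84 TFLOPS, which is the sense in which R7-265 ~= PS4.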

The gap between the two boxes isn't that large from my POV, and I own everything from an 8870M (a mobile 7770-type GCN part) to an MSI Twin Frozr R9-290X OC.

These responses always make me chuckle. I don't care what the mathematical difference in pixels between 720p, 900p, and 1080p is. I have a passing interest in how different the games look. And when I see 720p vs 1080p while I'm playing the game (not staring at screenshots on some website), I really don't notice a difference. When I look at 900p vs 1080p, I don't see a difference at all.

So all that extra powah of the PS4 being touted by cows really doesn't amount to much. The colors, polygons, textures, and, most importantly, gameplay of the PS4 and Xb1 multiplats are the same.

Resolution-gate is a minor issue.
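For reference, the raw pixel counts being argued over are simple to work out. A minimal sketch, assuming the standard 16:9 frame size for each label; `RESOLUTIONS` and `pixel_count` are illustrative names:

```python
RESOLUTIONS = {
    "720p": (1280, 720),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
}


def pixel_count(name: str) -> int:
    """Total pixels per frame for a 16:9 resolution label."""
    width, height = RESOLUTIONS[name]
    return width * height


full_hd = pixel_count("1080p")
for name in RESOLUTIONS:
    pixels = pixel_count(name)
    print(f"{name:>5}: {pixels:>9,} pixels ({pixels / full_hd:.0%} of 1080p)")
```

1080p carries 2.25x the pixels of 720p and 1.44x those of 900p; whether that difference is visible at a normal viewing distance is exactly what the thread is arguing about.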

Ah, poor stormyclown, either blind or just ignoring the differences so he can worship his piece of plastic. So sad :(

Keep your mouth shut, cud chewer. Even the devs say this resolution thing is fanboy bullshit.

Yeah, let me guess: the devs that brought 792p to the Xbone? xD

Keep that asshurt, stormyclown.
