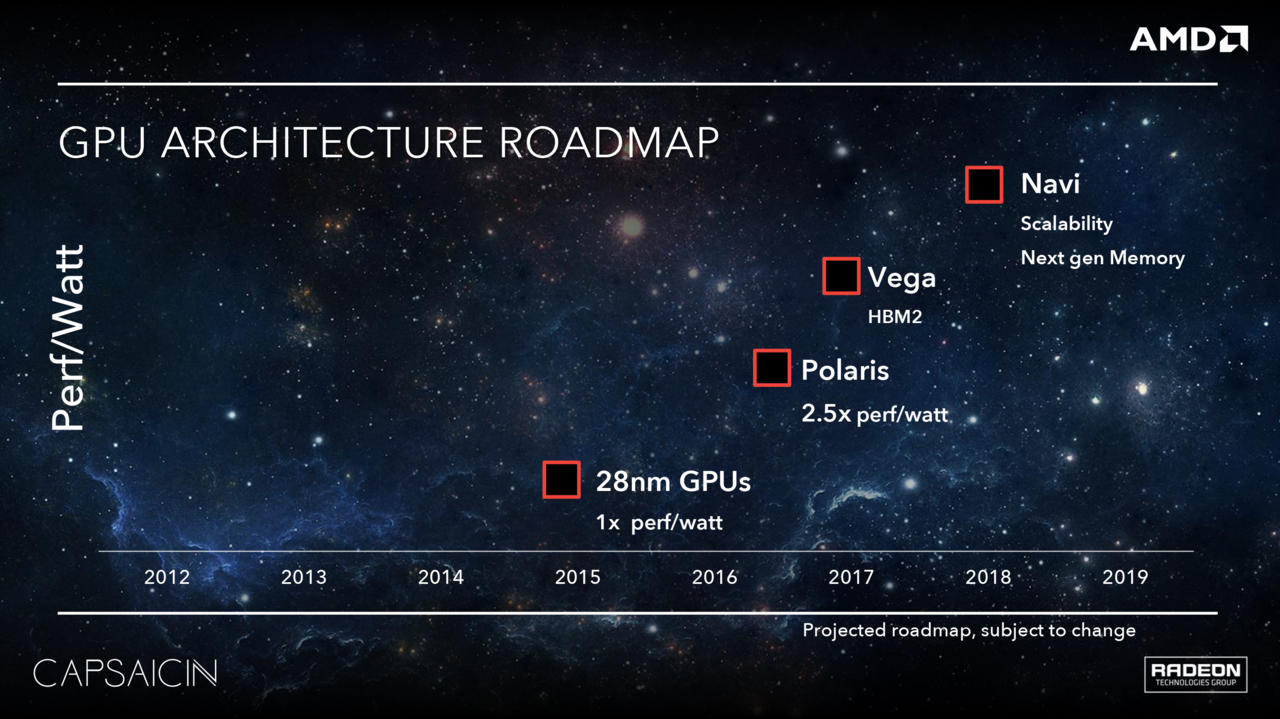

This is AMD marketing speak for being late to the market. Damage-control videos from AMD are nothing new.

AMD's price argument is weak given that NVIDIA's cheaper Super cards arrive in July 2019.

AMD refuses to acknowledge that Navi's wave32 is similar to CUDA's 32-thread warps.

Ah ha, sure. Except they're not wrong. What is the point of implementing a feature in its infancy just for the sake of it?

AMD's aim for hardware-accelerated ray tracing is lighting effects. AMD is not even aiming for multiple ray-tracing types, e.g. lighting and reflections. AMD's ray-tracing argument is geared towards doing a single ray-tracing type and doing it well.

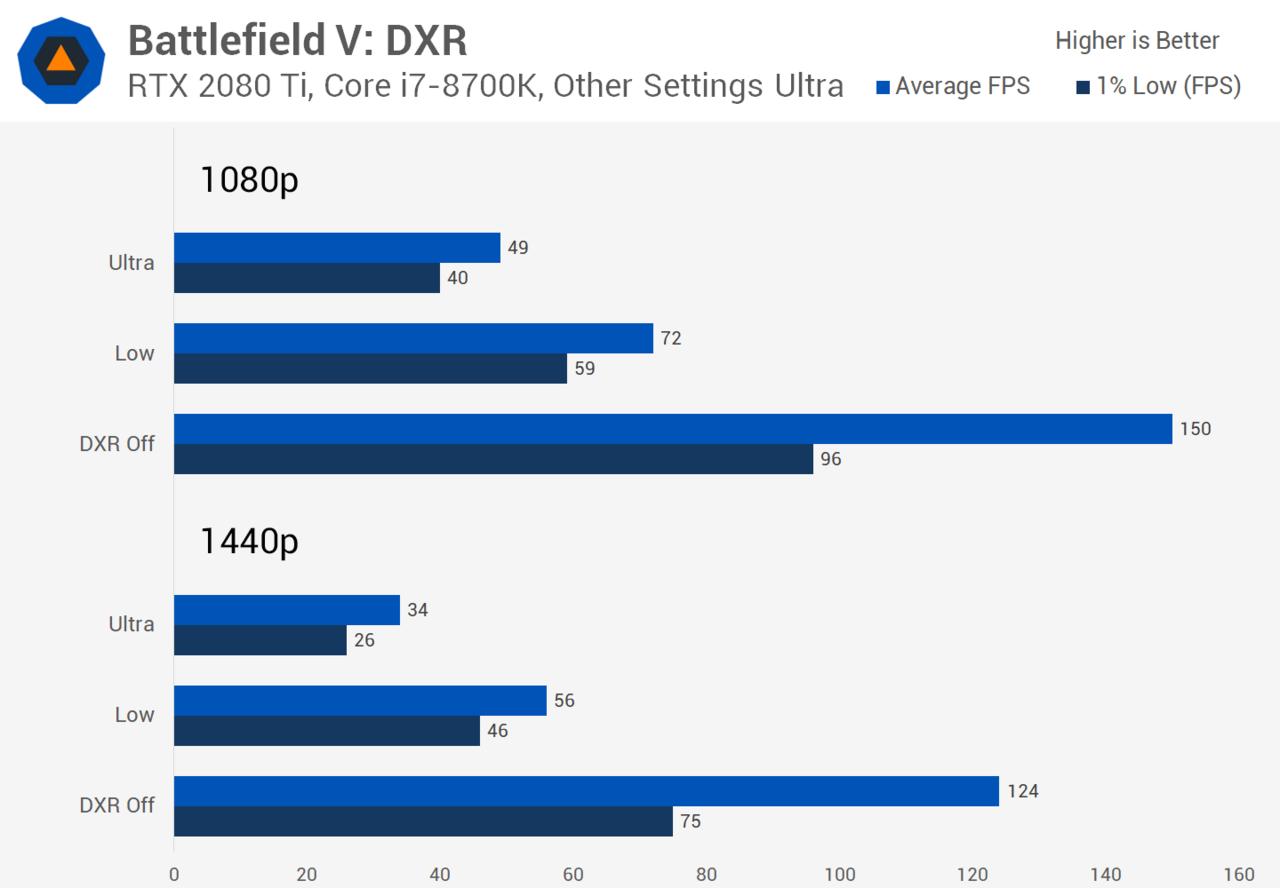

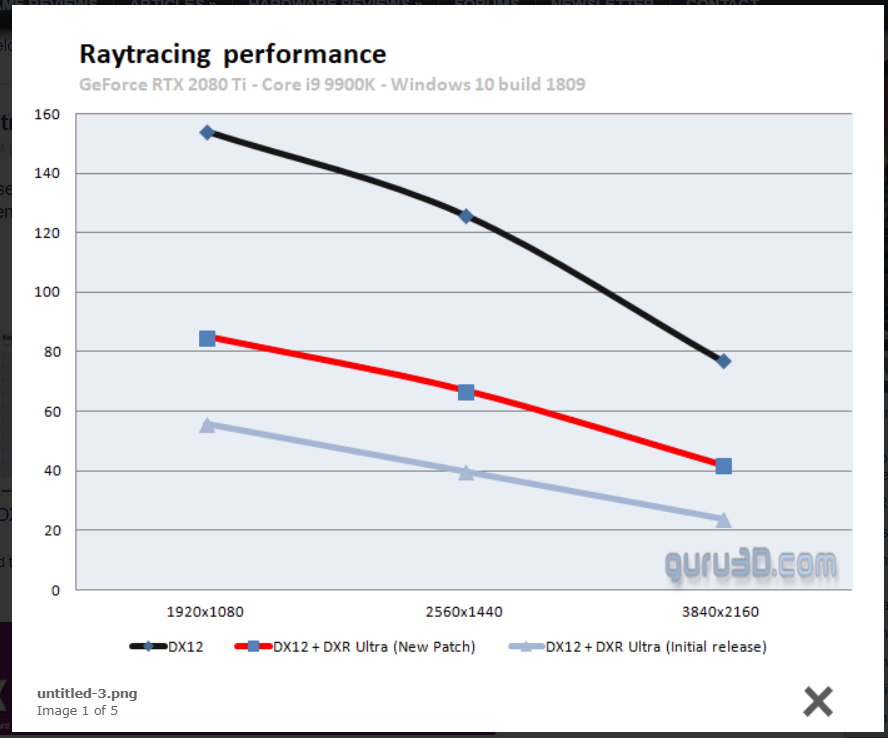

RTX Turing can easily handle single-type ray tracing.

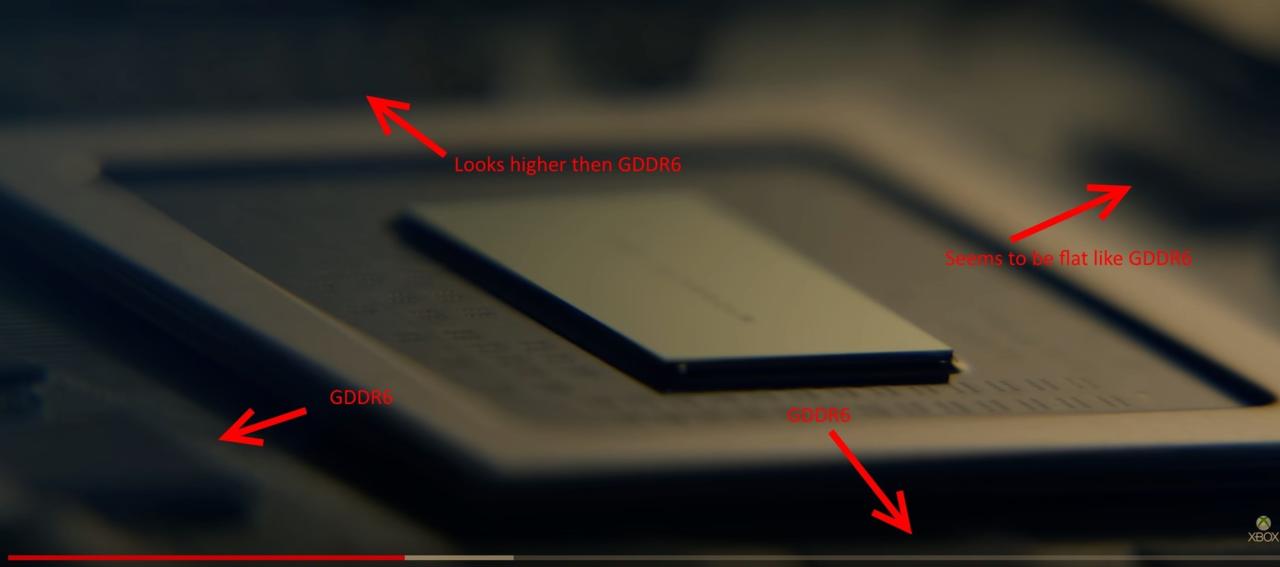

Ray tracing also needs high memory bandwidth and more VRAM, both of which are gimped on the RTX 2060 6GB. The RTX 2060 Super has 8GB of 256-bit GDDR6-14000 memory.
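To put a rough number on the bandwidth point, here is a minimal sketch of the usual peak-bandwidth arithmetic (bus width in bytes times per-pin data rate). The 256-bit, 14 Gbps figures are the ones quoted above; the 192-bit bus for the RTX 2060 6GB is my assumption and is not stated in this thread, and `peak_bandwidth_gbs` is just a helper name made up for illustration.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: memory bus width in bits
    data_rate_gbps: effective data rate per pin in Gbit/s (GDDR6-14000 -> 14.0)
    """
    return bus_width_bits / 8 * data_rate_gbps


# From the post: RTX 2060 Super, 256-bit GDDR6-14000.
super_bw = peak_bandwidth_gbs(256, 14.0)

# Assumption (not from the thread): RTX 2060 6GB on a 192-bit bus at the same 14 Gbps.
base_bw = peak_bandwidth_gbs(192, 14.0)

print(f"RTX 2060 Super: {super_bw:.0f} GB/s")  # 448 GB/s
print(f"RTX 2060 6GB:   {base_bw:.0f} GB/s")   # 336 GB/s
print(f"Ratio: {super_bw / base_bw:.2f}x")
```

These are theoretical peaks only; real ray-tracing workloads will not hit them, so treat the gap as an upper bound on what the wider bus buys.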

Ray tracing should be used with variable rate shading to conserve shader resources and reduce memory bandwidth usage.

Yeah, I don't care that much; I don't have a dog in this fight. I'm just repeating what Jay was saying, and I agree with him.

And to be honest, 'a single ray tracing type' does show that it's in its infancy. It kind of sounds like damage control. Just come out and say they aren't ready to do multiple ray tracing types. If I'm buying into it, I'd want it to do more than a single effect.

Also, you're somewhat making their point. Nvidia's mid-range RTX cards don't do as good a job, and no matter how you put it, ray tracing has a performance impact. So Navi in the lower range might be better off without it.

The other point he made was that whenever ray tracing was brought up, PC gamers just said it wasn't important or that they didn't care, and I've seen that around here. But when it isn't on offer, suddenly they're asking where it is.

If you want to say it's damage control, that's fine, it's your opinion. I don't share that opinion.
