Poll: Is nVidia's Pascal generation the best GPU generation in their history? (64 votes)

Ever since I got the GTX 1060 6GB in my laptop, I have been impressed by its performance and by the fact that I am getting desktop-level performance on my Alienware 17" gaming laptop (fingers crossed that it lasts a long time). Anyway, I am getting up to 1900+ MHz on the core clock. That is insane for a laptop, making it essentially the same as the desktop GTX 1060 6GB, and that's without overclocking. I have practically stopped using my desktop for gaming.

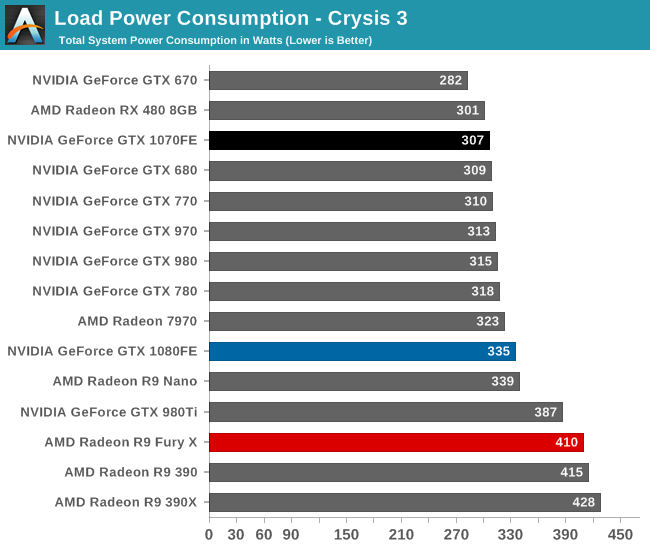

I always thought that nVidia's 8800 series, launched in 2006, was the best GPU series in their history. The 8800 GTX brought leaps and bounds in performance, and it wasn't until June 2008, with the release of the HD 4850/HD 4870, that ATI had a GPU that could beat it. That means nVidia held the lead with a single generation for about a year and a half.

Maxwell was good, but AMD already had the R9 290X, which was as good as the GTX 970, and its refresh in June 2015 (9 months after Maxwell's release), the R9 390 and 390X, was good enough to compete with the 970 and 980.

But judging by the performance numbers that have come out for the RX Vega 64, it will supposedly be as good as the GTX 1080 but not better, and the 1080 Ti will still reign supreme. The GTX 1080 launched last year, which means RX Vega will be a year late and will consume 100 watts more. So Pascal will reign supreme until AMD releases Navi in 2018. That's almost two years of nVidia being on top with Pascal. I don't think nVidia has ever held the lead in the GPU industry for that long. That's not to say RX Vega will be bad (I welcome the fact that AMD finally has something to compete in the high end); it's about how Pascal compares to previous nVidia generations against their competition.

I think we can all thank the cuts to the GPU division that AMD's former CEO made back in 2011, and the departure of top graphics talent in the years that followed.

When you take everything into account in terms of power and performance (maybe not price), the fact that nVidia was able to put an entire desktop GPU inside a laptop and get the same performance as a desktop, for the first time in their history, is indeed an engineering achievement.

So, I think Pascal is the best generation in nVidia's history. Agree?

See, I am not an AMD fanboy. I created an nVidia thread. ;)
