PS5 won't even do 4K60, genius.

I was going to come in and inform the TC that he was asking for a PC that's doing something that PS5 will absolutely not do, but then I saw your post.

No, PS5 will not do native 8K60 in any form. However, if you don't think PS5 will do 4K60, you might be more delusional than the TC.
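To put the 4K60 vs 8K60 gap in numbers, here is a rough pixel-throughput sketch. It assumes "4K" means 3840x2160 (UHD) and "8K" means 7680x4320, and it counts only final output pixels, not the shading work behind each one.

```python
# Rough native pixel throughput at 60fps for common output resolutions.
# Assumption: 4K = 3840x2160 (UHD), 8K = 7680x4320; only output pixels are
# counted, not per-pixel shading cost.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}

def pixels_per_second(name: str, fps: int) -> int:
    """Native output pixels that must be produced each second."""
    width, height = RESOLUTIONS[name]
    return width * height * fps

if __name__ == "__main__":
    for name in ("1080p", "4K", "8K"):
        print(f"{name}@60: {pixels_per_second(name, 60):,} px/s")
    ratio = pixels_per_second("8K", 60) / pixels_per_second("4K", 60)
    print(f"8K60 needs {ratio:.0f}x the pixels of 4K60")
```

Doubling both dimensions quadruples the pixel count, so native 8K60 is four times the output work of native 4K60.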

PS5 will absolutely do native 4K60 for the majority of games. Hell, the X offers 4K30 in many games now and 4K60 in one or two titles.

You'd have to be an idiot to think that PS5 wouldn't be able to do 4K60 when Sony are specifically designing it to do that, but I guess investing $800-$1500 in your current PC just to get that has you butthurt and damage controlling. Poor guy.

Also TC, PS5 is going to be $500, not $399. I guess it's fair to say you and this guy are equally delusional.

You need to look more into tech before you go off on an idiotic rant. The Xbox One X hardly offers native 4K30; it mostly manages that on simple indie games, and the rest is checkerboarding and other forms of upscaling.
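A toy model of why checkerboarding is cheaper than native 4K: assuming a basic scheme that freshly shades half the target pixels each frame in an alternating pattern and reconstructs the other half from the previous frame (real implementations vary and add extra tricks), the per-frame shading load halves. The function names here are hypothetical, for illustration only.

```python
# Toy checkerboard-rendering model (hypothetical helpers, for illustration).
# Assumption: each frame shades every other pixel in a checkerboard pattern,
# alternating which half is shaded; the rest is reconstructed, not shaded.

def shaded_pixels(width: int, height: int, checkerboard: bool) -> int:
    """Pixels actually shaded in one frame."""
    total = width * height
    return total // 2 if checkerboard else total

def checkerboard_mask(width: int, height: int, frame: int) -> list[list[bool]]:
    """True where a pixel is freshly shaded on this frame."""
    return [[(x + y + frame) % 2 == 0 for x in range(width)] for y in range(height)]

if __name__ == "__main__":
    print("native 4K:", shaded_pixels(3840, 2160, checkerboard=False))
    print("checkerboard 4K:", shaded_pixels(3840, 2160, checkerboard=True))
```

Two consecutive frames' masks are complements of each other, so over a pair of frames every pixel gets shaded once.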

Currently the best consumer GPU is the RTX 2080 Ti, and even that struggles to stay above 60fps consistently at 4K in modern graphically advanced games, let alone the games that will come out over the next 8 years. And that's on a 754 mm^2 die. It's impossible for a console to have such a large GPU die, and even if Navi is denser (which it definitely will be, since it's 7nm), it's unlikely to match the 2080 Ti without at least that kind of die size.

And why would he be upset even if what you said were true? Future GPUs always perform better than current ones; that's no surprise to anyone who's into hardware.

There's a reason consoles are as cheap as they are: they use bottom-of-the-barrel hardware on everything except the GPU, and even that's just mid-range in most cases. You get what you pay for.

The RTX 2080 Ti is built on 12nm, not 7nm, and it wastes die area on Tensor and RT cores.

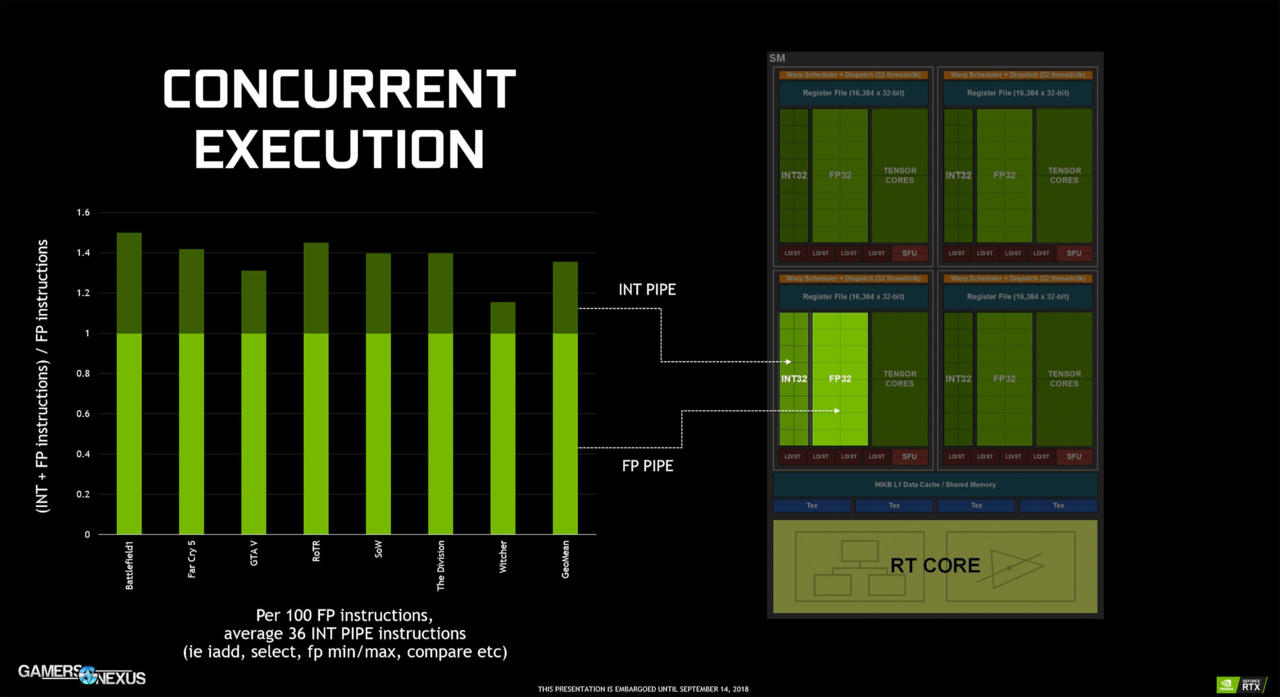

A Turing SM has 64 FP32 CUDA cores and 64 INT32 CUDA cores instead of 128 mixed FP/INT CUDA cores. The majority of current workloads are FP.

On a Pascal SM, issuing an integer instruction stalls floating-point instruction issue. The Turing SM splits integer and floating-point data types into two separate paths.
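A toy issue-slot model of the Pascal vs Turing difference described above. It is a deliberate simplification, not how either scheduler really works: each instruction takes one issue slot, there are no dependencies or memory stalls, and on the split design FP and INT instructions issue concurrently. The 36-INT-per-100-FP mix is a hypothetical example value.

```python
# Toy issue-slot model (simplified assumptions, for illustration only):
# one slot per instruction, no dependencies or memory stalls.

def cycles_shared(fp_ops: int, int_ops: int) -> int:
    """Pascal-style: FP and INT share one path, so they serialize."""
    return fp_ops + int_ops

def cycles_split(fp_ops: int, int_ops: int) -> int:
    """Turing-style: separate FP32 and INT32 paths run side by side."""
    return max(fp_ops, int_ops)

def speedup(fp_ops: int, int_ops: int) -> float:
    return cycles_shared(fp_ops, int_ops) / cycles_split(fp_ops, int_ops)

if __name__ == "__main__":
    # Hypothetical mix: 36 integer instructions per 100 FP instructions.
    print(f"mixed workload: {speedup(100, 36):.2f}x")
    print(f"pure FP workload: {speedup(100, 0):.2f}x")
```

With no integer work the two designs tie, which is why the gain from the separate INT path depends on how much integer work a game actually issues.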

The RTX 2060 has 6.5 TFLOPS of FP32 plus 6.5 TIOPS of INT32, hence its total programmable compute is 13 TOPS, not including the fixed-function Tensor and RT cores.
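The peak-throughput arithmetic behind those RTX 2060 numbers, as a sketch. The 1920 CUDA cores and 1680 MHz boost clock are the card's reference specs, added here for illustration; one FMA is counted as 2 FLOPs per core per clock, and the INT32 rate is simply set equal to the FP32 rate, following the post.

```python
# Peak compute arithmetic for the RTX 2060 (reference specs assumed:
# 1920 FP32 CUDA cores, 1680 MHz boost; FMA counted as 2 ops per clock).

def peak_tera_ops(cores: int, clock_mhz: float, ops_per_clock: int = 2) -> float:
    """Peak throughput in tera-operations per second."""
    return cores * ops_per_clock * clock_mhz * 1e6 / 1e12

fp32 = peak_tera_ops(1920, 1680)   # FP32 TFLOPS
int32 = fp32                       # post's assumption: INT32 matches FP32
print(f"FP32 {fp32:.2f} TFLOPS + INT32 {int32:.2f} TIOPS = {fp32 + int32:.1f} TOPS")
```

This gives about 6.45 TFLOPS per path and about 12.9 TOPS combined, which rounds to the 6.5 + 6.5 = 13 figure above; Tensor and RT core throughput is not included.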

AMD's CUs can mix FP and INT data types without penalty.

Setting aside the Tensor and RT cores, the RTX 2080 Ti's raster power is an evolution of the GTX 1080 Ti's: it still sports six GPCs (six geometry/raster engines) and 88 ROPs, with improvements such as higher clock speeds, a separate integer path, rapid packed math (nearly useless at the moment) and a larger 6 MB L2 cache.

Turing didn't get past Pascal's limit of 8 ROPs per 32-bit memory channel, while AMD's Navi is expected to catch up with 8 ROPs per 32-bit memory channel of its own on GDDR6.
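What "8 ROPs per 32-bit memory channel" implies for total ROP count, as a sketch. It assumes every 32-bit slice of the bus is paired with exactly 8 ROPs; the bus widths used (192, 256 and 352 bits) are the cards' reference specs, added here for illustration.

```python
# ROP count implied by a fixed 8 ROPs per 32-bit memory channel (assumption).

ROPS_PER_CHANNEL = 8
CHANNEL_WIDTH_BITS = 32

def rops_for_bus(bus_width_bits: int) -> int:
    """Total ROPs for a memory bus of the given width in bits."""
    if bus_width_bits % CHANNEL_WIDTH_BITS:
        raise ValueError("bus width must be a multiple of 32 bits")
    return (bus_width_bits // CHANNEL_WIDTH_BITS) * ROPS_PER_CHANNEL

if __name__ == "__main__":
    for card, bus in [("RTX 2060", 192), ("RTX 2080", 256), ("RTX 2080 Ti", 352)]:
        print(f"{card}: {bus}-bit bus -> {rops_for_bus(bus)} ROPs")
```

A 352-bit bus is eleven 32-bit channels, which works out to the 88 ROPs mentioned for the 2080 Ti and 1080 Ti.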

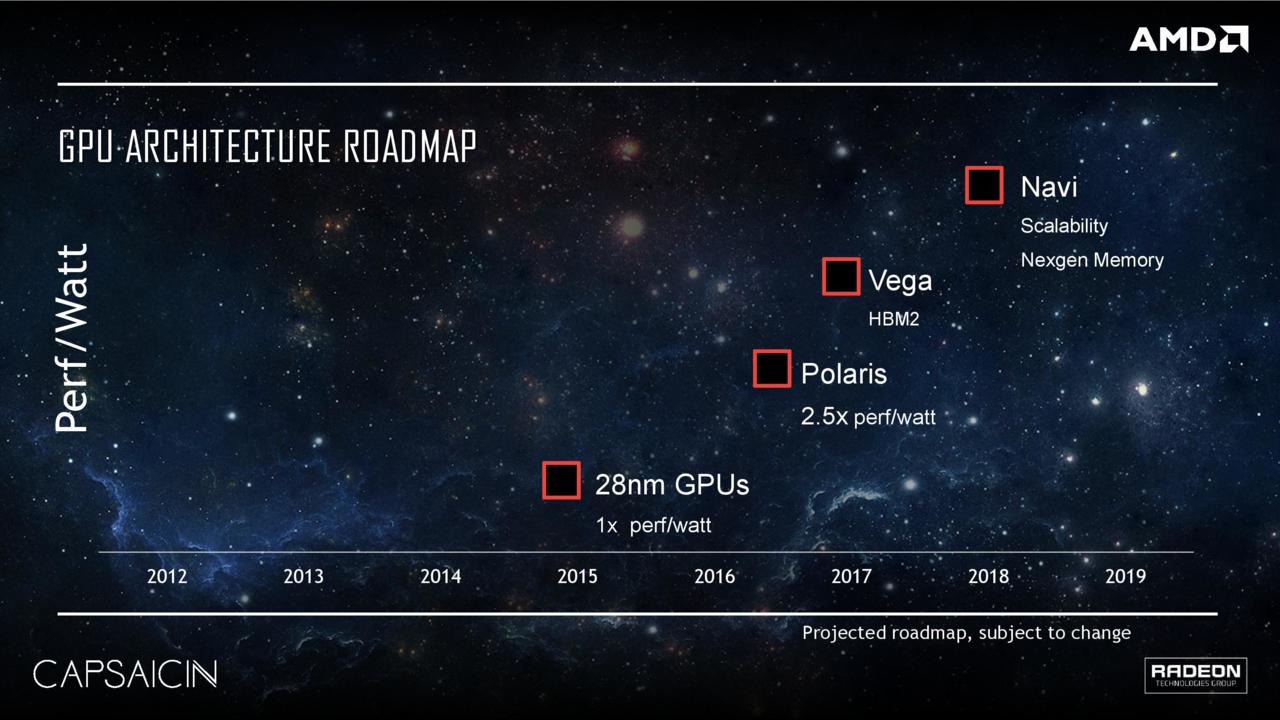

The Radeon VII, at 331 mm^2, has a transistor count similar to the RTX 2080's at 545 mm^2. The VII's quad-stack HBM2 at 1000 MHz is expensive.
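The density and bandwidth arithmetic behind that comparison, as a sketch. The die areas come from the post; the transistor counts (13.2 billion for Radeon VII, 13.6 billion for the RTX 2080's TU104) are added here for illustration. HBM2 is modeled as 1024 bits per stack at double data rate.

```python
# Transistor density and HBM2 peak bandwidth (assumed figures noted above).

def density_mtr_per_mm2(transistors: float, area_mm2: float) -> float:
    """Millions of transistors per square millimetre."""
    return transistors / area_mm2 / 1e6

def hbm2_bandwidth_gbs(stacks: int, clock_mhz: float, bits_per_stack: int = 1024) -> float:
    """Peak GB/s: total bus width x 2 transfers per clock x clock / 8 bits."""
    return stacks * bits_per_stack * 2 * clock_mhz * 1e6 / 8 / 1e9

vii = density_mtr_per_mm2(13.2e9, 331)
rtx2080 = density_mtr_per_mm2(13.6e9, 545)
print(f"Radeon VII: {vii:.1f} MTr/mm^2, RTX 2080: {rtx2080:.1f} MTr/mm^2")
print(f"density ratio: {vii / rtx2080:.2f}x")
print(f"Radeon VII HBM2: {hbm2_bandwidth_gbs(4, 1000):.0f} GB/s")
```

That is roughly 1.6x the density on 7nm, and four 1024-bit stacks at 1000 MHz work out to about 1 TB/s of peak bandwidth.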
