Not quite GTX 1070 performance:

http://www.pcgamer.com/heres-how-microsofts-xbox-one-x-compares-to-a-pc/

I was genuinely wondering if it's possible to build a PC to compete with the XB1X. Ignore the fact that PC games are cheaper and PC multiplayer is free (you get free games on Xbox Live, and ease of use makes up for that). It's pretty obvious you can't compete. The PC they are talking about:

- has less RAM
- has less hard drive space
- has less memory bandwidth
- has no operating system
- has no controllers
- misses other features of the XB1X

So if you're looking for mid-range PC action and don't want to play mouse+keyboard games, the XB1X is the way to go.

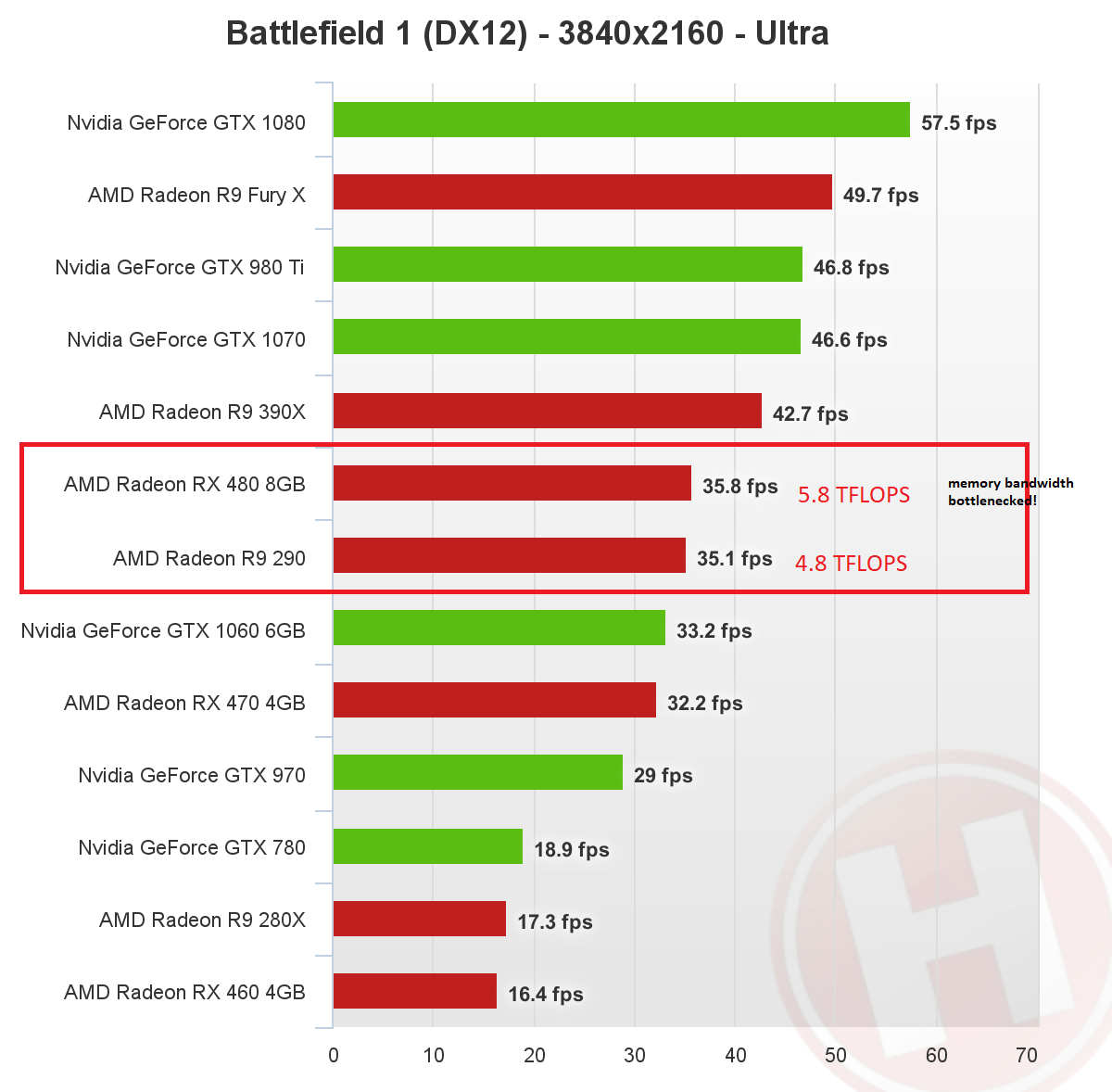

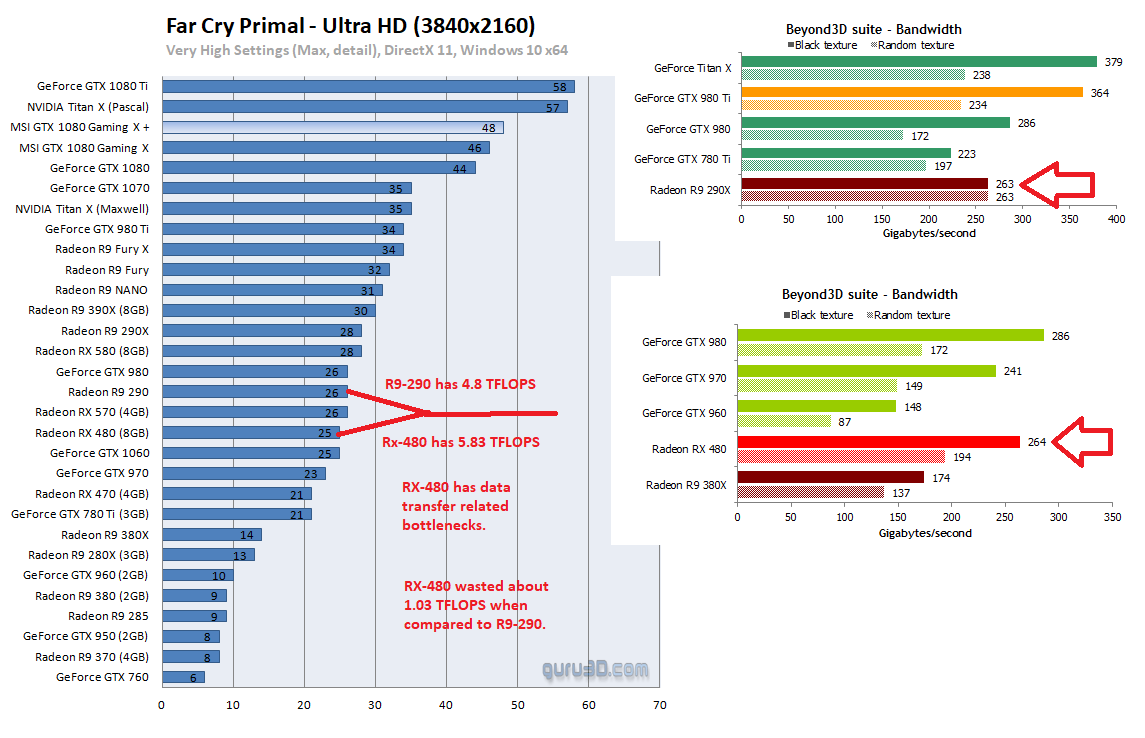

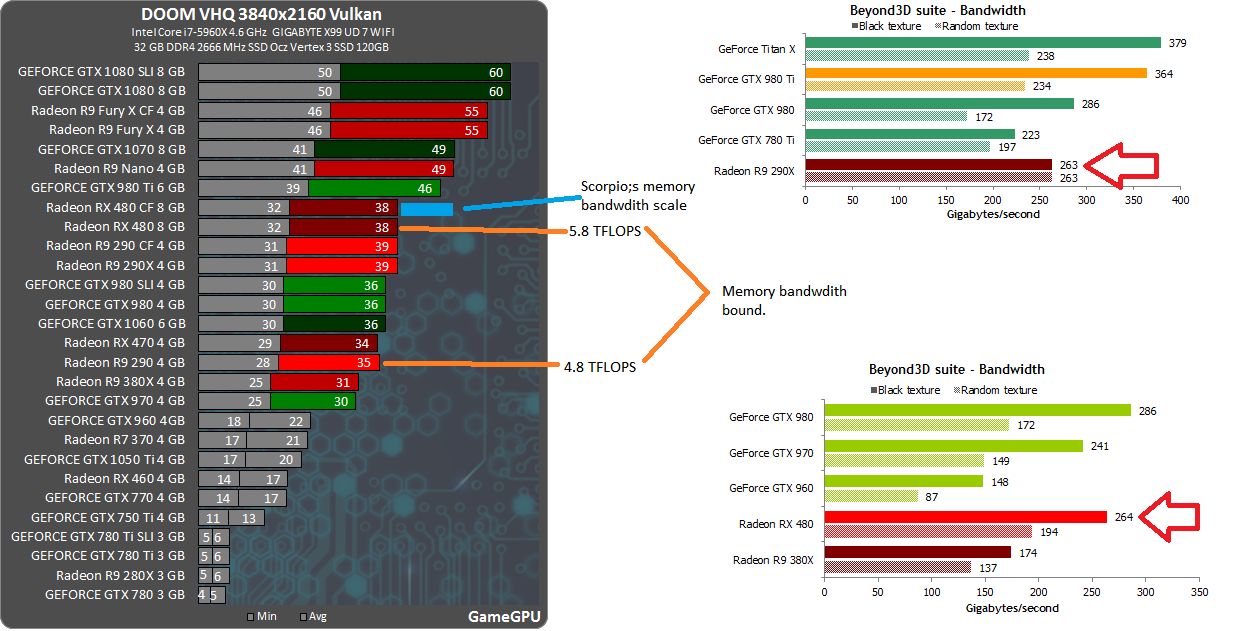

The PC Gamer article didn't factor in the RX-580's memory bottleneck. The RX-580 is not the first Polaris 10 card with 6 TFLOPS, e.g. the MSI RX-480 GX has 6.06 TFLOPS.

Read http://gamingbolt.com/ps4-pro-bandwidth-is-potential-bottleneck-for-4k-but-a-thought-through-tradeoff-little-nightmares-dev

The PS4 Pro has 4.2 TFLOPS with 218 GB/s, hence a ratio of 51.9 GB/s per TFLOPS, and it's already memory bottlenecked.

The RX-580 has 6.17 TFLOPS with 256 GB/s, hence a ratio of 41.49 GB/s per TFLOPS; its memory bottleneck is worse than the PS4 Pro's.

The X1X has 6 TFLOPS with 326 GB/s, hence a ratio of 54.3 GB/s per TFLOPS.
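The three ratios above are just peak memory bandwidth divided by peak FP32 throughput. A minimal Python sketch reproducing them from the figures quoted in this post; it uses peak numbers only and ignores compression, caches and CPU traffic:

```python
# Peak memory bandwidth (GB/s) divided by peak FP32 compute (TFLOPS),
# using the figures quoted in this thread.
SPECS = {
    "PS4 Pro": (4.2, 218.0),
    "RX-580": (6.17, 256.0),
    "X1X": (6.0, 326.0),
}


def gbps_per_tflops(tflops: float, bandwidth_gbps: float) -> float:
    """Return GB/s of peak memory bandwidth per TFLOPS of peak compute."""
    return bandwidth_gbps / tflops


if __name__ == "__main__":
    for name, (tflops, bandwidth) in SPECS.items():
        print(f"{name}: {gbps_per_tflops(tflops, bandwidth):.2f} GB/s per TFLOPS")
```

Running it prints roughly 51.90, 41.49 and 54.33 GB/s per TFLOPS respectively.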

The RX-480 (5.83 TFLOPS) has about 264 GB/s of effective bandwidth, hence its results land very close to the R9-290 (4.8 TFLOPS) and R9-290X (5.6 TFLOPS).

I welcome PC Gamer's Tuan Nguyen to debate me.

PS: The R9-390X's effective memory bandwidth is about 315.6 GB/s, and it has no DCC (Delta Color Compression).

Checklist:

1. I have a game programmer's point of view on the PS4 Pro's memory bottlenecks.

2. I have both AMD and NVIDIA PC benchmarks showing memory bottlenecks for Polaris 10 XT. I have cached additional graphs showing similar patterns, e.g. F1 2016, Forza Horizon 3, etc. I'm ready for General Order 24/Base Delta Zero.

@tdkmillsy: Strictly performance-wise, these budget PCs are nothing but trash; if you actually want performance, you'd better lay out the dough. At least the budget build has an SSD. Otherwise it looks like it might do 60 fps in most (not all) new games at 1080p, because the GPU is trash at anything higher.

And there goes your whole argument... You just owned yourself.

You just owned yourself: the X1X has higher physical memory bandwidth per TFLOPS than the RX-580 and PS4 Pro.

Can you please adjust your numbers to the real bandwidth Scorpio's GPU will actually have once the CPU and other systems have taken their share of it?

Can't even get that right...

Shared-memory PS4 vs. non-shared-memory R7-265:

http://www.eurogamer.net/articles/digitalfoundry-2016-we-built-a-pc-with-playstation-neo-gpu-tech

We have a Sapphire R7 265 in hand, running at 925MHz - down-clock that to 900MHz and we have a lock with PS4's 1.84 teraflops of compute power.

In our Face-Offs, we like to get as close a lock as we can between PC quality presets and their console equivalents in order to find the quality sweet spots chosen by the developers. Initially using Star Wars Battlefront, The Witcher 3 and Street Fighter 5 as comparison points with as close to locked settings as we could muster, we were happy with the performance of our 'target PS4' system. The Witcher 3 sustains 1080p30, Battlefront hits 900p60, SF5 runs at 1080p60 with just a hint of slowdown on the replays - just like PS4. We have a ballpark match

...

at straight 1080p on the R7 265-powered PS4 surrogate. Medium settings is a direct match for the PS4 version here and not surprisingly, our base-level PS4 hardware runs it very closely to the console we're seeking to mimic

...

Take the Witcher 3, for example. Our Novigrad City test run hits a 33.3fps average on the R7 265 PS4 target hardware - pretty much in line with console performance.

For The Witcher 3, Star Wars Battlefront and SF5, there's minimal difference between the shared-memory PS4 and the non-shared-memory R7-265.
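The "lock" Digital Foundry describes comes from the usual peak-FP32 formula: shaders × 2 FLOPs (one fused multiply-add) per clock × clock speed. A sketch, assuming the R7 265 has 1024 stream processors and the PS4 GPU has 18 CUs of 64 shaders at 800 MHz; those counts are my assumptions and are not stated in the quoted article:

```python
def fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS: each shader retires one FMA (2 FLOPs) per clock."""
    return shaders * 2 * clock_mhz * 1e6 / 1e12


# Assumed shader counts (not given in the quoted article).
r7_265_900 = fp32_tflops(1024, 900.0)  # R7 265 down-clocked from 925 MHz to 900 MHz
ps4 = fp32_tflops(18 * 64, 800.0)      # PS4: 18 CUs x 64 shaders at 800 MHz

print(f"R7 265 @ 900 MHz: {r7_265_900:.2f} TFLOPS")  # 1.84
print(f"PS4:              {ps4:.2f} TFLOPS")          # 1.84
```

Under these assumptions both come out at 1.8432 TFLOPS, which matches the 1.84 figure in the quote.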

The PS4 has a direct CPU-to-GPU Fusion link to reduce the CPU's main memory hit rates.

The PS4 also has a "zero copy" feature to reduce memory copy hit rates.

http://www.eurogamer.net/articles/digitalfoundry-vs-the-xbox-one-architects

"On the GPU we added some compressed render target formats like our 6e4 [6 bit mantissa and 4 bits exponent per component] and 7e3 HDR float formats [where the 6e4 formats] that were very, very popular on Xbox 360, which instead of doing a 16-bit float per component 64bpp render target, you can do the equivalent with us using 32 bits - so we did a lot of focus on really maximising efficiency and utilisation of that ESRAM."

XBO's GPU has features beyond other GCN GPUs, e.g. a 64 bpp render target (FP16 per component) can be compressed into 32 bits, a 2:1 compression ratio.
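To put the 2:1 figure in concrete terms, here is a rough sketch of render-target size and per-frame write traffic for a 64 bpp (FP16 per channel) target versus a 32 bpp packed one. It only computes sizes and does not implement the 6e4/7e3 encoding itself; the resolutions, the 60 fps rate and the "one full write per frame" traffic model are illustrative assumptions, not figures from the interview:

```python
RESOLUTIONS = {"1080p": (1920, 1080), "4K": (3840, 2160)}


def target_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size in bytes of one render target at the given bits per pixel."""
    return width * height * bits_per_pixel // 8


def write_gbps(width: int, height: int, bits_per_pixel: int, fps: int = 60) -> float:
    """GB/s needed to write the whole target once per frame (decimal GB)."""
    return target_bytes(width, height, bits_per_pixel) * fps / 1e9


for name, (w, h) in RESOLUTIONS.items():
    fp16 = target_bytes(w, h, 64)    # 4 x FP16 channels
    packed = target_bytes(w, h, 32)  # e.g. a 32-bit packed HDR float format
    print(f"{name}: {fp16 / 2**20:.2f} MiB vs {packed / 2**20:.2f} MiB, "
          f"{write_gbps(w, h, 64):.2f} vs {write_gbps(w, h, 32):.2f} GB/s per full write at 60 fps")
```

Real frames touch each target many times (blending, reads in later passes), so these are per-pass floor figures, not totals.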

If XBO's compressed data format customization is combined with 6 TFLOPS of brute force, it would beat the R9-390X.

I haven't included Polaris DCC, which performs memory block compression.

For example, Polaris DCC has a larger impact on ROP workloads, which can free up memory bandwidth for the CPU.

On XBO, the Kinect DSP has its own small ESRAM memory pool, separate from the GPU's 32 MB ESRAM.

------------

http://www.eurogamer.net/articles/digitalfoundry-2017-project-scorpio-tech-revealed

"We quadrupled the GPU L2 cache size, again for targeting the 4K performance."

The X1X GPU's 2 MB L2 cache is there to help reach 4K; its purpose is to reduce main memory hit rates.

On existing AMD GPUs, the pixel engines (ROPs) are not even connected to the L2 cache.

http://www.eurogamer.net/articles/digitalfoundry-2017-the-scorpio-engine-in-depth

"For 4K assets, textures get larger and render targets get larger as well. This means a couple of things - you need more space, you need more bandwidth," explains Nick Baker. "The question though was how much? We'd hate to build this GPU and then end up having to be memory-starved. All the analysis that Andrew was talking about, we were able to look at the effect of different memory bandwidths, and it quickly led us to needing more than 300GB/s memory bandwidth. In the end we ended up choosing 326GB/s. On Scorpio we are using a 384-bit GDDR5 interface - that is 12 channels. Each channel is 32 bits, and then 6.8GHz on the signalling so you multiply those up and you get the 326GB/s."

Microsoft's own analysis put the X1X GPU's memory bandwidth requirement at "more than 300GB/s memory bandwidth".
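Baker's arithmetic is total bus width × per-pin data rate ÷ 8 bits per byte. A small sketch; the X1X inputs come from the quote, while the PS4 Pro (256-bit, 6.8 Gbps), RX-580 (256-bit, 8 Gbps) and GTX 970 (256-bit, 7 Gbps) configurations are my own assumptions, added only as cross-checks against the bandwidth figures used elsewhere in this thread:

```python
def peak_bandwidth_gbs(channels: int, bits_per_channel: int, gbps_per_pin: float) -> float:
    """Peak GDDR5 bandwidth in GB/s: total bus width in bits x data rate per pin / 8."""
    bus_width_bits = channels * bits_per_channel
    return bus_width_bits * gbps_per_pin / 8


x1x = peak_bandwidth_gbs(12, 32, 6.8)     # 384-bit, 6.8 Gbps (from the quote)
ps4_pro = peak_bandwidth_gbs(8, 32, 6.8)  # assumed 256-bit, 6.8 Gbps
rx_580 = peak_bandwidth_gbs(8, 32, 8.0)   # assumed 256-bit, 8 Gbps
gtx_970 = peak_bandwidth_gbs(8, 32, 7.0)  # assumed 256-bit, 7 Gbps

for name, bw in [("X1X", x1x), ("PS4 Pro", ps4_pro), ("RX-580", rx_580), ("GTX 970", gtx_970)]:
    print(f"{name}: {bw:.1f} GB/s")
```

This gives 326.4, 217.6, 256.0 and 224.0 GB/s, consistent with the rounded 326, 218, 256 and 224 GB/s figures quoted.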

Try again.

Can you please adjust your bandwidth figures to account for the bandwidth the CPU will take away from the GPU, instead of dodging the question because you know you're wrong?

CPUs need bandwidth; they cannot function without it. Simply dividing the raw bandwidth by the GPU's TFLOPS is a stupid and idiotic way to do it, as it does not give a true indication of the amount of bandwidth the GPU would actually have to work with.
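The adjustment being asked for is easy to parameterize, even though nobody in this thread has a real figure for the X1X CPU's memory traffic. A sketch in which `cpu_gbps` is a hypothetical input, not a measured value; it also solves for the CPU share at which the X1X's ratio would drop to the PS4 Pro and RX-580 ratios quoted earlier:

```python
X1X_TFLOPS = 6.0
X1X_BANDWIDTH = 326.0  # GB/s, peak


def gpu_gbps_per_tflops(cpu_gbps: float) -> float:
    """GB/s per TFLOPS left for the GPU after a hypothetical CPU share is removed."""
    return (X1X_BANDWIDTH - cpu_gbps) / X1X_TFLOPS


def break_even_cpu_gbps(target_ratio: float) -> float:
    """CPU traffic (GB/s) at which the X1X GPU ratio drops to target_ratio."""
    return X1X_BANDWIDTH - target_ratio * X1X_TFLOPS


ps4_pro_ratio = 218.0 / 4.2  # about 51.9 GB/s per TFLOPS
rx_580_ratio = 256.0 / 6.17  # about 41.5 GB/s per TFLOPS

for cpu_gbps in (0.0, 10.0, 20.0, 30.0):  # hypothetical CPU shares, not measurements
    print(f"CPU {cpu_gbps:4.0f} GB/s -> GPU {gpu_gbps_per_tflops(cpu_gbps):.2f} GB/s per TFLOPS")
print(f"Matches PS4 Pro ratio at {break_even_cpu_gbps(ps4_pro_ratio):.1f} GB/s of CPU traffic")
print(f"Matches RX-580 ratio at {break_even_cpu_gbps(rx_580_ratio):.1f} GB/s of CPU traffic")
```

This simple subtraction treats CPU traffic as an averaged, constant share; it ignores bus contention and efficiency losses from mixing CPU and GPU accesses, so it is only a first-order model.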

You may now proceed to post your bullshit charts and quotes from various websites to dodge this yet again.

2x Owned now noob.

You are dodging first party source.

http://www.eurogamer.net/articles/digitalfoundry-2017-the-scorpio-engine-in-depth

"For 4K assets, textures get larger and render targets get larger as well. This means a couple of things - you need more space, you need more bandwidth," explains Nick Baker. "The question though was how much? We'd hate to build this GPU and then end up having to be memory-starved. All the analysis that Andrew was talking about, we were able to look at the effect of different memory bandwidths, and it quickly led us to needing more than 300GB/s memory bandwidth. In the end we ended up choosing 326GB/s. On Scorpio we are using a 384-bit GDDR5 interface - that is 12 channels. Each channel is 32 bits, and then 6.8GHz on the signalling so you multiply those up and you get the 326GB/s."

Microsoft's own analysis put the X1X GPU's memory bandwidth requirement at "more than 300GB/s memory bandwidth".

You failed to grasp producer-consumer processing models. The consumer is dependent on the producer.

2x Owned now noob.

And yet again you post shit to avoid it. Can you please subtract the bandwidth the Xbox One X's CPU will consume from the 326 GB/s system bandwidth and then adjust your figures to suit?

3x owned now...

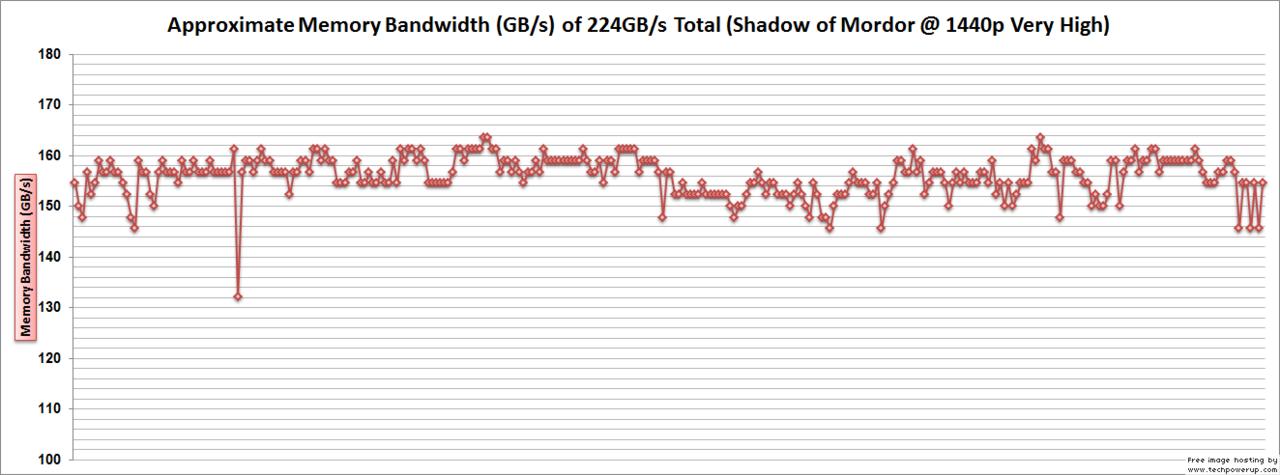

From https://www.techpowerup.com/forums/threads/article-just-how-important-is-gpu-memory-bandwidth.209053/

GTX 970 memory bandwidth usage in Far Cry 4 at 1440p, Very High settings. The GTX 970 has 224 GB/s of physical memory bandwidth.

Note that this is why Microsoft aimed for more than 300 GB/s for 4K.

PS4 Pro's 218 GB/s is fine for 1080p and 1440p.
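One rough way to see why 4K pushes the bandwidth target up is pixel count. A sketch, assuming bandwidth demand scales roughly linearly with the number of pixels shaded; that is a simplification, since texture, geometry and cache behavior do not scale that neatly:

```python
RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}


def pixel_count(name: str) -> int:
    """Number of pixels at a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(target: str, base: str) -> float:
    """How many times more pixels `target` has than `base`."""
    return pixel_count(target) / pixel_count(base)


for base in ("1080p", "1440p"):
    print(f"4K has {pixel_ratio('4K', base):.2f}x the pixels of {base}")
```

4K has 4.00x the pixels of 1080p and 2.25x the pixels of 1440p.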

3x Owned now noob.

Your post is shit. You keep avoiding the memory hit rate reduction and memory compression measures.

And you seem to think CPUs can operate without memory bandwidth.

And now you're getting mad and salty because you've been caught out with your bullshit yet again.

So again, can you please adjust your figures to reflect the actual GB/s per TFLOP that the GPU inside the Xbox One X will have access to?

You failed to grasp producer-consumer processing models. The consumer is dependent on the producer.

If physics and AI calculations are not completed, the GPU stalls/waits. PC benchmarks already have this ping-pong CPU and GPU interaction built in.
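The producer-consumer dependency being described can be sketched with a bounded queue: a "CPU" thread produces one command list per frame and a "GPU" thread blocks on `get()` until one is ready. This is a toy model with hypothetical names, not how any console or driver actually schedules work:

```python
import queue
import threading


def run_frames(num_frames: int) -> list[str]:
    """Toy CPU->GPU pipeline: the consumer cannot start a frame until the producer submits it."""
    submissions: queue.Queue = queue.Queue(maxsize=1)  # at most one frame in flight
    rendered: list[str] = []

    def cpu_producer() -> None:
        for frame in range(num_frames):
            # Stand-in for physics, AI and command-list building.
            command_list = f"frame {frame}"
            submissions.put(command_list)  # blocks while the queue is full
        submissions.put(None)  # sentinel: no more frames

    def gpu_consumer() -> None:
        while True:
            command_list = submissions.get()  # the GPU waits here for CPU work
            if command_list is None:
                break
            rendered.append(f"rendered {command_list}")

    threads = [threading.Thread(target=cpu_producer), threading.Thread(target=gpu_consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return rendered


print(run_frames(3))
```

The `maxsize=1` queue is a deliberate choice: the producer can run at most one frame ahead, so a slow producer directly starves the consumer.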

Can you please adjust your bandwidth-per-TFLOP figures for the Xbox One X so they truly reflect the bandwidth available to the GPU once the CPU's bandwidth allocation has been factored in?

http://gamingbolt.com/project-cars-dev-ps4-single-core-speed-slower-than-high-end-pc-splitting-renderer-across-threads-challenging

On being asked if there was a challenge in development due to different CPU threads and GPU compute units in the PS4, Tudor stated that, “It’s been challenging splitting the renderer further across threads in an even more fine-grained manner – even splitting already-small tasks into 2-3ms chunks. The single-core speed is quite slow compared to a high-end PC though so splitting across cores is essential.

“The bottlenecks are mainly in command list building – we now have this split-up of up to four cores in parallel. There are still some bottlenecks to work out with memory flushing to garlic, even after changing to LCUE, the memory copying is still significant.”

Total frame-time target: 16 ms (60 fps)

Physics and AI: unknown, ms value not given.

CPU-side GPU command-list generation: 2 to 3 ms, four threads running in parallel. <---- the GPU is waiting or stalled during this time.

GPU render: 16 ms - 3 ms = 13 ms. Within a single 16 ms frame, the GPU has about 13 ms.

If the CPU's command-list generation time is extended to 8 ms, the GPU has about 8 ms to do its thing before exceeding the 16 ms frame-time target.

PC benchmarks already have the CPU (producer) and GPU (consumer) frame-time allocation built in.
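The budget arithmetic above, as a sketch. It assumes the fully serialized model described in the post (the GPU idles while the CPU builds command lists, with no overlap between frames), which is a simplification; many engines pipeline CPU and GPU work across frames:

```python
def frame_budget_ms(fps: float) -> float:
    """Frame-time target in milliseconds for a given frame rate."""
    return 1000.0 / fps


def gpu_budget_ms(frame_ms: float, cpu_serial_ms: float) -> float:
    """GPU time left in a frame if it must wait for the CPU's serial work first."""
    if cpu_serial_ms > frame_ms:
        raise ValueError("CPU work alone exceeds the frame-time target")
    return frame_ms - cpu_serial_ms


print(f"60 fps target: {frame_budget_ms(60):.2f} ms")
for cpu_ms in (3.0, 8.0):
    print(f"CPU {cpu_ms:.0f} ms -> GPU {gpu_budget_ms(16.0, cpu_ms):.0f} ms of a 16 ms frame")
```

Note that the exact 60 fps target is 16.67 ms; the post rounds it to 16 ms.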

Try again noob.

For the price, you can't beat what the XB1X has to offer, tech- and quality-wise.

Are you taking royalties into consideration? The price upfront is not all you pay for to use the console.

Can you please adjust your bandwidth-per-TFLOP figures for the Xbox One X so they truly reflect the bandwidth available to the GPU once the CPU's bandwidth allocation has been factored in?

Your argument doesn't reflect real-world typical sync rendering workloads. The GPU can't do anything without the CPU completing its task.

If the CPU takes 3 ms of the frame, the GPU has about 81 percent of the frame time (13 ms of 16 ms; about 82 percent of a 16.6 ms frame). Scale that across 60 frames per second.

For my bandwidth per TFLOPS numbers, remove the CPU's 2-3 ms share of the 16.6 ms frame, i.e. the time the GPU spends waiting for the CPU event.

My bandwidth per TFLOPS numbers already have the CPU's command list generation time within the 16.6 ms frame built in.
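The percentage follows from the same serial model: the GPU's share of the frame is (frame time - CPU time) / frame time. A minimal sketch, again assuming the GPU does nothing while it waits; the 2 ms and 3 ms values are the range quoted above.

```python
def gpu_share(frame_ms, cpu_ms):
    """Fraction of the frame the GPU can spend rendering in a serial CPU->GPU model."""
    return (frame_ms - cpu_ms) / frame_ms


for frame_ms in (16.0, 16.6):
    for cpu_ms in (2.0, 3.0):
        print(f"{frame_ms} ms frame, {cpu_ms} ms CPU: {gpu_share(frame_ms, cpu_ms):.1%}")
```

With 3 ms of CPU time this gives about 81 percent for a 16 ms frame and about 82 percent for a 16.6 ms frame.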

Your argument doesn't reflect real-world typical sync rendering workloads. The GPU can't do anything without the CPU completing its task. When the GPU is idle, it's not using memory bandwidth.

And if you're not able to work that out, can you acknowledge that the GPU in Xbox-X does not have exclusive 100% use of the 326 GB/s system bandwidth, and that the CPU uses some of it, which means your bandwidth per TFLOP figure for Xbox-X is incorrect?
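What's being asked for is roughly (total bandwidth - CPU bandwidth) / GPU TFLOPS. The 326 GB/s and 6 TFLOPS values are the Xbox One X figures quoted in the thread; the CPU draws below are hypothetical placeholders, since no Xbox One X CPU bandwidth figure is given here.

```python
TOTAL_BW_GBPS = 326.0  # Xbox One X system bandwidth quoted in the thread
GPU_TFLOPS = 6.0       # Xbox One X GPU compute quoted in the thread


def gpu_bw_per_tflop(total_bw, cpu_bw, tflops):
    """GB/s per TFLOP left for the GPU after reserving cpu_bw GB/s for the CPU."""
    return (total_bw - cpu_bw) / tflops


# Hypothetical CPU bandwidth draws in GB/s (placeholders, not measured values)
for cpu_bw in (0, 10, 20, 30):
    ratio = gpu_bw_per_tflop(TOTAL_BW_GBPS, cpu_bw, GPU_TFLOPS)
    print(f"CPU using {cpu_bw} GB/s -> {ratio:.1f} GB/s per TFLOP")
```

Straight subtraction is the simplest model. Contention between CPU and GPU traffic may cost the GPU more than the CPU's own GB/s, so these values are best read as upper bounds.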

The very nature of the producer (CPU) / consumer (GPU) process model precludes 100 percent GPU usage.

My memory scaling already has GPU wait states (wasted clock cycles) built in. For every ms the CPU consumes, the GPU gets less of its TFLOPS for a given frame time target, i.e. the GPU is waiting on the CPU event. This is the effective TFLOPS vs theoretical TFLOPS distinction: the CPU's ms consumption reduces the GPU's effective TFLOPS for completing its work within a 16.6 ms or 33.3 ms frame time target. The higher the GPU's compute power, the less time it needs to complete its work.
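The effective vs theoretical TFLOPS idea can be written as arithmetic: if the GPU idles for `cpu_wait_ms` of each frame, its usable compute scales by the same share of the frame. A sketch under that serial assumption, using the thread's 6 TFLOPS figure; the 3 ms wait is a hypothetical value from the 2-3 ms range quoted above.

```python
def effective_tflops(peak_tflops, frame_ms, cpu_wait_ms):
    """Peak TFLOPS scaled by the share of the frame the GPU is not waiting on the CPU."""
    if not 0 <= cpu_wait_ms <= frame_ms:
        raise ValueError("cpu_wait_ms must lie within the frame")
    return peak_tflops * (frame_ms - cpu_wait_ms) / frame_ms


def gflop_per_frame(peak_tflops, frame_ms, cpu_wait_ms):
    """GPU work available in one frame, in GFLOP (1 TFLOPS = 1 GFLOP per ms)."""
    return peak_tflops * (frame_ms - cpu_wait_ms)


# 6 TFLOPS peak with a hypothetical 3 ms CPU wait, at 60 fps and 30 fps targets
for frame_ms in (16.6, 33.3):
    print(frame_ms, round(effective_tflops(6.0, frame_ms, 3.0), 2),
          round(gflop_per_frame(6.0, frame_ms, 3.0), 1))
```

The same 3 ms wait costs proportionally less at a 33.3 ms target than at 16.6 ms.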

You asked for charts? Well they are about to arrive.

@howmakewood: incoming in 3... 2... 1...

WTF? You two can see the future? /s

Gosh, this ronvalencia dude and his chart spamming is annoying. I've noticed that people who overcomplicate stuff when trying to explain something don't really know what they're talking about. I suspect this dude is one of those.

@pinkanimal: Oh, he does know what he's talking about, but for some reason he just doesn't bother explaining it on a more down-to-earth level, so we get this instead. If someone wants to catch up to Ron on this CPU draw call / GPU / memory interaction, there are pretty good write-ups around the web.

He really doesn't... he doesn't even understand how bandwidth allocation works in a modern console, hence me calling him out on it, and thus far he's done everything he can to dodge it, as he knows I have him by the balls.

I can and have shown him up in a few threads now.

He does, but he's a fanboy for Microsoft and AMD and is completely biased when it comes to them. He also doesn't seem to grasp English 100%, so I suspect he doesn't quite understand what you're asking.

I don't think I've ever seen one of his posts that isn't wrong on multiple levels.

@scatteh316: the allocation isn't static

@lglz1337: do share the fun

No, it's not, but it has a maximum value which can be calculated. IIRC there was a figure of 25 GB/s floating around for the OG Xbone's maximum CPU usage when the processor is at maximum capacity.

Xbox-X's CPU is faster still, and even though processor load is dynamic, it still uses bandwidth, which is why Ron's figures are a complete joke and just flat out wrong, but he won't admit this.

@scatteh316: you keep saying owned to Ron, but his statement still stands true. Even if you took out the CPU bandwidth for xb1x, you'd still have to do the same for PS4, and there's no way the X's CPU is going to make bandwidth drop down to the 256 GB/s found on the RX 480. You did a poor job of trying to prove him wrong, because even if he did provide the requested information, it would show that the xb1x's bandwidth is still more than the PS4's and the RX GPUs'.
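The RX 480 point can be checked directly: how much bandwidth would the CPU have to consume before the Xbox One X GPU is left with less than the RX 480's 256 GB/s, or with a lower GB/s per TFLOP than the RX 480? The figures are the ones quoted in the thread (326 GB/s and 6 TFLOPS for Xbox One X; 256 GB/s and 5.83 TFLOPS for RX 480). The 25 GB/s CPU draw is the OG Xbox One figure recalled above, used here only as a stand-in, not an Xbox One X measurement. The RX 480 has its own VRAM, so no CPU subtraction applies to it.

```python
X1X_BW, X1X_TFLOPS = 326.0, 6.0
RX480_BW, RX480_TFLOPS = 256.0, 5.83

# CPU draw at which the X1X GPU's remaining raw bandwidth equals the RX 480's
breakeven_raw = X1X_BW - RX480_BW

# CPU draw at which X1X GB/s per TFLOP falls to the RX 480's ratio
rx480_ratio = RX480_BW / RX480_TFLOPS
breakeven_ratio = X1X_BW - rx480_ratio * X1X_TFLOPS

cpu_stand_in = 25.0  # recalled OG Xbox One figure, used only as a placeholder
adjusted_ratio = (X1X_BW - cpu_stand_in) / X1X_TFLOPS

print(f"break-even (raw GB/s): {breakeven_raw:.1f} GB/s of CPU traffic")
print(f"break-even (GB/s per TFLOP): {breakeven_ratio:.1f} GB/s of CPU traffic")
print(f"X1X with {cpu_stand_in:.0f} GB/s CPU: {adjusted_ratio:.1f} "
      f"vs RX 480: {rx480_ratio:.1f} GB/s per TFLOP")
```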

I have in no way bashed Ron's statement that Xbox-X has more than Pro, nor have I tried to argue that fact; I've bashed his numbers and calculations, which are wrong.

The actual gap between Xbox-X and the PC GPUs is not what he claims it to be, making his whole comparison irrelevant until he provides the actual real-world figures.

I have mentioned this error to him in other threads and he still hasn't listened.

@Orchid87: Nowhere in the article does it compare the Xbox to the 1070. It compared it to the RX 480, which is a 6 TFLOP card that can't reach 4K due to its low memory bandwidth. I know because I have a 480: 4K is off limits for me, and even at 1440p I'd have to turn things down. On paper those cards are the closest to the GPU found in the 1X, but it punches well above them. Both of those cards are 1080p/1440p cards, so if the X is more powerful than both of them, and the next GPU above the 480 and 1060 is the 1070, which is a 1440p card that can handle light 4K with settings turned down, where does that put the X? A console that CAN handle 4K at custom settings.

@scatteh316: I think the best metric would be to get the average bandwidth during one frame for both 33 ms and 16 ms, but I do back up your point that the GPU will most likely never have access to the full bandwidth.
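A sketch of that metric: split a frame into phases, each with an assumed bandwidth draw, and take the time-weighted average. The phase durations and GB/s values are hypothetical placeholders; real numbers would have to come from a profiler.

```python
def avg_bandwidth(phases):
    """Time-weighted average bandwidth (GB/s) over one frame.

    phases: list of (duration_ms, bandwidth_gbps) tuples covering the frame.
    """
    total_ms = sum(d for d, _ in phases)
    return sum(d * bw for d, bw in phases) / total_ms


# Hypothetical frames: a 3 ms CPU phase with light traffic while the GPU waits,
# then a GPU phase with heavy traffic for the rest of the frame
frame_60 = [(3.0, 20.0), (13.6, 280.0)]  # 16.6 ms frame
frame_30 = [(3.0, 20.0), (30.3, 280.0)]  # 33.3 ms frame
print(round(avg_bandwidth(frame_60), 1), round(avg_bandwidth(frame_30), 1))
```

Under these assumptions the fixed CPU phase drags the 16 ms average down more than the 33 ms one, which is why comparing both targets is informative.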

@slimdogmilionar: The 1070 is a step above the 1060, but that step is a big one indeed. XBX falls somewhere in between.

@scatteh316: Compared to the RX 480 it is. I have a 480 and you can't even hit 1440p without turning down settings, and mind you that's a 6 TFLOP card paired with an i5. But you don't understand what he's saying about memory bandwidth; this is why he keeps referencing Nvidia, because the 1070 performs better than the 480 and 580 with the same bandwidth. Same with the 1080: it only has a 256-bit bus, but can do 4K. Nvidia's memory compression is insane, and for months he's been telling you guys that MS has stolen pages from Nvidia and AMD in order to fix the bandwidth problems AMD GPUs have. Not to mention that the higher the resolution goes, the less the CPU matters for performance. Look at the 1060 vs the 480 for reference: the 1060 is able to match and sometimes beat the 480 even though it only has a 192-bit bus vs the 480's 256-bit.
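The bus-width comparison comes down to peak bandwidth = (bus width in bits / 8) x per-pin data rate. The specs below are reference-design figures as I understand them (board partner cards can differ), and the result is raw peak bandwidth only; it says nothing about the compression effects discussed above.

```python
def peak_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s: bytes per transfer across the bus times per-pin rate."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, effective per-pin data rate in Gbps), reference specs
cards = {
    "GTX 1060 6GB": (192, 8.0),
    "RX 480 8GB": (256, 8.0),
    "GTX 1070": (256, 8.0),
    "GTX 1080": (256, 10.0),
    "Xbox One X": (384, 6.8),
}

for name, (bits, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(bits, rate):.1f} GB/s")
```

This is why the 1060 manages with 192 GB/s against the 480's 256 GB/s only if it needs fewer bytes per frame, which is where compression comes in.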
