@rdnav2: It is undervolting. Post a link to your source as well.

Has Navi and Ryzen 3000 got your hopes up for next gen consoles?

It’s the stock voltage curve. If you stick to the voltage curve, you’re not really undervolting.

My mistake, this one is undervolted. I thought you were quoting the other curve I just posted.

But this one is drawing 132 watts, so there’s plenty of headroom to add more voltage and get it back to the stock voltage curve.
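The "plenty of headroom" point can be roughed out with the usual first-order rule that dynamic power scales with voltage squared times clock (P ~ C·V²·f). Below is a minimal sketch. The 1.00 V and 1.10 V values are hypothetical placeholders, since nobody in the thread posted the actual voltages. Leakage is ignored, so treat the result as a ballpark only.

```python
def scale_dynamic_power(power_w, v_from, v_to, f_from=1.0, f_to=1.0):
    """Rescale dynamic power using P ~ C * V^2 * f, with capacitance C held constant.

    Static/leakage power is ignored, so this is only a first-order estimate."""
    return power_w * (v_to / v_from) ** 2 * (f_to / f_from)


# Hypothetical voltages: a card undervolted to 1.00 V drawing 132 W,
# pushed back up to 1.10 V at the same clock.
print(f"{scale_dynamic_power(132, 1.00, 1.10):.1f} W")
```

Under those assumed voltages, a 10% voltage bump costs about 21% more dynamic power. This is why undervolting on its own can move a card's power draw so much.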

Who's $400 GPU?

- I argued that game console hardware has tighter profit margins and fewer margin overheads than NVIDIA's PC GPU SKUs.

- I argued that the PC RX-5700 XT carries both AMD's silicon profit margin AND the add-in-board vendor's PCB profit margin, while Microsoft assumes both the silicon and PCB production risk itself, hence MS's vertical integration yields cost savings.

- Microsoft's reveal shows Scarlet's PCB having a Scorpio-level memory bus width.

- Microsoft's reveal shows Scarlet's APU die area rivaling or exceeding Scorpio's APU die area.

If next-gen consoles recycle the RX-5700 XT's 4 prim units**, the overall geometry-rasterization framework wouldn't reach the level of Turing with six GPC units.

**NAVI 10 has 8 triangle inputs with 4 triangle outputs, as per its working back-face culling hardware. Polaris/Vega 64/Vega II back-face culling hardware is broken: 4 triangle inputs with 4 triangle outputs yields only 2 triangles of output, which needs a compute shader workaround.
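For readers unfamiliar with what the culling hardware decides per triangle, here is a minimal sketch of the standard textbook back-face test. It computes the signed area of the projected triangle and culls anything wound the "wrong" way. This is the generic technique and not a model of AMD's primitive units. The y-up, counter-clockwise-is-front convention is an assumption, because APIs let you choose either winding.

```python
def signed_area_x2(a, b, c):
    """Twice the signed area of triangle (a, b, c); positive when counter-clockwise (y-up)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def is_back_facing(tri, ccw_is_front=True):
    """True if the triangle's winding opposes the front-face convention.

    Degenerate (zero-area) triangles are treated as culled too."""
    area = signed_area_x2(*tri)
    return area <= 0 if ccw_is_front else area >= 0


def cull_back_faces(triangles, ccw_is_front=True):
    """Keep only the triangles that survive the back-face test."""
    return [t for t in triangles if not is_back_facing(t, ccw_is_front)]


front = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))  # counter-clockwise
back = ((0.0, 0.0), (0.0, 1.0), (1.0, 0.0))   # clockwise
print(cull_back_faces([front, back]))  # only `front` survives
```

Hardware that can run this test on more triangles per clock than it can pass on to rasterization, such as the 8-in/4-out arrangement described above, discards back faces without stalling the output side.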

GCN's IPC drops after the R9-390X because the following Fury Pro/X/Vega 56/64/II GPUs didn't scale Hawaii XT's geometry-rasterization framework along with their compute power increase, i.e. AMD can't just keep adding CUs.

RX-5700 XT has Vega II's rasterization power with improved back-face culling and double-width texture filtering hardware. The relationship between RX-5700 XT and Vega II reminds me of R9-390X OC vs. Fury X.

This is GPU hardware basics 101. GPUs should NOT be DSPs.

Don't worry about next-gen consoles while fundamental GPU hardware design issues are not addressed.

NAVI 10 has four prim units while TU106 has four GPC units.

NAVI 10 has 64 ROPs while TU106 has 64 ROPs.

NAVI 10 has 4MB L2 cache while TU106 has 4MB L2 cache.

NAVI 10 has a 256-bit GDDR6-14000 bus while TU106 has a 256-bit GDDR6-14000 bus.

NAVI 10's fundamental GPU hardware design is TU106-class.

TU104 has six GPC units that scale with its compute TFLOPS increase, hence NVIDIA knows GPU hardware design basics 101.

RX 5700 XT seems to be a ~1.8 GHz overclocked R9-390X with Polaris/Vega/NAVI improvements and design corrections.

I said $400 GPU right in the very beginning: "These graphics cards on their own cost as much as the consoles as a whole will cost." and "So you are telling me, Sony/MS are going to take an otherwise $400 graphics card...". And you went on to argue about how it can be done.

When I said a $400 GPU, that's how much the 5700 XT costs. And even with reduced profit margins, it'll be a stretch to fit that in there.

Don't end a post with "Try again" and then tell me you're not arguing my point. My point has always been clear.

Just step up and say you're challenging my point, because whether you like it or not, this is the position you have essentially taken. You think the GPU in the next consoles will be a 5700 XT.

Otherwise you have to admit that, as usual, you didn't read what you replied to properly, and you've ended up chasing your own tail and arguing with yourself.

I don't base my arguments on PC retail prices and PC add-in-board channels, since MS funds both the silicon and PCB production runs.

The facts, based on MS's E3 2019 reveal:

- Scarlet's wider-GDDRx-bus PCB is not a PS4/PS4 Pro-class motherboard with 256-bit traces.

- Like Scarlet, Scorpio's 384-bit-wide GDDRx bus is based on the Radeon HD 7950/7970 PCB design.

- PS4/PS4 Pro motherboards are based on the mainstream Radeon HD 7870 and RX-470 GDDRx bus PCBs.

Your argument is already flawed by using the RX 5700's 256-bit PCB and die-area cost structures.

- Scorpio's wider 384-bit GDDR5-6800 bus enabled better 4K results compared to the RX-580's 256-bit GDDR5-8000.

- Optimized console CPU workloads are programmed to stay within the L2 cache boundary to minimize trips to external memory. Programming within the L2 cache boundary has existed since x86 CPUs gained L2 cache, i.e. CELL SPU-style programming boundaries are NOT new. 8-core Zen v2 has 36 MB of cache (L2 + L3 combined).

- Scorpio's DirectX 12 API call to GCN ISA conversion is done by a micro-coded hardware engine, NOT by a PC driver running on the CPU. That means less CPU usage on plumbing workloads.

- Scorpio has a Variable Rate Shading feature which doesn't exist on RX-5700/5700 XT, since NAVI v1 still has the Vega ROPs design, which is not aware of multi-resolution shading like Scorpio's ROPs design. Scarlet has shading conservation features which don't exist on RX-5700/5700 XT. Variable Rate Shading usage in games will improve as more hardware ships with the feature, and Turing GPUs are already ready for it.

- "RDNA v2" is said to have Variable Rate Shading and hardware-accelerated RT features.
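The L2-cache point in the list above can be made concrete with a small sketch. It does two things: it checks the 36 MB figure, and it shows the "stay within L2" loop structure of processing data in chunks sized to one core's L2 and running every pass over a chunk before moving on. The cache layout is an assumption based on an 8-core Zen 2 desktop part such as the Ryzen 7 3700X (512 KiB L2 per core, 32 MiB L3). The 50% budget is an arbitrary illustrative choice. Python itself gives no control over cache residency, so this only shows the loop shape a C/C++ engine would use.

```python
KIB = 1024
MIB = 1024 * KIB

# Assumed 8-core Zen 2 desktop layout (e.g. Ryzen 7 3700X):
# 512 KiB of private L2 per core plus 32 MiB of L3.
CORES = 8
L2_PER_CORE = 512 * KIB
L3_TOTAL = 32 * MIB


def total_l2_l3_bytes():
    """Combined L2 + L3 capacity, the figure marketed as '36 MB' for these parts."""
    return CORES * L2_PER_CORE + L3_TOTAL


def l2_sized_chunks(items, item_size_bytes, l2_bytes=L2_PER_CORE, budget_fraction=0.5):
    """Split work into chunks whose working set should fit in part of one core's L2.

    budget_fraction leaves room for code, stack and other data (illustrative, not tuned)."""
    per_chunk = max(1, int(l2_bytes * budget_fraction) // item_size_bytes)
    for start in range(0, len(items), per_chunk):
        yield items[start:start + per_chunk]


def process(items, item_size_bytes):
    """Run every pass over one chunk before moving on, so the chunk can stay cache-resident."""
    total = 0
    for chunk in l2_sized_chunks(items, item_size_bytes):
        scaled = [x * 2 for x in chunk]  # pass 1 over the chunk
        total += sum(scaled)             # pass 2 reuses the same chunk
    return total
```

With 16-byte items, each chunk holds 16,384 items (256 KiB), so 100,000 items are processed in 7 chunks. The alternative is streaming the whole array through memory once per pass.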

RX-5700 XT is NOT ready for Scarlet's GPU feature set.
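To put a rough number on what Variable Rate Shading buys, here is a minimal sketch that counts pixel-shader invocations when parts of the screen are shaded at a coarser rate. A rate of (2, 2) means one invocation is shared by a 2x2 pixel block. The screen split and rates are invented for illustration. Real VRS hardware (e.g. D3D12 VRS Tier 2) picks rates per draw, per primitive or per screen-space tile, and this counting ignores those mechanics.

```python
from fractions import Fraction


def shading_invocations(width, height, regions):
    """Count pixel-shader invocations for a screen split into regions.

    regions: list of (fraction_of_screen, (rate_x, rate_y)); an (x, y) rate means one
    invocation covers an x-by-y pixel block. Fractions must sum to 1."""
    if sum(Fraction(f) for f, _ in regions) != 1:
        raise ValueError("screen fractions must sum to 1")
    pixels = width * height
    return sum(Fraction(f) * pixels / (rx * ry) for f, (rx, ry) in regions)


# Hypothetical split: half of a 4K frame at full rate, half at 2x2 coarse shading.
half_coarse = [(Fraction(1, 2), (1, 1)), (Fraction(1, 2), (2, 2))]
n = shading_invocations(3840, 2160, half_coarse)
print(n, n / (3840 * 2160))  # invocations, and the fraction of full-rate work
```

In that made-up split, shading half the frame at 2x2 cuts pixel-shader work to 5/8 of full rate. This is the kind of saving a GPU without VRS hardware cannot get.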

My minimum PC spec for the year 2020:

CPU: 8-core Zen v2

GPU: RTX 2060 Super/2070. I don't recommend the RX-5700 XT as a Scarlet-equivalent GPU due to missing hardware features.

RX-5700 XT feels like the Radeon X1800 XT: it will be hardware-feature obsolete by next year's "RDNA 2".

Try again.

I'm not using the 5700XT; I'm responding to those who are making those claims. Was I the first one in this thread to start talking about this GPU? No. I said, for the final time: 'I'LL BELIEVE IT WHEN I SEE IT'. How can I make this any clearer? I don't know, you tell me. Do you need diagrams or something?

What is the matter with you? It is not that hard. So no, my argument is not flawed.

So if you don't think it's the 5700XT, and I don't think it's the 5700XT, what are you arguing with me about? Something you made up in your head? If you don't think it will be this GPU, why are you telling me? Why don't you tell that to TC, dumbass? Why are we going round in circles, both denouncing the same effing GPU for different reasons? I'm referring to its cost, and you're referring to its specs.

Therefore, your argument, ya know, the one that you've apparently been having with yourself, is flawed, since it has less relevance to me and more to those who are arguing for that GPU.

So you "Try again".

You argued

I said $400 GPU right in the very beginning: "These graphics cards on their own cost as much as the consoles as a whole will cost." and "So you are telling me, Sony/MS are going to take an otherwise $400 graphics card...". And you went on to argue about how it can be done.

FACTS based on MS's E3 2019 reveal:

1. Scarlet's PCB bus width already follows Scorpio's, instead of PS4/PS4 Pro's 7870/RX-470-based bus-width PCB.

Scorpio's 384-bit-wide bus PCB costs more to make than the 256-bit GDDR5 bus PCBs of the RX-470 8GB/480 8GB/570 8GB/580 8GB or GTX 1070 8GB (BOM cost similar to RX-580, with large profit margins for NVIDIA)!

This is why your PC retail AIB price argument is flawed!

A 320-bit-wide bus with GDDR6-14000 chips was indicated in the E3 2019 reveal, hence memory bandwidth is ~560 GB/s.

Bus width may increase when the entire PCB is shown.

The PC already has 256-bit GDDR6-15500 with the RTX 2080 Super, hence 496 GB/s. Next year's PC GPUs should have faster GDDR6, like 16000 to 18000 rated modules.

2. Scarlet's APU already rivals or exceeds Scorpio's APU die area.

3. The Variable Rate Shading feature must be included in Scarlet for backward compatibility with Scorpio.

4. Hardware-accelerated ray tracing has been declared.

Scarlet follows Scorpio's approach, NOT the PS4/PS4 Pro approach.
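The bandwidth figures in point 1 above follow from peak bandwidth = (bus width / 8 bytes) × effective data rate. Here is a minimal sketch that reproduces them and also inverts the formula to ask what data rate a 256-bit PC card would need to match Scarlet. The 320-bit GDDR6-14000 configuration is taken from the post as "indicated", not confirmed, and these are theoretical peaks rather than measured throughput.

```python
def peak_bandwidth_gbs(bus_width_bits, data_rate_mtps):
    """Peak bandwidth in GB/s: bytes per transfer (bus width / 8) x MT/s, converted MB/s -> GB/s."""
    return bus_width_bits / 8 * data_rate_mtps / 1000


def required_rate_mtps(target_gbs, bus_width_bits):
    """Per-pin data rate (MT/s) a bus of the given width needs to reach target_gbs."""
    return target_gbs * 1000 * 8 / bus_width_bits


scarlet = peak_bandwidth_gbs(320, 14000)         # 320-bit GDDR6-14000, as indicated at E3 2019
rtx_2080_super = peak_bandwidth_gbs(256, 15500)  # 256-bit GDDR6-15500
print(f"Scarlet: {scarlet:.0f} GB/s, RTX 2080 Super: {rtx_2080_super:.0f} GB/s")

# Data rate a 256-bit PC card would need to match the indicated Scarlet bandwidth.
print(f"256-bit needs GDDR6-{required_rate_mtps(scarlet, 256):.0f}")
```

Under those assumptions, a 256-bit card needs about GDDR6-17500 to match 560 GB/s. That sits inside the 16000 to 18000 range expected for next year's PC GPUs.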

Can one of you console hype machines please explain why the leaks say Navi 10 "Lite"?

5700 = Navi 10 XL

5700 XT = Navi 10 XT

Just curious, seeing as you guys seem so caught up on CU counts and TFLOPS, but we have no idea what effect ray tracing cores will have on TDP, let alone on manufacturing cost.

Lite... Hmmm.

That's because those were the cards TC and others were arguing for.

So tell them, not me. I don't give a shit; I didn't start arguing for these cards.

How can I spell this out for you? You tell me.

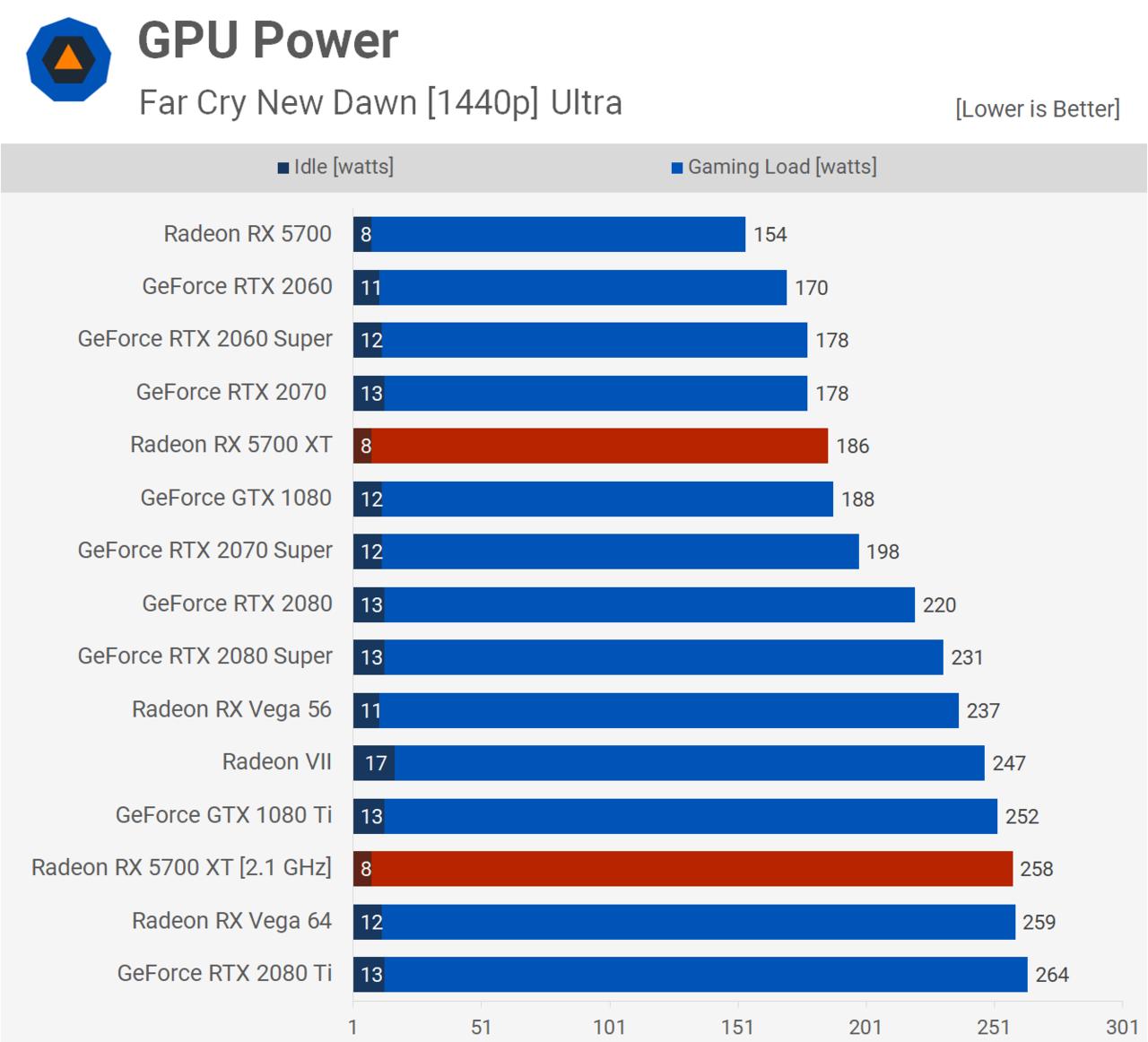

Ryzen 3000 is definitely a major improvement over the 2000 series. Zen 2, if used in the next-gen consoles, will be a huge performance increase over the slow Jaguar CPUs we have in the current Xbox One and PS4 families of consoles. As far as Navi goes, it’s competitive against Turing. It’s still a little power hungry, which is disappointing, but it is an improvement over Vega. The combo of Navi and Zen 2 will be a nice boost for the next consoles, but I feel they will be expensive at launch.

I’m not arguing for these cards.

I'm arguing for these cards as the minimum.

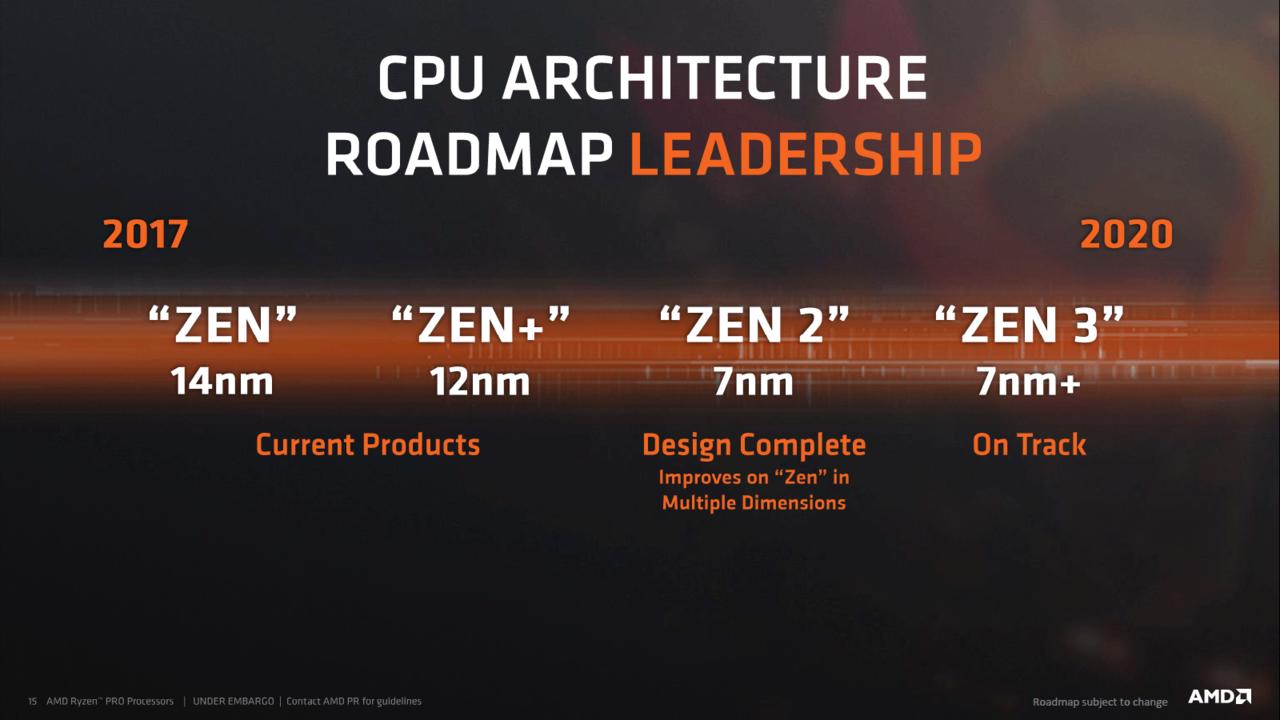

Note that "Zen 3" on 7nm+ EUV is planned for sometime in 2020.

Intel has to keep up with AMD's GPU style release pace for CPUs.

IF NAVI 10 Lite has 36 CUs at an 1800 MHz clock speed:

(36 CU / 40 CU) x 186 watts = 167.4 watts

Applying the 15 percent power reduction expected from next year's 7nm+ may yield ~142 watts.

Undervolting tricks not yet applied.
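The estimate above is two multiplications: scale the 186 W figure used for the 40-CU part linearly by CU count, then take off the 15% node saving. Here is a minimal sketch of that arithmetic. Linear scaling with CU count at a fixed clock is a simplifying assumption, since memory, uncore power and the voltage/frequency curve do not scale that way. The 15% saving is the post's expectation for 7nm+, not a measured figure.

```python
def estimate_power_w(ref_power_w, ref_cus, target_cus, node_reduction=0.0):
    """Return (CU-scaled power, power after a fractional process-node saving).

    Assumes power scales linearly with CU count at the same clock (a simplification)."""
    cu_scaled = ref_power_w * target_cus / ref_cus
    return cu_scaled, cu_scaled * (1.0 - node_reduction)


scaled, with_node = estimate_power_w(186, 40, 36, node_reduction=0.15)
print(f"36 CU: {scaled:.1f} W; with a 15% 7nm+ saving: {with_node:.1f} W")
```

This reproduces 167.4 W and about 142.3 W. Any undervolting gains would come on top of that, using the V-squared relationship rather than this linear CU scaling.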

Note that AMD is positioned to scale the RX 5700's dual shader engines by 2X into a quad shader engine GPU (8 prim triangle units, 72 CUs, 128 ROPs), à la RX-5900.

RX-5700's situation is similar to the Radeon HD 7870's dual shader engine position before the R9-290X's quad shader engine release. Such a quad shader engine Navi would be more than enough to rival or beat the RTX 2080 Ti/Titan RTX. NVIDIA must release an Ampere RTX 3080 Ti in 2020!

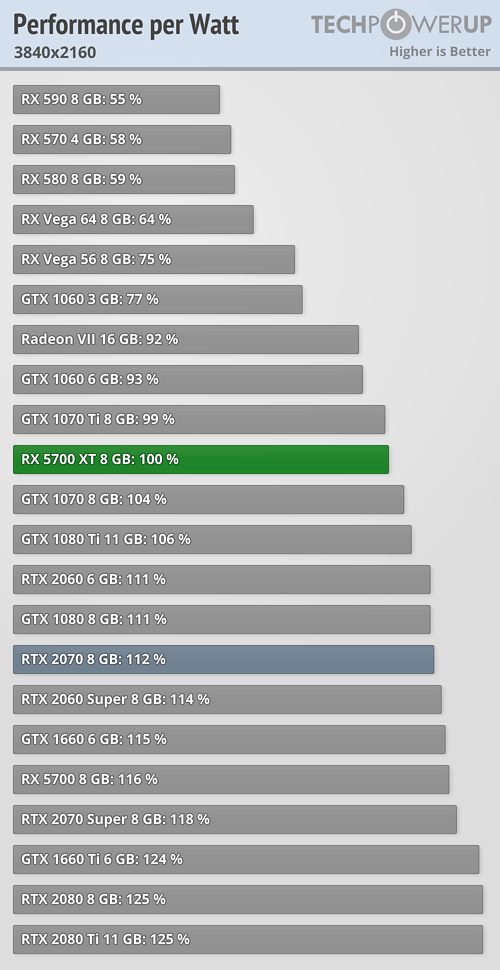

https://www.techpowerup.com/review/amd-radeon-rx-5700-xt/29.html

RX-5700's fps per watt is similar to Turing's. AMD is slightly overclocking the RX-5700 XT.
