@ronvalencia: Time to re-boot yourself, Ron.

Digital Foundry: Teraflop computation is no longer a relevant measurement for next-gen consoles.

I'd just like to point out that dev kits come with a target performance point. People are making wild assumptions about whether or not the alpha/beta dev kits are at all reflective of this from a hardware standpoint.

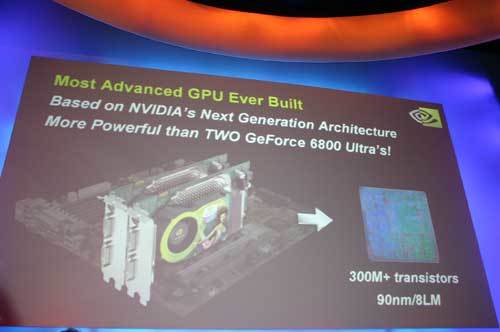

FALSE. The E3 2005 Gears of War 1 demo, run on PowerMacs with GeForce 6800 Ultra SLI or Radeon X800 CrossFire, didn't reflect the final Xbox 360's GPU, which is more capable than the E3 2005 PowerMac dev kits.

It didn't reflect the Xbox 360, but it used more powerful hardware than the Macs Penello claimed MS had sent. Not only that, it is also proof that Epic never used those Macs in the development of Gears, or at least not until the final hardware arrived, and that ANY hardware could be used, whether stronger or weaker.

In the Xbox One X's case the devkit is considerably stronger, with double the RAM and more CUs.

So starting from that point, if MS has delivered a 13 TF devkit, chances are the final Scarlett hardware will be weaker, going by what they did with the Xbox One X.

Not me, I asked YOU why don't you use PC GPU/CPU?

You keep referencing X and Pro for next gen, NOT me. You said consoles are just PCs, so why do you want to reference mid-gen for next gen?

So I'm asking you why don't you use the latest PC hardware as your reference for next gen instead of older mid-gen consoles?

You don't seem to have an answer.

Because I am comparing current offerings from *each company* to future offerings from each company. I know it's mind-blowing. But I think it's because you're annoyed that the next gen is not going to be that much stronger than the mid-gen. Don't be upset with me because your favorite company released a mid-gen console that undermines their next gen. :)

So why didn't I see you include AMD or Nvidia?

If it's all PC like YOU say, not sure why you only use "console" companies to determine generations?

Why did you omit AMD and Nvidia from your generations evaluation? They're also "companies" who produce hardware for video games within a "generation".

You seem to be stuck on mid-gen consoles when there is stronger PC hardware already released within this generation and then you omit PC companies from the conversation of generations, when you're saying consoles are just PC?

Geez, you still don't seem to have a solid grasp on your own narrative, buddy.

Try explaining why you're using mid-gen consoles as your reference point for generations again without contradicting yourself.

@boxrekt: You are a special breed indeed. I was comparing consoles. What is so hard to understand? What is so hard to also understand that your beloved consoles are just closed off PCs? What is also so hard to understand that I compared mid-gen because mid-gen is the most recent consoles from both companies? Is my comparison hurting your agenda? Are you unable to sleep at night because PC hardware runs your consoles and that next gen is going to sport year old PC hardware that is not going to be as significant as you and your buddy Microsoft believe? LOL

Why is that so hard to believe?

PS5 will have more than enough grunt to run those games at 4K/60.

Hmmm. 4X resolution (or more?) and twice the fps. Well if it is 8 times stronger I guess.

Pro runs the vast majority of its games at 1440p... so where are you getting 4x the resolution from?

You would be looking at just over double the resolution, with the frame rate still being an unknown, as we would need a breakdown on a per-game basis to know what headroom there is on games with a 30fps cap.

I compare it to the non pro versions naturally.
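For anyone checking the arithmetic in this exchange, here is a rough sketch in Python. It assumes the base (non-Pro) consoles render at 1080p, which is my assumption rather than something stated above, and it uses the 1440p figure quoted for the Pro. The "load factor" is a deliberately naive model in which GPU work scales linearly with pixels per second; real games do not scale that cleanly.

```python
# Pixel dimensions for the resolutions discussed in the thread.
RESOLUTIONS = {
    "1080p": (1920, 1080),  # assumed base-console resolution
    "1440p": (2560, 1440),  # figure quoted for most Pro games
    "4K": (3840, 2160),
}


def pixel_ratio(target: str, base: str) -> float:
    """How many times more pixels `target` has than `base`."""
    tw, th = RESOLUTIONS[target]
    bw, bh = RESOLUTIONS[base]
    return (tw * th) / (bw * bh)


def naive_load_factor(target: str, base: str,
                      target_fps: float, base_fps: float) -> float:
    """Naive estimate: load scales linearly with pixels drawn per second."""
    return pixel_ratio(target, base) * (target_fps / base_fps)


if __name__ == "__main__":
    print(pixel_ratio("4K", "1080p"))                # 4.0
    print(pixel_ratio("4K", "1440p"))                # 2.25
    print(naive_load_factor("4K", "1080p", 60, 30))  # 8.0
    print(naive_load_factor("4K", "1440p", 60, 30))  # 4.5
```

So both posters are right about their own baseline: 4x the pixels against 1080p (8x with doubled frame rate), and 2.25x against 1440p (4.5x with doubled frame rate).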

@boxrekt:

NAVI's TFLOPS is based on wave32 compute with 1 cycle latency to completion, similar to CUDA's 32-wide compute model. NAVI's wave32 compute model is better matched with NVIDIA GameWorks' 32-wide shader programs. It has better single-thread performance when compared to GCN's wave64.

GCN's TFLOPS is based on wave64 compute with 4 cycle latency to completion. There is a higher need for multiple waves in flight to hide latency, and a higher need for 64 independent elements to fill the wave64 compute slots.

wave32 means a payload of 32 mini-threads being fed into the CU. A NAVI CU can handle two wave32s every clock cycle and completes them in 1 clock cycle, hence a steady read/write stream. Superior vector branch latency.

wave64 means a payload of 64 mini-threads being fed into the CU. A GCN CU can handle four wave64s and completes them on the 4th clock cycle, hence a burst read/write stream at the 4th cycle, which places a heavy burden on memory bandwidth. The GCN CU's four-cycle latency affects vector branching.

It was never a good way to compare across different architectures with different instruction sets. Also doesn't take into account any bottlenecks.

NAVI CU can emulate wave64 as two wave32 with a single clock cycle latency.

T+1 NAVI CU would have completed two wave32 (write) vs T+1 GCN CU would have 1/4 processed four wave64.

T+2 NAVI CU would have completed two wave32 (write) vs T+2 GCN CU would have 2/4 processed four wave64.

T+3 NAVI CU would have completed two wave32 (write) vs T+3 GCN CU would have 3/4 processed four wave64.

T+4 NAVI CU would have completed two wave32 (write) vs T+4 GCN CU would have 4/4 processed four wave64 (a write burst of four wave64s, which places a heavy burden on memory bandwidth; to avoid the burst write, the 2nd/3rd/4th wave64s can be delayed by clock cycles, but that creates bubbles in the pipeline). Software pipelining is harder on GCN when compared to NAVI.

NAVI CU has steady stream writes for every clock cycle, hence it's more efficient when compared to GCN CU.

MS wanted a wannabe Turing GPU without paying NVIDIA's price.
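The T+1 to T+4 timeline can be written out as a toy model in Python. This is only a sketch of the claim as stated in this post (two wave32 retired per cycle on a NAVI CU, four wave64 retired together on every 4th cycle on a GCN CU); it is not a hardware simulator, and the function names are made up for illustration.

```python
LANES_WAVE32 = 32
LANES_WAVE64 = 64


def navi_lanes_written(cycle: int) -> int:
    """Post's model: two wave32 complete (write) on every cycle."""
    return 2 * LANES_WAVE32


def gcn_lanes_written(cycle: int) -> int:
    """Post's model: four wave64 complete together on every 4th cycle."""
    return 4 * LANES_WAVE64 if cycle % 4 == 0 else 0


def simulate(cycles: int):
    """Return rows of (cycle, navi_write, gcn_write, navi_total, gcn_total)."""
    rows = []
    navi_total = gcn_total = 0
    for t in range(1, cycles + 1):
        n = navi_lanes_written(t)
        g = gcn_lanes_written(t)
        navi_total += n
        gcn_total += g
        rows.append((t, n, g, navi_total, gcn_total))
    return rows


if __name__ == "__main__":
    print("T  navi_write  gcn_write  navi_total  gcn_total")
    for t, n, g, nt, gt in simulate(8):
        print(f"{t:<3}{n:<12}{g:<11}{nt:<12}{gt}")
```

In this model both CUs retire the same 256 lanes every four cycles, so the difference is not raw throughput. It is the latency (1 cycle vs 4) and the write pattern: 64 lanes every cycle versus a 256-lane burst on the 4th cycle.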

In other words.. nobody knows. On that note, it will be a leap in technology, loading times, on-screen objects, etc.... so it doesn't really matter. Watching people try to explain this (when nobody even knows for sure) is hilarious though. The same people on the PC side saying it will be weaker than an Atari 2600, and super rabid fanboys claiming their consoles will have teh superz 14 terraz floops. lol.. Keep it up guys. I'll get my popcorn. Clown show.

Buh Buh

"NAVI CU can emulate wave64 as two wave32 with a single clock cycle latency.

T+1 NAVI CU would have completed two wave32 (write) vs T+1 GCN CU would have 1/4 processed four wave64.

T+2 NAVI CU would have completed two wave32 (write) vs T+2 GCN CU would have 2/4 processed four wave64.

T+3 NAVI CU would have completed two wave32 (write) vs T+3 GCN CU would have 3/4 processed four wave64.

T+4 NAVI CU would have completed two wave32 (write) vs T+4 GCN CU would have 4/4 processed four wave64 (a write burst of four wave64s, which places a heavy burden on memory bandwidth; to avoid the burst write, the 2nd/3rd/4th wave64s can be delayed by clock cycles, but that creates bubbles in the pipeline). Software pipelining is harder on GCN when compared to NAVI.

NAVI CU has steady stream writes for every clock cycle, hence it's more efficient when compared to GCN CU.

MS wanted a wannabe Turing GPU without paying NVIDIA's price."

LMAO

AMD TFLOPS vs Nvidia TFLOPS never mattered. AMD TFLOPS have always been total junk for comparisons.

Going by the last benchmark, I would be shocked if that thing actually pushes 10 TFLOPS. I would not be shocked if it sits at 8 TFLOPS.
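For reference, the headline TFLOPS number is just a peak-rate formula: compute units x 64 shader lanes per CU x 2 FLOPs per lane per clock (one fused multiply-add) x clock speed. A quick Python sketch is below. The 40 CU count and the clocks are hypothetical values picked to land near the 8 and 10 TFLOPS figures being argued about; they are not leaked or confirmed specs.

```python
def peak_tflops(compute_units: int, clock_ghz: float,
                lanes_per_cu: int = 64,
                flops_per_lane_per_clock: int = 2) -> float:
    """Theoretical peak FP32 TFLOPS; says nothing about real-world bottlenecks."""
    gflops = compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_ghz
    return gflops / 1000.0


def clock_for_target(target_tflops: float, compute_units: int,
                     lanes_per_cu: int = 64,
                     flops_per_lane_per_clock: int = 2) -> float:
    """Clock in GHz needed to reach a given peak TFLOPS figure."""
    per_ghz = compute_units * lanes_per_cu * flops_per_lane_per_clock
    return target_tflops * 1000.0 / per_ghz


if __name__ == "__main__":
    # Hypothetical 40 CU part, for illustration only.
    print(f"{peak_tflops(40, 1.6):.2f}")         # 8.19
    print(f"{clock_for_target(10.0, 40):.2f}")   # 1.95
```

The formula counts only ALU lanes and clock speed, which is why two architectures with the same TFLOPS figure can perform very differently in actual games.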

That doesn't change the fact that you are saying the PS5 will be at the same level as the 750 Ti, which means that by next year the fastest card will be 3.7x the RTX 2070. No TFLOPS or comparison between companies needed.

The fastest card will be decided by Nvidia and how much money they want to spend on it.

ATM there is no pressure to do anything. They could rebrand the 2000 series for the next decade if nobody is going to pressure them. Much like Intel did with their CPUs.

It's my incomplete explanation of why NAVI is not GCN. It's not the follow-on topic of GCN software pipelining and the need for fine-grained async compute dispatch under the programmer's control.

Again, Penello's argument includes a timeline and you have omitted that, hence you are wrong.

IMO I would not place any faith in Dev Kit specs this early - chips aren't even close to ready. First Scorpio bring-up was 12/16 and real dev kits went out in March 2017. X1 and PS4 even later.

— Albert Penello (@albertpenello) June 17, 2019

We sent out Mac's for X360 dev kits. Dev kits mean very little at this point TBH.

The real Xbox One X dev kit appeared in March 2017 and its product release was in November 2017.

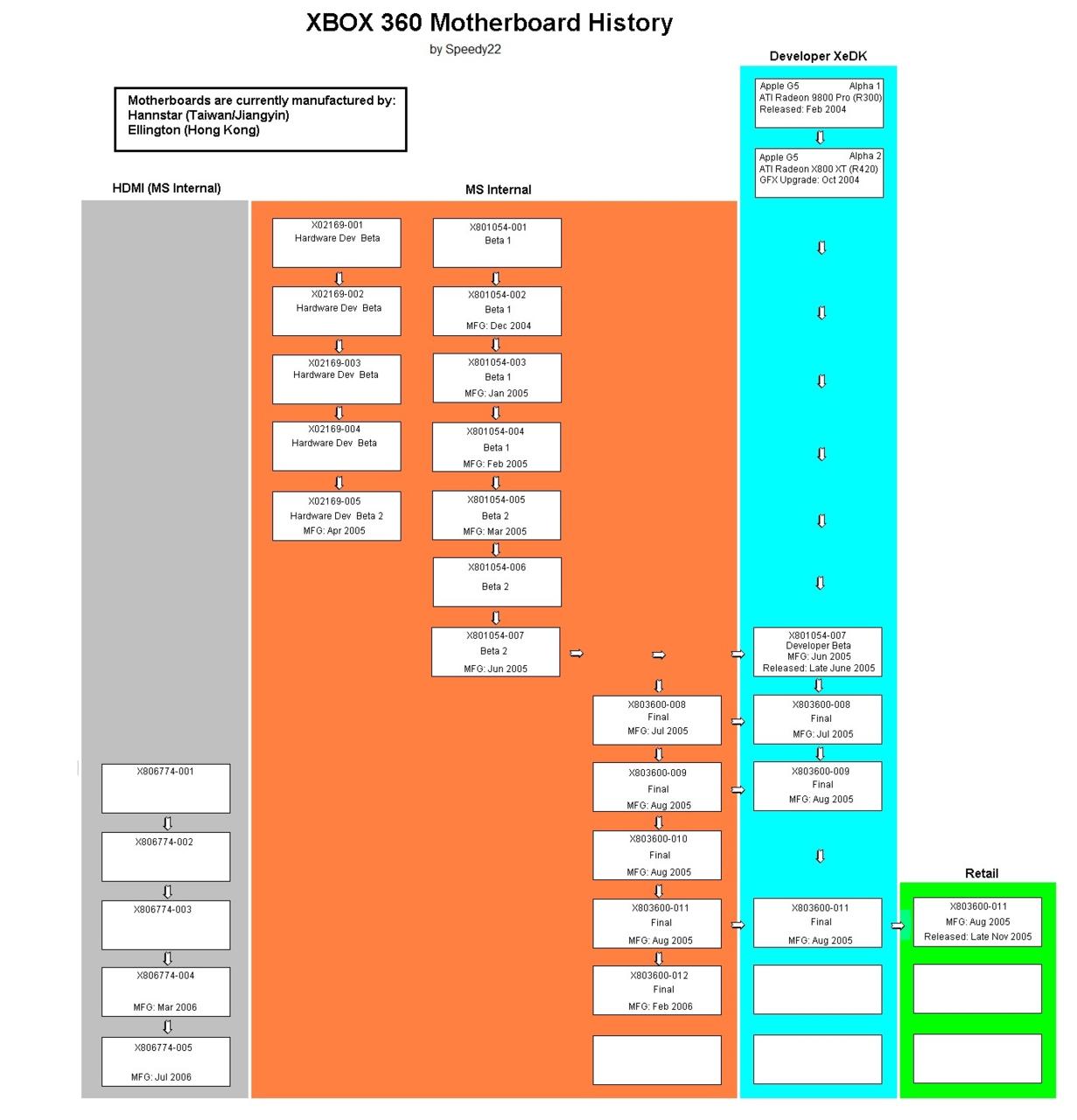

For the Xbox 360's timeline:

The Beta 2 Xbox 360 hardware XeDE kit (which used the Xbox 360's silicon) arrived in June 2005 and the final version arrived in July 2005.

Late in the PS3's R&D cycle, Sony made sure the shader power of its NVIDIA RSX exceeded 6800 Ultra SLI, which is about X850 XT CrossFire level and rivals Microsoft's Alpha 2 dev kits in GPU power.

Industrial spying is real.

@Gatygun: Intel GPUs are releasing next year so Nvidia might finally feel some pressure.

Unlikely, since Intel's GPU department is mostly made up of AMD's rejects, like Qualcomm's GPU department.

I can say with great certainty that the people they hire that you are calling rejects are significantly more knowledgeable than even the perceived version of yourself. :)

That's a red herring. GCN has many graphics pipeline bottlenecks and the fault is with RTG rejects.

Sigh, it's exactly like with cars... AMD may be "stronger on paper" but it sucks when "tested in real conditions"...

Gears was never shown on a Mac with an X800. The very first time we saw it, it was running on two 6800 Ultras in SLI, which means Epic was targeting a higher spec.

The rest is history.

That's a red herring argument.

G70 was already an aging GPU design and the rest is history.

PowerMac G5 has support for both Radeon X800 XT and GeForce 6800 Ultra

From https://barefeats.com/radx800.html

X800 XT is similar to 6800 Ultra in terms of power.

--------

https://www.anandtech.com/show/1686/5

Because the G5 systems can only use a GeForce 6800 Ultra or an ATI Radeon X800 XT, developers had to significantly reduce the image quality of their demos - which explains their lack luster appearance. Anti-aliasing wasn't enabled on any of the demos, while the final Xbox 360 console will have 4X AA enabled on all titles.

Meanwhile at ATI's E3 2005 booth https://www.anandtech.com/show/1686/6

The machine was running ATI's R520 Ruby demo, which took about a week to port to the 360 from the original PC version. The hardware in this particular box wasn't running at full speed

R520 (aka X1800) was mentioned during E3 2005 but had not been released at that time.

Unlike Epic, ATI had actual Xbox 360 hardware, which was NOT running at full speed, and it was running R520's Ruby demo.

A single Radeon X1800 XT (R520) can rival GeForce 6800 Ultra SLI or beat GeForce 7800 GTX.

R520 Ruby demo was also running on gimped Xbox 360 sample hardware during E3 2005.

Epic selected dual GeForce 6800 SLI due to hints from the R520 demo.

From https://techreport.com/review/8864/ati-radeon-x1000-series-gpus

Date: October 2005

R520 (8 vertex + 16 pixel shader pipelines) was the first GPU released with large scale hyper-threading design followed by Xbox 360's Xenos (48 unified shader pipelines, 64 hyper-threads) in November 2005.

R520 was capable of Close to Metal GPGPU, which is a precursor to the Apple-defined OpenCL.

Try again.
