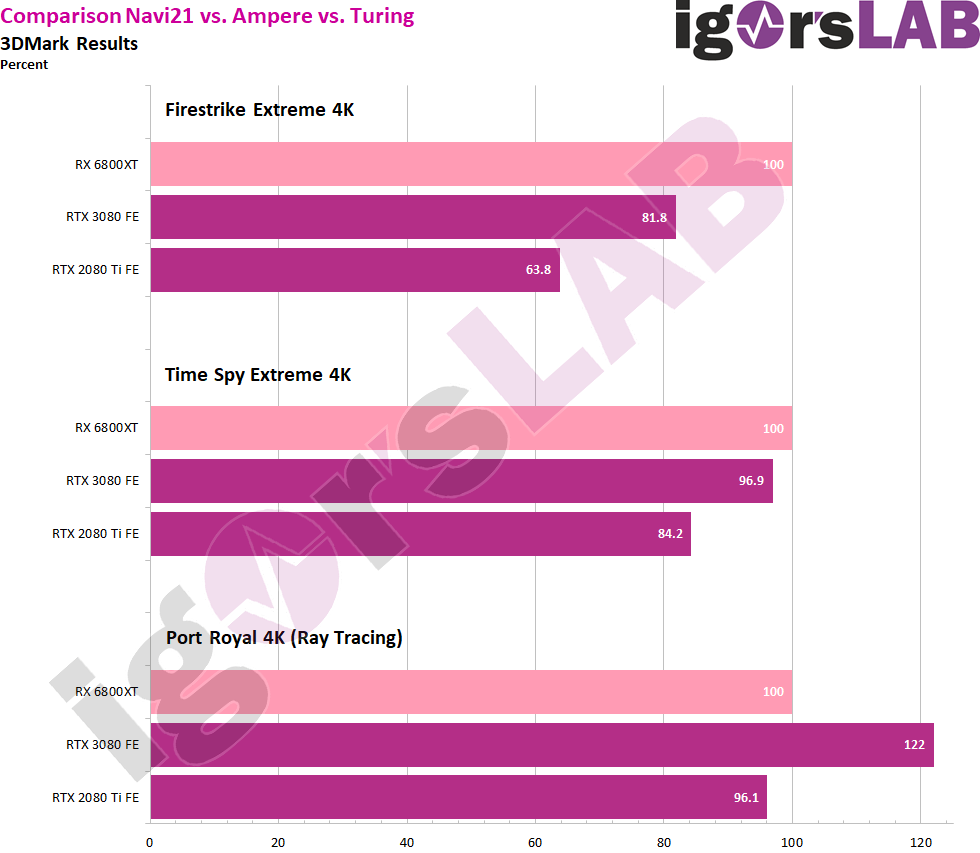

As always, you have to be careful with such benchmarks, even if the material I received yesterday seems quite plausible. There are two sources with very different approaches and settings, yet in the end the results largely agree, so at least a certain trend is already visible. The most plausible results, in my view, come from an Ultra-HD ("4K") run of the three well-known benchmarks from the 3DMark suite.

What I received reportedly comes from a board partner that manufactures both AMD and NVIDIA graphics cards and is said to have carried out these three runs with an engineering sample from the EVT (engineering validation test) phase. A standard Intel platform may have served as the test system. Since I was asked to benchmark the other cards myself for comparison and then to report only the percentage differences, I first summarized the raw performance comparison exactly as it was originally sent to me:

https://www.igorslab.de/en/3dmark-in-ultra-hd-benchmarks-the-rx-6800xt-without-and-with-raytracing/

The 6800XT beating the 3080FE?

But it loses in RT vs the 3080, although it beats the 2080 Ti on its first RT try.

What say you, System Wars?