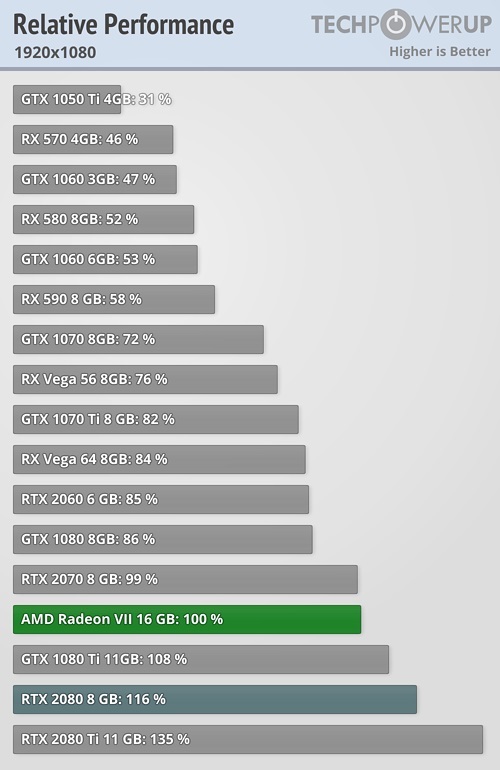

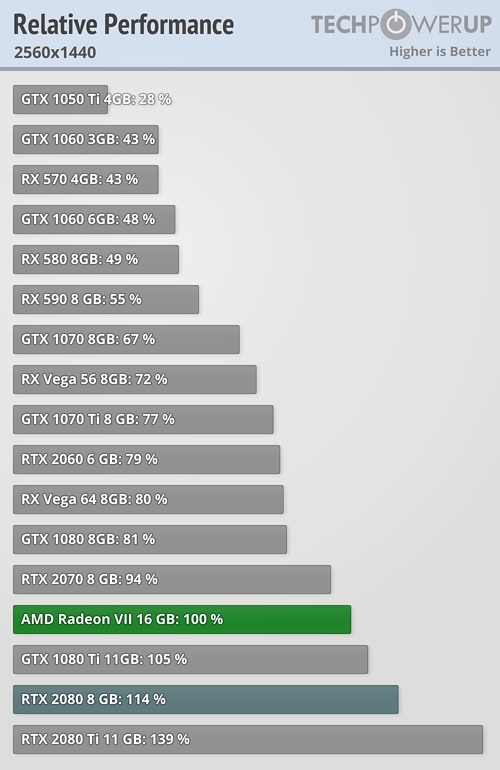

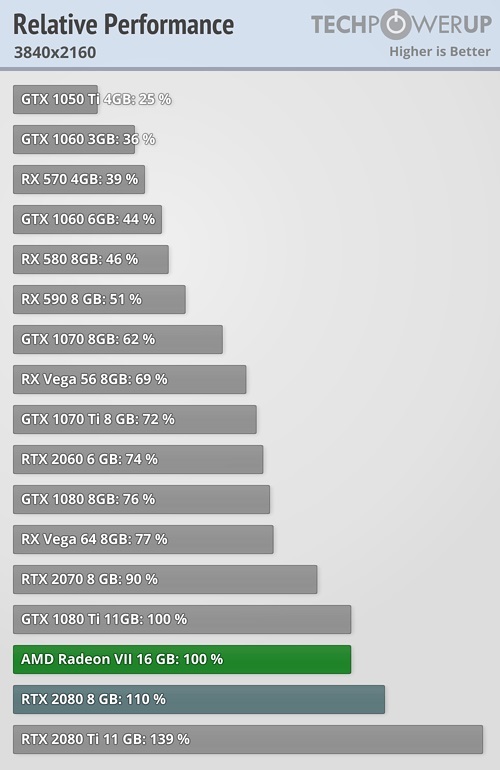

Slower than a 2080 and the 1080 Ti, especially in titles that don't use DX12.

So complete shit then for 2019? My Vega 64 was not such a bad purchase last year from the looks of things. PS5/X02 are going to suck I bet.

Just watched a few reviews. One definite improvement is that it's 25-30% faster than the previous Vega 64 while consuming somewhat less power. However, when compared to the green team, it's clear the power efficiency is still nowhere near as good. Given that and the similar performance to an RTX 2080 (sometimes even slightly worse), this card needed to be at least $100 cheaper than what they're asking for. As it stands though, just get an RTX 2080.

AMD could've easily sold this for only $600; $700 is way too much. But remember, AMD is for the budget PC gamers after all, and paying $700 for an AMD GPU isn't budget.

Overpriced flagship card that can't even fully match the performance of Nvidia's flagship from 2 years ago (1080 Ti) even while being 7nm....

Yeah... it's shit. Their biggest mistake is pricing it the same as the 2080 when it is clearly inferior.

I read that they're making less than $100 per unit. HBM and yields are hurting them.

Not good. They need to get Navi rolling out ASAP.

It being over $900 here in the UK is why I mentioned the Vega 64 not being a bad purchase last year; got it for peanuts during a sale. The extra performance for that price is a big **** off.

@Random_Matt: pricing seems all over the place.

If I was in the market for a new GPU and was set on Vega, then I would be looking at the V64 or 56 (though there is only 10 quid in the difference between the 56 and 64 comparing the same model :S). The 64 is nearly half the price of the VII on Overclockers, and that's for a good Sapphire V64 Nitro+.

I'm happy with my RX 580 for another year, I think.

Still a good card.

It's still a Vega card, so using expensive HBM (8GB was around $150 for AMD) was a must, as they are very bandwidth heavy, and GDDR5/X (3-4 times cheaper than HBM) would increase the power draw by 75-100W on top, so...

I think some of the backlash stems from rumors around Christmas last year, saying that AMD would release Navi and not VII, and that you could get a 2070-equivalent card for around $250. Absolute hogwash of a rumor, but it was very widespread and got people hyped. Then CES happened, and AMD shows off their GPU first and prices it at $700. Then they don't even give a fixed release date for their CPUs that are supposed to be shoulder-to-shoulder with a 9900K.

In other words, it got people pissed. This card exists because Navi wasn't ready yet. In some ways, it's actually a very cool product for consumers, but its main drawback is the price. Your Vega 64 is still a good GPU; it's best to wait for Navi.

This is why I am worried for Navi and next generation consoles.... Navi is still GCN and 7nm clearly doesn't help much with TDP. If this is anything to go by, which it is, a PS5 with a console TDP will have GPU performance no better than a GTX 1070 Ti.

Also I called this a while ago, GCN is dead and AMD should have moved to something new right after Vega. It can't compete with the high end.

GCN should have been left behind after the R9 290X.

Now their next lineup of CPUs looks promising. If the 3XXX lineup has the same single-core performance as my 6600K at 4.7 GHz, I'm buying an 8 core or higher from them. HEDT is quite possible.

It's what I am waiting for. Right now Ryzen matches stock Haswell single-core performance, but my overclocked i5 beats it... I want to upgrade to a Ryzen 3700X when it's released, and hopefully it will exceed my current single-threaded performance and give me double the physical cores.

That said, I have been eyeing up a 9700K recently, so you never know. I have been delaying this CPU upgrade for close to two years now, and I honestly have no reason to upgrade, as at 4K my GPU is not bottlenecked at all.

The 3700X or a 9700K will probably be my coming fork in the road for upgrading, and as a gamer Intel is hard to ignore.

You're late for the 9700K. Might as well wait for the next gen from Intel.

It sucks that AMD is still somewhat catching up. However, I also play at a resolution and (for the time being) aim for an FPS where AMD is no worse than Intel. I will also get new RAM, hoping AMD (or mobo manufacturers) will support something above 3600MHz.

After that I am aiming for a new monitor. Keeping the 21:9 aspect, at 3440x1440 or higher. 5K is next up, I think. But mostly I want higher Hz. Still using 60Hz. I want 120Hz or higher.

If this was 500 dollars, I'd snatch one up in a heartbeat.

I don't plan on going 4K any time soon, will probably just upgrade to 2K, but I like to future proof my builds. Still...hard to justify a 700 dollar card that is not as good as the 800 dollar best card on the market.

AMD couldn't afford to sell these cards at a loss. They already have a very small margin, as these are expensive for them to make; just the 16GB HBM2 stack is something like $250-300.

RX 580 is still enough, no need to upgrade from that card. Maybe in future, if AMD releases something hard to resist, then maybe I'll upgrade.

$600 wouldn't be a total loss, but paying $700 for a Vega GPU is really pushing it for what it's offering.

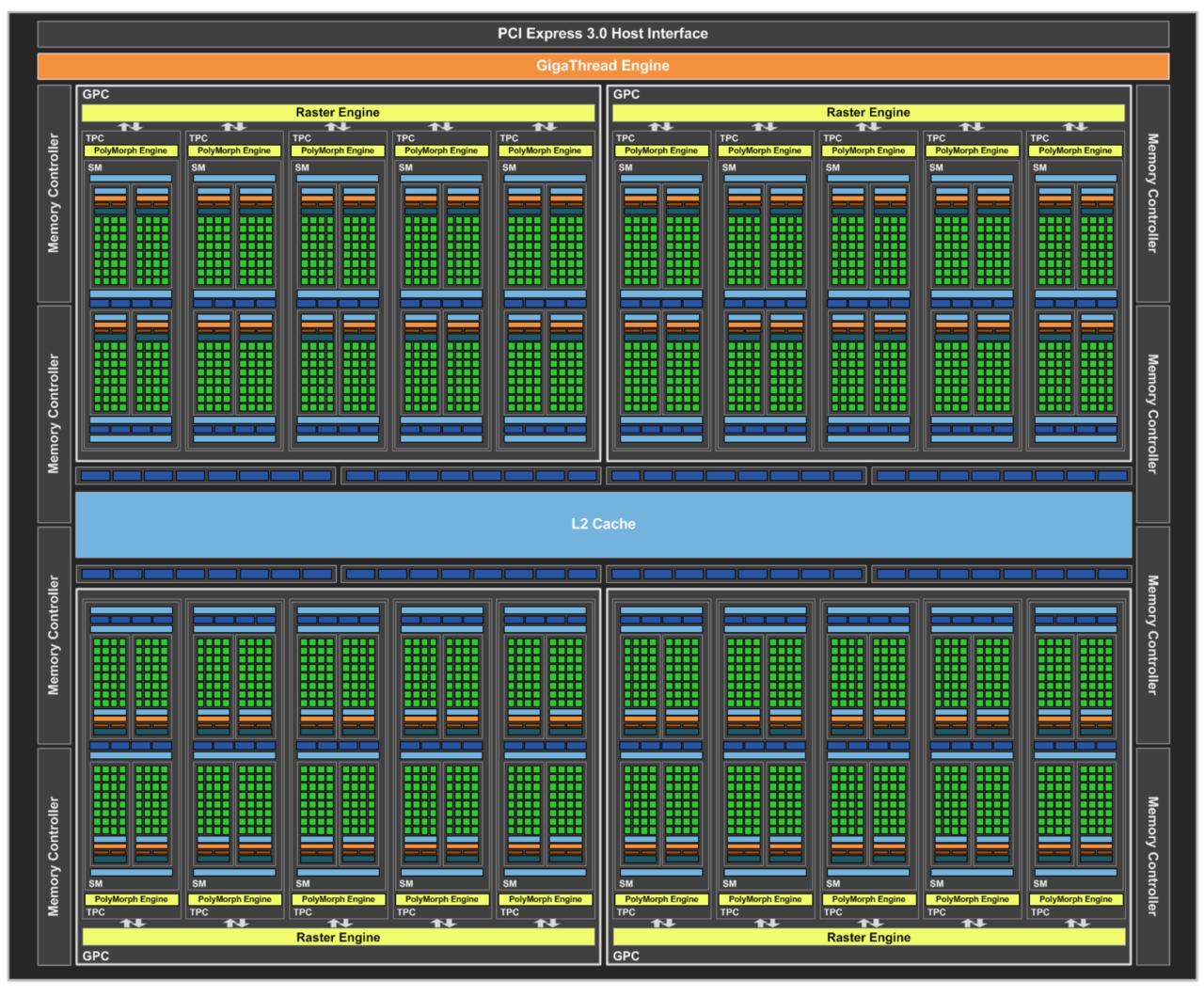

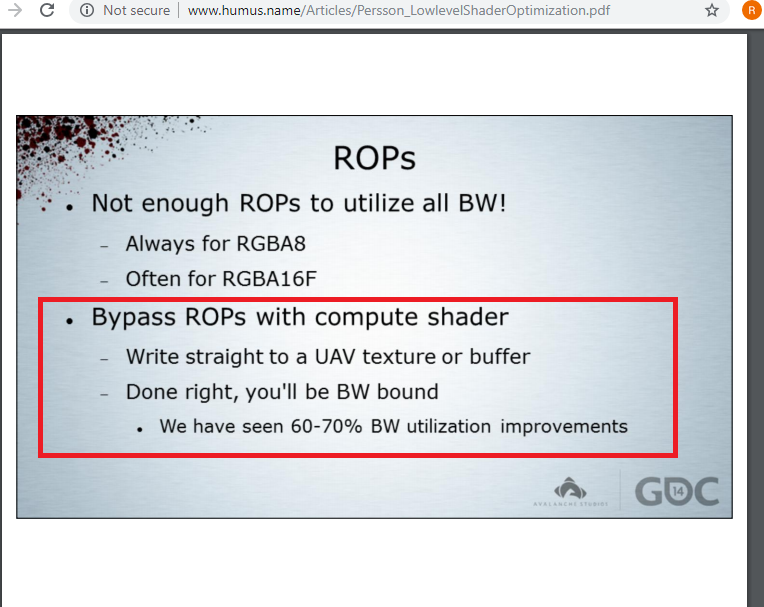

Under Raja "Mr TFLOPS" Koduri's administration (he joined AMD in 2013), GCN didn't evolve beyond the R9 290X's basic design of quad raster engines and 64 ROPS (read-write).

AMD has yet to master Pascal's 8 ROPS per 32-bit memory channel design. R&D effort was spent on HBM v1/v2 coupled with the 64 ROPS design, and PR marketing pushed game devs towards async compute, which used texture units as read-write units (e.g. a different AA solution in Doom 2016 when async compute is enabled).

VII is like a fast 64 ROPS and quad raster engine GPU with high memory bandwidth.

For comparison, the RTX 2080 has six raster engines with 64 ROPS and a 4MB L2 cache with superior delta color compression. The RTX 2080 design shows NVIDIA can decouple the raster engine count from the 64 ROPS on a 256-bit bus, which could be important for any future GPU evolution.

For an AMD Navi RX 680 level SKU, 256-bit GDDR6 will need a 64 ROPS design, which means mastering Pascal's 8 ROPS per 32-bit memory channel design.
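The ROPS-per-channel arithmetic in the post above can be sketched in a few lines. This is only an illustration: the helper name `rops_for_bus` and the constants are hypothetical, and the 8 ROPS per 32-bit channel ratio is the poster's claim about Pascal, not an official formula.

```python
# Hypothetical helper illustrating the "8 ROPS per 32-bit memory channel" ratio
# claimed in the post. The constants are assumptions taken from that claim.
ROPS_PER_CHANNEL = 8
CHANNEL_WIDTH_BITS = 32


def rops_for_bus(bus_width_bits: int) -> int:
    """Return the ROPS count implied by a memory bus width under the assumed ratio."""
    if bus_width_bits <= 0 or bus_width_bits % CHANNEL_WIDTH_BITS != 0:
        raise ValueError("bus width must be a positive multiple of 32 bits")
    channels = bus_width_bits // CHANNEL_WIDTH_BITS
    return channels * ROPS_PER_CHANNEL


if __name__ == "__main__":
    # 256-bit is the bus width discussed in the post; 352-bit is the GTX 1080 Ti's bus.
    for width in (256, 352):
        print(f"{width}-bit bus -> {rops_for_bus(width)} ROPS")
```

Under that ratio a 256-bit bus works out to 8 channels and 64 ROPS, which is the figure the post gives for a 256-bit GDDR6 Navi part.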

At 4K resolution, Radeon VII is okay at GTX 1080 Ti level. TechPowerUp's summaries are skewed by NVIDIA-biased games, e.g. they lack Titanfall 2.

TechPowerUp benchmarking flaws

Assassin's Creed Origins (NVIDIA Gameworks, 2017)

Battlefield V RTX (NVIDIA Gameworks, 2018)

Civilization VI (2016)

Darksiders 3 (NVIDIA Gameworks, 2018), old game remaster, where's Titanfall 2?

Deus Ex: Mankind Divided (AMD, 2016)

Divinity Original Sin II (NVIDIA Gameworks, 2017)

Dragon Quest XI (Unreal 4 DX11, large NVIDIA bias, 2018)

F1 2018 (2018), Microsoft's Forza franchise is larger than this Codemasters game.

Far Cry 5 (AMD, 2018)

Ghost Recon Wildlands (NVIDIA Gameworks, 2017), missing Tom Clancy's The Division

Grand Theft Auto V (2013)

Hellblade: Senua's Sacrifice (Unreal 4 DX11, NVIDIA Gameworks)

Hitman 2

Monster Hunter World (NVIDIA Gameworks, 2018)

Middle-earth: Shadow of War (NVIDIA Gameworks, 2017)

Prey (DX11, NVIDIA bias, 2017)

Rainbow Six: Siege (NVIDIA Gameworks, 2015)

Shadow of the Tomb Raider (NVIDIA Gameworks, 2018)

SpellForce 3 (NVIDIA Gameworks, 2017)

Strange Brigade (AMD, 2018)

The Witcher 3 (NVIDIA Gameworks, 2015)

Wolfenstein II (NVIDIA Gameworks, 2017). Results differ from https://www.hardwarecanucks.com/forum/hardware-canucks-reviews/78296-nvidia-geforce-rtx-2080-ti-rtx-2080-review-17.html where a certain Wolfenstein II map exceeded the RTX 2080's 8 GB VRAM.

-------------

AMD has to address its Unreal Engine 4 problem, and not just for Microsoft's Unreal Engine 4 modifications.

So if I understand this right, AMD is currently limited to 4 raster engines per 64 ROPS, while Nvidia has 6 raster engines per 64 ROPS? And the lack of raster engines is one of the main reasons for AMD lagging behind?

This is all a little technical for me.

That's correct.

GP104 Pascal vs Hawaii GCN

What's "Raster Engine" or Rasterizer hardware?

ROPS are the classic read-write units for color and depth/Z data that interact with the "Raster Engine" or rasterizer hardware. Shaders change vertex or pixel color data.

Vega GCN has a crossbar data transfer improvement which load balances rasterization workloads among its quad rasterizer hardware, so AMD knows about the rasterizer hardware bottleneck.

If the ROPS are a bottleneck, texture units can be used for read-write operations.

@ronvalencia: in other games the Radeon VII even with 16GB is an issue

This is why I purchased an RTX 2080 Ti, i.e. VII-like rapid packed math compute features with better raster hardware. Tensor cores and RT hardware are extra benefits.

I like TU102's register storage vs CUDA core ratios.
