Video: http://www.youtube.com/watch?v=QIWyf8Hyjbg

Mantle heavily reduces the CPU’s workload

R9 290X + FX-8350 Base Build:

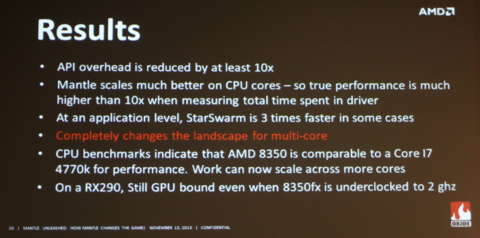

As the speaker points out after the demo (at 37:18), the CPU is barely being used. The developer showed the audience a before-and-after frame analysis comparing the system’s workload under Mantle and under DirectX. In the DirectX capture, a sizable share of CPU time goes to the driver thread. In the Mantle capture, that thread is simply gone.
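
To make the before-and-after comparison concrete, here is a minimal sketch that totals per-thread CPU time for one frame under each API. All thread names and millisecond values are hypothetical placeholders, not figures from the demo. The sketch also assumes, purely for illustration, that Mantle’s remaining API work is folded into the render thread. Because threads run concurrently, the total measures CPU work per frame, not wall-clock frame time.

```python
# Hypothetical per-frame CPU time (ms) for each thread.
# Illustrative placeholders only, NOT measurements from the demo.
DIRECTX_FRAME = {"game": 6.0, "render": 4.0, "driver": 5.0}

# Assumption: no separate driver thread; the render thread absorbs
# a small amount of extra API work instead.
MANTLE_FRAME = {"game": 6.0, "render": 4.5}


def total_cpu_ms(frame):
    """Sum the CPU time spent by all threads during one frame."""
    return sum(frame.values())


def cpu_saved_ms(before, after):
    """CPU time per frame freed up by switching APIs."""
    return total_cpu_ms(before) - total_cpu_ms(after)


if __name__ == "__main__":
    print(f"DirectX: {total_cpu_ms(DIRECTX_FRAME):.1f} ms of CPU work per frame")
    print(f"Mantle:  {total_cpu_ms(MANTLE_FRAME):.1f} ms of CPU work per frame")
    print(f"Freed:   {cpu_saved_ms(DIRECTX_FRAME, MANTLE_FRAME):.1f} ms per frame")
```

With these made-up numbers, removing the driver thread frees 4.5 ms of CPU work per frame even after charging the render thread a little extra.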

Switching to Mantle freed up a lot of CPU time, and Mantle’s own overhead was only a fraction of what DirectX’s driver thread required. This gives developers who adopt Mantle two options: spend the freed CPU time on improving their game, or simply lower the game’s CPU requirements. The speaker further backed up the case for lower CPU requirements (at 39:55) by revealing that even with the FX-8350 underclocked to 2 GHz, the CPU was still waiting for the GPU to finish each frame. Typically, we would see the opposite, with the GPU waiting on the CPU.
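
The 2 GHz result can be illustrated with a simple pipelined frame-time model: frame time is roughly the larger of the CPU time and GPU time per frame, so whichever side is slower sets the frame rate. This sketch assumes that CPU time scales linearly with clock speed from the FX-8350’s 4.0 GHz base clock (a simplification that ignores memory latency and turbo behavior), and every per-frame millisecond value is a hypothetical placeholder rather than data from the demo.

```python
STOCK_GHZ = 4.0  # FX-8350 base clock


def scaled_cpu_ms(cpu_ms_at_stock, clock_ghz, stock_ghz=STOCK_GHZ):
    """CPU time per frame at a new clock, assuming linear scaling with clock speed."""
    return cpu_ms_at_stock * stock_ghz / clock_ghz


def frame_ms(cpu_ms, gpu_ms):
    """In a pipelined renderer, the slower stage determines frame time."""
    return max(cpu_ms, gpu_ms)


def bottleneck(cpu_ms, gpu_ms):
    """Label which side the other is waiting on."""
    return "GPU-bound" if gpu_ms >= cpu_ms else "CPU-bound"


if __name__ == "__main__":
    gpu_ms = 20.0  # hypothetical GPU time per frame
    # Hypothetical CPU critical-path time per frame at stock clock.
    for api, cpu_stock in (("DirectX", 14.0), ("Mantle", 9.0)):
        for ghz in (4.0, 2.0):
            cpu = scaled_cpu_ms(cpu_stock, ghz)
            fps = 1000.0 / frame_ms(cpu, gpu_ms)
            print(f"{api} @ {ghz} GHz: CPU {cpu:.1f} ms, GPU {gpu_ms:.1f} ms, "
                  f"{bottleneck(cpu, gpu_ms)}, {fps:.0f} fps")
```

Under these assumed numbers, halving the clock pushes the DirectX path to 28 ms of CPU time per frame (CPU-bound), while the Mantle path only reaches 18 ms and stays GPU-bound, which matches the shape of what the speaker described.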

Source: http://wccftech.com/amd-mantle-demo-game-gpubound-cpu-cut-2ghz/