Could someone tell me the difference between the GPUs we have now and the ones coming in the future? Maxwell is supposed to use a 20nm process, but I have no idea what that means or how it benefits gaming. Will it make GPUs more powerful, make the video card a bit smaller, or let it run at higher temps? And will this be a next-gen improvement over the GPU I have now?

I want to know something about the next Nvidia/AMD GPUs

Nvidia's Maxwell was nearly redesigned from the ground up for maximum power savings, though I'm not sure they've added a whole lot feature-wise. Their current Maxwell offering hasn't gone down to 20nm yet, and it matches Kepler with something like 50% of the power usage. Keep in mind this is still at 28nm! That is pretty phenomenal, and knowing Nvidia likes to build humongous GPUs, being able to scale further without having to fight power limits is a good thing. There's not much info from the AMD camp, although they are releasing a new 28nm die that is also supposed to cut down on power consumption. We'll see what happens.

The 20nm spec refers to the size of the transistors used in the GPU. I believe it's nominally the length of the channel between the source and drain of each transistor. The decrease in size has two obvious benefits: you can fit more of them into the same amount of space as before, and each one requires less power and generates less heat.
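To put a rough number on the "fit more of them" point: if the node name is treated as a linear feature size, the area per transistor goes with the square of that size. This is an idealization (real processes rarely scale this cleanly, and node names are partly labels), and the function name below is made up for illustration. A minimal Python sketch:

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealized transistor-density gain from a linear shrink.

    Assumes every dimension scales with the node name, which real
    manufacturing processes only approximate.
    """
    return (old_nm / new_nm) ** 2


gain_28_to_20 = ideal_density_gain(28, 20)
print(f"28nm -> 20nm: roughly {gain_28_to_20:.2f}x the transistors per unit area")
```

Under that assumption, a 28nm to 20nm shrink would allow close to twice the transistors in the same die area, which is why a shrink matters so much for big GPUs.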

They are probably using CMOS logic, which is getting close to its physical scaling limits, so using 20nm is very impressive.

As far as I know, we're not going to see 20nm GPUs this year. The first Maxwell GPUs will be 28nm...

Are the 20nm GPUs supposed to be more expensive than the 28nm ones? You know, because it's new technology and all.

Hello. Maxwell GPUs are already out there.

The GTX 750 and GTX 750 Ti are both 28nm Maxwell.

From Nvidia:

Turbocharge your gaming experience with the GeForce GTX 750 Ti. It’s powered by first-generation NVIDIA® Maxwell™ architecture, delivering twice the performance of previous generation cards at half the power consumption. For serious gamers, this means you get all the horsepower you need to play the hottest titles in beautiful 1080 HD resolution, all at full settings.

What Nvidia brings with Maxwell is a huge leap in performance per watt.

It delivers near HD 7850 / GTX 650 Ti Boost performance with only 60 watts!

Imagine what it could do in 250-watt behemoths of the GTX 780 / Titan class.

Oh, we know that, @Coseniath; however, the top-of-the-line GPUs haven't been released with Maxwell yet.

I like that they say twice the performance and half the power consumption in the same sentence. The way it is worded, it sounds like they have 4x the performance per watt.
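The arithmetic behind that "4x" reading is easy to check. A minimal sketch in Python, where the function name is invented for illustration and the ratios are just the marketing claims taken literally:

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Relative performance-per-watt, given performance and power
    expressed as ratios against the previous generation."""
    return perf_ratio / power_ratio


# Literal reading: twice the performance AND half the power at the same time.
literal_reading = perf_per_watt_gain(2.0, 0.5)

# More modest readings: only one of the two claims applies at a time.
same_power = perf_per_watt_gain(2.0, 1.0)
same_performance = perf_per_watt_gain(1.0, 0.5)

print(literal_reading, same_power, same_performance)
```

Taken literally, the sentence implies 4x performance per watt, while either claim on its own only implies 2x, so the wording matters a lot.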

Well, not everyone has my knowledge/information or yours, so I am referring to the GM107-based cards to avoid any misunderstanding.

Yeah, about the 4x performance per watt: both Nvidia and AMD have a PhD in overhyping their products :P.

Rumors say that Nvidia will follow their Kepler tactics. So the next GTX 880, or whatever it will be called, would have a full GM104 chip with 3200 cores (yep, the Titan Black / 780 Ti have 2880 cores).

If this is true, we will not see the real flagship GM100/110 soon (again, rumors on this are cloudy; some say 5000-6000 cores).

So, TheShadowLord07, with about 10% more cores, a faster GPU clock (lower power consumption means better thermals, which means they could have a 1200MHz/3200-core monstrosity), and maybe improved drivers at the time of release, you can expect at least a ~30% performance jump, similar to the GTX 580 to GTX 680 boost.

But this only holds if they use 28nm. If they use 20nm, it will be a different story...
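As a rough sanity check on the ~30% figure, here is a small Python sketch. It assumes performance scales linearly with cores times clock, which is optimistic since real games rarely scale that cleanly, and it uses only the rumored core counts quoted above. The function name is hypothetical.

```python
def clock_ratio_needed(target_speedup: float, cores_new: int, cores_old: int) -> float:
    """Clock increase needed to hit a target speedup, assuming
    performance is proportional to cores * clock (an idealization)."""
    core_ratio = cores_new / cores_old
    return target_speedup / core_ratio


core_ratio = 3200 / 2880  # rumored GM104 cores vs Titan Black / 780 Ti cores
needed = clock_ratio_needed(1.30, 3200, 2880)

print(f"core ratio: {core_ratio:.3f}")
print(f"clock ratio needed for +30%: {needed:.2f}")
```

Under that assumption, the extra cores account for about 11%, and the clock would need to rise by about 17% to reach a 30% jump without any help from drivers or architecture changes.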

GloFo's AMD Jaguar-based SoCs (28nm process node) have better performance per watt than TSMC's version, and there is a large power reduction when using HBM (High Bandwidth Memory) instead of AMD's 512-bit GDDR5.

From http://wccftech.com/amd-feature-generation-hbm-memory-volcanic-islands-20-graphics-cards-allegedly-launching-2h-2014/

At ~500 GB/s: about 80 watts for 512-bit GDDR5 versus about 30 watts for HBM.
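Those figures can be turned into bandwidth per watt. A minimal Python sketch using only the numbers quoted above from the linked article (the constant and function names are made up for illustration, and the figures themselves are the article's claims, not measurements):

```python
BANDWIDTH_GB_PER_S = 500.0  # approximate bandwidth quoted for both memory types
GDDR5_WATTS = 80.0          # quoted power for 512-bit GDDR5
HBM_WATTS = 30.0            # quoted power for HBM


def gb_per_s_per_watt(bandwidth_gb_per_s: float, watts: float) -> float:
    """Memory bandwidth delivered per watt of memory power."""
    return bandwidth_gb_per_s / watts


gddr5_efficiency = gb_per_s_per_watt(BANDWIDTH_GB_PER_S, GDDR5_WATTS)
hbm_efficiency = gb_per_s_per_watt(BANDWIDTH_GB_PER_S, HBM_WATTS)
watts_saved = GDDR5_WATTS - HBM_WATTS

print(f"GDDR5: {gddr5_efficiency:.2f} GB/s per watt")
print(f"HBM:   {hbm_efficiency:.2f} GB/s per watt")
print(f"Power freed up at the same bandwidth: {watts_saved:.0f} W")
```

If the quoted numbers hold, that is roughly 50 W of board power that could go to the GPU core instead of the memory.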

Can be rivalled by AMD's mobile parts.

Oh, Nvidia also has plans to use stacked DRAM (I guess AMD refers to it as HBM for marketing reasons), but it seems they will use it in the next-gen Volta.

Future Nvidia 'Volta' GPU has stacked DRAM, offers 1TB/s bandwidth

Nvidia has updated its public GPU roadmap, revealing new details about upcoming products including a graphics solution that will purportedly offer about four times the memory bandwidth of the new $1,000 GeForce GTX Titan. Codenamed "Volta," the GPU family is expected to arrive sometime after 2014's Maxwell refresh, presumably in 2016 judging by Nvidia's typical two-year architecture refresh cycle.

Well, when it comes to PC desktop gaming, most of us don't care about low-wattage mobile parts.

If AMD could do this with a 60-watt GPU, it would definitely be out there.

AMD had the performance crown for the best GPU that doesn't require a 6-pin power connector (HD 7750), and that worked well for them.

Personally, and for most people using a desktop for gaming, 150+ watts for a GPU is pretty average.

I will wait to see what AMD has to offer to counter Maxwell, because I don't want to see a $1000 single GPU again...

The best advantage I see to having power efficiency besides mobile supremacy is being able to scale up the number of transistors more easily without having to worry about the power ceiling. If you look at Hawaii vs GK110, they're pretty close in performance. Hawaii has something like 800mil - 1bil less transistors than GK110 and it's definitely a power hog in comparison. Do you think AMD could have bulked on even more hardware had they not hit the power ceiling? What if GCN had been a little more efficient and they were able to get that comfy 250W at a similar transistor count to Nvidia? It's hard to say what's harder to do, increase parallelism at lower bandwidth and fight yield problems, or get better yields with a smaller die but increase bandwidth and in the process, increase overall TDP. All I know is that these engineers have some very tough problems to tackle, and it's interesting that Nvidia and AMD have different takes on the problem. I'm interested to see what both parties bring to the table.

Hello @Marfoo

The best advantage I see to having power efficiency besides mobile supremacy is being able to scale up the number of transistors more easily without having to worry about the power ceiling.

I would disagree here. That's the main advantage of a process shrink (40nm to 28nm to 20nm, and so on). If that weren't possible, we would have 10m² GPUs by now :P.

If you look at Hawaii vs GK110, they're pretty close in performance. Hawaii has something like 800mil - 1bil less transistors than GK110 and it's definitely a power hog in comparison. Do you think AMD could have bulked on even more hardware had they not hit the power ceiling?

I would disagree here too. Hawaii has 6200M transistors while GK110 has 7100M transistors. The problem most people noticed, and what AMD should have addressed from the beginning, is that the Hawaii chip (like GK110) should not have gone for a 1GHz core clock as AMD did. You can see that Nvidia, with a far superior cooler, aimed for 837MHz on its first attempt at releasing such a GPU monstrosity. AMD, on the other hand, with a crappier-than-crap cooler, aimed for 1GHz just out of lust for the single-GPU performance crown. If they had aimed for 750MHz (which was the limit of their cooler), there would have been no problem, and companies like Sapphire/Asus/Gigabyte/MSI would have released 900+MHz GPUs with their superior coolers (and they did).

I agree with the rest you said though...

@Coseniath: For the first part, it was assumed that no die shrink is involved. You can add more transistors if they're not sucking up too much power, i.e., lower bandwidth (clock speed) and a wider architecture, like Nvidia. As for the second part, we were basically talking about the same thing when I said AMD trades higher bandwidth for a smaller die and higher yield, whereas Nvidia uses low bandwidth, a larger die, and lower yield, and gets more power efficiency.

So we're basically talking about the same things. :)
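The wide-and-slow versus narrow-and-fast trade-off can be sketched with the standard CMOS dynamic power relation, P ∝ C·V²·f. The two designs below are entirely hypothetical: the unit counts, clocks, and especially the voltages are illustrative assumptions, not figures for Hawaii or GK110. The sketch also ignores leakage and assumes switched capacitance grows in proportion to the number of units.

```python
def relative_dynamic_power(units: float, voltage: float, clock_ghz: float) -> float:
    """Relative dynamic power, P ~ C * V^2 * f, with capacitance C
    assumed proportional to the number of execution units."""
    return units * voltage ** 2 * clock_ghz


def relative_throughput(units: float, clock_ghz: float) -> float:
    """Idealized throughput: units times clock."""
    return units * clock_ghz


# Hypothetical narrow, fast design: fewer units, higher clock and voltage.
narrow_power = relative_dynamic_power(units=1.00, voltage=1.20, clock_ghz=1.0)
narrow_perf = relative_throughput(units=1.00, clock_ghz=1.0)

# Hypothetical wide, slow design: 25% more units, lower clock and voltage.
wide_power = relative_dynamic_power(units=1.25, voltage=1.05, clock_ghz=0.8)
wide_perf = relative_throughput(units=1.25, clock_ghz=0.8)

print(f"throughput  narrow={narrow_perf:.2f}  wide={wide_perf:.2f}")
print(f"power       narrow={narrow_power:.3f}  wide={wide_power:.3f}")
```

With these made-up values, both designs deliver the same idealized throughput, but the wide design uses less dynamic power because the lower clock permits a lower voltage, and voltage enters squared. The cost is a bigger die, which is the yield problem mentioned above.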

@Marfoo: Yeah, I know that we are saying the same things about their tech; I just disagree about the reason AMD is doing this.

What I was saying was that competition is good, but you don't f@ck your customers in order to get a better position in the launch-day benchmarks...

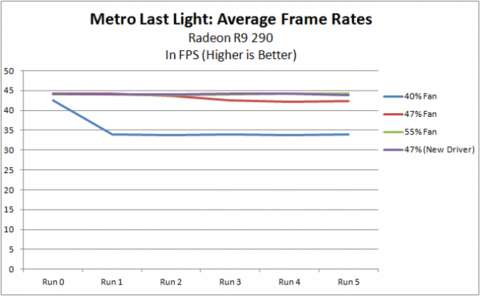

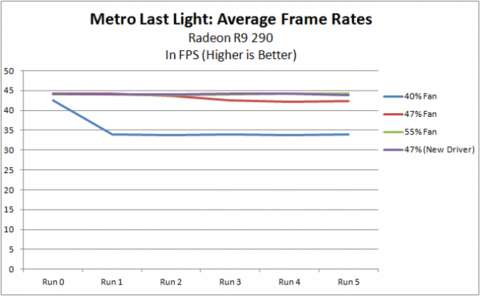

Many people who got the reference 290 and 290X were left with a GPU that was too loud, too hot, and, after a few minutes of gaming, not delivering the performance that was promised to them.

For R9-290, http://www.extremetech.com/gaming/170636-well-that-was-quick-amd-solves-r9-290-throttling-problem-with-a-new-driver

I have both Sapphire (100362SR) reference R9-290 and MSI Radeon R9 290X Twin Frozr OC.

The Cause Of And Fix For Radeon R9 290X And 290 Inconsistency

Retail Radeon R9 290X Frequency Variance Issues Still Present

The 2nd pic is from a 25-minute run of Metro: Last Light. No comment.

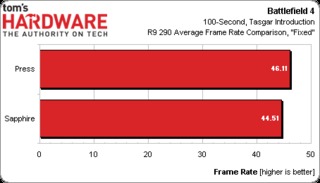

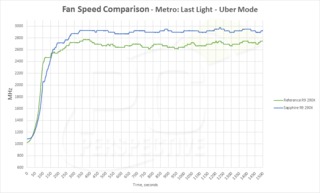

For the 3rd pic:

"However, what I found very interesting is that these cards did this at different fans speeds. It would appear that the 13.11 V9.2 driver did NOT normalize the fan speeds for Uber mode as 55% reported on both cards results in fan speeds that differ by about 200 RPM. That means the blue line, representing our retail card, is going to run louder than the reference card, and not by a tiny margin."

AMD should let people who bought a retail reference card exchange it for a non-reference one...

I have reference 290s in CrossFire and they never throttle.

There will always be an exception that proves the rule. You might have exceptionally good airflow in your case, and maybe others do too. But the majority don't.

Back in the days when only reference cards had been released, people were complaining all the time. Most of them wisely decided to wait and buy one with a custom cooler, and they didn't have any problems at all :).

I don't want to hijack this thread... but (lol) if you were looking to get a new GPU this year, would you get any of the current cards (780/290)? Or are we expecting a worthy release this year?

Not sure how it works with GPUs and release cycles, but is this a year with a full release, or more like a 1.5 release with just a few improvements, where it's worth waiting a year for the next step?

Nvidia has already released a chip (GM107) from their next architecture (Maxwell), and it looks really promising. They might release more of Maxwell in the upcoming months if they don't wait for 20nm.

On the other hand, AMD will definitely not release at 20nm this year, so if they have plans for a new GPU architecture this year, it should arrive at about the same time as Nvidia's release.

We expect both GPUs to fully support DX12.

A new architecture from Nvidia this year, if they release it. If they don't and you plan on buying an Nvidia card, wait another year... or however long it takes for Maxwell to be released. Preferably a GM110/210 chip and not a GM104/204 chip. That would be like GTX 680 vs GTX 780.

@Coseniath & @horgen: Ah ok, cool... the waiting goes on! And then I will have to wait for a non-reference card :)
