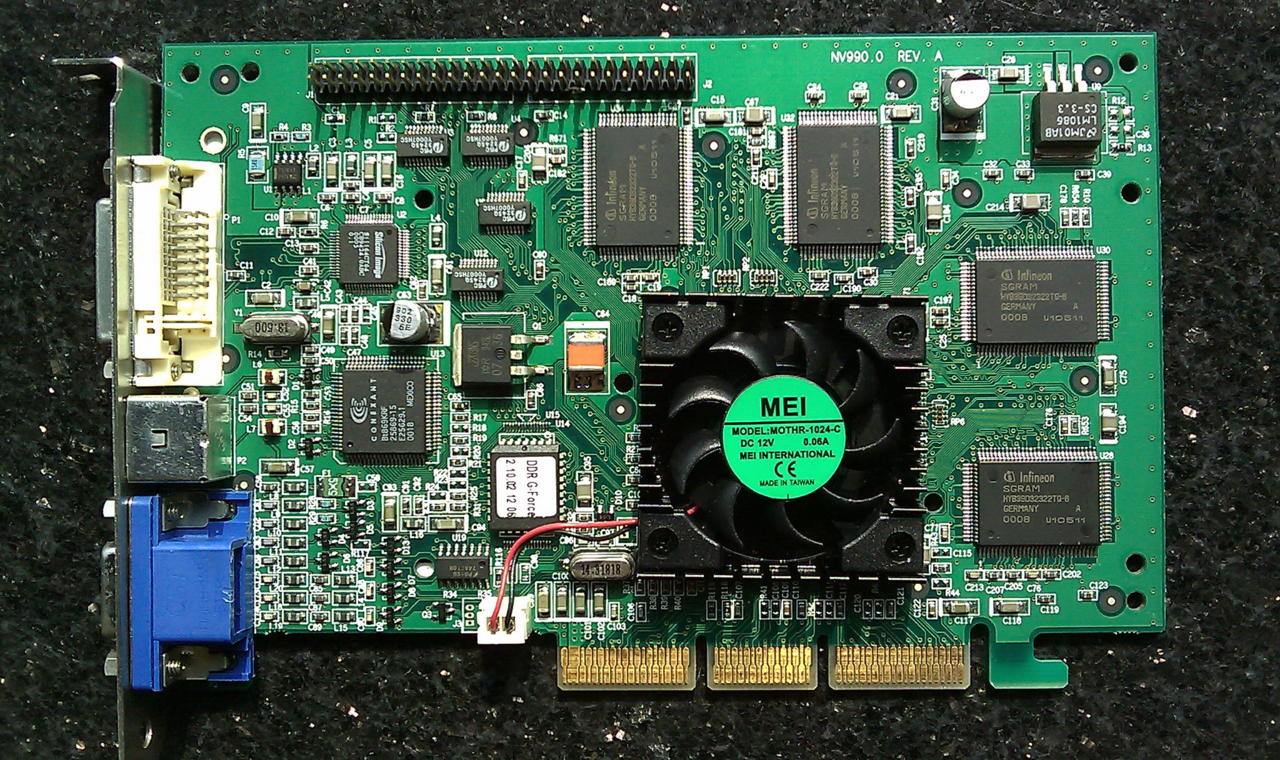

When it comes to picking a graphics card for your gaming PC, there are just two companies making cards that'll make your games look pretty and smooth: AMD and Nvidia. With capable GPUs from both companies going for as little as $100 these days, it's easy to take these marvels of pixel-pushing power for granted. But this commoditization of 3D graphics hasn't been the smoothest of journeys. Since the introduction of the groundbreaking $300 3dfx Voodoo back in 1996, a market that once saw over 20 different companies competing for 3D graphics domination has been reduced to just two major players (integrated graphics from Intel notwithstanding) by bankruptcies, buyouts, and ever-increasing research and development costs.

While AMD and Nvidia have co-existed somewhat peacefully over the past few years, in recent months both companies have become increasingly outspoken about each other's products, and neither is backing down. For the consumer, competition is usually a wonderful thing, pushing down prices and leading to innovations that just wouldn't have been possible without the added pressure of a plucky upstart to push things along. The GPU owes a lot to the sheer number of companies trying to take ownership of the growing 3D gaming market back in the late 90s. Indeed, without the likes of 3dfx, Matrox, and ATI rapidly innovating on 3D graphics, the first true GPU, Nvidia's GeForce 256, might never have happened.

From $300 GPU to $3000 GPU

"The GPU era was first brought on when we integrated geometry in the same chip with the GeForce 256, around 12 years ago or so," Nvidia's senior vice president of content and technology Tony Tamasi tells me. "Part of that geometry processing was traditionally done by the CPU, and that even included really big machines like Silicon Graphics' RealityEngine and things like that. They didn't integrate geometry processing onto the same chip as pixel processing; they had separate chips to do that. It was pretty common for 3D games to have a few dozen to a few hundred triangles, and post geometry-integration, that went up into the thousands. Today you have millions of triangles per frame. It's really changed the way scenes look. You have an enormous amount of complexity that you can generate in a real-time game. It wasn't conceivable to do, let's say, a tree, let alone entire forests like you have today, with limbs and leaves and mouldable polygons. It was completely inconceivable."

Today's GPUs are exponentially more powerful than the likes of the GeForce 256 or Nvidia's competitor at the time, the ATI Radeon DDR. The 64MB of video memory and 200MHz clock speeds of those old cards pale in comparison to the multi-gigabyte, dual-slot monsters at home in current high-end gaming PCs. Additions like programmable shading, tessellation (the ability for a GPU to create geometry on the fly), and PhysX have made games look incredibly sophisticated, and with 3D games dominating the market, the demand for ever-increasing levels of visual fidelity shows no sign of slowing down. But the GPU makers themselves don't drive all these advances, at least in Nvidia's case.

"We don't really know [what we're going to work on next]," says Tamasi. "We talk to everybody: we talk to game developers, we talk to API vendors, we talk to people in the film industry, and we talk to anyone who wants to push the state of the art in terms of real-time graphics. We ask them what things they're doing that are too hard, or too impractical, or that they really can't afford to do, and then we try to figure out a way to solve those problems. But there are things about GPU architecture that we know, or at least know much more deeply, than the rest of the industry does, and so we continue to advance that way too. For example, we've worked hard on antialiasing and sampling, because we know what's possible, and how to make it better. Everyone wishes there weren't crawlies, and aliased edges, and sparklies, so we just keep working to make that better, and in a way that we can all afford from a performance perspective."

"Who would have thought we'd be selling graphics cards to consumers that are $3000, or $1000?" continued Tamasi. "And there are a lot of them. And I think the reason for that is the demand has gone from a niche, with only a few games requiring 3D, to nearly every game requiring some form of 3D. But even more awesome is that the expectations of the visuals have risen just as much. If you go back to the nostalgia days and fire up something like GL Quake, and you're like 'wow, I can't believe I ever thought this was cool!' I mean, it was amazing at the time, but you look at it now, and you're like 'really?' If you compare a GL Quake to something like a Battlefield 4, it's just a night and day experience, and as good as games are these days, we're not even close to done. We've got so much more we need to do. If you compare games to really well done movies that have advanced CG, we've just got miles to go before we're done."

The Lighting Challenge

Real-time graphics that approach the visual fidelity of films might still be a ways off, but Nvidia and AMD are both working on new software technologies to make it happen. One of the most important aspects of creating convincing 3D visuals is realistic lighting. The challenge is not having an artist manually author realistic-looking lighting, but simulating how light interacts with, and bounces off, multiple surfaces (an approach known as path tracing) and doing it in real time. In the film industry, path tracing is done offline, because the calculations required are simply too demanding to run in real time. Epic's Unreal Engine 4 never used true path tracing, but it did at one point feature a real-time global illumination system called sparse voxel octree global illumination (SVOGI), which produced some impressive results. Just before the engine launched, however, the feature was taken out.

"We're not really at the point where it's practical to design a game around a fully ray-traced or path-traced renderer," says Nvidia's Tamasi. "Those renderers produce stunningly good-looking images, though. And the other nice thing about them is that they're physically based renderers, meaning you're literally just bouncing light into a scene and having it interact with surfaces, so it kind of addresses a bunch of other challenges. Shadows just kind of naturally fall out of that. But we're a long way from that being practically done in real time."

"What [Epic] was working on was sparse voxel octree global illumination, and we worked with them on it," continued Tamasi. "In fact, it was based on research we did. And it was kind of that next step towards a more advanced lighting model. It supported indirect lighting. It produced some very nice results, but it was very expensive. We understand why they took it out. It's a technology that's not quite ready, because you can't really put something into a game or an engine that would require a Titan. I mean, we would love that. Trust me, we would love it if every game Epic put out required a Titan, but there's a business everyone has to run too. We want the games industry to be super successful and super profitable so they can keep making games, and that means it needs to be able to sell a lot of them. But we're still working with Epic. Just because it wasn't in that release of UE4 doesn't mean we're not still working on it. And there are both hardware and software advances that will make that a reality. I'd say stay tuned and don't give up hope on that."

The World's Fastest GPU

Creating the hardware to power such advances is a huge technical challenge, and AMD and Nvidia have taken different paths in their quest for better hardware. For AMD, the focus has been on sheer brute-force rendering and compute abilities. Its R9 295X2 combines two of its notoriously hot R9 290X GPUs on a single board, adds water cooling, and ups the power consumption to a scarily large 500 watts. It's an approach that has let AMD steal the world's fastest GPU crown back from Nvidia, and at $1500, it's not bad value either, particularly when compared to Nvidia's $3000 Titan Z. It's an achievement AMD is understandably proud of.

"If you want to find the world's fastest PC card, which company do you go to for the GPU? You go to AMD," says AMD's game scientist Richard Huddy. "The 295X2 is clearly ahead of anything else you can pick up right now, and that’s a tremendous amount of innovation, a fantastic amount of density to the compute capabilities there. It’s driven by an understanding that the business is quite different from the way it was 20 years ago."

"Having the world's fastest GPU is a big deal actually," continued Huddy. "It shows you are capable of building something that is quite extraordinary. There are two things you're trying to do: one is to drive the future; the other is to make money in the present. Darren Grasby [AMD's VP of EMEA] is here primarily as a businessman. For Darren, this is about running the business of AMD, organising things, keeping things so that the business as a whole can survive. Without it, technology enthusiasts will be out of work. We need both. And absolutely, the technology is wildly exciting. It's the stuff that drives the experience for billions of users around the world. You need both. Being able to produce something which is the absolute high point of technology at that point in the industry is a way of showing you deserve to play in the future."

Nvidia's most recent attempt at building a dual-GPU card, the Titan Z, got off to a rocky start, with the product delayed after its initial announcement. And when it did finally materialise, its performance, while still impressive, couldn't quite topple the 295X2. Nvidia says its priorities in developing the card, air cooling and lower power consumption, were part of the reason for the delay.

"Frankly, we feel really good about Titan Z. We made a different set of choices to AMD; they chose water, we chose air. They chose more power, we chose less," says Nvidia's Tamasi. "I don't think either one is necessarily right or wrong. The way I think about it is, if you want the fastest product, not everyone is looking at $1000, or $1500, or $2000 products. And then there's the quality of the product. How well does it work? How well does it work in SLI? And especially towards the higher end, I don't think that it's true at any price point that it's strictly about performance per dollar. I mean, that's an important factor, but I don't think that's the only factor...in the end, I think we're pretty pleased we got that much performance into an air-cooled product at that power level. If you think about the GTX 750 or the Maxwell architecture, and how much performance that delivers in that power budget, and then you just kind of let your mind wander into the future for, you know, bigger versions of something like that, it's exciting."

"The biggest challenge, frankly, is no longer being able to build a fast GPU," continued Tamasi. "In fact, the GPU industry figured that out a while ago when we started building these scalable architectures that allowed us to build really big GPUs. If you look at the GPU industry now, we're building the biggest, most complex processors on the planet, bigger than anybody else, because we figured out how to build really scalable architectures. Since graphics can consume a near-infinite amount of horsepower, we build as big as the process and market will allow us. The biggest challenge is power. While the market has shown a willingness to pay for more expensive GPUs, people don't want to have to watercool everything, or have 19 fans and make things really loud. Power is the big challenge. It used to be, 10 or 20 years ago, that the semiconductor process would largely address that. That's just really no longer true."

The Great GameWorks Debate

One of the most notable recent changes in the GPU market has been the rise of Nvidia's GameWorks and AMD's Mantle, which both companies are using to push performance forward alongside developing better GPUs. Nvidia's GameWorks is a set of visual effects, physics effects, and tools designed to give developers more options when it comes to a game's visuals. Effects like HBAO+, TXAA, and FaceWorks are all part of GameWorks. AMD's Mantle is an API that competes with OpenGL and DirectX, giving developers console-like, low-level access to the GPU that cuts down on CPU overhead. By removing some of the software layers between the game and the hardware, developers with the right programming chops can squeeze out extra performance. Both technologies are sound in their efforts to push the performance and visual fidelity of 3D games, but there's a philosophical difference in how they are being implemented. AMD claims its Mantle API is open and free to use, while simultaneously bashing Nvidia's GameWorks for being more proprietary in nature.

"Can it be conceivably possible for one hardware company to write code which the other company has to run in a benchmark?" asks AMD's Huddy. "Doesn't sound to me like a great way of running the business. But the truth is, GameWorks creates that situation...AMD has never supplied its SDK in a form other than source code, because that isn't what game developers want. They all want source code; they never want anything else. I have never in my entire experience working with games developers, since 1996, been asked for a DLL, a black box, a chunk of compiled code that you can't edit. As a games developer you want to edit it. There's no doubt that being able to edit it, fix it, debug it, understand it, tweak it, and make it more efficient on their chosen platform is what they want."

"That's not what is happening with GameWorks. I repeat, it is not happening with GameWorks," continued Huddy. "Under GameWorks, Nvidia have said that since April 1st they have become willing to supply the source code to some game developers under certain conditions. We are forbidden from access to that. I believe Nvidia publicly said that games developers are not allowed to share the source code with us. Certainly that's what we hear from games developers. And in the majority of cases, game developers don't have access to the source code. So you can see the structure of what has been built here. Nvidia have been able to create code which sits inside a game, which the game developer can't edit, because there's no source code there in the majority of cases. Even if there was, AMD couldn't edit it, because developers aren't allowed to share the source code with us even if they had access to it, so it's code AMD can't optimise for in a reasonable way. That's the situation we're confronted with; it's as bad as that...I can show you cases where, for example in Call of Duty: Ghosts or in Batman: Arkham Origins, Nvidia is writing code which is monstrously inefficient, hurting the performance of their own customers and ours, and they're doing it just to harm us in benchmarks. That's the way I read it."

It's tough to say how many of Huddy's comments are accurate, and how many are just being thrown out into the wild to stir up some good old-fashioned fanboy flame wars between the two companies. However, AMD does have some reason to be concerned. Speaking to ExtremeTech, many developers, including Unreal Engine developer Epic, weighed in on AMD's comments about GameWorks. They agreed that developers will always want source code in order to optimise, and that GameWorks libraries make it more difficult for AMD to do so. But they also said that while AMD has a legitimate reason to be concerned about GameWorks, there's little evidence to suggest that Nvidia is using the technology to intentionally harm AMD, even if that could conceivably change in the future.

"Yeah, they've said a lot of things that I guess we would classify as curious," says Nvidia's Tamasi when I put AMD's comments to him. "And I guess they're spending a lot of time talking about us. At the high level, maybe they'd be better off spending some time working on their own stuff. The comments about GameWorks being closed we do find a little bit interesting, because we do provide the source code for developers, and most of those effects do run on the competition, and we do spend a great deal of time making sure they run well on all platforms, because that's what is in the best interests of the industry."

"Now, they've been running around basically saying that '[Nvidia] could make it bad, or they could do this, or they could do that,' and yeah, we could do a lot of things, which would be stupid and in the worst interest of game developers," continued Tamasi. "And if we did, game developers wouldn't use it. They're gonna try to spin up whatever story they can, and their mantra of 'open, open, open' rings hollow for me, because Mantle isn't open. Intel have asked for code, we've asked for it, and there's no open spec, so that's not an open standard. I think that's kind of ironic. And they keep claiming that they put all the source code for their effects out, but that's not really true either. When they did TressFX hair in Tomb Raider, they didn't publish the source code for that until well after the game had been released."

"They tend to use that open mantra, and I can understand why, but from our perspective we invest an enormous amount in innovation, not only in our hardware but in our software and algorithms. And we do that for the benefit of the game industry, not for the benefit of AMD. They try to make these claims that if they don't have source code they can't optimise, but that's just not true. That hasn't been true for the last 20 years and it's not true now. We don't generally get the source code for the games or the effects that AMD works on, and yet we do everything we can to make sure the experience for every game is great on Nvidia. We don't complain about it; that's just the way it is."

TressFX and Mantle

This back-and-forth between the two companies is a strange development, particularly as I remember the days of attending Nvidia briefings when the company wouldn't even mention AMD's name during benchmark comparisons. But it's somewhat understandable given the performance problems both companies have faced in specific games. Most recently, AMD faced issues with Ubisoft's Watch Dogs, with even its most powerful GPUs unable to match the performance of Nvidia's until it released a driver update. Meanwhile, Nvidia faced issues with AMD's TressFX technology in Tomb Raider, with frame rates taking a tumble when it was enabled. Nvidia blamed a lack of source code for the problem. Clearly, these issues aren't great for consumers, but neither company is willing to take the blame for the problems people have experienced.

"The [TressFX] source code was given to the game developer," says Huddy. "I know because I talked to the guy who supported the developer on this. The source code was available to Nvidia two and a half weeks before the game was released. It took Nvidia about another six weeks to fix stuff, and the real blunt answer is because Nvidia's compute shader support was shoddy in its driver. It's not because we had something funny going on in the code; they should have been able to run that code on their driver. Their driver was simply broken. They fixed the driver, but we gave source code to the game developer all of the way through, and I know because I looked at the contract. I asked for this to make sure I wasn't walking into a place where I would regret saying some of these things. I had looked at the contract and I know the contract does not block Nvidia's access."

As for Nvidia's comments on Mantle, Huddy went on the offensive again. "The distinction between Mantle and GameWorks is this. With GameWorks, you can create a benchmark that your competitor, AMD, has to run, which is wrong. With Mantle, there isn't the slightest possibility of that. With all of the work that we do on Mantle, the worst thing we can do is fail to service our DX11 drivers as well as we might be able to. It is possible, because we write both our own DX11 drivers and the Mantle spec, for us to fail DX11 users by not putting enough work into the DX11 driver, but there is no way we can write any code that harms Nvidia. It's not physically possible, because the Mantle spec and the Mantle API we wrote are presently for AMD hardware only. Moreover, if we contribute to an open standards body or to an API owner like Microsoft, then they produce DX12 (and we have taken them through the Mantle specs, and that's one of the reasons why DX12 is going to be as good as it is), and they implement it in a way that is equitable for all vendors."

"We've said publicly that in 2014 we will publish the Mantle SDK, which will give people the ability to build a Mantle driver," continued Huddy. "In principle, that would mean Nvidia could pick up Battlefield 4, Thief, or any of the other 12 or 13 games we expect to be released over this year which use Mantle, and they'll be able to have a Mantle driver and they'll simply run that app faster. Now I think Nvidia's pride will get in the way of them doing that, and they'd rather wait until DX12. But if its interest was really in delivering the best value to the consumer, they should seriously consider it. I think pride is a real problem, and maybe even we would struggle to pick up a competitor API in that kind of way. But the truth is if it was about delivering the best experience for consumers they would say, 'you know what, I can do something good with it.' They could do good for their own consumers with Mantle, and we can't do any harm. I see that as profoundly different. It's a really different situation. I find it hard to see how anyone could be confused into thinking 'wait a minute, AMD are using Mantle to favour their hardware, Nvidia are using it to favour their hardware.' It isn't the same kind of thing at all."

The Future

So what's next for these two giants of computer graphics? AMD's recent $36 million loss hasn't exactly had investors lining up to buy shares in the company, and the x86 side of the business means that it's fighting against not just Nvidia, but market leader Intel too. But when it comes to its GPU business, despite some excellent products at tempting price points, the company still faces a perception problem. Jump onto any article about AMD or Nvidia and you'll likely find a comment about AMD's poor drivers or shoddy hardware support. Overcoming that now-ingrained perception is a tall order, even for a company with millions of marketing dollars to spend.

"I think we've been suffering from the year 2000 to be honest," says AMD's Huddy. "This is a legacy of opinion which is not justified. Before you arrived, we were talking together about what we are doing about dispelling that. It's a myth; it's not the case. The fact is, it takes an awful amount of time to fix reputation for drivers. It stays around. It's the ‘halo effect’ in reverse. I believe there's a lot of independent research which shows AMD drivers are really solid, and I believe we will be able to come up with research that proves AMD drivers are better, and I believe we'll be able to do that."

"There is this oddity," continued Huddy. "Nvidia can produce a driver for a GameWorks title which comes out on the day it is released, and it will raise the performance, and you'll think, 'hey, nice one Nvidia, good job.' Interestingly, it can happen because they've optimised a piece of broken code that they supplied to a games developer. Slightly worrisome again. They get the credit for fixing the broken code they supplied, and we get criticised for our failure to fix the broken code that was supplied to us at very short notice. It's a real problem going on here, so let's name names and get it sorted."

As for Nvidia, it's making further inroads into ARM. The company recently launched its 32-bit Tegra K1 ARM SoC, with a 64-bit version featuring its custom Denver CPU arriving later this year. Nvidia has also branched out into its own consumer devices, with the company hoping that its Shield Portable and the recently released Shield Tablet do for mobile gaming what its desktop GPUs have done for PC gaming. Its desktop GPUs aren't going away anytime soon (a new range of flagships is rumoured to be announced very soon), but Nvidia is confident that mobile is going to play a bigger part in its business in the future.

"At some point, you could envisage a future where the two markets [PC and mobile] converge, or at least the lines blur between the two, and maybe really the only difference is power consumption," says Nvidia's Tamasi. "In fact, architecturally we've been working that way. If you look at K1, that's Kepler, and that's what a Titan is built on. The fundamental difference there is power. From a developer perspective, we're trying to put the infrastructure in place that lets them do that."

"Do I think that a phone game and a PC game are going to be the same game?" continued Tamasi. "Hard to say. If nothing else, the way you interact with the device is different, and the way people game on phones today tends to be different, or there's a different playing time. Who's to say the future isn't that you sit down, you put your phone on the table, it wirelessly pairs with a keyboard and mouse and display, and you're playing the next-gen version of your favourite game in that way. Is the market going to evolve that way? Don't know, but we're doing everything we can to make that happen, because if it does, that's great for Nvidia."