Apparently, Xbox One has bricked its ACE units and second render unit.

From http://www.eurogamer.net/articles/digitalfoundry-microsoft-to-unlock-more-gpu-power-for-xbox-one-developers

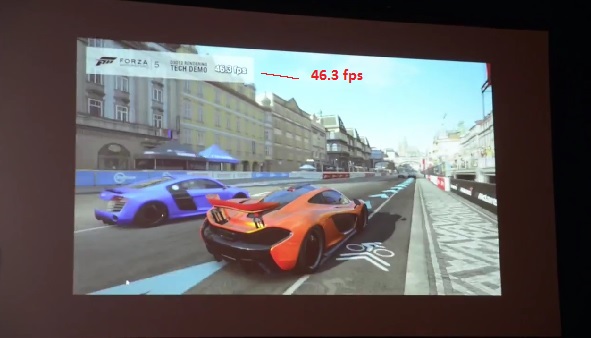

"In addition to asynchronous compute queues, the Xbox One hardware supports two concurrent render pipes," Goossen pointed out. "The two render pipes can allow the hardware to render title content at high priority while concurrently rendering system content at low priority. The GPU hardware scheduler is designed to maximise throughput and automatically fills 'holes' in the high-priority processing. This can allow the system rendering to make use of the ROPs for fill, for example, while the title is simultaneously doing synchronous compute operations on the compute units."

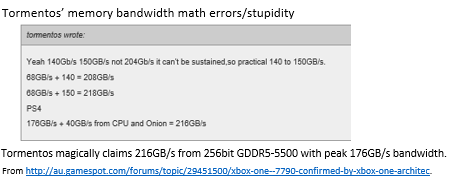

5. Fill rate is a function of both ROPs and memory writes. The fact that the 7950's 32 ROPs at 800 MHz with 240 GB/s > PS4's 32 ROPs at 800 MHz with 176 GB/s shows that PS4's 32 ROPs are not being fully used.

7. Against your POV. Read http://gamingbolt.com/xbox-ones-esram-too-small-to-output-games-at-1080p-but-will-catch-up-to-ps4-rebellion-games

Bolcato stated that, “It was clearly a bit more complicated to extract the maximum power from the Xbox One when you’re trying to do that. I think eSRAM is easy to use. The only problem is…Part of the problem is that it’s just a little bit too small to output 1080p within that size. It’s such a small size within there that we can’t do everything in 1080p with that little buffer of super-fast RAM.

“It means you have to do it in chunks or using tricks, tiling it and so on. It’s a bit like the reverse of the PS3. PS3 was harder to program for than the Xbox 360. Now it seems like everything has reversed but it doesn’t mean it’s far less powerful – it’s just a pain in the ass to start with. We are on fine ground now but the first few months were hell.”

...

The PS4 is thus more of a gaming machine in its core focus. “Yeah, I mean that’s probably why, well at least on paper, it’s a bit more powerful. But I think the Xbox One is gonna catch up. But definitely there’s this eSRAM. PS4 has 8GB and it’s almost as fast as eSRAM [bandwidth wise] but at the same time you can go a little bit further with it, because you don’t have this slower memory. That’s also why you don’t have that many games running in 1080p, because you have to make it smaller, for what you can fit into the eSRAM with the Xbox One.”

One of the big differences between X1 and Xbox 360 is the connection bandwidth between the embedded memory and the GPU.

AMD is just interested in selling more GPU products.

Radeon HD 7950's 32 ROPs results > PS4 says hi.

If X1's 16 ROPs were doubled to 32 ROPs, they wouldn't be fully used with memory bandwidth around 150 GB/s.

If we use Radeon HD 7950 as the top 32 ROPs example for 3DMark Vantage's fill rate score, i.e.

240 GB/s = 32 ROPs at 800 MHz, and we divide it by 2, we get 120 GB/s = 16 ROPs at 800 MHz. X1's 16 ROPs are clocked at 853 MHz, so 120 GB/s x 853/800 = 127.95 GB/s. Here we establish that 16 ROPs can go further than what 7790/R7-260X shows.
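The scaling above can be sketched in a few lines of Python. This is only a rough illustration of my own arithmetic: it assumes fill rate bandwidth scales linearly with ROP count and clock, using the 7950 (32 ROPs, 800 MHz, 240 GB/s) as the reference point. The function and constant names are made up for this example.

```python
# Reference point taken from the post: Radeon HD 7950,
# 32 ROPs at 800 MHz paired with 240 GB/s of memory bandwidth.
REF_ROPS = 32
REF_CLOCK_MHZ = 800
REF_BANDWIDTH_GBS = 240.0


def matched_bandwidth_gbs(rops, clock_mhz):
    """Bandwidth that keeps `rops` at `clock_mhz` as well fed as the reference.

    Assumes simple linear scaling with ROP count and clock, which is a
    simplification and not a measured result.
    """
    per_rop_per_mhz = REF_BANDWIDTH_GBS / (REF_ROPS * REF_CLOCK_MHZ)
    return rops * clock_mhz * per_rop_per_mhz


if __name__ == "__main__":
    print(round(matched_bandwidth_gbs(16, 800), 2))  # 16 ROPs at 800 MHz
    print(round(matched_bandwidth_gbs(16, 853), 2))  # X1: 16 ROPs at 853 MHz
```

Under that assumption, anything well below the X1 figure (for example 7790's 96 GB/s) is bandwidth limited before the 16 ROPs are.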

7790's 16 ROPs at 1 GHz are gimped by 96 GB/s memory bandwidth, which places it lower than 7850. The fastest known 12 CU equipped GCN (1.32 TFLOPS) with 153 GB/s memory bandwidth is the prototype 7850 with 12 CU. Read http://www.tomshardware.com/reviews/768-shader-pitcairn-review,3196.html Note that the prototype 7850 (with 12 CU) is slower than the retail 7850 (with 16 CU).

The big difference between the prototype 7850 with 12 CU (1.32 TFLOPS) and Xbox One (1.32 TFLOPS) is hitting the ~153 GB/s memory bandwidth level, i.e. Xbox One needs "tiling tricks" for effective eSRAM usage.

After all the software tricks, PS4 is still faster than Xbox One, i.e. the comparison is like R7-265 (1.89 TFLOPS + 179 GB/s memory bandwidth) > prototype 7850 with 12 CU (1.32 TFLOPS + 153.6 GB/s memory bandwidth).

Battlefield 4 and R7-265 vs 7850 review from http://www.guru3d.com/articles_pages/amd_radeon_r7_265_review,14.html

Remember,

1. prototype 7850 (1.32 TFLOPS + 153.6 GB/s) is slower than 7850 (1.76 TFLOPS + 153.6 GB/s).

2. 7850 (1.76 TFLOPS + 153.6 GB/s) is slower than R7-265 (1.89 TFLOPS + 179 GB/s).
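For anyone who wants to check the TFLOPS figures in the two points above, here is a minimal sketch using the usual GCN peak formula: CUs x 64 lanes x 2 FLOPs per clock (one FMA) x clock. The clock speeds (860 MHz for both 7850 variants, 925 MHz for R7-265) are not stated in this post; they are assumptions taken from the public spec sheets, and the function name is made up for this example.

```python
def gcn_tflops(compute_units, clock_mhz):
    """Peak single-precision TFLOPS for a GCN GPU.

    Each CU has 64 lanes and each lane can retire one fused multiply-add
    (counted as 2 FLOPs) per clock. This is a theoretical peak only.
    """
    lanes_per_cu = 64
    flops_per_lane_per_clock = 2
    return compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_mhz / 1e6


# (name, CUs, clock in MHz, memory bandwidth in GB/s)
# Clocks are assumed from public specs; bandwidth figures are from the post.
cards = [
    ("prototype 7850 (12 CU)", 12, 860, 153.6),
    ("7850 (16 CU)", 16, 860, 153.6),
    ("R7-265", 16, 925, 179.0),
]

if __name__ == "__main__":
    for name, cus, clock, bandwidth in cards:
        print(f"{name}: {gcn_tflops(cus, clock):.2f} TFLOPS + {bandwidth} GB/s")
```

Both the compute and bandwidth numbers climb in the same order as the list above, which is the ordering the benchmarks show.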

Radeon HD 7770 (1.28 TFLOPS)/7790/R7-260X don't have the software options to exceed their lower memory bandwidth limits, i.e. they are missing the eSRAM booster.
