@04dcarraher said:

1. Not talking about the "resolution parity", just the CPU causing the 30 fps cap, as with many new games.

2. No, actually CPU usage can go up. Lowering the resolution of a game or program increases the load on the CPU. As the resolution drops, less strain is placed on the graphics card because there are fewer pixels to render, and the strain shifts onto the CPU. At lower resolutions, the frame rate is limited by the CPU's speed: the GPU can render each frame faster, which speeds up the fps, so it becomes about how quickly the CPU can send those frames to the GPU.

3. It's not damage control, you cow; the X1 and PS4 CPUs just do not have enough ass to do everything. They will need to transfer tasks to the GPU if they expect to get more complex worlds.

1- I didn't argue about the frames.

2- Again, lowering your resolution will not free CPU time; if you are CPU bound at 1080p, you will be at 900p as well. It is a fact, not my opinion: being CPU limited has two fixes, increase the CPU power or lower CPU usage, and you don't lower CPU usage by lowering the resolution, which is something that rests totally on the GPU.

Rendering more pixels isn't the issue here, since you are still bound by 1920x1080 whether you have 1 NPC or 300 NPCs; the pixel count stays the same. If you are CPU bound at 1080p because you have 200 dancers, you still have 200 dancers at 900p, and the one doing the pixel rendering is the GPU, not the CPU in any way.
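To put rough numbers on that argument, here is a minimal sketch in Python. It assumes a simplified model that is not from any real engine: CPU and GPU work in parallel, the frame takes as long as the slower of the two, CPU cost does not depend on resolution, and GPU cost scales linearly with pixel count. The function names and all millisecond figures are hypothetical.

```python
BASE_PIXELS = 1920 * 1080  # reference resolution for the GPU cost

def frame_time_ms(cpu_ms, gpu_ms_at_1080p, width, height):
    """Frame time under the assumed model: the slower of CPU and GPU wins."""
    # Assumption: GPU cost scales linearly with the number of pixels.
    gpu_ms = gpu_ms_at_1080p * (width * height) / BASE_PIXELS
    # Assumption: CPU cost (AI, NPCs, draw calls) ignores resolution.
    return max(cpu_ms, gpu_ms)

def fps(cpu_ms, gpu_ms_at_1080p, width, height):
    return 1000.0 / frame_time_ms(cpu_ms, gpu_ms_at_1080p, width, height)

# Hypothetical CPU-bound case: 40 ms of CPU work, 25 ms of GPU work at 1080p.
cpu_bound_1080 = fps(40.0, 25.0, 1920, 1080)
cpu_bound_900 = fps(40.0, 25.0, 1600, 900)

# Hypothetical GPU-bound case: 10 ms of CPU work, 40 ms of GPU work at 1080p.
gpu_bound_1080 = fps(10.0, 40.0, 1920, 1080)
gpu_bound_900 = fps(10.0, 40.0, 1600, 900)

print(cpu_bound_1080, cpu_bound_900)   # same fps at both resolutions
print(gpu_bound_1080, gpu_bound_900)   # fps rises when resolution drops
```

Under these assumptions, dropping to 900p only helps when the GPU is the slower side; in the CPU-bound case the frame rate does not move, and the GPU just sits idle for longer each frame.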

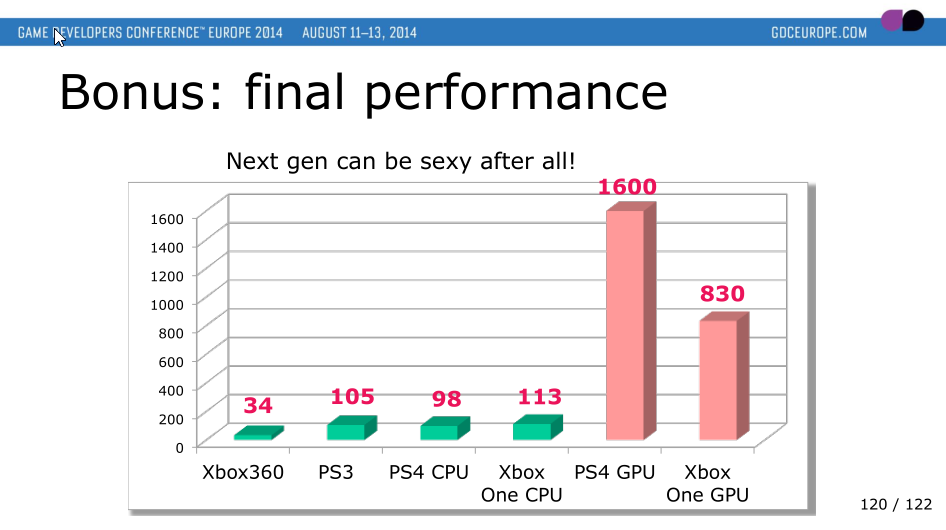

3- Yes it is damage control, and UBI has a chart which shows the PS4 destroyed the Xbox One on the GPU side. They could have done it; they couldn't care less because this is a yearly milking game. The fact that Ubi admitted that it held back the PS4, and the fact that Ubi hid settings on PC to not shame consoles, prove my point: the issue wasn't the PS4, it was UBI.

Hell, an employee was saying yesterday that the frame difference was 1 or 2 frames so it got locked at 30 FPS. Yeah, like an R7 265 will have a 2 frame advantage over an R7 250X.. lol

@Cloud_imperium said:

@cantloginnow said:

It's funny, the hermits, lems and sheep all accept the limitations of console hardware and really don't give a shit for the most part, but the Cows are completely obsessed and in complete denial, making fools of themselves every day. You guys need to let it go. It's not something you can win and no one really gives a shit. If I owned a PS4 I'd be bitching more about how big of a disappointment this console and its crappy library is than about what resolution a game is running at.

Sales and resolution, you guys really have nothing else going on on the PS4, do you?

I don't blame them. They have been brainwashed by Sony's clever marketing since the "teh powah of cell processor" days.

You are an idiot, even more so considering that you defend the Xbox One and hide on PC. That dude claims lems accept the limitations of hardware; in what fu**ing forum do they do that? Because here they don't. Have you seen the last few threads about this game? All are a war because lemmings refuse to admit the Xbox One is a weaker console; from the magic DX12 API to the hidden GPU arguments by blackace, it is a joke. Some of you are just too blind.

@MK-Professor said:

And the solution to that is to increase the res in order to fully utilize the GPU without any fps loss, but Ubisoft for some inexplicable reason didn't do that. That is why people are complaining.

This and 100 times this...

And you know we basically never agree. Some people are playing blind: UBI hid PC settings in Watch Dogs so the console version wouldn't look even worse after the whole downgrade crap on PS4. This company fu**ed up.

They had a parity clause with MS, which is probably what is preventing them from having a 1080p version on PS4.
