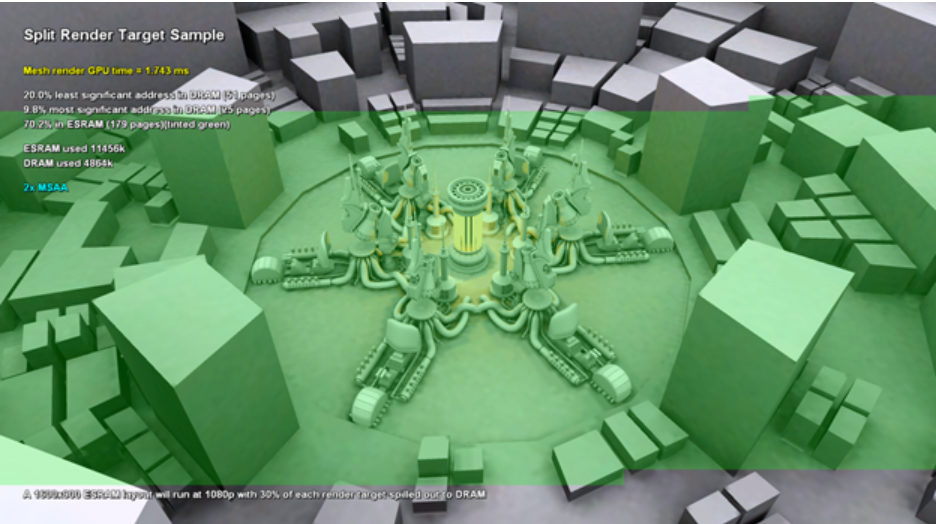

Whatever the PS4 is "losing" in cycles from its slightly slower processor is more than made up for in memory performance by its unified memory addressing architecture. If you are bottlenecked on compute performance, it is because you have not optimized your engine for the CUs.

Seeing as how this is a multiplatform game from a small developer, these things come as no surprise. What also doesn't come as a surprise is that, once again, an Xbox fanpuppet is making a big deal about something no one is actually giving a flying crap about.

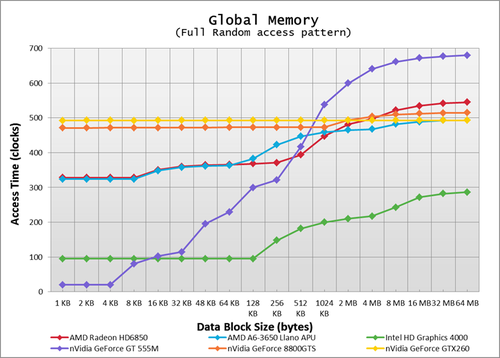

The memory does nothing for the PS4 besides increasing GPU performance; the latency in GDDR5 is actually worse for CPU calculations.

It's like @04dcarraher says: the longer we're in this gen, the more prevalent the CPU advantage will become. The X1 has already been optimized by dropping the resources reserved for the Kinect and unlocking that 7th core. DX12 won't make the X1 run games at 1080p, but the framerate difference will grow, making the powerpoint station 4 do what it was born to do.

and that is making full hd slideshows lmao
