Digital Foundry article on the 6GB requirements. Mostly pointing out stuff we already knew, but there's some new stuff too:

http://www.eurogamer.net/articles/digitalfoundry-2014-eyes-on-with-pc-shadow-of-mordors-6gb-textures

"PS4 is basically a match for the high quality setting on PC, which requires a graphics card with 3GB of RAM for best performance. Ultra is a small improvement over high based on the quality of the ground texture here, while medium looks a little blurry."

That's interesting. Having tested the medium and high textures, it didn't look that blurry on my TV, even in borderless mode (stupid v-sync). But it's interesting to find out the PS4 is running on high textures. Making use of that shared memory pool, I guess.

Still, that's for textures. The rest is most likely pulling the "1 setting below max on PC" thing. But cool nonetheless.

"One thing we should point out is that it is perfectly possible to run higher-quality artwork on lower-capacity graphics cards. However, you quickly fall foul of the split-memory architecture of the PC. On Xbox One and PS4, the available memory is unified in one address space, meaning instant access to everything. On PC, memory is typically split between system DDR3, and the graphics card's onboard GDDR5. Running high or ultra graphics on a 2GB card sees artwork swapping between the two memory pools, creating stutter. Shadow of Mordor has an optional 30fps cap incorporated into its options, though - with a 2GB GTX 760, we could run the game at ultra settings with high quality textures and frame-rate was pretty much locked at the target 30fps with only very minor stutter. In short, there's a way forward for those using 2GB cards, but it does involve locking frame-rate at the console standard - and the ultra textures didn't play nicely with the card, even at 30fps."

Hm, looks like games might actually start ass pulling 3GB for textures from now on. Seems stupid to me. So far, Wash Doge and SoM (TF too? Don't remember that one) only use them for texture quality.

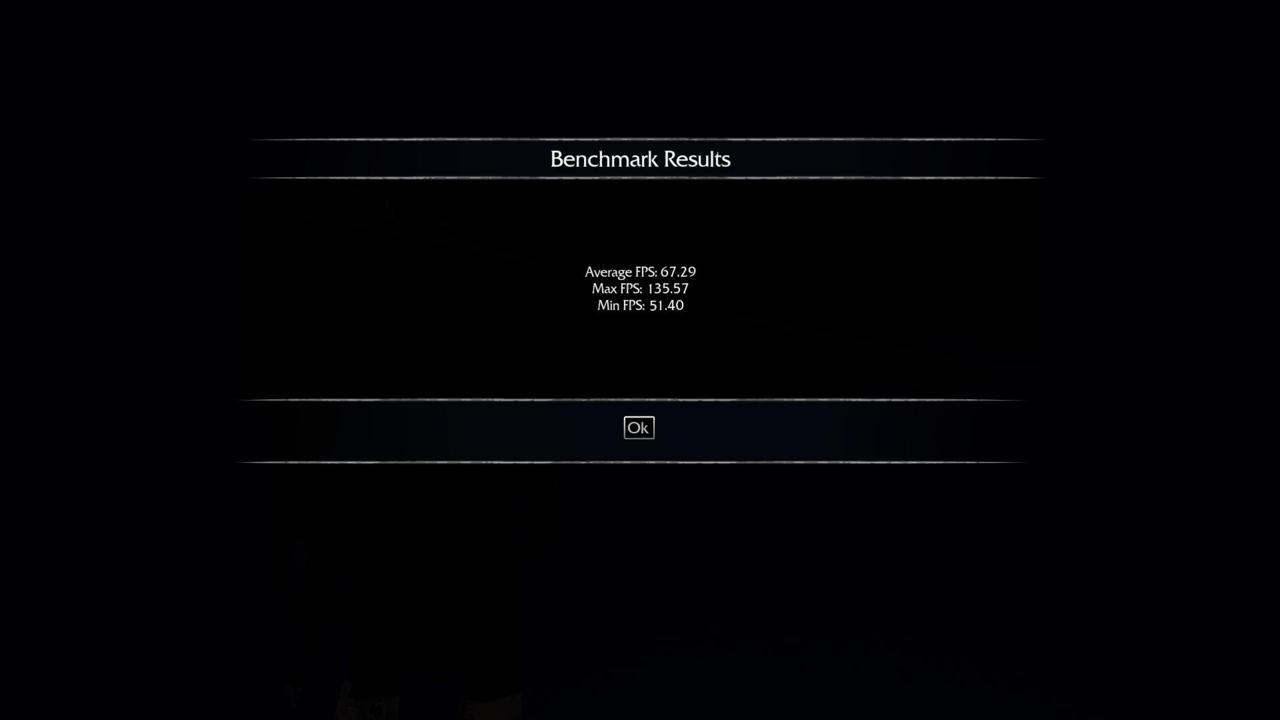

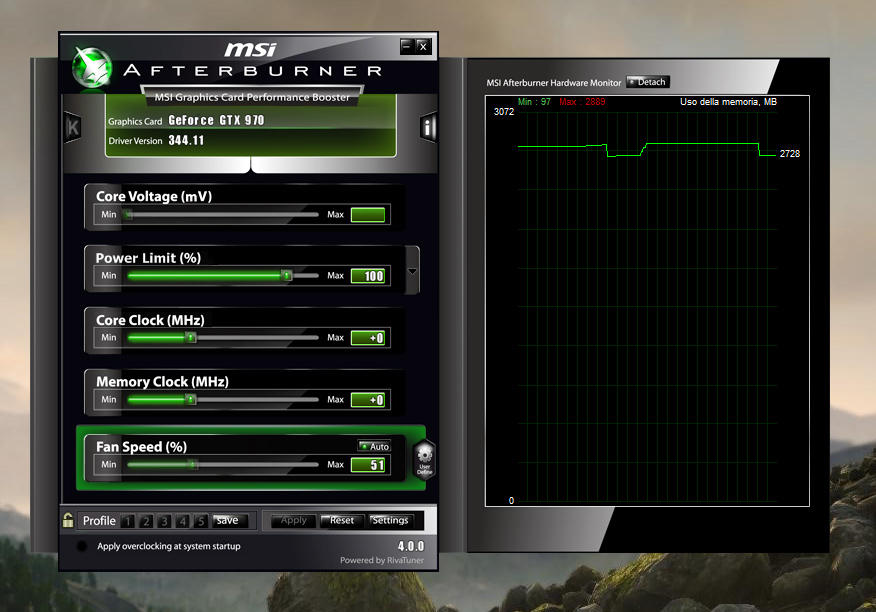

So, we have to run those below max but can max the others out. Ironically, I have a 2GB 760 too. I was getting around 46fps with those settings (fucking v-sync). I capped it at 30, but will see about 60fps today. Stuttering was mostly absent, especially compared to WD.
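For anyone wondering why the 30fps cap would hide stutter, here's a rough back-of-the-envelope sketch using the 46fps figure above. It assumes the card takes a steady amount of time per frame, which is a simplification, and `frame_time_ms` and `slack` are just names made up for this example:

```python
def frame_time_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

# Assumption: ~46fps uncapped means roughly this much render time per frame.
uncapped = frame_time_ms(46)
# Per-frame budget with the 30fps cap on, and what a 60fps target would need.
capped = frame_time_ms(30)
target60 = frame_time_ms(60)

# Spare time per frame under the cap, which can absorb an occasional hitch.
slack = capped - uncapped

print(f"Render time at 46fps: {uncapped:.1f} ms")
print(f"Budget at 30fps cap:  {capped:.1f} ms (slack: {slack:.1f} ms)")
print(f"Budget at 60fps:      {target60:.1f} ms")
```

So under the cap there's roughly 11-12 ms of spare time per frame, while a 60fps target leaves no headroom at all at these settings, since ~21.7 ms of work doesn't fit in a ~16.7 ms budget.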

So, brute GPU power is still more important, but VRAM requirements might annoyingly go up from now on. Still, if it's just the textures, that's fine with me.
