[QUOTE="Chozofication"]And that 8900 series GPU.... The 8970 OEM (a renamed 7970 GHz Edition, aka Tahiti XT2) has about 4.3 TFLOPS and is a >200 watt chip. You are looking at a Silverstone Mini-ITX SG07/SG08/SG09/SG10 level box with a matching power supply.

Lol 4.2 teraflops and ray tracing.

clyde46

Official ALL NextGen rumors and speculation (PS4, 720, Steambox)


[QUOTE="clyde46"][QUOTE="Chozofication"]And that 8900 series GPU.... The 8970 OEM (a renamed 7970 GHz Edition, aka Tahiti XT2) has about 4.3 TFLOPS and is a >200 watt chip. You are looking at a Silverstone Mini-ITX SG07/SG08/SG09/SG10 level box with a matching power supply. So it's not going to be a regular GPU then?

Lol 4.2 teraflops and ray tracing.

ronvalencia

[QUOTE="clyde46"][QUOTE="Chozofication"]And that 8900 series GPU....Lol 4.2 teraflops and ray tracing.

04dcarraher

No 8850 and a 8950 duct taped together! :P

The 8950 (a renamed 7950 Boost Edition) would need a case similar to Silverstone's Mini-ITX SG07/SG08/SG09/SG10 cases, with a matching power supply.

Microsoft can sell low end microwave oven without a magnetron ray-gun.

A side note: I was wondering what the fastest card from AMD is and noticed they have the HD 7990 (which I had never heard of before now, but that thing is a beast).

ya, it's a 2-GPU card. I've had the older HD 6990 for the past two years and was wondering why the 7990 didn't come out in March last year (similar to the 6990 release date), so I assumed they weren't releasing one for the 7000 series until I saw that now. (I'm checking the benchmarks now.)

most powerful ATI card f*ck calling em amd is the dual 7970 gpu bs

cryemocry

P.S. the 6990 is really a good video card, it runs almost everything on very high settings, even today's games.

[QUOTE="cryemocry"]u jelly? Nah, just does not understand that "with great power comes a greater amount of power and cooling required."

most powerful ATI card f*ck calling em amd is the dual 7970 gpu bs

MonsieurX

[QUOTE="cryemocry"]ya, I have had the HD 6990 for the past two years and was wondering why the 7990 didn't come out in March last year (similar to the 6990 release date), so I assumed they weren't releasing one for the 7000 series until I saw that now. (I'm checking the benchmarks now.) P.S. the 6990 is really a very powerful card; it runs almost everything on high details, even today's games.

And two 7970s would need 400 W or more and 2x the cooling of a single 7970. Your 6990 is two downclocked 6970s that need 375 W. Your GPU alone uses more than 2x the power draw of the original 360.

most powerful ATI card f*ck calling em amd is the dual 7970 gpu bs

Mystery_Writer

I remember when people thought Sony was going to use a Cell 2.0, I actually thought they would too. I guess Sony finally saw it as a waste of money but it's looking like the overall rendering setup this time will be similar.

[QUOTE="Mystery_Writer"][QUOTE="cryemocry"]ya, I have had the HD 6990 for the past two years and was wondering why the 7990 didn't come out in March last year (similar to the 6990 release date), so I assumed they weren't releasing one for the 7000 series until I saw that now. (I'm checking the benchmarks now.) P.S. the 6990 is really a very powerful card; it runs almost everything on high details, even today's games.

And two 7970s would need 400 W or more and 2x the cooling of a single 7970. Your 6990 is two downclocked 6970s that need 375 W. Your GPU alone uses more than 2x the power draw of the original 360.

most powerful ATI card f*ck calling em amd is the dual 7970 gpu bs

04dcarraher

ya, it's also very noisy. But it's been doing its job for the past 2 years. Thankfully I'm happy with it.

I went with a 6-core AMD Phenom II X6 1100T + 16 GB with that card back in the day (don't remember if it was on the same day, or if I bought it after the HD 6990).

Anyway, I'm happy with my AMD setup so far. Surprisingly, it still runs all the new games at very high settings.

Lol, even to this day, these rumors still have no clue who is really behind the CPU design for the Next Xbox. It is not from AMD; IBM is the real contributor. You fool...:lol:

I knew Sony would abandon the Cell, since the Cell was originally designed to be both the CPU and the GPU, and after tests the Cell was subpar compared to what was out, so they contacted Nvidia for a stopgap measure using the G70 (7800).

I remember when people thought Sony was going to use a Cell 2.0; I actually thought they would too. I guess Sony finally saw it as a waste of money, but it's looking like the overall rendering setup this time will be similar.

Chozofication

[QUOTE="Chozofication"]I knew Sony would abandon the Cell, since the Cell was originally designed to be both the CPU and the GPU, and after tests the Cell was subpar compared to what was out, so they contacted Nvidia for a stopgap measure using the G70 (7800).

I remember when people thought Sony was going to use a Cell 2.0; I actually thought they would too. I guess Sony finally saw it as a waste of money, but it's looking like the overall rendering setup this time will be similar.

04dcarraher

Yeah. They were gonna use 2 Cells and no GPU. If only they had contacted ATI instead...

Cell was pretty incredible by itself but whoever thought it could do graphics by itself wasn't thinking straight.

Lol, you are such a hypocrite. You just want to believe in whatever looks good for $ony but always rebuff any advantages that the Next Xbox might have. Also, you still don't understand the RAM types to begin with.

TheXFiles88

Look who's talking. First, it has been rumored to be 32 MB; that is what I read, and I have never read about 100 MB of eDRAM. Second, it would have sounded better if Sony had an 8000 rather than a 7870 AKA 7970M, but sadly that is another rumor as well. Third, I do understand the RAM: eDRAM is being used to compensate for the slow DDR3 speed, and the fact is GDDR5 is almost 3 times faster. And lastly, I actually even said that when all is said and done they will probably perform more or less the same. Now stop being such a cry baby.

[QUOTE="TheXFiles88"]Lol, you are such a hypocrite. You just wanted to beleive in whatever looks good for $ony but always rebuff any advantages that the Next Xbox might have. Also, you still don't understand the RAM types to being of.tormentos3rd. I do understand the ram how eDRAM is been use to compensate for the slow DDR3 speed,fact is GDDR5 is almost 3 times faster. And lastly i actually even say that when all is say and done they will probably perform more or less the same,now stop been such a cry baby.

I doubt you've seen anything saying it'll have 32 MB; that would be asinine. (Well, there are crazy rumors, but I haven't seen that one.)

The way eDRAM works is this: in addition to storing the framebuffer, the eDRAM helps with graphics tasks that require such fast RAM, and the DDR3 can handle the rest.

Also, DDR3-1600 only has 12.8 GB/s of bandwidth per 64-bit channel; I don't know where you got 68 from. But the DDR3 in the 720 would have to be at least DDR3-1866 or faster.
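For anyone who wants to check the bandwidth numbers, here is a rough sketch of the usual peak-bandwidth arithmetic (transfer rate times bus width). The function name is made up for illustration, and the 256-bit DDR3-2133 configuration is only an assumption about where a figure near 68 could come from; nothing in the rumors confirms it.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfer_rate_mt_s: data rate in mega-transfers per second (e.g. 1600 for DDR3-1600)
    bus_width_bits:     total bus width in bits (64 for a single DDR3 channel)
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * 1e6 * bytes_per_transfer / 1e9


# Single 64-bit channel of DDR3-1600: the 12.8 GB/s figure from the post.
single_channel_1600 = peak_bandwidth_gb_s(1600, 64)

# Single 64-bit channel of DDR3-1866, the minimum speed suggested in the post.
single_channel_1866 = peak_bandwidth_gb_s(1866, 64)

# ASSUMPTION: a 256-bit bus of DDR3-2133, which lands close to 68 GB/s.
wide_bus_2133 = peak_bandwidth_gb_s(2133, 256)

print(f"DDR3-1600 x 64-bit:  {single_channel_1600:.1f} GB/s")
print(f"DDR3-1866 x 64-bit:  {single_channel_1866:.1f} GB/s")
print(f"DDR3-2133 x 256-bit: {wide_bus_2133:.1f} GB/s")
```

So the 12.8 figure and a figure near 68 are not necessarily in conflict; they would just describe different bus widths and speed grades.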

[QUOTE="TheXFiles88"]Lol, you are such a hypocrite. You just wanted to beleive in whatever looks good for $ony but always rebuff any advantages that the Next Xbox might have. Also, you still don't understand the RAM types to being of.tormentosLooks who's talking.. First it has been rumored to be 32MB that is what i read i have never read about 100MB of eDRAM. Second it sounded better if sony had an 8000 than 7870 AKA 7970M sadly that is another rumor as well. 3rd. I do understand the ram how eDRAM is been use to compensate for the slow DDR3 speed,fact is GDDR5 is almost 3 times faster. And lastly i actually even say that when all is say and done they will probably perform more or less the same,now stop been such a cry baby.

I am a cry baby? Lol. Look, you believe in "RUMORS" which best suit you but rebuff a "REAL" patent such as the "Scalable Console Patent" from M$ in 2010, which clearly described multi-core CPUs & GPUs?

"Microsoft applies for scalable console patent"

A patent application filed by Microsoft back in 2010 has just been discovered and points to the possibility that the next-gen Xbox will support a scalable hardware structure. The documents were first spotted by a member of the Beyond3D forum and dissected by Eurogamer. Based on the findings, it looks like Microsoft may be looking to create a hardware foundation that could then be customized to create models with varying performance capabilities.

http://www.gamerevolution.com/news/microsoft-patent-points-to-plans-for-scalable-console-13939

[QUOTE="ronvalencia"][QUOTE="clyde46"] So it's not going to be a regular GPU then?

04dcarraher

The guts of the GPU would still be based on 28nm-fabbed GCN, which means at best you will see a 10% power usage difference if they rearranged the transistors, but it seems they are just renamed 7000 series parts.

From GloFo fabs, AMD could get another ~10% to 15% by applying "resonant clock mesh" tech to AMD GCN, but a desktop Radeon HD 79x0/89x0 would still be off limits for a ~200 watt box.

I knew the Sony would abandon the Cell since the Cell was originally designed to be the cpu and gpu and after tests the Cell was sub par to what was out , so they contacted Nvidia for a stop gap measure using the G70 (7800).[QUOTE="04dcarraher"][QUOTE="Chozofication"]

I remember when people thought Sony was going to use a Cell 2.0, I actually thought they would too. I guess Sony finally saw it as a waste of money but it's looking like the overall rendering setup this time will be similar.

Chozofication

Yeah. They were gonna use 2 cell's and no gpu. If only they contacted Ati instead...

Cell was pretty incredible by itself but whoever thought it could do graphics by itself wasn't thinking straight.

A PS3 with Radeon X1900/128-bit GDDR3 + CELL/72-bit XDR would have two processors capable of running Folding@home. On PS3, the Radeon X1900 wouldn't be restricted by MS DX9c, e.g. ATI's Close-To-Metal GPGPU compute, 3Dc+ (recycled for DX10), Havok-type GPGPU physics (AMD tech demo), and MSAA with FP HDR.

I am a cry baby? Lol. Look, you believe in "RUMORS" which are best suited for you but rebuffed "REAL"

TheXFiles88

Dude, you know how many patents not only Sony but also MS have that they will never use? Just because they patent something doesn't mean it will appear in a product. In fact, that patent is why some blind fanboys are saying the 720 will have 3 GPUs, all from the 8000 series: two of them the top-of-the-line 8970 and the other an 8850. There is no way in hell that MS will pack that in unless the Xbox 720 is the size of a huge tower with huge fans and costs $1,500 minimum. The 8970 has a TDP of 250 watts; one alone is already too hot for the 720, let alone two of them plus an 8850. Add to this the RAM and CPU and we are looking at, what, a 700 watt console? Please, dude, stop being a cry baby. That's just a lame patent MS filed to cash in on anyone who does something like that.
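The power argument in the post above is just addition, so here is a minimal sketch of it. Only the 250 W figure for the 8970 comes from the post, and the ~200 W box budget comes from the earlier comment in this thread; the 8850 and "CPU + RAM + rest" numbers are placeholder assumptions picked so the total lands on the roughly 700 W the post mentions. The function names are hypothetical.

```python
def total_power_watts(components: dict) -> float:
    """Sum the power figures (in watts) of every component in the build."""
    return sum(components.values())


def watts_over_budget(components: dict, budget_watts: float) -> float:
    """How far the build exceeds the budget (negative means it fits)."""
    return total_power_watts(components) - budget_watts


# The rumored three-GPU build discussed in the post.
rumored_build = {
    "8970 #1": 250.0,          # TDP figure quoted in the post
    "8970 #2": 250.0,          # same card again
    "8850": 130.0,             # ASSUMPTION: placeholder, not a confirmed spec
    "CPU + RAM + rest": 70.0,  # ASSUMPTION: placeholder for everything else
}

BOX_BUDGET_WATTS = 200.0  # the "~200 watts box" mentioned earlier in the thread

total = total_power_watts(rumored_build)
excess = watts_over_budget(rumored_build, BOX_BUDGET_WATTS)
print(f"Total: {total:.0f} W, over budget by {excess:.0f} W")
```

Even if the placeholder numbers are off by a wide margin, the two 8970s alone are already well past a ~200 W box.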

[QUOTE="Chozofication"]

[QUOTE="04dcarraher"] I knew the Sony would abandon the Cell since the Cell was originally designed to be the cpu and gpu and after tests the Cell was sub par to what was out , so they contacted Nvidia for a stop gap measure using the G70 (7800). ronvalencia

Yeah. They were gonna use 2 cell's and no gpu. If only they contacted Ati instead...

Cell was pretty incredible by itself but whoever thought it could do graphics by itself wasn't thinking straight.

A PS3 with Radeon X1900/128-bit GDDR3 + CELL/72-bit XDR would have two processors capable of running Folding@home. On PS3, the Radeon X1900 wouldn't be restricted by MS DX9c, e.g. ATI's Close-To-Metal GPGPU compute, 3Dc+ (recycled for DX10), Havok-type GPGPU physics (AMD tech demo), and MSAA with FP HDR.

Why do you think Sony went with Nvidia? Only thing I can think of is that they just weren't thinking. I don't think it would've cost more for a Xenos.

PS3 with Radeon X1900/128bit GDDR3 + CELL/72bit XDR would have two processors capable for Fold@Home.[QUOTE="ronvalencia"]

[QUOTE="Chozofication"]

Yeah. They were gonna use 2 cell's and no gpu. If only they contacted Ati instead...

Cell was pretty incredible by itself but whoever thought it could do graphics by itself wasn't thinking straight.

Chozofication

On PS3, Radeon X1900 wouldn't be restricted by MS DX9c e.g. ATI's Close-To-Metal GpGPU compute, 3DC+ (recycled for DX10), Havok type GpGPU physics (AMD tech demo), MSAA with FP HDR.

Why do you think Sony went with Nvidia? Only thing I can think of is that they just weren't thinking. I don't think it would've cost more for a Xenos.

Because it was a stopgap measure to use the G70 chipset.

[QUOTE="Chozofication"][QUOTE="ronvalencia"] PS3 with Radeon X1900/128bit GDDR3 + CELL/72bit XDR would have two processors capable for Fold@Home.

On PS3, Radeon X1900 wouldn't be restricted by MS DX9c e.g. ATI's Close-To-Metal GpGPU compute, 3DC+ (recycled for DX10), Havok type GpGPU physics (AMD tech demo), MSAA with FP HDR.

04dcarraher

Why do you think Sony went with Nvidia? Only thing I can think of is that they just weren't thinking. I don't think it would've cost more for a Xenos.

Because it was a stopgap measure to use the G70 chipset.

I don't follow.

PS3 with Radeon X1900/128bit GDDR3 + CELL/72bit XDR would have two processors capable for Fold@Home.[QUOTE="04dcarraher"][QUOTE="Chozofication"]

Yeah. They were gonna use 2 cell's and no gpu. If only they contacted Ati instead...

Cell was pretty incredible by itself but whoever thought it could do graphics by itself wasn't thinking straight.

Chozofication

On PS3, Radeon X1900 wouldn't be restricted by MS DX9c e.g. ATI's Close-To-Metal GpGPU compute, 3DC+ (recycled for DX10), Havok type GpGPU physics (AMD tech demo), MSAA with FP HDR.

Why do you think Sony went with Nvidia? Only thing I can think of is that they just weren't thinking. I don't think it would've cost more for a Xenos.

Sony was looking to patch CELL's missing fixed-function GPU units, and G70 would be the closest match.

[QUOTE="Chozofication"][QUOTE="04dcarraher"] PS3 with Radeon X1900/128bit GDDR3 + CELL/72bit XDR would have two processors capable for Fold@Home.

On PS3, Radeon X1900 wouldn't be restricted by MS DX9c e.g. ATI's Close-To-Metal GpGPU compute, 3DC+ (recycled for DX10), Havok type GpGPU physics (AMD tech demo), MSAA with FP HDR.

ronvalencia

Why do you think Sony went with Nvidia? Only thing I can think of is that they just weren't thinking. I don't think it would've cost more for a Xenos.

Sony was looking to patch CELL's missing fixed-function GPU units, and G70 would be the closest match.

Huh. Does that mean an X1900 or Xenos actually would've been worse for the PS3?

[QUOTE="TheXFiles88"]I am a cry baby? Lol. Look, you believe in "RUMORS" which are best suited for you but rebuffed "REAL"

tormentos

Dude, you know how many patents not only Sony but also MS have that they will never use? Just because they patent something doesn't mean it will appear in a product. In fact, that patent is why some blind fanboys are saying the 720 will have 3 GPUs, all from the 8000 series: two of them the top-of-the-line 8970 and the other an 8850. There is no way in hell that MS will pack that in unless the Xbox 720 is the size of a huge tower with huge fans and costs $1,500 minimum. The 8970 has a TDP of 250 watts; one alone is already too hot for the 720, let alone two of them plus an 8850. Add to this the RAM and CPU and we are looking at, what, a 700 watt console? Please, dude, stop being a cry baby. That's just a lame patent MS filed to cash in on anyone who does something like that.

Lol, you still don't really understand the most basic fundamental architecture of video game consoles, dude. You just cannot put retail PC products in a video game console. It just doesn't work that way.

These are custom-built CPUs & GPUs from which IBM/AMD/M$ would remove any functions that are not applicable to games. Also, M$ has full control of the costs since they have these chips manufactured by a 3rd party such as Flextronics...

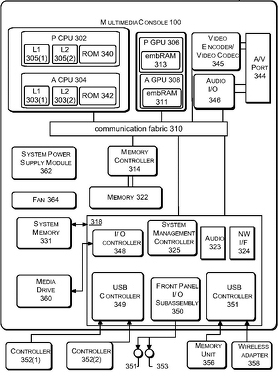

Also, the CPU & GPU may have multi-core structures, not multiple physical CPUs & GPUs, just like the Xbox 360's Xenon design. If that pic doesn't satisfy you, how about this one? Same multi-core CPU & GPU layout. So who is the cry baby now???

Sony was looking to patch CELL's missing fixed-function GPU units, and G70 would be the closest match.

[QUOTE="ronvalencia"][QUOTE="Chozofication"]

Why do you think Sony went with Nvidia? Only thing I can think of is that they just weren't thinking. I don't think it would've cost more for a Xenos.

Chozofication

Huh. Does that mean a X1900 or Xenos actually would've been worse for the PS3?

No. Sony didn't factor in the lack of forward design with G70. Radeon X1900 goes beyond DX9c's SM3.0 and fails DX10's SM4.0.

Dude, you know how many patents not only Sony but also MS have that they will never use? Just because they patent something doesn't mean it will appear in a product. In fact, that patent is why some blind fanboys are saying the 720 will have 3 GPUs, all from the 8000 series: two of them the top-of-the-line 8970 and the other an 8850. There is no way in hell that MS will pack that in unless the Xbox 720 is the size of a huge tower with huge fans and costs $1,500 minimum. The 8970 has a TDP of 250 watts; one alone is already too hot for the 720, let alone two of them plus an 8850. Add to this the RAM and CPU and we are looking at, what, a 700 watt console? Please, dude, stop being a cry baby. That's just a lame patent MS filed to cash in on anyone who does something like that.

[QUOTE="tormentos"][QUOTE="TheXFiles88"]I am a cry baby? Lol. Look, you believe in "RUMORS" which are best suited for you but rebuffed "REAL"

TheXFiles88

Lol, you still don't really understand the most basic fundamental architecture of video game consoles, dude. You just cannot put retail PC products in a video game console. It just doesn't work that way.

These are custom-built CPUs & GPUs from which IBM/AMD/M$ would remove any functions that are not applicable to games. Also, M$ has full control of the costs since they have these chips manufactured by a 3rd party such as Flextronics...

Also, the CPU & GPU may have multi-core structures, not multiple physical CPUs & GPUs, just like the Xbox 360's Xenon design. If that pic doesn't satisfy you, how about this one? Same multi-core CPU & GPU layout. So who is the cry baby now???

[QUOTE="Chozofication"][QUOTE="ronvalencia"] Sony was looking to patch CELL's missing GPU fix function units and G70 would be the closest match.ronvalencia

Huh. Does that mean a X1900 or Xenos actually would've been worse for the PS3?

No. Sony didn't factor in the lack of forward design with G70. Radeon X1900 goes beyond DX9c's SM3.0 and fails DX10's SM4.0.

I think I get it.

RSX was better for the type of rendering going on when Sony chose Nvidia, but Xenos was made with future rendering in mind.

I just thought that Xenos was always better period, regardless of workload.

No. Sony didn't factor in the lack of forward design with G70. Radeon X1900 goes beyond DX9c's SM3.0 and fails DX10's SM4.0.[QUOTE="ronvalencia"][QUOTE="Chozofication"]

Huh. Does that mean a X1900 or Xenos actually would've been worse for the PS3?

Chozofication

I think I get it.

RSX was better for the type of rendering going on when Sony chose Nvidia, but Xenos was made with future rendering in mind.

I just thought that Xenos was always better period, regardless of workload.

Xenos has its own issues, i.e. the NEC-provided "SMART" eDRAM part. CELL's SPUs have enough power to patch RSX's aging design.

In general, Xbox 360 and PS3 are roughly similar.

PS: SMART eDRAM includes NEC's fixed-function GPU backend units, i.e. it's not 100 percent ATI.
