Is 1.84 TFLOPS a lot for a GPU?

This topic is locked from further discussion.

[QUOTE="ShadowDeathX"]LOL comparing Nvidia design to AMD design. FAIL.... A Radeon HD 6850 has almost the same amount of TFLOPs @ SP as the PS4 does. Yet, a GTX580 destroys a 6850. HUSH!DarkLink77AMD is for fools and consoles gamers. People who care about performance buy the GTX series. Thank you Based Nvidia.

So going for a cheaper alternative with better image quality and some visionary features = foolish?

The things you read on here...

[QUOTE="ShadowDeathX"]LOL comparing Nvidia design to AMD design. FAIL.... A Radeon HD 6850 has almost the same amount of TFLOPs @ SP as the PS4 does. Yet, a GTX580 destroys a 6850. HUSH!DarkLink77AMD is for fools and consoles gamers. People who care about performance buy the GTX series. Thank you Based Nvidia.

Go spend ~$1000(1) when GDDR6 is coming in 2014. http://vr-zone.com/articles/gddr6-memory-coming-in-2014/16359.html

1. http://pcpartpicker.com/parts/video-card/#c=130

The fool is you.

AMD is for fools and consoles gamers. People who care about performance buy the GTX series. Thank you Based Nvidia.[QUOTE="DarkLink77"][QUOTE="ShadowDeathX"]LOL comparing Nvidia design to AMD design. FAIL.... A Radeon HD 6850 has almost the same amount of TFLOPs @ SP as the PS4 does. Yet, a GTX580 destroys a 6850. HUSH!nameless12345

So going for a cheaper alternative with better image quality and some visionary features = foolish?

The things you read on here...

AMD's drivers are absolute garbage and not worth dealing with.

Plus, at the end of the day, Nvidia really does make better cards.

AMD's a good budget option, but their stuff costs less for a reason.

Better image quality? Visionary features? Wut.

[QUOTE="nameless12345"]

[QUOTE="DarkLink77"] AMD is for fools and consoles gamers. People who care about performance buy the GTX series. Thank you Based Nvidia.DarkLink77

So going for a cheaper alternative with better image quality and some visionary features = foolish?

The things you read on here...

AMD's drivers are absolute garbage and not worth dealing with.

Plus, at the end of the day, Nvidia really does make better cards.

AMD's a good budget option, but their stuff costs less for a reason.

Better image quality? Visionary features? Wut.

Kepler GK110 still has garbage GPGPU performance and still lacks Direct3D feature level 11_1 support.

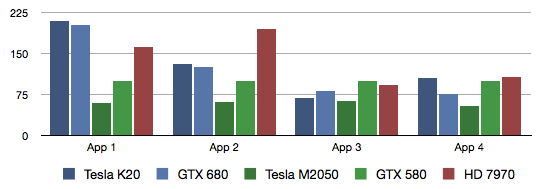

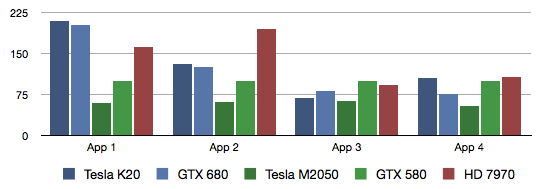

Non-Microsoft Direct3D GPGPU face-off: K20 vs 7970 vs GTX680 vs M2050 vs GTX580 from http://wili.cc/blog/gpgpu-faceoff.html

K20 = GK110

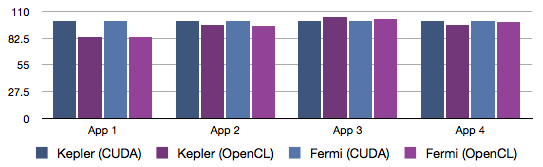

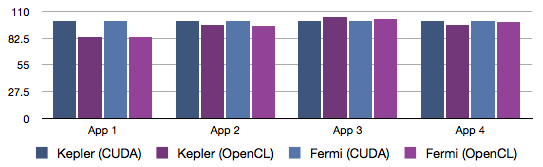

There's very difference between NVIDIA's OpenCL and CUDA.

PS, Sony PS4 would not be using Microsoft's Direct3D stack.

App 1. Digital Hydraulics code is all about basic floating point arithmetics, both algebraic and transcendental. No dynamic branching, very little memory traffic.

App 2. Ambient Occlusion code is a very mixed load of floating point and integer arithmetics, dynamic branching, texture sampling and memory access. Despite the memory traffic, this is a very compute intensive kernel.

App 3. Running Sum code, in contrast to the above, is memory intensive. It shuffles data through at a high rate, not doing much calculations on it. It relies heavily on the on-chip L1 cache, though, so it's not a raw memory bandwidth test.

App 4. Geometry Sampling code is texture sampling intensive. It sweeps through geometry data in "waves", and stresses samplers, texture caches, and memory equally. It also has a high register usage and thus low occupancy.

I don't think Sony would be repeating yet another GpGPU challenged solution.

First-party PS4 games would show extreme AMD Gaming Evolved-style results.

[QUOTE="DarkLink77"][QUOTE="ShadowDeathX"]

AMD is for fools and consoles gamers. People who care about performance buy the GTX series. Thank you Based Nvidia.[QUOTE="DarkLink77"][QUOTE="ShadowDeathX"]LOL comparing Nvidia design to AMD design. FAIL.... A Radeon HD 6850 has almost the same amount of TFLOPs @ SP as the PS4 does. Yet, a GTX580 destroys a 6850. HUSH!ronvalencia

Go spend ~$1000(1) when GDDR6 is coming in 2014. http://vr-zone.com/articles/gddr6-memory-coming-in-2014/16359.html

1. http://pcpartpicker.com/parts/video-card/#c=130

The fool is you.

[QUOTE="DarkLink77"]

[QUOTE="nameless12345"]

So going for a cheaper alternative with better image quality and some visionary features = foolish?

The things you read on here...

ronvalencia

AMD's drivers are absolute garbage and not worth dealing with.

Plus, at the end of the day, Nvidia really does make better cards.

AMD's a good budget option, but their stuff costs less for a reason.

Better image quality? Visionary features? Wut.

Kepler GK110 still has garbage GPGPU performance and still lacks Direct3D feature level 11_1 support.

Non-Microsoft Direct3D GPGPU face-off: K20 vs 7970 vs GTX680 vs M2050 vs GTX580 from http://wili.cc/blog/gpgpu-faceoff.html

K20 = GK110

There's very difference between NVIDIA's OpenCL and CUDA.

PS, Sony PS4 would not be using Microsoft's Direct3D stack.

App 1. Digital Hydraulics code is all about basic floating point arithmetics, both algebraic and transcendental. No dynamic branching, very little memory traffic.

App 2. Ambient Occlusion code is a very mixed load of floating point and integer arithmetics, dynamic branching, texture sampling and memory access. Despite the memory traffic, this is a very compute intensive kernel.

App 3. Running Sum code, in contrast to the above, is memory intensive. It shuffles data through at a high rate, not doing much calculations on it. It relies heavily on the on-chip L1 cache, though, so it's not a raw memory bandwidth test.

App 4. Geometry Sampling code is texture sampling intensive. It sweeps through geometry data in "waves", and stresses samplers, texture caches, and memory equally. It also has a high register usage and thus low occupancy.

I don't think Sony would be repeating yet another GpGPU challenged solution.

AMD is for fools and consoles gamers. People who care about performance buy the GTX series. Thank you Based Nvidia.[QUOTE="DarkLink77"][QUOTE="ShadowDeathX"]LOL comparing Nvidia design to AMD design. FAIL.... A Radeon HD 6850 has almost the same amount of TFLOPs @ SP as the PS4 does. Yet, a GTX580 destroys a 6850. HUSH!ronvalencia

Go spend ~$1000(1) when GDDR6 is coming in 2014. http://vr-zone.com/articles/gddr6-memory-coming-in-2014/16359.html

1. http://pcpartpicker.com/parts/video-card/#c=130

The fool is you.

[QUOTE="ronvalencia"][QUOTE="DarkLink77"] AMD is for fools and consoles gamers. People who care about performance buy the GTX series. Thank you Based Nvidia.

DarkLink77

Go spend ~$1000(1) when GDDR6 is coming in 2014. http://vr-zone.com/articles/gddr6-memory-coming-in-2014/16359.html

1. http://pcpartpicker.com/parts/video-card/#c=130

The fool is you.

I expected more from GK110's 551 mm^2 die size and ~$1000 price tag.

Having "King Tiger" tanks doesn't win the war.

The 8800 GTX had very little effect against the Wii, Xbox 360 and PS3. Intel Haswell GT3 will shut down NVIDIA's low end PC parts (up to GeForce 650).

Qualcomm is strong enough to constrain Tegra 4 (single design win from ZTE).

[QUOTE="BlbecekBobecek"]

Yeah, but it's the best data we have for comparison. It is to be expected that the PS4 GPU will be further optimised to provide even better results than standard PC architecture.

RyviusARC

Optimization won't help it.

Most of the API overhead on PC is from the CPU not the GPU.

So GPU performance from console to PC with similar GPU specs will be pretty much equal.

The only people I hear say this are PC gamers, yet the people who actually develop the games say the opposite. I think I would take the word of the people who actually make the games. A PC with the same GPU isn't going to hold a candle to a console; it never has and it never will.

So how does this compare with my I920, 6 gigs of GDDR3 and a GTX285?

AM-Gamer

Google Radeon HD 7850 vs Geforce GTX 285.

PS4's GPU is between 7850 and 7870 e.g. "7860".

[QUOTE="NoodleFighter"]

[QUOTE="RyviusARC"]

Yah it's a monster compared to the PS3.

I am happy they went all out and also added more RAM.

Now PC won't be held back as much.

Can't wait for the Maxwell series of Nvidia GPUs next year.

I am predicting another 8800GTX.

nameless12345

I'm expecting it once GDDR6 hits the market

and they'll need to invest in some high end PC exclusive to take advantage of it like they did with Crysis

Will it happen this time, tho?

CryTek clearly said that developing just for PC is not worth it and Epic are like minded as well...

The future of high budget "AAA" games is multi-platform and (most likely) console-centric development.

If you're referring to the typical five-hour blockbuster, then sure, but there are plenty of AAA devs on PC and some potential ones. What exactly are we talking about in terms of budgets and AAA? Ironically, Epic Games is developing a PC exclusive, but I have a feeling that it might get ported to consoles later considering these new specs.

Star Citizen and RSI seem like the number 1 PC game and developer to challenge PS4 and Xbox 720

[QUOTE="Chozofication"]

Why are people talking about emulating the PS4? Today's PCs can't even emulate the 360. People on here must have no idea how emulation works...

nameless12345

True.

The first Xbox was literally a PC in console case yet emulating it was not an easy task...

Also, you would need at least a 10-times better PC to emulate it, so good luck with that...

Poor documentation among other issues.

The only people I hear say this are PC gamers, yet the people who actually develop the games say the opposite. I think I would take the word of the people who actually make the games. A PC with the same GPU isn't going to hold a candle to a console; it never has and it never will.

AM-Gamer

Instead of taking my word or anyone else look up the facts.

If you look at gameplay performance and compare an 8600gt to a 360 you will see they perform similar with the 8600gt sometimes having the edge.

The 8600gt is similar in power to consoles.

If what you think were true the 360 should be smashing the 8600gt and yet it isn't.

On the other hand you need a more powerful CPU than the 360's to get similar performance.

This is because of the API overhead.

[QUOTE="RyviusARC"]

[QUOTE="BlbecekBobecek"]

Yeah, but it's the best data we have for comparison. It is to be expected that the PS4 GPU will be further optimised to provide even better results than standard PC architecture.

AM-Gamer

Optimization won't help it.

Most of the API overhead on PC is from the CPU not the GPU.

So GPU performance from console to PC with similar GPU specs will be pretty much equal.

The only people I hear say this are PC gamers, yet the people who actually develop the games say the opposite. I think I would take the word of the people who actually make the games. A PC with the same GPU isn't going to hold a candle to a console; it never has and it never will.

Of course not, a console has very little overhead so that more resources can be put into running the games. Also, the makeup of a PC is hugely different to a console despite running on similar hardware.

Yes!

It's more powerful than:

GeForce GTX 480 (1.34 TFLOPS)

GeForce GTX 580 (1.58 TFLOPS)

It's almost equal to:

GeForce GTX 660 (1.9 TFLOPS)

It's less powerful than:

GeForce GTX 680 (3 TFLOPS)

-------------------------------

src:

http://www.pcpro.co.uk/reviews/graphics-cards/362647/nvidia-geforce-gtx-580

http://www.nordichardware.com/Graphics/geforce-gtx-660-for-oems-appears-on-nvidias-website.html

-------------------------------

So as we can see, it's quite a bit more powerful than what was considered bleeding edge in 2011, and only the latest, most expensive and most powerful current GPUs gain a considerable edge over it.

No one has ever optimised a game for such powerful hardware. We have not yet seen how a game optimised for it might look. Forget Crysis 3 on ultra settings - games that the new PS4 shall bring us will wipe the floor with it. Crysis 3 on ultra runs just fine on the less powerful GTX 580 after all, and that is even further slowed down by the API.

BlbecekBobecek

You can't compare TFLOPS between AMD GPUs and Nvidia GPUs.

HD7850: 1.76 TFLOPS, Engine Clock 860MHz, 16 Compute Units (1024 Stream Processors), 64 Texture Units, 2/4GB GDDR5 with 153.6GB/s memory bandwidth

PS4 GPU: 1.84 TFLOPS, Engine Clock 800MHz, 18 Compute Units (1152 Stream Processors), 72 Texture Units, 8GB GDDR5 with 176.0GB/s memory bandwidth (shared with CPU)

HD7870: 2.56 TFLOPS, Engine Clock 1000MHz, 20 Compute Units (1280 Stream Processors), 80 Texture Units, 2/4GB GDDR5 with 153.6GB/s memory bandwidth

HD7950: 3.05 TFLOPS, Engine Clock 850MHz, 28 Compute Units (1792 Stream Processors), 112 Texture Units, 3GB GDDR5 with 240.0GB/s memory bandwidth

HD7970: 4.10 TFLOPS, Engine Clock 1000MHz, 32 Compute Units (2048 Stream Processors), 128 Texture Units, 3/6GB GDDR5 with 288.0GB/s memory bandwidth

As you can see, the PS4 GPU is very similar to the HD7850, which is barely a mid range GPU and by the time the PS4 is released will be a low end GPU.
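The TFLOPS figures in that list can be reproduced from the stream processor counts and clocks. The sketch below assumes the usual peak-rate convention for GCN parts (one fused multiply-add, counted as 2 floating-point operations, per stream processor per clock) and takes the PS4 count as 18 compute units × 64 = 1152 stream processors. It gives a theoretical peak only and says nothing about real game performance.

```python
# Peak single-precision throughput for the GPUs listed above.
# Assumption: each stream processor retires one fused multiply-add
# (counted as 2 floating-point operations) per clock cycle.
FLOPS_PER_SP_PER_CLOCK = 2


def peak_tflops(stream_processors: int, clock_mhz: float) -> float:
    """Return the theoretical peak in TFLOPS (10^12 operations per second)."""
    return stream_processors * FLOPS_PER_SP_PER_CLOCK * clock_mhz * 1e6 / 1e12


# (stream processors, engine clock in MHz), as quoted in the thread.
GPUS = {
    "HD7850": (1024, 860),
    "PS4 GPU": (1152, 800),  # 18 compute units x 64 lanes
    "HD7870": (1280, 1000),
    "HD7950": (1792, 850),
    "HD7970": (2048, 1000),
}

for name, (sps, mhz) in GPUS.items():
    print(f"{name}: {peak_tflops(sps, mhz):.2f} TFLOPS")
```

With 1152 stream processors the PS4 figure comes out at 1.84 TFLOPS, matching the quoted number; 1154 would not.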

No it won't. It needed to be upped because the sharing features will eat the crap out of the RAM and PS4 games would have looked worse. CPU and GPU are far more important. 8GB of RAM is overkill. I can't think of a PC game that uses more than 2GB, even with mods.

It's a good GPU. Very similar performance off the shelf to a 7850 in terms of raw TFLOPS comparison. It's GCN architecture based though, so more efficiency and better compute performance than that architecture provides.

The bigger thing is 8GB of RAM. It's an absolute godsend that Sony upped that from the rumored 4GB. It will have a larger effect on PS4 games than pretty much everything else.

Cali3350

So how does this compare with my I920, 6 gigs of GDDR3 and a GTX285?

AM-Gamer

Like this:

(your rig is in the bottom)

[QUOTE=""]

HD7850: 1.76 TFLOPS, Engine Clock 860MHz, 16 Compute Units (1024 Stream Processors), 64 Texture Units, 2/4GB GDDR5 with 153.6GB/s memory bandwidth

PS4 GPU: 1.84 TFLOPS, Engine Clock 800MHz, 18 Compute Units (1152 Stream Processors), 72 Texture Units, 8GB GDDR5 with 176.0GB/s memory bandwidth (shared with CPU)

HD7870: 2.56 TFLOPS, Engine Clock 1000MHz, 20 Compute Units (1280 Stream Processors), 80 Texture Units, 2/4GB GDDR5 with 153.6GB/s memory bandwidth

HD7950: 3.05 TFLOPS, Engine Clock 850MHz, 28 Compute Units (1792 Stream Processors), 112 Texture Units, 3GB GDDR5 with 240.0GB/s memory bandwidth

HD7970: 4.10 TFLOPS, Engine Clock 1000MHz, 32 Compute Units (2048 Stream Processors), 128 Texture Units, 3/6GB GDDR5 with 288.0GB/s memory bandwidth

MK-Professor

As you can see, the PS4 GPU is very similar to the HD7850, which is barely a mid range GPU and by the time the PS4 is released will be a low end GPU.
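The memory bandwidth figures in the quoted list follow from bus width and per-pin data rate. The bus widths and data rates below are not given anywhere in the thread; they are assumed values chosen to match the commonly published specifications for these parts, so treat this as a rough sketch rather than a datasheet.

```python
def gddr5_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, effective data rate per pin in Gbps).
# These pairs are assumptions, not figures from the thread.
MEMORY_CONFIGS = {
    "HD7850": (256, 4.8),
    "PS4 GPU": (256, 5.5),
    "HD7870": (256, 4.8),
    "HD7950": (384, 5.0),
    "HD7970": (384, 6.0),
}

for name, (bus, rate) in MEMORY_CONFIGS.items():
    print(f"{name}: {gddr5_bandwidth_gbs(bus, rate):.1f} GB/s")
```

Under these assumptions the PS4's 176.0GB/s comes from a 256-bit bus like the HD7850's, just with faster memory, and that pool is shared with the CPU.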

It's pretty high end if you ask me. Saying it's "barely mid-range" is downplaying it a lot. It's about 5x more powerful than my GeForce GT 555M and I can run every single PC game on medium - high and most of them even on ultra (for example I'm running DotA 2 maxed out at 1080p resolution, Crysis 2 on highest settings at 720p resolution with AA off, etc).

I mean - my laptop runs all of the games hermits rave about on these boards on highest or near-highest settings, and that's with a GPU that has a fraction of the PS4's GPU power, and it's still crippled by API overhead. The point is that whatever the graphics of PS4 will look like is still only a matter of imagination since we have not seen such graphics yet.

I remember a day when a 50 MB hard drive, 1 MB of RAM and 3.5" floppy discs were bleeding edge.

Zeviander

Me too. Your point?

[QUOTE="MK-Professor"]

[QUOTE=""]

HD7850: 1.76 TFLOPS, Engine Clock 860MHz, 16 Compute Units (1024 Stream Processors), 64 Texture Units, 2/4GB GDDR5 with 153.6GB/s memory bandwidth

PS4 GPU: 1.84 TFLOPS, Engine Clock 800MHz, 18 Compute Units (1152 Stream Processors), 72 Texture Units, 8GB GDDR5 with 176.0GB/s memory bandwidth (shared with CPU)

HD7870: 2.56 TFLOPS, Engine Clock 1000MHz, 20 Compute Units (1280 Stream Processors), 80 Texture Units, 2/4GB GDDR5 with 153.6GB/s memory bandwidth

HD7950: 3.05 TFLOPS, Engine Clock 850MHz, 28 Compute Units (1792 Stream Processors), 112 Texture Units, 3GB GDDR5 with 240.0GB/s memory bandwidth

HD7970: 4.10 TFLOPS, Engine Clock 1000MHz, 32 Compute Units (2048 Stream Processors), 128 Texture Units, 3/6GB GDDR5 with 288.0GB/s memory bandwidth

BlbecekBobecek

As you can see, the PS4 GPU is very similar to the HD7850, which is barely a mid range GPU and by the time the PS4 is released will be a low end GPU.

It's pretty high end if you ask me. Saying it's "barely mid-range" is downplaying it a lot. It's about 5x more powerful than my GeForce GT 555M and I can run every single PC game on medium - high and most of them even on ultra (for example I'm running DotA 2 maxed out at 1080p resolution, Crysis 2 on highest settings at 720p resolution with AA off, etc).

I mean - my laptop runs all of the games hermits rave about on these boards on highest or near-highest settings, and that's with a GPU that has a fraction of the PS4's GPU power, and it's still crippled by API overhead. The point is that whatever the graphics of PS4 will look like is still only a matter of imagination since we have not seen such graphics yet.

no it is not

HD7950 & HD7970 are high end (for a single GPU), the HD7850 & HD7870 are mid range, and all these models are already one year old.

Essentially, when the PS4 is released it will have a GPU that was mid range two years before.

So at the end of the year new GPUs will be released, making the HD7850 completely low end.

Me too. Your point?

BlbecekBobecek

Pick one:

- I feel old
- Computing technology has reached astronomical performance in essentially two decades
- games have become more about raw graphics tech than using what limited resources a dev has to create a new idea or concept
- kids growing up in this century might not even know what a "floppy disc" is

[QUOTE="BlbecekBobecek"]

[QUOTE="MK-Professor"]

as you can see the ps4 GPU is very similar to the HD7850 and it is a barely a mid range GPU and by the time ps4 will be released will be low end GPU.

MK-Professor

It's pretty high end if you ask me. Saying it's "barely mid-range" is downplaying it a lot. It's about 5x more powerful than my GeForce GT 555M and I can run every single PC game on medium - high and most of them even on ultra (for example I'm running DotA 2 maxed out at 1080p resolution, Crysis 2 on highest settings at 720p resolution with AA off, etc).

I mean - my laptop runs all of the games hermits rave about on these boards on highest or near-highest settings, and that's with a GPU that has a fraction of the PS4's GPU power, and it's still crippled by API overhead. The point is that whatever the graphics of PS4 will look like is still only a matter of imagination since we have not seen such graphics yet.

no it is not

HD7950 & HD7970 are high end (for a single GPU), the HD7850 & HD7870 are mid range, and all these models are already one year old.

Essentially, when the PS4 is released it will have a GPU that was mid range two years before.

So at the end of the year new GPUs will be released, making the HD7850 completely low end.

And still it will take at least a year before a PC game equals PS4 launch games in graphics... old story, it's like this every single gen.

-games have become more about raw graphics tech than using what limited resources a dev has to create a new idea or concept Zeviander

This one. :)

There is more to a GPU than just FLOPS. Sure, raw FLOPS is a good measure for saying one card is stronger than another, but it doesn't show the real picture, since a 2 TFLOPS GPU can be beaten by a 1.84 TFLOPS one because of efficiency, and the GPU in the PS4 is more efficient than its PC counterpart. Generally, GPUs on PC do by brute force what they can't do by efficiency. That said, a gap of 600 GFLOPS or more will likely create a disparity, and considering some of those GPUs are from the same line, 3 TFLOPS vs 1.84 will mean a real difference.

Yes!

It's more powerful than:

GeForce GTX 480 (1.34 TFLOPS)

GeForce GTX 580 (1.58 TFLOPS)

It's almost equal to:

GeForce GTX 660 (1.9 TFLOPS)

It's less powerful than:

GeForce GTX 680 (3 TFLOPS)

-------------------------------

src:

http://www.pcpro.co.uk/reviews/graphics-cards/362647/nvidia-geforce-gtx-580

http://www.nordichardware.com/Graphics/geforce-gtx-660-for-oems-appears-on-nvidias-website.html

-------------------------------

So as we can see, it's quite a bit more powerful than what was considered bleeding edge in 2011, and only the latest, most expensive and most powerful current GPUs gain a considerable edge over it.

No one has ever optimised a game for such powerful hardware. We have not yet seen how a game optimised for it might look. Forget Crysis 3 on ultra settings - games that the new PS4 shall bring us will wipe the floor with it. Crysis 3 on ultra runs just fine on the less powerful GTX 580 after all, and that is even further slowed down by the API.

BlbecekBobecek

[QUOTE="MK-Professor"]

[QUOTE="BlbecekBobecek"]

It's pretty high end if you ask me. Saying it's "barely mid-range" is downplaying it a lot. It's about 5x more powerful than my GeForce GT 555M and I can run every single PC game on medium - high and most of them even on ultra (for example I'm running DotA 2 maxed out at 1080p resolution, Crysis 2 on highest settings at 720p resolution with AA off, etc).

I mean - my laptop runs all of the games hermits rave about on these boards on highest or near-highest settings, and that's with a GPU that has a fraction of the PS4's GPU power, and it's still crippled by API overhead. The point is that whatever the graphics of PS4 will look like is still only a matter of imagination since we have not seen such graphics yet.

BlbecekBobecek

no it is not

HD7950 & HD7970 are high end (for a single GPU), the HD7850 & HD7870 are mid range, and all these models are already one year old.

Essentially, when the PS4 is released it will have a GPU that was mid range two years before.

So at the end of the year new GPUs will be released, making the HD7850 completely low end.

And still it will take at least a year before a PC game equals PS4 launch games in graphics... old story, it's like this every single gen.

LOL?

a HD7870/4GB will play any multiplat with better graphics and performance than ps4, deal with it.

no it is not

There are variables in this equation. The PS4 GPU is between a 7850 and a 7870, and the GPU itself is on the same die as the CPU. On PC it is not like that unless you are talking about an APU, which gains nothing from pairing with a dedicated GPU. That alone makes the PS4's GPU more efficient and less prone to latency, which hurts GPUs a lot on PC.

Another thing is that coders, as the guy from Nvidia who created an AA algorithm said, can code to the metal on PS4. On PC they have to go through the MS API, which doesn't allow that and restricts what developers can do. This will enable coders to get more out of the PS4 GPU than they would from its PC counterpart.

Another thing: if you remember, when the PS3 launched, video cards coming out already had as much video RAM as the complete PS3 had for system and video combined. The same was true of the 360, and some cards even shipped with 1GB, far eclipsing what the PS3 and Xbox 360 had for RAM. This time is not the same. The PS4 has 8GB of GDDR5; the very best top-of-the-line GPU right now, the $1,000+ GeForce Titan, has less RAM than the PS4, and so does the 7990.

Now, I know the PS4's memory is shared, but the PS4 OS was said to take 512MB when the memory was rumored to be 4GB. I can't see it needing more than 1GB, since the PS4 is not running Windows, which should ensure that at least on the texture side the PS4 will not suffer much for quite some time, unlike the PS3 and 360. In fact, a few months after launch the 360 already had inferior textures to those found on PC.

I am not saying the PS4 will beat a 7970, but it should not suffer as much as the 7850, and since the PS4 is not targeting 2560x1600 with 8x MSAA and 16x AF and is just targeting 1080p, the performance should increase. http://www.anandtech.com/bench/Product/549?vs=550 This should give you a nice idea: everything the 7950 is doing, the 7850 does as well, just a tad slower in frames per second.

Look at Crysis Warhead at 2560x1600: just a 9 frame difference. Look at Metro, once again at the same resolution: a 10 frame difference. As soon as the resolution drops to 1920x1200 the 7850 gets a boost in frames, and that is still higher than 1080p.

HD7950 & HD7970 are high end (for a single GPU), the HD7850 & HD7870 are mid range, and all these models are already one year old.

Essentially, when the PS4 is released it will have a GPU that was mid range two years before.

So at the end of the year new GPUs will be released, making the HD7850 completely low end.

MK-Professor
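The resolution argument in the post above can be put in numbers by counting pixels per frame. This is only a rough proxy: frame rate does not scale linearly with pixel count, and the sketch ignores anti-aliasing and filtering settings entirely.

```python
# Pixels rendered per frame at the resolutions mentioned in the thread.
RESOLUTIONS = {
    "2560x1600": (2560, 1600),
    "1920x1200": (1920, 1200),
    "1920x1080": (1920, 1080),
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height


baseline = pixel_count(*RESOLUTIONS["1920x1080"])

for name, (width, height) in RESOLUTIONS.items():
    pixels = pixel_count(width, height)
    print(f"{name}: {pixels:,} pixels ({pixels / baseline:.2f}x the 1080p load)")
```

A 2560x1600 frame has nearly twice as many pixels as a 1080p frame, which is why benchmark gaps measured at that resolution may not carry over to a 1080p target.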

[QUOTE="BlbecekBobecek"]Me too. Your point?

Zeviander

Pick one:

- I feel old
- Computing technology has reached astronomical performance in essentially two decades
- games have become more about raw graphics tech than using what limited resources a dev has to create a new idea or concept
- kids growing up in this century might not even know what a "floppy disc" is

........ Exactly this.. Killzone 4 is basically the posterchild of the same sh!t in a new wrapper..

1.84 TFLOPS good?

For a gizmo dedicated to nothing but games? Darn right it is....at least until 4k becomes the standard for games. That's pretty far off though.

Heck. my GPU is only 1.2 TFLOPS and it still does the job for me at 1080p. Of course, I haven't tried Crysis 3 yet. :lol:

[QUOTE="MK-Professor"]LOL?

a HD7870/4GB will play any multiplat with better graphics and performance than ps4, deal with it.

Not necessarily. And the 7870 doesn't have 4GB of RAM, at least not the ones I see.

tormentos

The HD7850 & HD7870 both have 2GB and 4GB versions, the HD7970 has 3GB and 6GB versions, and the HD7950 has only a 3GB version.

And the HD7870 will play multiplats with better graphics and performance than the PS4. It is just faster, no argument here.

seems emulatable though.

[QUOTE="BrunoBRS"][QUOTE="loosingENDS"]

The unified ram makes it totally different than PC architecture really

loosingENDS

How? On PC you have to account for the swapping of RAM to VRAM. If a game is based on the unified architecture and optimized for zero swaps, how do you emulate that fast RAM with no swaps?

Seems PS4 will be one of the hardest to emulate

I think the DX11 specification actually covers the no swap issue (not too sure), though regardless, I think it will be a very long time before one could emulate a PS4 due to the extremely high main RAM bandwidth in general. It will be years before we reach that kind of speed in PCs between main RAM and the CPU/APU. Like AMDs next gen PC APUs, the PS4's APU probably see the RAM as one large bank, there will be no "VRAM" or "SRAM" it's just one pool to do with as pleased. 7850-7870 like performance should not need much more than 4 GB total. I'm sure Sony will have the PS4's OS restrict game usage to 5.5 to 6 GB at most so there is plenty of memory left for all the background tasks Sony has planned.[QUOTE="MK-Professor"]no it is notThere are variables on this equation. The PS4 GPU is between a 7850 and 7870,the GPU it self is on the same die as the CPU,which on PC is not like that unless you are talking about an APU which has no gain paring with a dedicated GPU on PC,which means that the GPU on the PS4 by that alone is more efficient and less prone to latency,which hurt GPU allot on PC. Another thing is coders like the Guy from Nvidia who created an algorithm for AA say,can code to the Metal on PS4,on PC they have to through MS API which doesn't allow that and restrict what developer can do,this will enable coders to get more out of the PS4 GPU that they would from their PC counterpart. Another thing here is,if you remember well when the PS3 was launch,video cards coming out already had as much ram for video as the complete PS3 system had for all system and video,the same with the 360 and even more some shipped with 1GB far eclipsing what the PS3 and xbox 360 had for ram,this time is not the same,the PS4 GPU has 8GB of GDDR5,the very best top of the line GPU now the $1,000+ dollars Gforce Titan has less ram than the PS4,so does the 7990. 
Now i know the PS4 one is shared but the PS4 OS was say to take 512MB when the memory was rumored to be 4GB,i can see it need it more than 1GB,the PS4 is not running windows,which should ensure that at least on the textures side and things like that the PS4 will not suffer much for quite some time,unlike the PS4 and 360,in fact the 360 a few months after launch already had inferior textures to those found on PC. I am not saying the PS4 will beat a 7970 but it should not suffer as much as the 7850,and since the PS4 is not targeting 2560x1600 with 8XMSAA and 16XAF,and is just targeting 1080p the performance should increase. http://www.anandtech.com/bench/Product/549?vs=550 This should give you a nice idea,everything the 7950 is doing the 7850 does it as well just a tad slower on frames per second.. Look at Crysis Warhead on 2560x1600 just 9 frame difference. Look at Metro once again the same resolution,the difference 10 frames. As soon as the resolution hit 1920X1200 the 7850 get a boost in frames,and that still higher than 1080p.HD7950 & HD7970 are high end(for single gpu), and the HD7850 & HD7870 are mid range and also all these models are already one year old.

Essentially, when the PS4 is released it will have a GPU that was mid range two years before.

So at the end of the year new GPUs will be released, making the HD7850 completely low end.

tormentos

One variable you totally forgot... Sony is said to target only 14 of the 18 CUs for gaming, while the other 4 CUs will be used for the LCD controller, GPGPU workloads, physics etc. That means the GPU will normally never match a 7850's performance. 14 CUs = 1.4 TFLOPS. You also have the idea of the guy who made FXAA totally wrong: his statement was about improving texture fetching, not the whole GPU's performance. They cannot bypass the hardware's physical processing limitations. You also totally ignored basic computer functions. The PS4 will never use 6 or 8GB for video; you have to account for the OS, all the features, and the game data load, and only then the video load into memory.
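The "14 CUs = 1.4 TFLOPS" figure can be checked per compute unit. The sketch assumes 64 lanes per CU (which matches 1024 stream processors over 16 CUs in the HD7850 figures quoted earlier in the thread), 2 floating-point operations per lane per clock, and the 800MHz PS4 clock. The 14+4 split itself is only the rumor under discussion, not a confirmed design.

```python
LANES_PER_CU = 64             # assumed: 1024 stream processors / 16 CUs
FLOPS_PER_LANE_PER_CLOCK = 2  # assumed: one fused multiply-add per clock
PS4_CLOCK_MHZ = 800           # engine clock quoted in the thread


def cu_tflops(compute_units: int, clock_mhz: float = PS4_CLOCK_MHZ) -> float:
    """Theoretical peak TFLOPS contributed by a given number of compute units."""
    flops = compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * clock_mhz * 1e6
    return flops / 1e12


for label, cus in (("rumored graphics share", 14),
                   ("rumored compute share", 4),
                   ("all compute units", 18)):
    print(f"{label}: {cus} CUs -> {cu_tflops(cus):.2f} TFLOPS")
```

Under these assumptions 14 CUs give about 1.43 TFLOPS and all 18 give 1.84, so the disagreement in the thread is about how the CUs may be used, not about the arithmetic.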

Also, the PS4 OS and all the stated features will use a lot more than 512MB. You will be looking at 2GB for OS + features, 4GB for the game (no streaming data), and 2GB for video, since anything over 2GB is pointless at 1080p. Also, your 7850 vs 7950 example is off: you should only look at DirectX 11 based games, since that will be the new standard. The 7950 at 1080p and above is still much faster than the 7850. You should also look at the DiRT 3 minimum fps, which gives you the real difference in baseline performance. But let's not forget the 7950's boost clock performance, or the 7970, or CrossFire or SLI in the mix. By the end of the year the 7850 will be considered mid range at best. Heck, AMD APUs are going to get IGPs nearly as fast as a 7750.


NO. All 18 CUs are unified and can be used however developers want. If they want to use all of them for rendering, that will be the case, and if they want to use 2 for physics, just as well. The 14+4 rumor was debunked, so you can erase that variable. If you are going to quote me, at least read what I post. Don't tell me that I totally ignored the basics; just say you did not read my post or you are not following my argument. I already stated that the PS4 OS was said to be 512 MB when the memory configuration was 4 GB. When Sony decided to add another 4 GB, they did not say the OS would eat even more RAM, but if you read what I said, I already compensated by allowing 1 GB for the OS, which is a lot, since the PS4 is not running Windows 8. Also, I did not say it will bypass the GPU's limitations, but what about pushing it to its very limits? Efficiency on PC sucks, especially with latency and the huge, endless variety of configurations that can be made and that are bound by legacy. The 7850 doesn't reach 100% efficiency, not even close, due to the nature of PC GPUs never being fully exploited. Once again, stop trying to twist the words of an Nvidia guy who knows much more about this than you. Again, when was the last time you invented an AA algorithm on PC? You are pulling the 2 GB OS requirement out of your a$$. Link, or you're making sh** up. In fact, it was rumored by insiders that the OS was 512 MB last time, so how the hell did the OS go from 512 MB to 2 GB? Maybe you should look at what the Vita is doing with just 512 MB of RAM. The PS4 is not Windows 8 based or anything close, and even if the worst-case scenario was 2 GB for the OS, that still leaves 6 GB of GDDR5 for video, which is as much as the current $1000, newly released GeForce Titan.

In fact, many of the PS4's features are handled by separate chips: recording is done on one chip, and the OS is handled by a low-power, ARM-like CPU, so the features touch the OS and RAM as little as possible. Sony was pretty smart with the design; no wonder AnandTech, in its last podcast, called the PS4 the way PCs should follow. When people like John Carmack, who has been one of the fiercest critics of the PS3 hardware since even before it was released, come out and say that Sony made great engineering choices, it is because something was done well. Now, I am not saying the PS4 will beat the crap out of the 7970, but it should deliver impressive games on that hardware, just like the crappy RSX delivered games like Uncharted.

:lol: so many things wrong, go back to Sony's teat

tormentos
