@osan0 said:

@ronvalencia: any chance NVIDIA can also do something similar for Kepler (or find some way to get more performance from it)? Sadly, I have to admit that my laptop (780M) has been resolutely beaten by the PS4 in Doom 2016.

Anywho, on topic: this isn't news. We knew this already. It's an underclocked TX1 (very underclocked when undocked). The only question I have (and I'm guessing the answer is no, since there isn't even a whiff of news on it) is whether Nintendo has done anything to counteract the low memory bandwidth in the Switch, e.g. have they added eDRAM to the TX1 (maybe replacing the little cores with it)?

Sorry, Kepler is in legacy support. Read http://www.nvidia.com/page/legacy.html

My 980 Ti is not yet on the legacy list.

Pascal (not GP100) and Maxwell v2 have very similar designs.

TX1 actually has a newer SM unit design than desktop PC Pascal's SM units, i.e. TX1's SM unit has a double-rate FP16 feature, while the desktop PC variant has a broken 1/64-speed native FP16 feature. Don't worry, the FP32 units emulate FP16 at the same rate as FP32.

All NVIDIA Maxwell GPUs have tile-based rasterization and binning, which includes a small, very high-speed storage cache.

https://en.wikipedia.org/wiki/Tiled_rendering

- Nvidia GPUs based on the Maxwell architecture and later architectures

- Xbox 360 (2005): the GPU contains an embedded 10 MiB eDRAM; this is not sufficient to hold the raster for an entire 1280×720 image with 4× multisample anti-aliasing, so a tiling solution is superimposed when running in HD resolutions and 4× MSAA is enabled.

- Xbox One (2013): the GPU contains an embedded 32 MB eSRAM, which can be used to hold all or part of an image. It is not a tiled architecture, but is flexible enough that software developers can emulate tiled rendering.

Xbox One's GCN GPU is not a tiled hardware architecture, i.e. tiled rendering is emulated in software.
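To put numbers on the Xbox 360 bullet above, here is a rough back-of-the-envelope sketch. It assumes 32-bit colour plus 32-bit depth/stencil per sample, which is my assumption and not something the Wikipedia excerpt states; the function name is just for illustration.

```python
import math

EDRAM_BYTES = 10 * 1024 * 1024  # 10 MiB of eDRAM, as quoted above


def framebuffer_bytes(width, height, msaa_samples, color_bytes=4, depth_bytes=4):
    """Size of a colour + depth/stencil render target in bytes.

    The 4-byte colour and 4-byte depth/stencil defaults are assumptions.
    """
    return width * height * msaa_samples * (color_bytes + depth_bytes)


size_4x = framebuffer_bytes(1280, 720, 4)
tiles_needed = math.ceil(size_4x / EDRAM_BYTES)

print(f"720p 4x MSAA: {size_4x / 2**20:.3f} MiB, needs {tiles_needed} eDRAM-sized tiles")
print(f"720p no MSAA fits: {framebuffer_bytes(1280, 720, 1) <= EDRAM_BYTES}")
```

Under those assumptions a 720p 4× MSAA target is about 28 MiB, so it has to be split into three passes, while the same target without MSAA fits in the 10 MiB in one go.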

https://github.com/nlguillemot/trianglebin/releases

Test tool for tiling vs. non-tiling. It works on Windows 10.

NVIDIA's tiled rendering is known as tile-based immediate-mode rendering, which is backward compatible with traditional non-tiled immediate-mode rendering.

NVIDIA Maxwell automatically adjusts tile size based on data size and complexity (watch the YouTube video). On Xbox One, this is a manual process.
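For anyone unfamiliar with what "binning" means here, a minimal sketch of the general idea follows. This is not NVIDIA's implementation (which is undocumented); it uses a fixed tile size and a simple bounding-box overlap test, and every name in it is hypothetical.

```python
from collections import defaultdict


def bin_triangles(triangles, tile_size, width, height):
    """Map each screen tile (tx, ty) to the indices of the triangles
    whose bounding box overlaps that tile."""
    bins = defaultdict(list)
    max_tx = (width - 1) // tile_size
    max_ty = (height - 1) // tile_size
    for index, tri in enumerate(triangles):
        xs = [x for x, _ in tri]
        ys = [y for _, y in tri]
        # Convert the bounding box to tile coordinates, clamped to the screen.
        tx0 = max(0, int(min(xs)) // tile_size)
        tx1 = min(max_tx, int(max(xs)) // tile_size)
        ty0 = max(0, int(min(ys)) // tile_size)
        ty1 = min(max_ty, int(max(ys)) // tile_size)
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(index)
    return dict(bins)


triangles = [
    [(10, 10), (50, 10), (10, 50)],         # small, sits inside one tile
    [(100, 100), (200, 120), (150, 220)],   # larger, spans several tiles
]
bins = bin_triangles(triangles, tile_size=64, width=256, height=256)
for tile in sorted(bins):
    print(tile, bins[tile])
```

Once triangles are grouped per tile, each tile's pixels can be worked on in a small on-chip buffer before anything is written out to VRAM, which is where the bandwidth saving comes from.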

From http://www.anandtech.com/show/11002/the-amd-vega-gpu-architecture-teaser/3

ROPs & Rasterizers: Binning for the Win(ning)

We’ll suitably round-out our overview of AMD’s Vega teaser with a look at the front and back-ends of the GPU architecture. While AMD has clearly put quite a bit of effort into the shader core, shader engines, and memory, they have not ignored the rasterizers at the front-end or the ROPs at the back-end. In fact this could be one of the most important changes to the architecture from an efficiency standpoint.

Back in August, our pal David Kanter discovered one of the important ingredients of the secret sauce that is NVIDIA’s efficiency optimizations. As it turns out, NVIDIA has been doing tile based rasterization and binning since Maxwell, and that this was likely one of the big reasons Maxwell’s efficiency increased by so much. Though NVIDIA still refuses to comment on the matter, from what we can ascertain, breaking up a scene into tiles has allowed NVIDIA to keep a lot more traffic on-chip, which saves memory bandwidth, but also cuts down on very expensive accesses to VRAM.

For Vega, AMD will be doing something similar. The architecture will add support for what AMD calls the Draw Stream Binning Rasterizer, which true to its name, will give Vega the ability to bin polygons by tile. By doing so, AMD will cut down on the amount of memory accesses by working with smaller tiles that can stay on-chip. This will also allow AMD to do a better job of culling hidden pixels, keeping them from making it to the pixel shaders and consuming resources there.

As we have almost no detail on how AMD or NVIDIA are doing tiling and binning, it’s impossible to say with any degree of certainty just how close their implementations are, so I’ll refrain from any speculation on which might be better. But I’m not going to be too surprised if in the future we find out both implementations are quite similar. The important thing to take away from this right now is that AMD is following a very similar path to where we think NVIDIA captured some of their greatest efficiency gains on Maxwell, and that in turn bodes well for Vega.

Meanwhile, on the ROP side of matters, besides baking in the necessary support for the aforementioned binning technology, AMD is also making one other change to cut down on the amount of data that has to go off-chip to VRAM. AMD has significantly reworked how the ROPs (or as they like to call them, the Render Back-Ends) interact with their L2 cache. Starting with Vega, the ROPs are now clients of the L2 cache rather than the memory controller, allowing them to better and more directly use the relatively spacious L2 cache.

---------

When combined with superior delta memory compression and the above tile cache rasterization feature, the GTX 1070 (6.4 TFLOPS) was able to jump ahead of the RX-480, even though both the RX-480 and the GTX 1070 have the same physical memory bandwidth (256-bit GDDR5-8000).

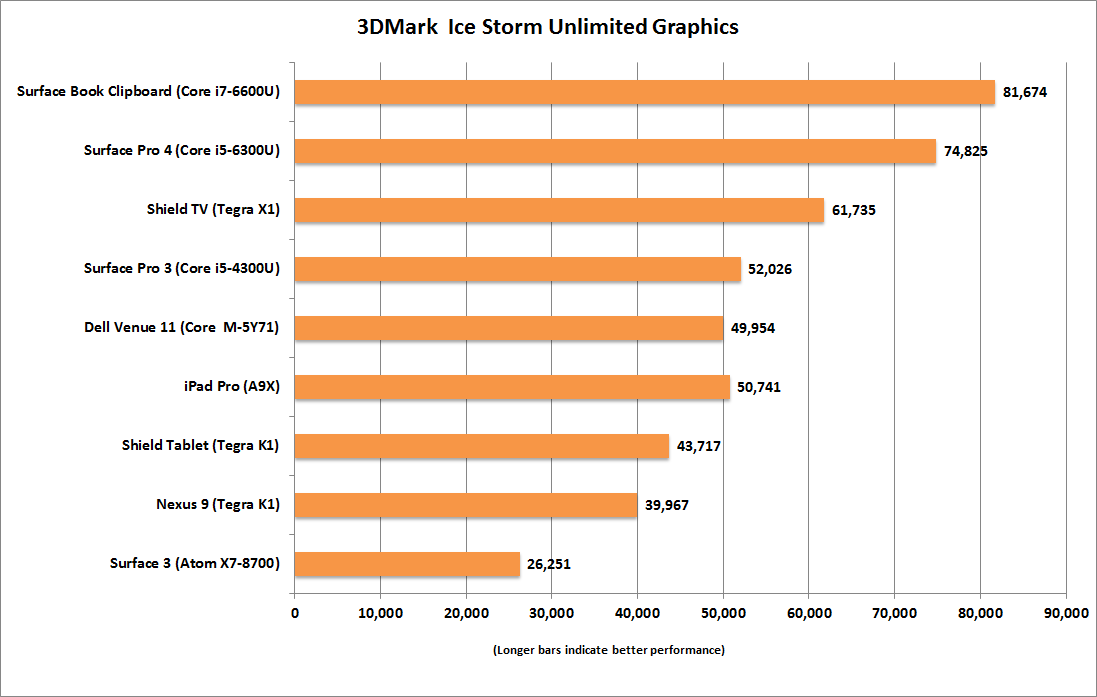
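As a quick check on the "same physical memory bandwidth" point, here is the usual peak-bandwidth arithmetic. It assumes "GDDR5-8000" means an effective 8 Gbps per pin, which is my reading of the figure; the function name is just for illustration.

```python
def peak_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: memory bus width in bits
    data_rate_gbps: effective data rate per pin in Gbit/s (assumed 8.0 here)
    """
    return bus_width_bits / 8 * data_rate_gbps


# Same bus width and data rate for both cards, so the same peak figure.
print(peak_bandwidth_gb_s(256, 8.0))  # 256.0
```

So on paper both cards sit at 256 GB/s, and any difference in effective bandwidth has to come from compression and how much traffic stays on-chip.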

The problem for Shield TV: the Xbox 360 also uses tile-based rasterization, which is very efficient.

The key design point is that both the ROPs and the shader ALUs are linked to small, high-speed memory.

Xbox 360's ROPs are located next to the eDRAM, and there's a 30 GB/s link to the GPU's shader ALUs, so there's a bottleneck between the eDRAM and the GPU's shader ALUs.

Xbox One's eSRAM can only write at a 109 GB/s rate, which is not fast for tile rendering, i.e. it doesn't have the same memory bandwidth as an L2 cache.
